Abstract

Sports analytics (SA) incorporates machine learning (ML) techniques and models for performance prediction. Researchers have previously evaluated ML models applied to a variety of basketball statistics. This paper aims to benchmark the forecasting performance of 14 ML models on 18 advanced basketball statistics and key performance indicators (KPIs). The models were applied to a filtered pool of 90 high-performance players. This study developed individual forecasting scenarios per player and experimented with all 14 models. The models' performance ranking was developed using a bespoke evaluation metric, called weighted average percentage error (WAPE), computed as a weighted average of the mean absolute percentage error (MAPE) results for each forecasted statistic and model. Moreover, we employed a comprehensive forecasting approach to improve KPI results. Results showed that tree-based models, namely Extra Trees, Random Forest, and Decision Tree, are the best performers for most of the forecasted performance indicators, with the best performance achieved by Extra Trees with a WAPE of 34.14%. Finally, our approach achieved a 3.6% MAPE improvement for the selected KPI on unseen data.


1 Introduction

Sports analytics (SA) is a vast and developing domain, significant for organizations, teams, and players. Many researchers are developing ideas to provide valuable insights, which can relate to performance evaluation, injury prevention, performance forecasting, or decision-making on tactics and strategies [1]. Because basketball generates a plethora of statistics, machine learning (ML) and data mining (DM) applications to the sport are becoming popular in the data science (DS) research community, with ongoing research and development that applies and improves these ideas on real cases in the NBA and other leagues. However, such research requires valid data, collected via various techniques and media (cameras, sensors), to achieve improvements in the SA domain [2].

Many ML and DM techniques can be applied to sports, and to basketball in particular. With the advanced statistics that many basketball leagues offer, there is room for improvement in ML and DM applications in SA. Comprehensive analysis and performance prediction are of great interest to the most prominent clubs, which invest in creating DS and SA departments to extract insights [3].

Researchers have developed statistics that provide a clear view of a player's performance throughout a game; some of the crucial key performance indicators (KPIs) are efficiency (EFF), game score (GMSC), player impact estimate (PIE), player efficiency rating (PER), tendex (TENDEX), fantasy points (FP), Four Factors (FOUR FACTORS), and Usage Rate [4]. Based on these, teams, technical staff, and organisations rank and evaluate player performance. These metrics are also formulated to incorporate defensive and teamwork statistics, so the analysis of a player or a game is now more straightforward and clearer for the people who need to make decisions [5].

This research aims to provide a clear overview of ML models' performance on 18 different advanced basketball metrics and KPIs, based on case studies of 90 high-performance players, for basketball player performance forecasting (BPPF). Fourteen models are evaluated for each player and each of his targeted advanced metrics. The models used are AdaBoost (AB), K-nearest neighbors (KNN), decision trees (DTs), extra trees (ET), light gradient boosting machine (LGBM), elastic net (EN), random forest (RF), gradient boosting machine (GBM), passive aggressive (PA) regressor, Bayesian ridge (BR), least angle regression (LARS), ridge regression (RR), Huber regression (HR) and least absolute shrinkage and selection operator (LASSO).

To achieve this, we followed an original approach that combines 381 game-lag features with ML regression models to predict the upcoming performance of each of the 90 high-performance players in the filtered pool, using scraped data characterised as advanced statistics (Base, Advanced, Miscellaneous, Four Factors, Scoring, Opponents, Usage) related to players and teams from season 2019–20 up to season 2021–22. The selected players are filtered from all active NBA players by their GameScore (GMSC), FOUR FACTORS, TENDEX, FP and Efficiency (EFF) averages and by their participation time. Players who did not participate in at least 150 games during the last three seasons (2019–20, 2020–21, 2021–22) and in at least 30 games during the last season (2021–22) are excluded, as are players whose average participation time over those three seasons is below twenty minutes. In addition, any player whose averages for the aforementioned KPIs are below the league average is excluded.

An additional aim of this study was to rank basketball forecasting models not only on their forecasting performance but also on how feasible it is to produce individual predictions for each targeted advanced basketball statistic and for overall performance. To achieve this goal, we employed a comprehensive forecasting approach, which involved analyzing different prediction options and presenting an overview of the predictions that can be improved. The methodology for this study included two experiments. The first experiment focused on forecasting Fantasy Points (FP) as a single metric, while the second experiment predicted the individual performance metrics used to formulate FP, namely Points (PTS), Rebounds (REB), Assists (AST), Steals (STL), Blocks (BLK), and Turnovers (TOV). These individual predictions were then combined through the FP formula to obtain the forecasted FP. The results of both experiments were compared to assess the effectiveness of the two processes. The study found that by expanding the forecasting options and using a comprehensive forecasting approach, predictions of KPIs can be significantly improved.

This research makes a significant contribution to ML applied to sports by evaluating the forecasting abilities of various ML models in predicting basketball player performance. The study focuses on a set of 18 advanced basketball statistics and KPIs and applies 14 ML models to a group of 90 high-performance basketball players. The main objective of this investigation is to identify the best ML models for the individualized prediction of advanced basketball statistics and to evaluate their overall effectiveness in forecasting basketball player performance, taking into account the complexity and dynamism of that performance.

The research is distinctive in its approach: it develops individual forecasting scenarios for each player and uses a bespoke evaluation metric, weighted average percentage error (WAPE), to evaluate the accuracy of the predictions. This metric weights the mean absolute percentage error (MAPE) of each predicted statistic and model, providing a detailed comparison of different ML models.
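For reference, the standard MAPE of model $m$ on statistic $s$ over $n$ test games, and one possible reading of WAPE as a weighted average of those values across the $S = 18$ statistics, can be written as below. The weights $w_s$ and this exact aggregation are assumptions, since the weighting scheme is not specified in this section. MAPE is undefined whenever an actual value $y_{s,t}$ is zero, which can occur for low-count statistics such as STL or BLK.

```latex
\mathrm{MAPE}_{m,s} = \frac{100\%}{n}\sum_{t=1}^{n}\left|\frac{y_{s,t}-\hat{y}^{(m)}_{s,t}}{y_{s,t}}\right|,
\qquad
\mathrm{WAPE}_{m} = \frac{\sum_{s=1}^{S} w_s\,\mathrm{MAPE}_{m,s}}{\sum_{s=1}^{S} w_s}
```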

By leveraging the latest three seasons of NBA advanced box-score statistics and applying extensive data preprocessing and feature engineering, the study not only evaluates the performance of ML models but also introduces an innovative, comprehensive approach to improving KPI forecasting results. This approach predicts the individual statistics that contribute to a KPI and then computes the KPI from these forecasts, which resulted in a significant improvement in the accuracy of future predictions.

The research findings have consequential implications for the sports analytics industry, as they offer valuable insights for researchers, coaches, data scientists, and stakeholders. The study sets a new benchmark in predicting player performance, combining sophisticated statistical techniques with practical usefulness in the competitive world of professional basketball.

2 Background

With the constant improvement of ML and DM applications, many industries are turning to DS and DM for evaluation, improvement, forecasting, and optimisation. SA is now an excellent tool that organisations and professional teams use to inform decision-making and plan their strategies. The use of ML and DM, especially in basketball, has proved beneficial so far [6]. Moreover, as professional leagues make data available, there is plenty of room for improvement and testing. Such applications include overall player performance evaluation and prediction, injury prediction, play-style strategies and line-up combinations. In recent years, researchers have sought the best results through innovation and have approached each case differently.

2.1 Basketball players’ performance prediction literature overview

All major sports organizations and professional teams use SA to assemble their teams, improve each player's performance, and pinpoint problems that are difficult for coaches and staff to detect. SA is a constantly evolving domain, and technological advancements have made it both feasible and essential for coaches, staff, and their teams [7]. Basing decisions on SA and predictive analytics gives teams and organizations confidence that their actions rest on valid data. Furthermore, predictive analytics built with ML and DM techniques allow researchers to extend their SA experiments, propose new approaches, and evaluate their findings [8].

Researchers in [9] were the first to forecast NBA players' performance using sparse functional data, providing a method that is competitive with traditional approaches. In [10], a network combining ML and graph theory was developed to predict the performance of an NBA line-up at any time, based on a proposed metric called the Inverse Square Metric; an edge-centric method achieved 80% average accuracy, and the graph-theory approach improved performance prediction results by 10% compared with baseline methods. Additionally, researchers in [11] sought to determine the key factors and statistics that lead a team to win a game. Their case study of the Golden State Warriors concluded that winning depended first on shooting and then on defensive rebounds and opponent turnovers. Furthermore, the study [12] uses a graph-theory-based neural network model for injury prediction.

In contrast, the study [13] assessed basketball players in terms of versatility versus specialisation, finding that when only the best players are considered, versatility is more common than specialisation. In addition, researchers in [14] correlated NBA players' performance with their personality traits. Comparing All-Star players with the rest of the league, they concluded that conscientiousness and agreeableness showed the largest significant positive differences. Using a different approach, data envelopment analysis (DEA), the researchers in [15] investigated the relationship between winning probabilities and game outcomes for NBA teams, concluding that the DEA-based approach successfully predicts team performance.

The researchers in [4] correctly predicted the NBA MVP for the 2017–18, 2018–19, and 2019–20 seasons. In addition, based on verified data from these seasons, they forecasted the best defender for each of them. Each season's dataset comprised 82 game events per forecast scenario, split into four groups (Q1–Q4). They selected a pool of twenty NBA players filtered by the number of games (at least thirty per season) and participation time (at least fifteen minutes per game event). Through extended analysis, they created two metrics, the Aggregated Performance Indicator (API) and the Defensive Performance Indicator (DPI). API, constructed from advanced statistics that describe a player's general performance, allowed them to predict the NBA MVP for seasons 2017–18 to 2019–20, while DPI, a composite of advanced variables focused on a player's defensive contribution, allowed them to predict the best defender for the same seasons.

The study in [16] presents an approach to determining the critical factors on which each NBA player's shooting accuracy depends. The researchers experimented with seven different models based on ten shooting-related statistics to predict whether a player would make a shot. Their results show that shot distance, the distance to the closest defender, and touch time are the three most crucial variables affecting field goal success, and that KNN performed best, with 67.6% classification accuracy.

Furthermore, the researchers in [17] also implemented ML models to predict the potential shooting accuracy of NBA players, arguing that one must focus on the variables on which this metric depends in order to identify a key performance metric such as successful shooting points. For this reason, they classified each player's shooting efficiency from various ranges and against frequently employed defensive tactics. They used eXtreme Gradient Boosting (XGBoost) and RF, finding that XGBoost was the best choice, scoring 68% accuracy with parameter tuning and 60% without tuning. However, they stated that RF is also a good choice, scoring 57% in their experiment.

Considering basketball players' performance evaluation, the study in [18] employed two methods to determine the crucial variables for each playing position and to construct an alternative performance evaluation system similar to the Performance Index Rating (PIR). First, they clustered the players by position using data from the 2017–18 Euroleague. Second, DT and one-way ANOVA tests were used to determine the critical variables for each position, and TOPSIS results were compared with PIR to index players in a ranking system. They concluded that this alternative approach makes it possible to determine player performance.

The authors in [19] identified whether a player would become an All-Star after the end of each NBA regular season, based on his advanced box score statistics; they also aimed to identify the most important characteristics that make a player an All-Star. They began by employing an RF model for classification on data from seasons 1936–37 up to 2010–11. They succeeded in creating an ML model that classifies with an accuracy of 92.5% and built an application with Apache Spark to simplify the process. They concluded that, even though the selection of players for the NBA All-Star game depends purely on votes, their approach can predict potential NBA All-Star players.

Since previous work on performance prediction in past years focused mainly on NCAAB, the study [20] investigated whether NCAAB data could be used for ML and DM performance prediction in the NBA, or vice versa. Throughout their research, they compared several representations, training settings, and classifiers on NCAAB and NBA data. Additionally, they used three different metrics to evaluate and predict team performance, including adjusted EFF and adjusted FOUR FACTORS. They found that adjusted efficiencies work well for the NBA, and that for predicting the NCAAB post-season, training on the regular season is not the best choice. They also stated that predicting team performance as accurately as possible requires different classifiers, with different biases, for each league. Finally, they concluded that naïve Bayes is the best classifier for predicting the outcome of NBA playoff series.

Player performance predictions can be based on different metrics and KPIs; one that also has many applications in the betting domain is FP. This KPI is built from advanced box score statistics and gives an overview of the attacking, defensive and teamwork performance of each player in a game. In recent years, many researchers have tried to predict players' performance through FP and, in many cases, have used their findings or fantasy tournament case studies for betting applications [21]. The researchers in [22] tried to predict potential FP and developed a system capable of predicting the best combination of players for Daily Fantasy line-ups. First, they used a Bayesian random-effects model on data from seasons 2013–14 to 2015–16, on which they conducted their experiments. They then compared two methods of constructing the forecasted line-up, a Bayesian random-effects model and a KNN model. Finally, they concluded that both approaches were successful, with KNN ranking first in generating profits in fantasy tournaments.

The study in [23] tried to predict the final score of NBA games using data from the 2017–18 season. They experimented with a hybrid data-mining-based scheme using five data mining models, including Extreme Learning Machine (ELM), Multivariate Adaptive Regression Splines (MARS), XGBoost and a KNN approach, together with game-lag features. The empirical results showed that the XGBoost model achieved the best performance with a game-lag of 4. They also presented the most critical statistical features for their forecast. Aiming for the same goal, the researchers in [24] proposed a new intelligent ML framework to predict the results of NBA games and also examined the key factors and statistics critical to their forecast. Using Naïve Bayes, Artificial Neural Networks (ANNs), and DT, they identified defensive rebounds as one of the most essential features, and concluded that with proper feature selection, the models' performance increased by 2% to 4%.

The authors of [25] experimented with data mining methods to predict NBA GMSC. They applied five well-known data mining methods, multivariate adaptive regression splines (MARS), KNN, extreme learning machine (ELM), extreme gradient boosting (XGBoost) and stochastic gradient boosting (SGB), and concluded their research by creating a successful GMSC prediction model. In [26], the authors tried to predict the outcome of NBA playoffs with a scheme based on k-means clustering and the maximum entropy principle.

3 Methodology

This section outlines the methodology followed. The data were scraped from the official NBA website [27] for the 2019–20, 2020–21, and 2021–22 seasons. They include many evaluation and performance statistics, namely the players' and teams' box scores for each game, covering attack, defence, teamwork and advanced KPIs that give an overview of each player's overall performance [28]. Next, cleansing, transformations and the essential pre-processing are performed on both the player and team box scores for each recorded game, which are then merged before feature engineering. In the next stage, 1-, 3-, 5-, 7- and 10-game-lag features are created from the base data for ML regression forecasting. In the forecasting phase, 18 advanced basketball performance statistics and KPIs are forecast with 14 types of ML models: AB, KNN, DT, ET, LGBM, EN, RF, GBM, PA, BR, LARS, HR, RR and LASSO.

Furthermore, in each case study, i.e., for each player in the selected pool, 18 advanced statistics and KPIs are forecast and evaluated with each of the 14 ML models. As mentioned earlier, the goal was to create a performance ranking table for the trained models to assess which model or type of model performs best at forecasting each statistic and KPI that describes player performance [28]. The ranking table is based on MAPE results per model and metric and introduces the WAPE metric. This key indicator is analysed in the following sections.
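A ranking table of this kind can be sketched with pandas as follows. This is a minimal illustration, assuming a long-format table of test-set MAPE values (one row per player, statistic and model) and equal statistic weights by default; the column names and weights are hypothetical, and this is one plausible reading of WAPE rather than the study's exact formula.

```python
from typing import Dict, Optional

import pandas as pd


def wape_ranking(results: pd.DataFrame, weights: Optional[Dict[str, float]] = None) -> pd.DataFrame:
    """Rank models by a weighted average of their per-statistic MAPE (hypothetical WAPE reading).

    `results` needs the columns 'player', 'statistic', 'model' and 'mape' (in %).
    `weights` maps statistic -> weight; equal weights are assumed when omitted.
    """
    # Average each model's MAPE over all players, separately for each statistic.
    per_stat = results.groupby(["model", "statistic"], as_index=False)["mape"].mean()
    if weights is None:
        weights = {s: 1.0 for s in per_stat["statistic"].unique()}
    per_stat["w"] = per_stat["statistic"].map(weights)
    per_stat["w_mape"] = per_stat["w"] * per_stat["mape"]
    # Weighted average across statistics for each model, lowest error first.
    totals = per_stat.groupby("model")[["w_mape", "w"]].sum()
    table = (totals["w_mape"] / totals["w"]).rename("WAPE").to_frame()
    table = table.sort_values("WAPE")
    table["rank"] = range(1, len(table) + 1)
    return table


# Illustrative values only, not results from the study.
demo = pd.DataFrame({
    "player": ["A", "A", "A", "A", "B", "B", "B", "B"],
    "statistic": ["PTS", "PTS", "AST", "AST"] * 2,
    "model": ["et", "lasso"] * 4,
    "mape": [20.0, 30.0, 40.0, 50.0, 22.0, 28.0, 38.0, 52.0],
})
ranking = wape_ranking(demo)
print(ranking)  # et -> WAPE 30.0 (rank 1), lasso -> WAPE 40.0 (rank 2)
```

Averaging over players before weighting across statistics keeps each statistic's contribution independent of how many players were evaluated on it.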

Finally, the last experiment is conducted to improve KPI results. We introduce a selective, multi-model comprehensive approach for calculating the average KPI performance prediction evaluation scores. The KPI for the last experiment is Fantasy Points (FP). Its formula is constructed from different player statistics and evaluates a player's overall performance from different perspectives. In the last experiment, each of these statistics is forecast individually, and the forecasts are combined through the FP formula. The summarized workflow of the methodology is illustrated in Fig. 1, which outlines the progression from data collection to the final stages of forecasting and evaluation.
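The composite route can be sketched in a few lines: forecast each component statistic, combine the forecasts through the FP formula, and score both the direct and the composite FP forecasts with MAPE. The FP weights below are the commonly used NBA.com-style fantasy scoring (1 per point, 1.2 per rebound, 1.5 per assist, 3 per steal or block, -1 per turnover); they are an assumption, since the exact FP formula used in the study is not given in this section, and all numbers are illustrative.

```python
import numpy as np

# ASSUMPTION: NBA.com-style fantasy weights; the study's exact FP formula is not stated here.
FP_WEIGHTS = {"PTS": 1.0, "REB": 1.2, "AST": 1.5, "STL": 3.0, "BLK": 3.0, "TOV": -1.0}


def fantasy_points(stats):
    """Combine per-statistic values (scalars or arrays) into FP via the weighted sum."""
    return sum(w * np.asarray(stats[name], dtype=float) for name, w in FP_WEIGHTS.items())


def mape(actual, predicted):
    """Mean absolute percentage error in %, assuming no actual value is zero."""
    actual = np.asarray(actual, dtype=float)
    predicted = np.asarray(predicted, dtype=float)
    return float(np.mean(np.abs((actual - predicted) / actual)) * 100)


# Hypothetical component forecasts for two upcoming games of one player.
component_forecasts = {
    "PTS": [25.0, 18.0], "REB": [8.0, 6.0], "AST": [6.0, 4.0],
    "STL": [1.0, 2.0], "BLK": [1.0, 0.0], "TOV": [3.0, 2.0],
}
fp_composite = fantasy_points(component_forecasts)   # FP built from component forecasts
fp_direct = np.array([44.0, 33.0])                    # hypothetical direct FP forecasts
actual_fp = np.array([50.0, 40.0])                    # hypothetical observed FP

print("direct MAPE:", mape(actual_fp, fp_direct))
print("composite MAPE:", mape(actual_fp, fp_composite))
```

Because FP is linear in its components, the composite forecast inherits each component model's errors scaled by the corresponding weight, which is why better component models can translate into a better FP forecast.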

3.1 Research questions (RQs)

1. Which ML model is best for individually predicting advanced basketball statistics? (RQ1)
2. Which ML models perform best in basketball player performance forecasting? (RQ2)
3. How can basketball player performance forecasting be improved using a comprehensive forecasting approach for KPIs? (RQ3)

These questions are essential for organisations, teams and especially SA and DS departments, which provide executive advice for player decision-making and improvement at multiple levels. In addition, they give a clear view of the critical contribution of KPIs in SA and of how KPIs can be used for prediction and for evaluating each athlete's existing or potential performance [29].

3.2 Aim and objectives

This study aims to accurately predict the key indicators that describe an NBA player's performance, and to identify and benchmark the forecasting performance of the available ML models for each key metric and KPI across 90 high-performance player cases. The result is an accurate and validated model performance ranking table, based on 90 forecast case studies, that shows which type of ML model is best suited to SA performance forecasting under the methodology followed.

Additionally, based on the model performance ranking table, a forecasting approach for the selected KPI, FP, is constructed. Since FP is built from players' key metrics related to attack, defence, and teamwork, it is one of the preferred KPIs for describing and evaluating each player's performance. For this reason, a comprehensive multi-model approach, guided by the performance ranking, is followed to forecast FP for each of the 90 selected players, and the results are assessed as an average over the whole pool.

3.3 Data acquisition & pre-processing

The official NBA website offers plenty of statistics and information, including box scores for players and teams [27]. The retrieved data cover the regular seasons and playoffs from 2019–20 up to 2021–22, in order to capture the latest trends in players' and teams' performance. The scraped data are advanced statistics of different types: base, advanced, miscellaneous and scoring data for all players who participated in those seasons, and base, advanced, miscellaneous, scoring, four factors and opponents data for all NBA teams. Based on performance and participation criteria, the study focused only on high-performance players during pre-processing and cleaning. Long-term injured players, rookies and players who no longer participate in the NBA are excluded. Filtering began by excluding the players whose averages for the selected KPIs (GMSC, FOUR FACTORS, TENDEX, FP and EFF) over the three seasons were below the league average. We also excluded the players who did not participate in at least one hundred fifty games in the last three seasons (2019–20, 2020–21, 2021–22) and in at least thirty games in the most recent season (2021–22). Additionally, players with less than twenty minutes of average participation time over the last three seasons were excluded.
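The participation and KPI criteria above can be expressed as a pandas filter. This is a minimal sketch, assuming one row per player appearance with hypothetical columns PLAYER_ID, SEASON, MIN and one column per KPI; it also assumes that the league average is the mean over all appearances in the data and that a player is excluded if any KPI average falls below it. The demo data are synthetic.

```python
import pandas as pd

KPIS = ("GMSC", "FOUR_FACTORS", "TENDEX", "FP", "EFF")  # hypothetical column names


def select_high_performance(games, kpis=KPIS, last_season="2021-22",
                            min_games=150, min_games_last=30, min_avg_minutes=20.0):
    """Return the PLAYER_IDs that satisfy the participation and KPI criteria."""
    per_player = games.groupby("PLAYER_ID").agg(
        n_games=("SEASON", "count"),
        avg_min=("MIN", "mean"),
        **{k: (k, "mean") for k in kpis},
    )
    last = games[games["SEASON"] == last_season].groupby("PLAYER_ID").size()
    per_player["n_games_last"] = last.reindex(per_player.index, fill_value=0)
    # ASSUMPTION: league average = mean over every appearance in the data.
    league_avg = games[list(kpis)].mean()
    keep = (
        (per_player["n_games"] >= min_games)
        & (per_player["n_games_last"] >= min_games_last)
        & (per_player["avg_min"] >= min_avg_minutes)
    )
    for k in kpis:
        keep &= per_player[k] >= league_avg[k]
    return per_player[keep].index.tolist()


def make_player(pid, games_per_season, minutes, kpi_value):
    """Build hypothetical appearances for one player with constant stats."""
    seasons = [s for s, n in games_per_season.items() for _ in range(n)]
    data = {"PLAYER_ID": pid, "SEASON": seasons, "MIN": minutes}
    data.update({k: kpi_value for k in KPIS})
    return pd.DataFrame(data)


three_seasons = {"2019-20": 60, "2020-21": 60, "2021-22": 40}
demo = pd.concat([
    make_player(1, three_seasons, 30.0, 20.0),     # meets every criterion
    make_player(2, {"2021-22": 100}, 30.0, 20.0),  # too few games overall
    make_player(3, three_seasons, 30.0, 5.0),      # KPI averages below league average
], ignore_index=True)
selected = select_high_performance(demo)
print(selected)  # [1]
```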

In the pre-processing phase, each player's dataset is merged with the corresponding team data, creating new features about per-game opponent performance. Furthermore, statistics about final rankings are excluded because they cannot provide any information about a player's potential performance in each upcoming game. The resulting 90 datasets included 197 features and statistics related to attack, defence, and teamwork, referred to as basic, advanced, and informative KPIs.
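Merging each player's box score with his own team's and the opponent's box score for the same game can be sketched as a self-join of the team table on the game identifier. The column names GAME_ID and TEAM_ID and the TEAM_/OPP_ prefixes are hypothetical, and the toy rows are illustrative.

```python
import pandas as pd


def attach_team_and_opponent(player_games, team_games):
    """Add same-game team stats (TEAM_*) and opponent stats (OPP_*) to each player row.

    Both frames are assumed to share hypothetical GAME_ID and TEAM_ID columns.
    """
    stat_cols = [c for c in team_games.columns if c not in ("GAME_ID", "TEAM_ID")]
    team = team_games.rename(columns={c: f"TEAM_{c}" for c in stat_cols})
    opp = team_games.rename(columns={"TEAM_ID": "OPP_TEAM_ID", **{c: f"OPP_{c}" for c in stat_cols}})
    # Pair every team row with the other team that played in the same game.
    pairs = team.merge(opp, on="GAME_ID")
    pairs = pairs[pairs["TEAM_ID"] != pairs["OPP_TEAM_ID"]]
    return player_games.merge(pairs, on=["GAME_ID", "TEAM_ID"], how="left")


team_games = pd.DataFrame({"GAME_ID": [10, 10], "TEAM_ID": [1, 2], "PTS": [110, 100]})
player_games = pd.DataFrame({"GAME_ID": [10], "TEAM_ID": [1], "PLAYER_ID": [7], "PTS": [30]})
merged = attach_team_and_opponent(player_games, team_games)
print(merged[["PLAYER_ID", "PTS", "TEAM_PTS", "OPP_TEAM_ID", "OPP_PTS"]])
```

Prefixing the team columns keeps the player's own statistics (here PTS) distinct from the team and opponent totals after the merge.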

3.4 Feature engineering

This study uses game-lag features in feature engineering, creating 1-, 3-, 5-, 7- and 10-game-lag features. These game-lag features are designed as averages of each player's previous performance in each statistic, except for the categorical features, which are calculated as sums. All primary features were transformed into game-lag features, but the averages are applied only to the following advanced metrics [30]: Plus-Minus (PM), FOUR FACTORS, Net Rating (NETRTG), EFF, TENDEX, GMSC, PIE, Effective Field Goal Percentage (EFG%), FP, Usage Percentage (USG%), Assist to Turnover ratio (AST/TO), PTS, REB, AST, Assist Ratio (AST RATIO), STL, BLK and TOV. Additionally, 3-game-lag sums are created and used for the categorical features: Win/Loss, Double-Doubles, Triple-Doubles and minutes of participation time. Lastly, to exclude games in which players were injured and their participation time was limited, which would cause outliers in the dataset, appearances with under twelve minutes of participation time are excluded. However, their historical information is retained in the game-lag features. After feature engineering, each of the 90 datasets contained 398 features; the dataset structures are presented in Table 1.
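The game-lag construction can be sketched with pandas rolling windows on one player's chronologically sorted games. This is an illustrative sketch, assuming hypothetical column names (GAME_DATE, MIN, PTS, WIN): averages over the previous k games for the metrics, a 3-game sum for categorical features, and removal of short appearances only after the lags are built, so those games still feed later history.

```python
import pandas as pd


def add_game_lag_features(games, avg_cols, sum_cols, lags=(1, 3, 5, 7, 10),
                          sum_window=3, min_minutes=12):
    """Build rolling game-lag features for ONE player's games (hypothetical column names)."""
    games = games.sort_values("GAME_DATE").copy()
    # shift(1) makes each row see only games played before it, avoiding target leakage.
    past = games[avg_cols + sum_cols].shift(1)
    for k in lags:
        rolled = past[avg_cols].rolling(k, min_periods=k).mean()
        for c in avg_cols:
            games[f"{c}_AVG_LAG{k}"] = rolled[c]
    summed = past[sum_cols].rolling(sum_window, min_periods=sum_window).sum()
    for c in sum_cols:
        games[f"{c}_SUM_LAG{sum_window}"] = summed[c]
    # Drop short appearances only now, so they still contribute to later lag values.
    return games[games["MIN"] >= min_minutes]


games = pd.DataFrame({
    "GAME_DATE": pd.date_range("2022-01-01", periods=5),
    "PTS": [10.0, 20.0, 30.0, 40.0, 50.0],
    "WIN": [1, 0, 1, 1, 0],
    "MIN": [30, 30, 5, 30, 30],  # third game is a short appearance
})
out = add_game_lag_features(games, ["PTS"], ["WIN"], lags=(1, 3))
print(out[["GAME_DATE", "PTS_AVG_LAG1", "PTS_AVG_LAG3", "WIN_SUM_LAG3"]])
```

In the demo, the fourth game's 3-game average (20.0) still includes the 30 points from the excluded short appearance, mirroring the retained history described above.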

Before starting each of the 90 case studies, each target variable is transformed with the Yeo-Johnson method. This method was selected because basketball performance can be influenced by various factors, such as how many minutes the coach decides the player will play in the game. These factors make the distribution of each statistic or KPI asymmetric, and the Yeo-Johnson method [31] makes these distributions more symmetric. Additionally, even though basketball is full of statistics and metrics, the number of records is limited: each NBA season has 82 games plus the playoffs, and not all players participate in all games each year. Because our study covers only the last three seasons, the ratio of records to generated features was not ideal. For this reason, three feature selection methods [32] are applied; in each experiment, features that did not add to the explained variance of the model were removed.
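The target transformation can be sketched with scikit-learn's PowerTransformer, which implements Yeo-Johnson; unlike Box-Cox, Yeo-Johnson also accepts zero and negative values, which occur in statistics such as Plus-Minus. The gamma-distributed target below is a synthetic stand-in for a right-skewed statistic, not study data.

```python
import numpy as np
from sklearn.preprocessing import PowerTransformer


def sample_skewness(x):
    """Fisher-Pearson sample skewness (biased estimator)."""
    x = np.asarray(x, dtype=float).ravel()
    d = x - x.mean()
    return float(np.mean(d ** 3) / np.mean(d ** 2) ** 1.5)


rng = np.random.default_rng(0)
# Synthetic right-skewed target, shaped as a column vector as scikit-learn expects.
y = rng.gamma(shape=2.0, scale=5.0, size=500).reshape(-1, 1)

pt = PowerTransformer(method="yeo-johnson", standardize=True)
y_t = pt.fit_transform(y)           # estimate lambda by maximum likelihood, then transform
y_back = pt.inverse_transform(y_t)  # map (predicted) values back to the original scale

skew_before, skew_after = sample_skewness(y), sample_skewness(y_t)
print(f"lambda={pt.lambdas_[0]:.3f}, skew before={skew_before:.2f}, after={skew_after:.2f}")
```

Models are then trained on the transformed target, and their predictions are passed through `inverse_transform` before computing MAPE on the original scale.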

The first method, removing multicollinearity between features [33], is applied because the datasets contain highly correlated features: a player's performance is described at specific levels (attack, defence, teamwork), and such correlations increase the variance of the coefficient estimates, generating noise. This method drops each feature that is highly linearly correlated with another feature and less correlated with the target variable. The threshold is set to 0.50, so features with inter-correlations higher than 0.50 are dropped. At the next stage, the feature importance method [34] is applied to constrain the feature space and improve modelling efficiency, using a mix of permutation importance approaches based on linear correlation with the target variable, RF and AB. With the feature selection threshold set to 0.9, only the features that explain at least 90 per cent of the dataset's variance are kept. Lastly, the ignore low variance method [35] focuses on the categorical features: playoffs, opponent, and season year. According to this method, features with statistically insignificant variance are removed, where variance is measured by the number of unique values relative to the number of samples. A feature is removed when two conditions hold: first, the count of its unique values divided by the sample size is less than ten per cent; second, the count of its most common value divided by the count of its second most common value is greater than twenty. The data are available at each of the three stages of this research (raw, pre-processed, and after feature engineering) in the linked GitHub repository.
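Two of these filters can be sketched from scratch with pandas. This is an illustration of the described rules, not PyCaret's internal implementation: a greedy pairwise multicollinearity filter that, for each pair with absolute correlation above the threshold, drops the feature less correlated with the target, and a low-variance filter using the unique-ratio and frequency-ratio conditions. Data and column names are synthetic.

```python
import numpy as np
import pandas as pd


def drop_multicollinear(X, y, threshold=0.5):
    """For each pair with |r| > threshold, drop the feature less correlated with y."""
    corr = X.corr().abs()
    target_corr = X.corrwith(y).abs()
    cols = list(X.columns)
    dropped = set()
    for i, a in enumerate(cols):
        for b in cols[i + 1:]:
            if a in dropped or b in dropped:
                continue
            if corr.loc[a, b] > threshold:
                dropped.add(a if target_corr[a] < target_corr[b] else b)
    return X.drop(columns=[c for c in cols if c in dropped])


def drop_low_variance(X, max_unique_ratio=0.10, min_freq_ratio=20.0):
    """Drop columns with unique/n < max_unique_ratio AND top/second-top count > min_freq_ratio."""
    keep = []
    for col in X.columns:
        counts = X[col].value_counts()  # sorted from most to least frequent
        unique_ratio = len(counts) / len(X)
        freq_ratio = counts.iloc[0] / counts.iloc[1] if len(counts) > 1 else float("inf")
        if not (unique_ratio < max_unique_ratio and freq_ratio > min_freq_ratio):
            keep.append(col)
    return X[keep]


rng = np.random.default_rng(1)
n = 200
x1 = rng.normal(size=n)
X = pd.DataFrame({"x1": x1, "x2": x1 + 0.1 * rng.normal(size=n), "x3": rng.normal(size=n)})
y = pd.Series(x1 + 0.1 * rng.normal(size=n))
reduced = drop_multicollinear(X, y, threshold=0.5)  # keeps one of x1/x2, plus x3

cats = pd.DataFrame({
    "playoffs": [0] * 99 + [1],                                   # nearly constant
    "season": ["2019-20", "2020-21", "2021-22"] * 33 + ["2021-22"],
})
filtered = drop_low_variance(cats)  # keeps only "season"
print(list(reduced.columns), list(filtered.columns))
```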

3.5 Modelling

Throughout this research, the main target is to predict with each of the selected ML models and compare their results for each basketball performance statistic, KPI and overall performance. To this end, a ranking table based on the bespoke WAPE metric summarises and benchmarks the performance of each ML model. After data preparation, each of the 90 datasets (one per player) is used to test 18 BPPF metrics with 14 ML models.

The PyCaret library allows us to train, tune, test and evaluate the models within a single workflow. Besides regression, PyCaret supports classification, clustering, anomaly detection, natural language processing, association rule mining and time series applications [36]. Additionally, it offers automated feature selection and model ensembling (bagging, boosting, blending) methods for optimised model performance [37, 38].

3.6 Linear, tree-based, non-parametric and online learning models in SA

In this study, an extensive forecasting performance analysis has been carried out on a pool of 90 players and their corresponding 18 performance metrics and KPIs. In addition, 14 different models have been trained in the case studies and are evaluated on their performance in SA applications. These models can be separated into four categories: Linear-based [39], Tree-based [40], Non-parametric [41], and Online-learning [42].
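A per-dataset comparison across the four categories can be sketched with scikit-learn estimators. This is a sketch under stated assumptions: the data are synthetic (target shifted to stay positive so MAPE is defined), default hyperparameters are used, the placement of AB under tree-based, KNN under non-parametric and PA under online learning is assumed, and LGBM is omitted to avoid a lightgbm dependency (`lightgbm.LGBMRegressor` would slot into the tree-based group).

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.ensemble import (AdaBoostRegressor, ExtraTreesRegressor,
                              GradientBoostingRegressor, RandomForestRegressor)
from sklearn.linear_model import (BayesianRidge, ElasticNet, HuberRegressor, Lars, Lasso,
                                  PassiveAggressiveRegressor, Ridge)
from sklearn.metrics import mean_absolute_percentage_error
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsRegressor
from sklearn.tree import DecisionTreeRegressor

# ASSUMPTION: category placement of AB, KNN and PA; LGBM omitted from this sketch.
MODELS = {
    "linear": {"LASSO": Lasso(), "EN": ElasticNet(), "BR": BayesianRidge(),
               "LARS": Lars(), "HR": HuberRegressor(max_iter=1000), "RR": Ridge()},
    "tree-based": {"DT": DecisionTreeRegressor(random_state=0),
                   "ET": ExtraTreesRegressor(random_state=0),
                   "RF": RandomForestRegressor(random_state=0),
                   "GBM": GradientBoostingRegressor(random_state=0),
                   "AB": AdaBoostRegressor(random_state=0)},
    "non-parametric": {"KNN": KNeighborsRegressor()},
    "online-learning": {"PA": PassiveAggressiveRegressor(random_state=0)},
}

# Synthetic stand-in for one player's dataset; shift keeps the target positive for MAPE.
X, y = make_regression(n_samples=300, n_features=10, noise=10.0, random_state=0)
y = y - y.min() + 50.0
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.25, random_state=0)

scores = {}
for category, models in MODELS.items():
    for name, model in models.items():
        model.fit(X_train, y_train)
        scores[(category, name)] = 100 * mean_absolute_percentage_error(y_test, model.predict(X_test))

for (category, name), score in sorted(scores.items(), key=lambda kv: kv[1]):
    print(f"{name:6s} {category:16s} MAPE={score:6.2f}%")
```

On synthetic linear data the linear models will naturally do well; the ordering says nothing about basketball targets and only illustrates the benchmarking loop.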

3.6.1 Linear models

Models that predict the target value from a linear combination of features belong to the Linear-based category. They model the relationship between a scalar response and explanatory variables under the assumption that the dependent and independent variables are linearly related, and they work by finding the line that best fits the data. Consequently, they perform excellently when the data are linearly related. In the regression setting followed in this methodology, an estimation procedure is applied to find the best-fit line through the training data [43]. These models, founded on statistical modelling, highlight the intersection of data-driven prediction and statistical theory [44].

Least Absolute Shrinkage and Selection Operator (LASSO): a linear regression and regularisation technique from statistical learning [44] that uses shrinkage to determine the coefficients. It is also called a penalized regression method because it penalizes the less essential features and automatically performs feature selection. Therefore, it is usually preferred when data have high dimensionality and multicollinearity [45].

Elastic Net (EN): a penalized linear regression model that combines RR and LASSO regularization. Like LASSO, it can produce reduced models with zero-valued coefficients. It is mostly used on data in which predictors are highly correlated [46].

Bayesian Ridge (BR): in Bayesian regression, the regularization parameters are included in the estimation procedure; BR applies ridge-style regularization within a Bayesian estimator, and its coefficients are determined as a posterior estimate. Unlike models that rely on point estimation, BR works with probability distributions [47].

Least Angle Regression (LARS): a model similar to forward stepwise regression. In datasets with many attributes, at each stage it identifies the attribute most correlated with the target value. When several attributes are equally correlated, it proceeds in a direction equiangular between them, and it keeps moving the regression line in that direction until another attribute becomes equally or more correlated [48].

Huber Regression (HR): A regression technique that is robust to data containing outliers. HR uses a loss function that differs from the least squares loss: for small residuals, the penalty is the same as the least squares loss, but for large residuals it is lower, increasing linearly instead of quadratically [49].
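A short sketch comparing the two losses on the same residuals. The threshold `delta` (the switch point between quadratic and linear behaviour) is an illustrative value, and the function names are hypothetical.

```python
import numpy as np

def squared_loss(r):
    """Least squares loss: grows quadratically with the residual."""
    return 0.5 * r ** 2

def huber_loss(r, delta=1.35):
    """Quadratic for |r| <= delta, linear beyond it (continuous at |r| = delta)."""
    a = np.abs(r)
    return np.where(a <= delta, 0.5 * r ** 2, delta * (a - 0.5 * delta))

residuals = np.array([0.5, 1.0, 5.0, 20.0])
# Small residuals: identical penalties; large residuals: linear vs quadratic growth.
print(squared_loss(residuals))
print(huber_loss(residuals, delta=1.0))
```

For a residual of 20, the squared loss is 200 while the Huber loss with `delta=1.0` is 19.5, which is why a single outlying game has far less influence on an HR fit.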

Ridge Regression (RR): A linear regression model in which the coefficients are estimated not by ordinary least squares (OLS) but by the ridge estimator, which is biased but has lower variance than the OLS estimator. RR is usually applied when multicollinearity occurs, as it reduces the standard errors of the estimates [50].
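A minimal sketch of the ridge estimator in closed form. It uses hypothetical, nearly collinear synthetic data to illustrate why RR is preferred under multicollinearity; `lam` is the penalty strength, and `lam = 0` gives OLS.

```python
import numpy as np

def ridge(X, y, lam):
    """Ridge estimator (X^T X + lam*I)^{-1} X^T y; lam = 0 recovers OLS."""
    p = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(p), X.T @ y)

# Hypothetical data: x2 is almost a copy of x1 (strong multicollinearity).
rng = np.random.default_rng(2)
x1 = rng.standard_normal(50)
x2 = x1 + 0.01 * rng.standard_normal(50)
X = np.column_stack([x1, x2])
y = x1 + x2 + 0.1 * rng.standard_normal(50)

b_ols = ridge(X, y, 0.0)    # unbiased but unstable: can land far from (1, 1)
b_ridge = ridge(X, y, 1.0)  # slightly biased, much lower variance
print("OLS:  ", np.round(b_ols, 2))
print("Ridge:", np.round(b_ridge, 2))
```

The small bias that the penalty introduces is traded for a large reduction in variance along the nearly collinear direction.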

3.6.2 Tree-based models

Despite their different construction approaches, Tree-based models all use rules of conditional statements learned from the training data to generate predictions [51]. These models, deeply rooted in statistical decision theory, exemplify the fusion of statistical methods and ML [44]. A DT starts with a root node that, at each subsequent level, splits into branches, ending in leaves that hold the final decisions. Ensemble methods combine several trees. In bagging, different Tree-based models are trained independently and in parallel, and predictions are decided by voting or by averaging the individual predictions [52]. Boosting, in contrast, creates and trains the models sequentially, one by one, with each new model trained on the selected training data and on the mistakes of the previous model [53].

Gradient Boosting Machine (GBM): A boosting ensemble technique that uses DTs as weak learners. The weak learners are trained sequentially, each fitted to the negative gradient of the given loss function evaluated at the current predictions. With this methodology, the GBM Regressor performs well in capturing nonlinear relationships between the features and the target variable [54, 55].
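A minimal sketch of gradient boosting for the squared loss, assuming scikit-learn's `DecisionTreeRegressor` as the weak learner. For this loss the negative gradient is simply the residual, so each stage fits a shallow tree to the current residuals. The hyperparameters, helper names and synthetic data are illustrative, not those used in the study.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor

def gbm_fit(X, y, n_stages=100, learning_rate=0.1, max_depth=2):
    """Gradient boosting for squared loss L = 0.5*(y - F)^2.
    The negative gradient -dL/dF equals the residual y - F, so each weak
    learner (a shallow DT) is fitted to the current residuals."""
    f0 = float(y.mean())                  # initial constant prediction
    F = np.full(len(y), f0)
    trees = []
    for _ in range(n_stages):
        residuals = y - F                 # negative gradient of the squared loss
        tree = DecisionTreeRegressor(max_depth=max_depth, random_state=0)
        tree.fit(X, residuals)
        F += learning_rate * tree.predict(X)   # small step along the fitted gradient
        trees.append(tree)
    return f0, trees

def gbm_predict(X, f0, trees, learning_rate=0.1):
    return f0 + learning_rate * sum(t.predict(X) for t in trees)

# Illustrative nonlinear target (assumption).
rng = np.random.default_rng(3)
X = rng.uniform(-3, 3, size=(300, 1))
y = np.sin(X[:, 0]) + 0.1 * rng.standard_normal(300)

f0, trees = gbm_fit(X, y)
train_mse = float(np.mean((gbm_predict(X, f0, trees) - y) ** 2))
print(round(train_mse, 4))
```

Each tree alone is a weak, depth-2 approximation; the sequence of small corrective steps is what lets the ensemble follow the sine curve.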

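Random Forest (RF): A bagging ensemble method that uses DTs together with bootstrap sampling and aggregation. It combines several DTs as base learners, each grown on a bootstrap sample and considering a random subset of features at each split, and aggregates their outputs to determine the final prediction [56].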
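A minimal sketch of bootstrap aggregation with scikit-learn trees, assuming averaging as the aggregation rule for regression. Passing `max_features` smaller than the number of features adds the per-split random feature subsets that distinguish RF from plain bagging. The helper names and data are hypothetical.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor

def bagged_trees(X, y, n_trees=50, max_features=None, seed=0):
    """Bootstrap aggregation: each tree sees a bootstrap sample (drawn with
    replacement); max_features < n_features adds RF-style random feature
    subsets at every split."""
    rng = np.random.default_rng(seed)
    n = len(y)
    trees = []
    for _ in range(n_trees):
        idx = rng.integers(0, n, size=n)          # bootstrap sample
        tree = DecisionTreeRegressor(max_features=max_features,
                                     random_state=int(rng.integers(1_000_000)))
        trees.append(tree.fit(X[idx], y[idx]))
    return trees

def bagged_predict(trees, X):
    # Aggregation step: average the individual tree predictions.
    return np.mean([t.predict(X) for t in trees], axis=0)

# Hypothetical data: feature 2 is irrelevant.
rng = np.random.default_rng(5)
X = rng.uniform(-2, 2, size=(300, 3))
y = X[:, 0] ** 2 + X[:, 1] + 0.3 * rng.standard_normal(300)

trees = bagged_trees(X[:200], y[:200], max_features=1)  # RF-style: 1 random feature per split
pred = bagged_predict(trees, X[200:])
test_mse = float(np.mean((pred - y[200:]) ** 2))
print(round(test_mse, 3))
```

Averaging many deep, decorrelated trees reduces variance, which is the main reason bagging methods cope well with noisy, non-linear targets.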

Light Gradient Boosting Machine (LGBM): A GBM technique built on DTs. Unlike many other boosting implementations, which grow trees level-wise, LGBM grows trees leaf-wise, always splitting the leaf with the largest loss reduction. It also uses two further techniques, Gradient-based One-Side Sampling (GOSS) and Exclusive Feature Bundling (EFB), resulting in a faster training process, lower memory usage and, in many cases, higher accuracy [57].

Extra (Randomized) Trees (ET): An ensemble method related to bagging and RF that builds several DTs. Unlike RF, ET by default grows each tree on the whole training sample rather than on a bootstrap replicate. Its distinguishing feature is that the split value of each candidate feature is selected at random [58].
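A sketch of a single Extra-Trees node split in NumPy, assuming the sum of squared errors (SSE) as the split score. The random cut-point per candidate feature is the step that differs from DT and RF, which search thresholds exhaustively. The function names and data are illustrative.

```python
import numpy as np

def sse_split(x, y, t):
    """SSE of the two children produced by the rule x <= t."""
    left, right = y[x <= t], y[x > t]
    return sum(((part - part.mean()) ** 2).sum() for part in (left, right) if len(part))

def extra_trees_split(X, y, rng, n_candidates=None):
    """One Extra-Trees node split: pick candidate features at random, draw ONE
    random cut-point per feature uniformly in [min, max], keep the best of these
    random splits. Unlike DT/RF, no exhaustive threshold search is done."""
    p = X.shape[1]
    k = n_candidates or p
    best = None
    for j in rng.choice(p, size=k, replace=False):
        lo, hi = X[:, j].min(), X[:, j].max()
        if lo == hi:
            continue                        # a constant feature cannot be split
        t = rng.uniform(lo, hi)             # random split value
        score = sse_split(X[:, j], y, t)
        if best is None or score < best[0]:
            best = (score, int(j), float(t))
    return best

# Illustrative data (assumption): the target depends on the sign of feature 1.
rng = np.random.default_rng(0)
X = rng.standard_normal((100, 3))
y = (X[:, 1] > 0).astype(float)
score, j, t = extra_trees_split(X, y, rng)
print(j, round(t, 3), round(score, 3))
```

Randomising the cut-point makes each tree cheaper to grow and the trees less correlated with each other, at the price of individually less precise splits.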

Decision Tree (DT): A tree-structured regressor and one of the most widely used techniques in ML. It is constructed from a root node, representing the whole dataset, which splits into interior nodes. Each interior node tests one of the dataset's features, each branch represents a decision rule, and each leaf node holds the model's output. DTs are built incrementally, one split at a time. In supervised learning, DTs are the base of many ensemble ML models, and they can outperform linear-based models on nonlinear datasets [59].
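A compact sketch of the incremental construction described above, assuming SSE reduction as the split criterion and nested dictionaries as the tree representation. Both choices, and the helper names, are illustrative rather than a specific library's implementation.

```python
import numpy as np

def build_tree(X, y, depth=0, max_depth=3, min_samples=5):
    """Grow a regression tree incrementally: each call either returns a leaf
    (the mean target) or splits on the (feature, threshold) pair that gives
    the lowest sum of squared errors (SSE) in the two children."""
    if depth == max_depth or len(y) < min_samples or np.all(y == y[0]):
        return float(y.mean())                          # leaf node: model output
    best = None
    for j in range(X.shape[1]):                         # interior node tests one feature
        for t in np.unique(X[:, j])[:-1]:               # [:-1] keeps both children non-empty
            mask = X[:, j] <= t
            sse = (((y[mask] - y[mask].mean()) ** 2).sum()
                   + ((y[~mask] - y[~mask].mean()) ** 2).sum())
            if best is None or sse < best[0]:
                best = (sse, j, float(t), mask)
    if best is None:                                    # no valid split (constant features)
        return float(y.mean())
    _, j, t, mask = best
    return {"feature": j, "threshold": t,               # branch = decision rule x[j] <= t
            "left": build_tree(X[mask], y[mask], depth + 1, max_depth, min_samples),
            "right": build_tree(X[~mask], y[~mask], depth + 1, max_depth, min_samples)}

def predict_one(node, x):
    """Follow the decision rules from the root until a leaf is reached."""
    while isinstance(node, dict):
        node = node["left"] if x[node["feature"]] <= node["threshold"] else node["right"]
    return node

# Illustrative step-function data (assumption).
X = np.arange(20, dtype=float).reshape(-1, 1)
y = np.where(X[:, 0] < 10, 1.0, 5.0)
tree = build_tree(X, y)
print(tree["threshold"], predict_one(tree, np.array([3.0])), predict_one(tree, np.array([15.0])))
```

On this step function a single split at 9 separates the two levels perfectly, so both children become leaves immediately.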

AdaBoost (AB): A boosting ensemble method. It first fits a regressor on the original dataset and then fits additional copies of the regressor on the same data, adjusting the instance weights according to the error of the current prediction so that subsequent regressors focus on the instances that were predicted poorly [60].
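A sketch of one AdaBoost.R2 reweighting round (the boosting variant implemented by scikit-learn's `AdaBoostRegressor`), assuming the linear loss. The helper name and the toy values are hypothetical. A full implementation would also fit the next regressor on the reweighted data and combine all regressors by a weighted median.

```python
import numpy as np

def adaboost_r2_reweight(weights, y_true, y_pred):
    """One AdaBoost.R2 (Drucker, 1997) reweighting step with the linear loss.
    Returns (new normalised weights, beta), or None when the weighted average
    loss is >= 0.5 (boosting stops in that case)."""
    err = np.abs(y_true - y_pred)
    if err.max() == 0:
        return weights.copy(), 0.0                # perfect fit: nothing to reweight
    loss = err / err.max()                        # per-sample loss scaled to [0, 1]
    avg_loss = float(np.sum(weights * loss))
    if avg_loss >= 0.5:
        return None
    beta = avg_loss / (1.0 - avg_loss)            # small beta = confident regressor
    new_w = weights * beta ** (1.0 - loss)        # well-predicted samples are down-weighted most
    return new_w / new_w.sum(), beta

# Hypothetical toy round: only the last sample is predicted badly.
w = np.full(4, 0.25)
new_w, beta = adaboost_r2_reweight(w, np.array([1.0, 2.0, 3.0, 4.0]),
                                   np.array([1.0, 2.0, 3.0, 8.0]))
print(np.round(new_w, 4), round(beta, 4))
```

After one round, the badly predicted sample carries half of the total weight, so the next regressor concentrates on it.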

3.6.3 Non-parametric model

Non-Parametric methods work in the opposite way to Parametric methods, which make assumptions about the form of the function that best fits the training data. A non-parametric model makes no prior assumptions about the form of the underlying function, leaving it unspecified [61]. This approach demonstrates the statistical modeling principle of allowing data to guide model structure, providing flexibility in handling complex datasets [44].

K-Nearest Neighbours (KNN): A non-parametric method that predicts the target variable by averaging the target values of the observations in the same “neighbourhood” of the feature space. In some cases, the neighbourhood size is set through cross-validation to minimize the mean squared error [62].
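A minimal NumPy sketch of KNN regression with the neighbourhood size chosen by cross-validated mean squared error, as described above. The candidate values of `k`, the fold count, the helper names and the data are assumptions for illustration.

```python
import numpy as np

def knn_predict(X_train, y_train, X_query, k):
    """Average the targets of the k nearest training points (Euclidean distance)."""
    d = np.linalg.norm(X_query[:, None, :] - X_train[None, :, :], axis=2)
    nearest = np.argsort(d, axis=1)[:, :k]
    return y_train[nearest].mean(axis=1)

def choose_k(X, y, candidates=(1, 3, 5, 7, 9), n_folds=5, seed=0):
    """Pick the neighbourhood size with the lowest cross-validated MSE."""
    idx = np.random.default_rng(seed).permutation(len(y))
    folds = np.array_split(idx, n_folds)
    scores = {}
    for k in candidates:
        errs = []
        for f in folds:
            train = np.setdiff1d(idx, f)            # all indices not in this fold
            pred = knn_predict(X[train], y[train], X[f], k)
            errs.append(np.mean((pred - y[f]) ** 2))
        scores[k] = float(np.mean(errs))
    return min(scores, key=scores.get), scores

# Illustrative noisy non-linear data (assumption).
rng = np.random.default_rng(7)
X = np.linspace(0, 10, 100).reshape(-1, 1)
y = np.sin(X[:, 0]) + 0.2 * rng.standard_normal(100)
best_k, cv_scores = choose_k(X, y)
print(best_k, {k: round(v, 4) for k, v in cv_scores.items()})
```

Small `k` follows noise, large `k` over-smooths; cross-validation picks the compromise without assuming any functional form.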

3.6.4 Online-learning model

Online learning is a fundamentally different approach in which models are not trained on all the data at once. Instead, data arrive in sequential order, and the best predictor is updated at each learning step [63, 64].

Passive Aggressive Regressor (PA): A less commonly used model, designed for cases in which data stream in continuously. It learns by being fed individual samples or mini-batches sequentially, and its loss function, the epsilon-insensitive loss, is the regression counterpart of the standard hinge loss [65].
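A minimal sketch of the PA-I regression update (Crammer et al., 2006), assuming the epsilon-insensitive loss that scikit-learn's `PassiveAggressiveRegressor` uses by default. Samples are processed one at a time, as in a stream. `epsilon`, `C`, the helper name and the synthetic stream are illustrative.

```python
import numpy as np

def pa_regression_step(w, x, y, epsilon=0.1, C=1.0):
    """One Passive-Aggressive (PA-I) update for regression.
    Passive: if the prediction is within epsilon of y, w is left unchanged.
    Aggressive: otherwise w moves just enough (step capped by C) to reduce the loss."""
    y_hat = w @ x
    loss = max(0.0, abs(y - y_hat) - epsilon)     # epsilon-insensitive loss
    if loss == 0.0:
        return w
    tau = min(C, loss / (x @ x))                  # step size
    return w + np.sign(y - y_hat) * tau * x

# Illustrative noise-free stream (assumption): y = 2*x0 - x1.
rng = np.random.default_rng(8)
w_true = np.array([2.0, -1.0])
w = np.zeros(2)
for _ in range(500):                              # samples arrive one at a time
    x = rng.standard_normal(2)
    w = pa_regression_step(w, x, float(w_true @ x))
print(np.round(w, 3))
```

The model never revisits earlier samples, which suits streams but also means it needs many instances to settle, a point relevant to the small per-player datasets discussed later.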

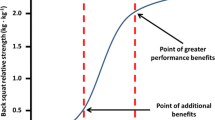

3.6.5 Performance forecasting optimization

BPPF offers many advantages across various industries. The main goal is always to predict each player's potential performance as accurately as possible for long- or short-term decision-making. The approach followed in this research provides an opportunity to investigate and evaluate methods for improving forecasting results [66]. In basketball, composite (formula-based) KPIs give an overview of a player's performance and evaluate it. In this research, in addition to investigating the forecasting performance of different ML models in 90 players' case studies, an alternative approach for forecasting composite KPIs has been created and evaluated.

The target KPI evaluated with the different forecasting approaches is FP. As mentioned before, FP is a KPI constructed from advanced basketball statistics. In the base methodology, FP is computed with its formula and forecasted directly as a single target. In the alternative methodology, each statistic that contributes to the formula is forecasted independently, and the KPI is then computed from the predictions. With this method, we investigate whether KPI forecasting results can be improved, by comparing the prediction results of ten ML models using the average MAE and MAPE over the 90 players' case studies.
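The alternative methodology can be sketched as follows: each component statistic is forecast separately, rounded to an integer, and the KPI is then computed from the forecasts. The default weights are an assumption (a commonly used NBA fantasy scoring convention) and should be replaced by the coefficients of formula (2). The input values and helper names are hypothetical.

```python
# ASSUMED weights (common NBA fantasy-scoring convention); replace with the
# coefficients of formula (2) if they differ.
ASSUMED_FP_WEIGHTS = {"PTS": 1.0, "REB": 1.2, "AST": 1.5, "STL": 3.0, "BLK": 3.0, "TOV": -1.0}

def fp_from_components(preds, weights=ASSUMED_FP_WEIGHTS):
    """Build FP from per-statistic forecasts. Each forecast is rounded to an
    integer before the KPI is constructed."""
    return sum(w * round(preds[stat]) for stat, w in weights.items())

# Hypothetical per-statistic forecasts for one upcoming game.
component_preds = {"PTS": 24.6, "REB": 7.4, "AST": 5.2, "STL": 1.3, "BLK": 0.6, "TOV": 2.8}
fp_composed = fp_from_components(component_preds)
print(round(fp_composed, 2))
```

Python's built-in `round` rounds exact halves to the nearest even integer, so a forecast of 2.5 becomes 2; a different rounding rule would change the constructed KPI slightly.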

4 Findings

Achieving highly accurate BPPF requires extending research on state-of-the-art ML models. Open SA data are always advantageous for experimenting with different DM and ML approaches [67]. At this point of the research, the selected forecasting approach allows us to identify possible trends in a player's performance over the latest 10 matches in which he participated and to forecast his upcoming performance. The results provide an overview of how each ML model described above performs when forecasting a player's upcoming performance with the selected approach. In this research, 14 ML models are compared, and 18 key performance metrics are forecasted in each of the 90 player case studies.

In the first stage, an evaluation ranking metric is constructed by weighting each metric's MAPE result according to how useful that key performance metric is in giving an overview of a player's performance. Based on this metric, an ML model ranking table is built, showing how well each ML model can forecast a player's performance across different metrics. In the second stage, we compare these results with new ones generated by an experiment that follows a different forecasting approach. We focus on one KPI of basketball player performance, FP, which is formulated from basic basketball key performance metrics. In this experiment, ten of the 14 models were selected based on the ranking table, tested and compared on the 90 players' case studies, and evaluated by averaging the models' Mean Absolute Percentage Error (MAPE) [68].

4.1 Results scope

This research is based on 90 case studies of different high-performance NBA players. In each case, the dataset follows the same split: 70% for training, 20% for testing and 10% for validation with unseen data. The data cover the three latest seasons, up to and including the 2021–22 season, and the unseen data are the 18 latest matches of the 2021–22 season, excluding playoffs. The predicted target key performance basketball metrics are the following: AST, AST RATIO, AST/TO, BLK, EFF, EFG%, FOUR FACTORS, FP, GMSC, NETRTG, PIE, PM, PTS, REB, STL, TENDEX, TOV and USG%. The ML models tested are the ET Regressor, RF Regressor, DT Regressor, LARS, LASSO Regression, EN, GBM Regressor, KNN Regressor, LGBM, BR, AB Regressor, RR, Passive Aggressive (PA) Regressor and HR. The selected evaluation metrics for each model are MAPE and Mean Absolute Error (MAE) [68].
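A minimal sketch of the 70/20/10 split, assuming each player's games are sorted by date and split chronologically without shuffling; the text states only that the unseen set consists of the latest matches. The helper name and the 180-game example are hypothetical.

```python
def chronological_split(n_games, train=0.7, test=0.2):
    """Split game indices in time order (assumption): oldest 70% for training,
    next 20% for testing, most recent remainder (~10%) held out as unseen data.
    No shuffling, so no test or unseen game precedes a training game."""
    n_train = int(round(n_games * train))
    n_test = int(round(n_games * test))
    idx = list(range(n_games))
    return idx[:n_train], idx[n_train:n_train + n_test], idx[n_train + n_test:]

# Hypothetical player with 180 games in date order.
tr, te, va = chronological_split(180)
print(len(tr), len(te), len(va))
```

Keeping the most recent games for validation mirrors the real use case: forecasting matches that come after everything the model has seen.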

4.2 Machine learning models ranking score

The purpose of this section is to compare the overall performance scores of ML models in SA, and especially in basketball, using WAPE, which is constructed as a formula (1) of the weighted MAPE results of the forecasted basketball performance metrics. The weight of each metric is selected based on the significance of that statistic in evaluating an individual basketball player's overall performance in each match played. Each KPI or advanced metric that gives an overview of one aspect of player performance contributes to the formula with a 4% share; statistics and KPIs that give an overview of the domain impact of each player's performance contribute with a 5% share; and KPIs that evaluate the player's overall performance, considering his attacking, defence, teamwork, and contribution to the win, are weighted with 7%.
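A minimal sketch of the WAPE computation, assuming formula (1) is the share-weighted sum of the per-metric MAPE values with shares summing to 100%. The three-metric example and its weights are hypothetical; in the study, each of the 18 metrics receives a 4%, 5% or 7% share.

```python
def wape(mape_by_metric, weights):
    """Weighted average of per-metric MAPE values (in %).
    Weights are shares that must sum to 1 (e.g. 0.04, 0.05 or 0.07 per metric)."""
    total = sum(weights.values())
    if abs(total - 1.0) > 1e-9:
        raise ValueError(f"weights sum to {total}, expected 1.0")
    return sum(weights[m] * mape_by_metric[m] for m in weights)

# Hypothetical three-metric example (weights and MAPE values are illustrative only).
score = wape({"PTS": 40.0, "REB": 50.0, "AST": 48.0},
             {"PTS": 0.5, "REB": 0.25, "AST": 0.25})
print(score)
```

Because lower MAPE is better, a lower WAPE also indicates a better model, and the weights decide how much each metric can move the ranking.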

The WAPE formula (1) is given below:

An extended presentation of the results of both the test and validation procedures is given in Appendix Tables 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18 and 19, which evaluate each ML model's forecasting performance with MAE, MAPE, Mean Squared Error (MSE), Root Mean Squared Error (RMSE) [69] and Root Mean Squared Logarithmic Error (RMSLE) [70].
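For reference, a sketch of the five evaluation metrics for one forecasted statistic, using their standard definitions; the helper name and the toy values are hypothetical. MAPE is undefined when a true value is zero, which can occur for low-count statistics such as BLK or STL.

```python
import numpy as np

def evaluation_metrics(y_true, y_pred):
    """MAE, MAPE (%), MSE, RMSE and RMSLE for one forecasted statistic.
    MAPE is undefined when y_true contains zeros; RMSLE requires values > -1."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    err = y_pred - y_true
    mse = float(np.mean(err ** 2))
    return {
        "MAE": float(np.mean(np.abs(err))),
        "MAPE": float(np.mean(np.abs(err / y_true)) * 100),
        "MSE": mse,
        "RMSE": mse ** 0.5,
        "RMSLE": float(np.sqrt(np.mean((np.log1p(y_pred) - np.log1p(y_true)) ** 2))),
    }

# Hypothetical actual vs forecast values for three games.
m = evaluation_metrics([20, 10, 25], [22, 9, 25])
print({k: round(v, 4) for k, v in m.items()})
```

MAE and RMSE are in the statistic's own units, whereas MAPE is scale-free, which is why MAPE is the one that can be compared and weighted across different statistics.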

4.2.1 Cross-validation strategy

The cross-validation strategy followed is k-fold, with 10 folds for each ML model trained and tested, and Appendix Table 5 gives an overview of the average MAPE results. Table 2 gives an overview of the average MAPE results of only the higher-performing models on the test data, for each aforesaid key performance statistic and ML model, with bold values indicating the lowest MAPE averages and WAPE metrics.
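A sketch of 10-fold cross-validation with scikit-learn, assuming MAPE as the score and ET as the example model. The synthetic data are hypothetical, and `shuffle` is left at its default (`False`), which is an assumption about the study's setup.

```python
import numpy as np
from sklearn.ensemble import ExtraTreesRegressor
from sklearn.model_selection import KFold, cross_val_score

# Hypothetical data: synthetic stand-ins for lag features and a positive target.
rng = np.random.default_rng(4)
X = rng.standard_normal((120, 5))
y = 30 + 5 * X[:, 0] + rng.standard_normal(120)   # kept away from zero so MAPE is defined

cv = KFold(n_splits=10)                            # 10 folds; shuffle=False (assumption)
scores = cross_val_score(ExtraTreesRegressor(random_state=0), X, y, cv=cv,
                         scoring="neg_mean_absolute_percentage_error")
mean_mape = -scores.mean() * 100                   # scorer returns the negated fraction
print(round(mean_mape, 2))
```

scikit-learn reports MAPE as a negated fraction so that "higher is better" holds for all scorers; negating and multiplying by 100 gives the percentage used in the tables.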

Comparing the test results for individual metrics using BPPF (RQ1) with the overall results based on WAPE (RQ2), we conclude that they are similar. The three most efficient models were ET, with a WAPE of 35.83%, dominating in three target variables (NETRTG, TOV and USG%); DT, with a WAPE of 35.87%, performing best in six forecasted statistics and KPIs (AST, AST RATIO, 4Factors, REB, STL and TENDEX); and RF, with a WAPE of 35.92%, which was more efficient in three player performance evaluation metrics (FP, PM and PTS). The other five models in Table 2 scored close to each other, with LASSO first among them, forecasting four metrics best (AST/TO, EFF, EFG% and GMSC). According to Table 5 in the Appendix, HR came last in the WAPE table in testing, with a WAPE of 41.47%. Furthermore, the bottom seven models and EN did not, on average, outperform all the others in any of the player performance evaluation statistics.

4.2.2 Forecasting on unseen data

Additionally, to validate our models' performance, the ML models trained for each player's case study and performance metric are challenged with unseen data (RQ1). Table 5 in the Appendix gives an overview of the average MAPE results, and Table 3 gives an overview of the average MAPE results of only the higher-performing models on the unseen data, for each aforesaid key performance statistic and ML model, with bold values highlighting the lowest MAPE averages and WAPE metrics.

As in testing, the top three performing models (RQ2) were ET, RF and DT. ET achieved the best (lowest) WAPE, 34.14%, performing best in four forecasted metrics (AST RATIO, PIE, PM and TOV). In second place, RF had a WAPE of 34.23%, outperforming the others in three targets (AST, PTS and USG%), and DT came third with a WAPE of 34.41%, being more efficient in forecasting REB, STL and TENDEX. According to Table 5 in the Appendix, HR was the least efficient ML model for forecasting in SA with the selected approach, with a WAPE of 43.04%, and the same pattern as in testing occurred: the bottom seven models and EN did not manage to be the most efficient in any of the forecasted player performance evaluation metrics.

4.2.3 Individual results per player’s performance evaluation metric

Based on the results of the experiments in the validation procedure (RQ1), only six of the 14 models qualify as the best predictor of at least one advanced individual statistic in basketball (based on MAPE). Besides achieving the best WAPE, ET made better predictions than the other models for AST RATIO at 45.52%, PIE at 12.3%, PM at 24.21% and TOV at 42.71%. RF came second in the ranking, best predicting AST with 48.75%, PTS with 42.62% and USG% with 21.37%. In third place, DT outperforms the others in REB with 49.4%, STL with 44.64%, and TENDEX with 23.46%. LARS had superior performance in predicting FOUR FACTORS with 25.75%, FP with 33.39% and NETRTG with 20.29%. LASSO performed best in AST/TO at 63.6%, EFF at 32.09%, EFG% at 29.32% and GMSC at 28.86%. Finally, GBM exceeds the other models' performance only in BLK (RQ1).

4.3 KPIs forecasting optimization

The basketball KPI selected for the extended experiments is FP. Table 4 presents the results of forecasting FP directly as the primary target variable, and of FP constructed with formula (2) from the predictions of its component metrics (PTS, REB, AST, STL, BLK and TOV). The lowest MAPE values are highlighted in bold.

The FP formula (2) is given as follows:

In the second experiment, the metrics mentioned above were forecasted individually, and the FP KPI was computed from the predictions.

In the first approach, FP was forecasted as a pre-constructed formula, and all ML models performed similarly. The best-performing models (RQ3) were LARS and EN, with MAPE on unseen data of 33.39% and 33.4%, respectively. In the second approach, all ML models again performed similarly, except for GBM: with the second forecasting approach, GBM outperformed LARS by more than 3.5%, with a MAPE of 29.81%.

In this experiment, the forecasted values of each player performance metric were rounded to integers before the FP KPI was constructed. For the reconstructed FP of the other models, the MAPE results in the validation procedure were: AB 32.58%, LGBM 32.62%, LARS 32.92%, LASSO 32.95%, RF 33.1%, KNN and EN 33.21%, ET 33.27% and BR 33.81%. It is worth mentioning that, excluding GBM, whose MAPE was 2.77% better than that of AB, the second model in the FP MAPE ranking, the other models' MAPE results did not vary significantly, with the maximum deviation being 1.23%. Additionally, each model's performance improved as follows: GBM by 4.15%, AB by 2.02%, LGBM by 2.06%, LARS by 0.47%, LASSO by 0.52%, RF by 0.76%, KNN by 0.54%, EN by 0.19% and ET by 0.47%. The only case in which performance did not improve was BR, which worsened by 0.68%. In conclusion, for every model except BR, forecasting the individual statistics produced better FP results than forecasting FP as an already structured KPI (RQ3).

5 Discussion

In this research, using a variety of forecasting experiments on 90 players' case studies, we identified which of the 14 selected model types produce better predictions for 18 different player performance metrics. Each player's case study was forecasted individually for each of the selected performance metrics with each of the selected ML model types. This approach was chosen to give a clear overview of the potential performance of ML models in SA. With 1-, 3-, 5-, 7- and 10-game lag features, this research shows that it is possible to predict each player's next-game performance, even though luck is a crucial, volatile factor that affects player performance.
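A minimal pandas sketch of game-lag features, assuming each feature is the mean of a statistic over the player's previous k games (k = 1, 3, 5, 7, 10). The exact lag construction used in the study may differ, and the helper and column names are hypothetical.

```python
import pandas as pd

def add_game_lag_features(games, stats, windows=(1, 3, 5, 7, 10)):
    """For each stat and window k, add the mean over the player's previous k games
    (assumed construction). shift(1) excludes the current game, so a feature
    never contains the value it is used to predict."""
    out = games.copy()
    for stat in stats:
        past = out[stat].shift(1)
        for k in windows:
            out[f"{stat}_last{k}"] = past.rolling(window=k, min_periods=k).mean()
    return out

# Hypothetical single-player game log in date order.
games = pd.DataFrame({"PTS": [10, 20, 30, 40, 50, 60]})
feats = add_game_lag_features(games, ["PTS"], windows=(1, 3))
print(feats)
```

Early games lack enough history and receive missing values, which in practice are dropped or imputed before training.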

5.1 Models performance

Based on the test and validation results, there is strong evidence that the Linear, Tree, Non-Parametric and Online-Learning based models perform differently in SA (RQ1). Using a metric named WAPE, we identified each model's overall performance (RQ2). We extended our research to investigate how, with a different approach, an SA KPI can be forecasted as accurately as possible (RQ3).

5.1.1 Linear models

The models in this category that were tested are LASSO, EN, BR, LARS, HR and RR. Based on Table 5 in the Appendix, the best performers are LARS in fourth place, LASSO in fifth place and EN in sixth place in the WAPE ranking table. This research shows that linear-based methods can perform well in predicting each player's upcoming performance; however, since the data are not linearly separable, because a player's performance depends on imponderables [71], linear-based models are not the best methodology.

LASSO, whose results are given in Table 12 of the Appendix, reached fifth place in the ranking table with a WAPE of 34.53%, predicting four player performance evaluation metrics best on average. LASSO penalizes less essential features [72], which was crucial to its performance. Each player's final dataset contained 398 features; even though three different feature selection methods had already been applied, LASSO, by further penalizing the less important remaining features, produced the best forecasts for AST/TO, EFF, EFG% and GMSC. EN, in Table 11 of the Appendix, ranked in the top six of the WAPE ranking table with 34.71%; however, EN, a hybrid of LASSO and RR that can produce zero-valued coefficients, performed worse than LASSO but better than RR. Even though multiple features were highly correlated [73], EN did not outperform the other models in any forecasted metric, although it performed well overall. BR, in Table 10 of the Appendix, which applies RR within a Bayesian probabilistic framework [74], did not forecast any player performance metric better than the other models.

However, even though it ranked tenth, its WAPE of 35.42% was close to that of the winners. LARS, in Table 9 of the Appendix, achieved fourth place in the ranking table with a WAPE of 34.52% and was the best Linear-based model, predicting three essential metrics and KPIs that evaluate a player's overall performance better than the other models: FOUR FACTORS, FP and NETRTG. LARS is well suited to feature selection with cross-validation, and it often performs better than other models when the number of features exceeds the number of data instances [75], a situation that occurred in many players' cases with the followed approach because of the limited number of instances (matches played). HR, in Table 16 of the Appendix, performed the worst of the selected models, ranking last with a WAPE of 41.46%. It proved not to be the best option for the followed approach: although for small residuals its penalty is the same as the least squares loss, for large residuals the penalty increases linearly rather than quadratically [76], which makes it robust to outliers rather than better suited to these data. RR, in Table 17 of the Appendix, was ranked twelfth with a WAPE of 40.68%, worse by more than 5% WAPE than AB in eleventh place. RR does not perform feature selection and is applied to reduce standard errors [77]; in this study, it did not predict any of the forecasted player performance metrics better than the other models.

5.1.2 Tree based models

GBM, RF, LGBM, ET, DT and AB are the Tree-based models selected and tested in this research. Based on the results, Tree-based models are the best for predicting players' upcoming performance, with ET ranked first in the WAPE table, RF second, DT third, GBM seventh, LGBM ninth, and AB eleventh. The strong performance of Tree-based models may be explained by the data of the followed experimental approach being non-linearly separable, with complex, non-linear relationships and many features relative to the number of data instances [78].

GBM, in Table 7 of the Appendix, finished in the top seven with a WAPE of 34.83%. Additionally, it outperforms the others in predicting players' BLK, a performance metric that is difficult to predict because of the variable's low variance [79]. RF, a bagging ensemble method whose performance is presented in Table 5 of the Appendix, ranked second with a WAPE of 34.23%, predicting three player performance metrics best. Together with ET, this shows that bagging methods are an excellent choice with the selected forecasting approach, handling unbalanced, non-linear data very well [80]. LGBM ranked ninth with a WAPE of 35.2%, and its performance is shown in Table 13 of the Appendix. It can be considered suitable for forecasting players' overall performance, even though it does not outperform the others in any forecasted metric. LGBM usually performs less well than other ensemble methods when data instances are limited [81]. ET was the best-performing model in this research, with a WAPE of 34.14% (Table 14 of the Appendix), performing best on average in four player performance metrics. This suggests that bagging ensemble methods perform slightly better for forecasting in SA, through randomised trees built on non-linear data [82]. DT, a fundamental ML method, performed well, ranking third in the WAPE table with 34.41%. It outperformed the other ML models in forecasting three player performance metrics; its detailed forecasting performance is presented in Table 18 of the Appendix. Additionally, it performs implicit feature selection, so unimportant features do not influence its outputs [83]. AB achieved eleventh place with a WAPE of 35.35% (Table 7 of the Appendix) and does not predict any of the selected player performance metrics better than the other models. AB is a boosting ensemble Tree-based method [84], and even though it does not rank in the top ten of the ranking table, its overall performance is close to that of higher-ranked ML models.

5.1.3 Non-parametric

The non-parametric model tested in this experiment is K-Nearest Neighbours (KNN), which ranked eighth in the WAPE ranking table. Without making any prior assumptions about the model function, a non-parametric model adapts its fit directly to the training data.

KNN, the selected Non-Parametric method, whose forecasting performance scores are shown in Table 15 of the Appendix, ranked eighth with a WAPE of 35.11%. Because it needs no assumptions and its tuning parameters can be adjusted to fit each case, KNN was a suitable choice for experiments with non-linear data [85]. However, KNN often underperforms when observations are sparsely spread across the feature space.

5.1.4 Online learning

Of the selected ML models, only PA belongs to the Online Learning category. Online Learning was a high-expectation category because it is used in cases where models need to adapt dynamically to new data patterns [86], and each player's performance is neither stable nor linearly changing.

PA finished in penultimate place in the WAPE ranking table with 40.77%, and its performance scores are shown in Table 19 of the Appendix. It did not generate better forecasts than the other ML models for any of the selected player performance metrics. The amount of available data is crucial to this model's performance, as it is designed for big data [87]. Because the selected approach applies each model to individual players' datasets, data instances were limited, causing PA to underperform.

5.2 WAPE ranking table

Forecasting the upcoming performance of NBA players has always been challenging, starting with the evaluation of individual players' performance metrics and continuing with appropriate forecasting and evaluation. The findings are based on the results of both the test procedures and the forecasts on unseen data. This research produced extended forecasting scenarios for 90 high-performance players, comparing 14 ML model types on 18 player performance evaluation metrics. The results show that, with the chosen approach, it is possible to generate valid predictions for high-performance NBA players with eleven of the fourteen selected ML model types: ET, RF, DT, LARS, LASSO, EN, GBM, KNN, LGBM, BR and AB. Evaluation results were similar in the test and validation procedures, showing that the best performers are the Tree-based models; however, most of the selected Linear-based models and KNN also performed well. Nevertheless, the results of the Online Learning model, PA, and of the Linear-based RR and HR were not as strong (RQ2).

5.3 KPIs forecasting optimization

The second phase of this research aimed to improve KPI forecasting results by applying a different approach and exploring the benefits of forecasting the individual statistics. The target KPI, FP, was selected because it summarizes players' performance using fundamental player performance evaluation statistics. It is used directly for performance evaluation in the sports industry, by teams and organisations, and in the betting industry via fantasy tournaments. This makes FP a significant statistic whose results interest everyone involved with basketball and the NBA [88].

Finally, the results in Table 4 indicate that all selected models perform well in forecasting FP with both approaches. However, improvements matter, because at the professional level every improvement, minor or significant, can make a difference in sports and betting. The Tree-based models again outperformed the others, with the GBM model producing exceptional results and a significant improvement in forecasting on unseen data. GBM forecast players' upcoming FP with a MAPE of 29.81%, ranking first among the models for forecasting FP (RQ3).

6 Conclusion & future work

6.1 Conclusion

Forecasting players' performance in any sport is considered a great challenge of significant importance [24]. For this reason, this research aimed to conduct an extended analysis and review of forecasting methods with game-lag features. Fourteen different ML models were applied to forecast 18 fundamental advanced basketball statistics for a pool of 90 high-performance NBA players. This research aimed to give an overview of each ML model's performance through a ranking table based on a proposed novel evaluation metric, WAPE, which weights the MAPE results of the forecasted advanced basketball statistics. Additionally, a comprehensive approach was introduced to extend research on KPI forecasting optimization, providing valuable insights into which approach produces better forecasting results.

This research was based on the NBA's Advanced Box-Score statistics for the last three seasons (2019–22), selecting the 90 highest-performing NBA players, filtered by their historical average results in the KPIs GMSC, 4Factors, TENDEX, FP and EFF and by their playing time. Furthermore, after appropriate data pre-processing and feature engineering for the selected player pool, 90 forecasting experiments with 14 ML models on 18 advanced basketball statistics were carried out.

Results showed that most ML models perform well, but the Tree-based models are the winners. The best performer was ET, with a WAPE of 34.14%, being the best predictor in four of the 18 statistics. In second place, RF, with a WAPE of 34.23%, outperformed the others in forecasting three of the 18 statistics, and in third place, DT, with a WAPE of 34.41%, predicted three of the 18 target variables better than the other ML models. It is worth mentioning that the Linear-based model LASSO also performed exceptionally, with a WAPE of 34.54% and the best forecasts for four of the 18 statistics. Additionally, good results were achieved by LARS with a WAPE of 34.53%, EN with 34.71%, GBM with 34.83% and KNN with 35.11% (RQ1).

This research classifies ML model performance according to the target advanced basketball metrics. Based on the results, we conclude that the best approach to forecasting in basketball is to use multiple ML models, each selected according to the target basketball statistic of the experiment. Additionally, this research benchmarks the performance of different fundamental ML models to identify the best-performing models for basketball player performance prediction. The results suggest that three Tree-based regression models, namely ET, RF, and DT, outperformed the other models. Moreover, LASSO, LARS, EN, GBM, and KNN were identified as promising choices for performance forecasting (RQ2).
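The per-statistic selection recommended above can be sketched as picking, for each target statistic, the model with the lowest validation MAPE. The helper name and the MAPE values below are hypothetical and serve only to show the mechanics.

```python
def best_model_per_statistic(mape_table):
    """mape_table[statistic][model] -> MAPE (%); return the lowest-MAPE model per statistic."""
    return {stat: min(scores, key=scores.get) for stat, scores in mape_table.items()}

# Hypothetical validation MAPE values, for illustration only.
mape_table = {
    "PTS": {"ET": 45.0, "RF": 44.0, "LASSO": 47.0},
    "BLK": {"ET": 62.0, "GBM": 60.0, "LASSO": 63.0},
}
selection = best_model_per_statistic(mape_table)
print(selection)
```

The resulting mapping can then drive which model is trained for each statistic in a new player's case study.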

The second stage aimed to improve the forecasting results for the FP KPI by following a different approach. Instead of forecasting FP as a composite value, the individual statistics that make up FP were forecasted separately, and FP was then computed from the generated predictions. The insights were notable: the forecasting results of nine of the ten selected ML models improved, with GBM achieving the best overall performance. In the first experiment, where FP was forecasted as a single unit, the best predictor was LARS, with a MAPE of 33.39%; in the second approach, GBM achieved a MAPE of 29.81%, improving the forecast by 3.58%. Finally, this research concludes that significantly better prediction results for the corresponding target basketball KPI can be obtained with a different forecasting approach (RQ3).

6.2 Future work

The experiments above provided significant results, giving an overview of BPPF with game-lag features [89]. Based on this work, there is room for improvement in predicting individual players' performance as accurately as possible, using open data provided by a valid source, the NBA itself.

Future work could consider forecasting each player individually, for each selected statistic, with all available models. As observed, Tree-based models have the best overall performance, but results differed across statistics, and for some statistics the best model was not Tree-based. Additionally, sentiment analysis [90,91,92,93,94] results could be generated for individual players or teams and, together with betting odds and transfer market data [95], could be included as forecasting features. Moreover, motion capture technologies [96] already provide a variety and volume of data that could be used as an additional source on players' in-game performance, capturing the recent playstyles and tactics of players' last games [97].

Furthermore, association rules [97,98,99,100] and pattern recognition [101, 102] could be applied to basketball statistics, forecasting results, and players as individuals or in teams. Those results could also be used as features in fundamental forecasting and analysis in the NBA. Moreover, other forecasting techniques could be applied to many basketball statistics; for example, classification [103] could be used for statistics with small variation, such as the BLK, STL, TOV and AST that a player achieves. Time-series techniques [104] could also be used for forecasting or for feature engineering and creation.

Data availability

Data are available for each of the three stages of this research (initially as raw collections, then pre-processed, and finally after feature engineering), all of which are accessible in the following GitHub repository: github.com/gpapageorgiouedu/Evaluating-the-Effectiveness-of-Machine-Learning-Models-for-Performance-Forecasting-in-Basketball.

References

Bai Z, Bai X (2021) Sports big data: management, analysis, applications, and challenges. Complexity 2021:1–11. https://doi.org/10.1155/2021/6676297

Li B, Xu X (2021) Application of artificial intelligence in basketball sport. J Educ Health Sport 11(7):54–67. https://doi.org/10.12775/JEHS.2021.11.07.005

Watanabe NM, Shapiro S, Drayer J (2021) Big Data and Analytics in Sport Management. J Sport Manag 35(3):197–202. https://doi.org/10.1123/jsm.2021-0067

Sarlis V, Tjortjis C (2020) Sports analytics — Evaluation of basketball players and team performance. Inf Syst. https://doi.org/10.1016/j.is.2020.101562

Aoki RYS, Assuncao RM, Vaz de Melo POS (2017) Luck is hard to beat. In: Proceedings of the 23rd ACM SIGKDD international conference on knowledge discovery and data mining, pp 1367–1376. https://doi.org/10.1145/3097983.3098045

Nguyen NH, Nguyen DTA, Ma B, Hu J (2022) The application of machine learning and deep learning in sport: predicting NBA players’ performance and popularity. J Inf Telecommun 6(2):217–235. https://doi.org/10.1080/24751839.2021.1977066

Morgulev E, Azar OH, Lidor R (2018) Sports analytics and the big-data era. Int J Data Sci Anal 5(4):213–222. https://doi.org/10.1007/s41060-017-0093-7

Terner Z, Franks A (2021) Modeling player and team performance in basketball. Annu Rev Stat Appl 8(1):1–23. https://doi.org/10.1146/annurev-statistics-040720-015536

Vinué G, Epifanio I (2019) Forecasting basketball players’ performance using sparse functional data. Stat Anal Data Min 12(6):534–547. https://doi.org/10.1002/sam.11436

Ahmadalinezhad M, Makrehchi M (2020) Basketball lineup performance prediction using edge-centric multi-view network analysis. Social Netw Anal Min. https://doi.org/10.1007/s13278-020-00677-0

Migliorati M (2020) Detecting drivers of basketball successful games: an exploratory study with machine learning algorithms. Electr J Appl Stat Anal 13(2):454–473. https://doi.org/10.1285/i20705948v13n2p454

Zhang F, Huang Y, Ren W (2021) Basketball sports injury prediction model based on the grey theory neural network. J Healthcare Eng. https://doi.org/10.1155/2021/1653093

Rangel W, Ugrinowitsch C, Lamas L (2019) Basketball players’ versatility: Assessing the diversity of tactical roles. Int J Sports Sci Coach 14(4):552–561. https://doi.org/10.1177/1747954119859683

Siemon D, Ahmad R, Huttner J-P, Robra-Bissantz S (2019) Predicting the performance of basketball players using automated personality mining. https://www.researchgate.net/publication/327344755

Michailidou K, Lindström S, Dennis J, Beesley J, Hui S, Kar S, Lemaçon A, Soucy P, Glubb D, Rostamianfar A, Bolla MK, Wang Q, Tyrer J, Dicks E, Lee A, Wang Z, Allen J, Keeman R, Eilber U, Easton DF (2017) Association analysis identifies 65 new breast cancer risk loci. Nature. https://doi.org/10.1038/nature24284

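Kiliç Depren S (2019) Farklı makine öğrenmesi algoritmalarının basketbol oyuncularının atış performansı üzerindeki etkinliği [The effectiveness of different machine learning algorithms on basketball players' shooting performance]. Spor ve Performans Araştırmaları Dergisi. https://doi.org/10.17155/omuspd.507797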

Oughali MS, Bahloul M, El Rahman SA (2019) Analysis of NBA players and shot prediction using random forest and XGBoost models. Int Conf Comput Inf Sci (ICCIS) 2019:1–5. https://doi.org/10.1109/ICCISci.2019.8716412

Cene E, Parim C, Ozkan B (2018) Comparing the performance of basketball players with decision trees and TOPSIS. Data Sci Appl 1(1):21–28

Soliman G, El-Nabawy A, Misbah A, Eldawlatly S (2017) Predicting all star player in the national basketball association using random forest. Intell Syst Conf (IntelliSys) 2017:706–713. https://doi.org/10.1109/IntelliSys.2017.8324371

Zimmermann A (2016) Basketball predictions in the NCAAB and NBA: Similarities and differences. Stat Anal Data Min 9(5):350–364. https://doi.org/10.1002/sam.11319

Evans BA, Roush J, Pitts JD, Hornby A (2018) Evidence of skill and strategy in daily fantasy basketball. J Gambl Stud 34(3):757–771. https://doi.org/10.1007/s10899-018-9766-y

South C, Elmore R, Clarage A, Sickorez R, Cao J (2019) A starting point for navigating the world of daily fantasy basketball. Am Stat 73(2):179–185. https://doi.org/10.1080/00031305.2017.1401559

Chen WJ, Jhou MJ, Lee TS, Lu CJ (2021) Hybrid basketball game outcome prediction model by integrating data mining methods for the national basketball association. Entropy. https://doi.org/10.3390/e23040477

Thabtah F, Zhang L, Abdelhamid N (2019) NBA game result prediction using feature analysis and machine learning. Annals Data Sci 6(1):103–116. https://doi.org/10.1007/s40745-018-00189-x

Huang M-L, Lin Y-J (2020) Regression tree model for predicting game scores for the golden state warriors in the national basketball association. Symmetry 12(5):835. https://doi.org/10.3390/sym12050835

Cheng G, Zhang Z, Kyebambe M, Kimbugwe N (2016) Predicting the outcome of NBA playoffs based on the maximum entropy principle. Entropy 18(12):450. https://doi.org/10.3390/e18120450

The National Basketball Association (2022) nba.com

Zhang S, Gomez MÁ, Yi Q, Dong R, Leicht A, Lorenzo A (2020) Modelling the relationship between match outcome and match performances during the 2019 FIBA basketball world cup: a quantile regression analysis. Int J Environ Res Public Health 17(16):5722. https://doi.org/10.3390/ijerph17165722

Dehesa R, Vaquera A, Gonçalves B, Mateus N, Gomez-Ruano MÁ, Sampaio J (2019) Key game indicators in NBA players’ performance profiles. Kinesiology 51(1):92–101. https://doi.org/10.26582/k.51.1.9

Khanmohammadi R, Saba-Sadiya S, Esfandiarpour S, Alhanai T, Ghassemi MM (2022) MambaNet: a hybrid neural network for predicting the NBA playoffs

Atkinson AC, Riani M, Corbellini A (2021) The box-cox transformation: review and extensions. Stat Sci. https://doi.org/10.1214/20-STS778

Bolón-Canedo V, Sánchez-Maroño N, Alonso-Betanzos A (2013) A review of feature selection methods on synthetic data. Knowl Inf Syst 34(3):483–519. https://doi.org/10.1007/s10115-012-0487-8

Katrutsa A, Strijov V (2017) Comprehensive study of feature selection methods to solve multicollinearity problem according to evaluation criteria. Expert Syst Appl 76:1–11. https://doi.org/10.1016/j.eswa.2017.01.048

Zien A, Krämer N, Sonnenburg S, Rätsch G (2009) The feature importance ranking measure. pp 694–709. https://doi.org/10.1007/978-3-642-04174-7_45

Imaam F, Subasinghe A, Kasthuriarachchi H, Fernando S, Haddela P, Pemadasa N (2021) Moderate automobile accident claim process automation using machine learning. Int Conf Comput Commun Inf (ICCCI) 2021:1–6. https://doi.org/10.1109/ICCCI50826.2021.9457017

Ali M (2020) PyCaret: an open source, low-code machine learning library in Python. https://www.pycaret.org

Wang Z, Sun D, Jiang S, Huang W (2022) AChEI-EL: prediction of acetylcholinesterase inhibitors based on ensemble learning model. In: 2022 7th international conference on big data analytics (ICBDA), pp 96–103. https://doi.org/10.1109/ICBDA55095.2022.9760329

Triguero I, García S, Herrera F (2015) Self-labeled techniques for semi-supervised learning: taxonomy, software and empirical study. Knowl Inf Syst 42(2):245–284. https://doi.org/10.1007/s10115-013-0706-y

Kumar A, Naughton J, Patel JM (2015) Learning generalized linear models over normalized data. In: Proceedings of the 2015 ACM SIGMOD international conference on management of data, pp 1969–1984. https://doi.org/10.1145/2723372.2723713

Ampomah EK, Qin Z, Nyame G (2020) Evaluation of tree-based ensemble machine learning models in predicting stock price direction of movement. Information 11(6):332. https://doi.org/10.3390/info11060332

Mondal AR, Bhuiyan MAE, Yang F (2020) Advancement of weather-related crash prediction model using nonparametric machine learning algorithms. SN Appl Sci 2(8):1372. https://doi.org/10.1007/s42452-020-03196-x

Lobo JL, Del Ser J, Bifet A, Kasabov N (2020) Spiking neural networks and online learning: an overview and perspectives. Neural Netw 121:88–100. https://doi.org/10.1016/j.neunet.2019.09.004

Metzler D, Bruce Croft W (2007) Linear feature-based models for information retrieval. Inf Retrieval 10(3):257–274. https://doi.org/10.1007/s10791-006-9019-z

James G, Witten D, Hastie T, Tibshirani R (2021) An Introduction to Statistical Learning. Springer, US

Ranstam J, Cook JA (2018) LASSO regression. Br J Surg 105(10):1348–1348. https://doi.org/10.1002/bjs.10895

Zhang Z, Lai Z, Xu Y, Shao L, Wu J, Xie G-S (2017) Discriminative elastic-net regularized linear regression. IEEE Trans Image Process 26(3):1466–1481. https://doi.org/10.1109/TIP.2017.2651396

Efendi A, Effrihan (2017) A simulation study on Bayesian Ridge regression models for several collinearity levels. p 020031. https://doi.org/10.1063/1.5016665

Efron B, Hastie T, Johnstone I, Tibshirani R (2004) Least angle regression. Annals Stat. https://doi.org/10.1214/009053604000000067

Sun Q, Zhou W-X, Fan J (2020) Adaptive huber regression. J Am Stat Assoc 115(529):254–265. https://doi.org/10.1080/01621459.2018.1543124

McDonald GC (2009) Ridge regression. Wiley Interdisciplinary Rev 1(1):93–100. https://doi.org/10.1002/wics.14

Chen H, Zhang H, Si S, Li Y, Boning D, Hsieh C-J (2019) Robustness verification of tree-based models. In: Wallach H, Larochelle H, Beygelzimer A, d'Alché-Buc F, Fox E, Garnett R (eds) Advances in neural information processing systems, vol 32. Curran Associates, Inc. https://proceedings.neurips.cc/paper/2019/file/cd9508fdaa5c1390e9cc329001cf1459-Paper.pdf

Sagi O, Rokach L (2018) Ensemble learning: a survey. WIREs Data Min Knowl Discov. https://doi.org/10.1002/widm.1249

Papadopoulos S, Azar E, Woon W-L, Kontokosta CE (2018) Evaluation of tree-based ensemble learning algorithms for building energy performance estimation. J Build Perform Simul 11(3):322–332. https://doi.org/10.1080/19401493.2017.1354919

Natekin A, Knoll A (2013) Gradient boosting machines, a tutorial. Front Neurorobot 7:21. https://doi.org/10.3389/fnbot.2013.00021

Liu J, Huang J, Zhou Y, Li X, Ji S, Xiong H, Dou D (2022) From distributed machine learning to federated learning: a survey. Knowl Inf Syst 64(4):885–917. https://doi.org/10.1007/s10115-022-01664-x

Liu Y, Wang Y, Zhang J (2012) New machine learning algorithm: random forest. pp 246–252. https://doi.org/10.1007/978-3-642-34062-8_32

Chakraborty D, Elhegazy H, Elzarka H, Gutierrez L (2020) A novel construction cost prediction model using hybrid natural and light gradient boosting. Adv Eng Inf. https://doi.org/10.1016/J.AEI.2020.101201

Alsariera YA, Adeyemo VE, Balogun AO, Alazzawi AK (2020) AI meta-learners and extra-trees algorithm for the detection of phishing websites. IEEE Access 8:142532–142542. https://doi.org/10.1109/ACCESS.2020.3013699

Rathore SS, Kumar S (2016) A decision tree regression based approach for the number of software faults prediction. ACM SIGSOFT Softw Eng Notes 41(1):1–6. https://doi.org/10.1145/2853073.2853083

Schapire RE (2013) Explaining AdaBoost. Empirical Inference. Springer, Berlin Heidelberg, pp 37–52

Son Y, Byun H, Lee J (2016) Nonparametric machine learning models for predicting the credit default swaps: an empirical study. Expert Syst Appl 58:210–220. https://doi.org/10.1016/j.eswa.2016.03.049

Altman NS (1992) An introduction to kernel and nearest-neighbor nonparametric regression. Am Stat 46(3):175–185. https://doi.org/10.1080/00031305.1992.10475879

Fontenla-Romero Ó, Guijarro-Berdiñas B, Martinez-Rego D, Pérez-Sánchez B, Peteiro-Barral D (2013) Online machine learning. In: Efficiency and scalability methods for computational intellect. IGI Global, pp 27–54. https://doi.org/10.4018/978-1-4666-3942-3.ch002

Wu Q, Zhou X, Yan Y, Wu H, Min H (2017) Online transfer learning by leveraging multiple source domains. Knowl Inf Syst 52(3):687–707. https://doi.org/10.1007/s10115-016-1021-1

Yin G, Alazzawi FJI, Mironov S, Reegu F, El-Shafay AS, Rahman ML, Nguyen HC (2022) Machine learning method for simulation of adsorption separation: comparisons of model’s performance in predicting equilibrium concentrations. Arabian J Chem 15(3):103612

Georgievski B, Vrtagic S (2021) Machine learning and the NBA Game. J Phys Educ Sport 21(06):3339–3343

Richter C, O’Reilly M, Delahunt E (2021) Machine learning in sports science: challenges and opportunities. Sports Biomech. https://doi.org/10.1080/14763141.2021.1910334

de Myttenaere A, Golden B, Le Grand B, Rossi F (2016) Mean Absolute Percentage Error for regression models. Neurocomputing 192:38–48. https://doi.org/10.1016/j.neucom.2015.12.114

Schubert A-L, Hagemann D, Voss A, Bergmann K (2017) Evaluating the model fit of diffusion models with the root mean square error of approximation. J Math Psychol 77:29–45. https://doi.org/10.1016/j.jmp.2016.08.004

Botchkarev A (2019) A new typology design of performance metrics to measure errors in machine learning regression algorithms. Interdiscip J Inf, Knowl Manag 14:045–076. https://doi.org/10.28945/4184

Teramoto M, Cross CL, Rieger RH, Maak TG, Willick SE (2018) Predictive validity of national basketball association draft combine on future performance. J Strength Cond Res 32(2):396–408. https://doi.org/10.1519/JSC.0000000000001798

Wu TT, Lange K (2008) Coordinate descent algorithms for lasso penalized regression. Annals Appl Stat. https://doi.org/10.1214/07-AOAS147

Mamdouh Farghaly H, Shams MY, Abd El-Hafeez T (2023) Hepatitis C Virus prediction based on machine learning framework: a real-world case study in Egypt. Knowl Inf Syst 65(6):2595–2617. https://doi.org/10.1007/s10115-023-01851-4

Saqib M (2021) Forecasting COVID-19 outbreak progression using hybrid polynomial-Bayesian ridge regression model. Appl Intell 51(5):2703–2713. https://doi.org/10.1007/s10489-020-01942-7

McCann L, Welsch RE (2007) Robust variable selection using least angle regression and elemental set sampling. Comput Stat Data Anal 52(1):249–257. https://doi.org/10.1016/j.csda.2007.01.012

Mangasarian OL, Musicant DR (2000) Robust linear and support vector regression. IEEE Trans Pattern Anal Mach Intell 22(9):950–955. https://doi.org/10.1109/34.877518

Marquardt DW, Snee RD (1975) Ridge regression in practice. Am Stat 29(1):3–20. https://doi.org/10.1080/00031305.1975.10479105

Dormann CF, Elith J, Bacher S, Buchmann C, Carl G, Carré G, Marquéz JRG, Gruber B, Lafourcade B, Leitão PJ, Münkemüller T, McClean C, Osborne PE, Reineking B, Schröder B, Skidmore AK, Zurell D, Lautenbach S (2013) Collinearity: a review of methods to deal with it and a simulation study evaluating their performance. Ecography 36(1):27–46. https://doi.org/10.1111/j.1600-0587.2012.07348.x

Natekin A, Knoll A (2013) Gradient boosting machines, a tutorial. Front Neurorobotics. https://doi.org/10.3389/fnbot.2013.00021