Abstract

The identification of prognostic and predictive biomarker signatures is crucial for drug development and providing personalized treatment to cancer patients. However, the discovery process often involves high-dimensional candidate biomarkers, leading to inflated family-wise error rates (FWERs) due to multiple hypothesis testing. This is an understudied area, particularly under the survival framework. To address this issue, we propose a novel three-stage approach for identifying significant biomarker signatures, including prognostic biomarkers (main effects) and predictive biomarkers (biomarker-by-treatment interactions), using Cox proportional hazard regression with high-dimensional covariates. To control the FWER, we adopt an adaptive group LASSO for variable screening and selection. We then derive adjusted p-values through multi-splitting and bootstrapping to overcome invalid p values caused by the penalized approach’s restrictions. Our extensive simulations provide empirical evaluation of the FWER and model selection accuracy, demonstrating that our proposed three-stage approach outperforms existing alternatives. Furthermore, we provide detailed proofs and software implementation in R to support our theoretical contributions. Finally, we apply our method to real data from cancer genetic studies.

1 Introduction

Personalized medicine has gained escalating importance in contemporary clinical practice, as the potential for tailored treatments designed for individual patients holds promise for augmenting the effectiveness of interventions (Hamburg and Collins 2010; Chin 2011). However, in the case of diseases such as cancer, significant heterogeneity exists among patients, impacting disease progression and responses to specific treatments. Consequently, identifying cancer subgroups and disparities in diagnoses can be immensely valuable in tailoring optimized therapies for each patient, ultimately improving healthcare outcomes, including survival rates. To achieve this goal, the discovery of both prognostic markers (primary biomarkers) and predictive biomarkers (biomarker-treatment interactions) is crucial in cancer drug development and clinical practice. These biomarkers have the potential to personalize therapies for individual patients and enhance treatment effectiveness.

Recent advances in biotechnology have led to the generation of vast amounts of complex biological and molecular data. Modern high-throughput technologies can simultaneously measure the expression levels of thousands of genes. Databases like the Gene Expression Omnibus (GEO) and ArrayExpress provide extensive resources for cancer genetic research (Barrett 2010). However, a common challenge in these datasets is that the number of genes (p) is often equal to or even greater than the number of samples (n). This situation becomes more complex when researchers aim to identify both prognostic biomarkers (genes) and predictive biomarkers (gene-treatment interactions), which further increases the dimensionality of the data. In such cases, it is crucial to assess the significance of each variable using p values and to correct for multiple testing, especially in clinical applications. Of note, controlling the false positive rate, specifically the family-wise error rate (FWER), is a paramount consideration. False positives can lead to erroneous conclusions, wasted resources, and the diversion of research efforts towards unproductive avenues. Hence, our commitment to controlling false positives is rooted in the need to uphold the integrity of scientific and medical research. The central focus of this paper is the identification of biomarker signatures, encompassing both prognostic and predictive biomarkers, through the assignment of valid p values within the survival framework, while effectively controlling the FWER.

Over the past two decades, a multitude of regularization techniques have emerged to facilitate feature selection and yield sparse parameter estimates when grappling with high-dimensional data. One prominent method is the least absolute shrinkage and selection operator (LASSO) (Tibshirani 1996), a preeminent method known for furnishing sparse estimates through an \(L_1\) penalty, encompassing optimal tuning parameters for linear models. This work has led to the development of various extensions (Fan and Li 2001; Zou 2006; Ghosh 2007; Yuan and Lin 2006; Wang and Leng 2008). To deal with ultra-high-dimensional data, Fan and Lv (2008a) introduced a correlation screening technique termed Sure Independence Screening (SIS). This method effectively reduces the dimensionality from an extremely large scale to a more manageable one, making it easier to use LASSO for variable selection. This combination is referred to as SIS-Lasso. These techniques, tailored for variable selection and screening, have been expanded to accommodate survival outcomes within the context of Cox proportional hazards (PH) models (Tibshirani 1997; Fan and Li 2002; Zhang and Lu 2007; Simon 2011; He 2019; Fan 2010; Zhao and Li 2012).

While regularization and screening techniques are effective in producing sparse and interpretable estimates, they face challenges in maintaining control over type I error rates, primarily because p values obtained from penalized likelihood are not valid. Recent advancements have addressed these challenges in high-dimensional linear models. For example, Wasserman and Roeder (2009) introduced a “screen and clean” procedure, which divides the data into a training set for variable screening (using LASSO) and a testing set for significance testing. Several other methods exhibit screening properties, such as the adaptive Lasso (Zou 2006) and the smoothly clipped absolute deviation (SCAD; Fan and Li 2001). Later, Meinshausen (2009) enhanced the approach by repeating the split-and-fit procedure, computing p values for each split, and aggregating them into a single p value for the purpose of controlling the FWER. Related works include Meinshausen and Yu (2009); Bühlmann (2013); Zhang and Zhang (2014); Dezeure (2015). More recently, Zuo et al. (2021) introduced a variable selection approach termed “penalized regression with second-generation p values” (ProSGPV). This method combines an \(L_1\) penalization scheme with second-generation p values (SGPV) to identify variables suitable for inclusion in the model. While these methods have proven effective in generalized linear models for high-dimensional data, their application within the survival framework is an emerging area that requires further development and exploration.

In this paper, we present a novel method for detecting biomarker signatures that considers both main effects and biomarker-by-treatment interaction effects within the survival framework while effectively managing the FWER. To achieve this, we extend the concept introduced by Meinshausen (2009) to the Cox survival model, employing a three-stage process that enables the identification of prognostic and predictive biomarkers while assigning valid p values. Our contributions include: (1) Application to high-dimensional datasets using a penalized technique for variable selection, facilitating the identification of biomarker signatures that encompass both prognostic and predictive biomarkers; (2) Addressing the challenge of multiple testing by obtaining p values from randomized multi-split data, ensuring robust control of the FWER; (3) Providing a user-friendly R implementation of our algorithm, available at https://github.com/aliviawu/Biomarker-Paper/tree/main. Additionally, we offer comprehensive theoretical properties in the Supplementary Materials. By integrating main effects and biomarker-by-treatment interactions within the survival framework and ensuring strict control over the FWER, our approach makes a valuable contribution to the field of biomarker identification and statistical inference in high-dimensional data scenarios.

The remainder of this paper is organized as follows. In Sect. 2, we provide a detailed description of our proposed three-stage approach within the Cox PH model framework. This approach considers main effects and biomarker-by-treatment interaction effects while effectively controlling the FWER. Section 3 presents the results of our simulation studies, including comparisons with existing methods. In Sect. 4, we apply our proposed method to multiple real-world datasets. Finally, in Sect. 5, we summarize our findings and discuss potential directions for future research.

2 Materials and methods

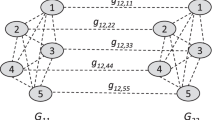

To achieve FWER control in biomarker selection, we propose a three-stage approach that builds on the penalized-likelihood approach using adaptive gLASSO (Wang and Leng 2008). Our approach extends the idea of p-value adjustment developed by Meinshausen (2009) to the Cox proportional hazards framework. Additionally, we provide a description of several existing alternatives for comparison purposes.

2.1 Notation

Consider a study with n subjects and p potential biomarkers. Let \(T_i\) denote the event time for the \(i^{th}\) subject and \(C_i\) denote the censoring time. The follow-up time is defined as \(Y_i = \min (T_i, C_i)\), and the event indicator is \(\delta _i = I(T_i \le C_i)\), where \(I(\cdot )\) is an indicator function. We focus on right-censored data. The p-dimensional candidate biomarkers are denoted by \({\textbf {X}}_i = (X_{i1}, X_{i2}, \ldots , X_{ip})^{T}\). The treatment status for the \(i^{th}\) patient is denoted by \(H_i\). We assume that \(T_i\) and \(C_i\) are conditionally independent given \({\textbf {X}}_i\). The hazard function of the \(i^{th}\) patient under the Cox PH model can be expressed as:

$$\begin{aligned} h(t\mid {\textbf {X}}_i, H_i) = h_0(t)\exp \left( \alpha _0 H_i + \sum _{j=1}^{p}\alpha _j X_{ij} + \sum _{j=1}^{p}\gamma _j X_{ij}H_i\right) , \end{aligned}\tag{1}$$

where \(h_0(t)\) is the baseline hazard function, with the total number of regression parameters being \(2p+1\). We denote the number of main biomarker effects (prognostic) and their interactions with treatment (predictive) by \(\widetilde{p}=2p\). Our objective is to identify prognostic biomarkers (\(\alpha _j \ne 0\), \(j=1,\ldots ,p\)) and predictive biomarkers (\(\gamma _j \ne 0\), \(j=1,\ldots ,p\)) in situations where \(n \ll \widetilde{p}\).

2.2 Adaptive gLASSO for variable selection

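Under the Cox PH framework, let D denote the indices of the subjects who experienced the event of interest, and for each \(r \in D\), the observed failure time is denoted by \(t_r\). The set \(R_r=\{i: Y_i \ge t_r\}\) includes the indices of the individuals who are at risk of experiencing the event at time \(t_r\). Let \(\varvec{{\theta }}=\{\alpha _0,\alpha _j,\gamma _j,j=1,\dots ,p\}\) be a vector of parameters with dimensionality \(\widetilde{p}+1\), and let the semi-parametric partial likelihood function for parameter estimation be denoted by

$$\begin{aligned} L(\varvec{\theta }) = \prod _{r\in D}\frac{\exp \left( \alpha _0 H_r + \sum _{j=1}^{p}\alpha _j X_{rj} + \sum _{j=1}^{p}\gamma _j X_{rj}H_r\right) }{\sum _{i\in R_r}\exp \left( \alpha _0 H_i + \sum _{j=1}^{p}\alpha _j X_{ij} + \sum _{j=1}^{p}\gamma _j X_{ij}H_i\right) }. \end{aligned}\tag{2}$$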

Let \(\ell (\varvec{\theta })\) denote the logarithm of the partial likelihood function \(\log L(\varvec{\theta })\). The estimates of the parameters \(\varvec{\theta }\) can be obtained by maximizing \(\ell (\varvec{\theta })\). However, when the number of parameters \(\widetilde{p}\) is larger than the sample size n (\(n\ll \widetilde{p}\)), the semi-parametric likelihood estimator in Eq. (2) may not be feasible due to the difficulty in finding the global maximum as the number of biomarkers increases.
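As an illustration of the quantities defined above, the following minimal Python sketch evaluates the log partial likelihood from follow-up times, event indicators, and linear predictors. It is not the authors' R implementation: the function names are hypothetical, the linear predictor is assumed to have the form \(\alpha _0 H_i + \sum _j \alpha _j X_{ij} + \sum _j \gamma _j X_{ij}H_i\), and tied event times are handled in the simplest (Breslow-type) way.

```python
import math

def linear_predictor(x, h, alpha0, alpha, gamma):
    """alpha0*H + sum_j alpha_j*X_j + sum_j gamma_j*X_j*H for one subject."""
    main = sum(a * xj for a, xj in zip(alpha, x))
    interaction = sum(g * xj * h for g, xj in zip(gamma, x))
    return alpha0 * h + main + interaction

def log_partial_likelihood(times, events, etas):
    """Cox log partial likelihood.

    times  : follow-up times Y_i
    events : event indicators delta_i (1 = event, 0 = censored)
    etas   : linear predictors, one per subject
    """
    total = 0.0
    for r, (t_r, d_r) in enumerate(zip(times, events)):
        if not d_r:
            continue  # censored subjects contribute only through risk sets
        # Risk set R_r = {i : Y_i >= t_r}
        risk_sum = sum(math.exp(etas[i]) for i, y in enumerate(times) if y >= t_r)
        total += etas[r] - math.log(risk_sum)
    return total
```

With all linear predictors equal to zero, each event contributes minus the log of its risk-set size, which gives a quick sanity check of the risk-set bookkeeping.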

To select prognostic and predictive biomarkers with the oracle property, a commonly used approach is to add a weighted adaptive gLASSO penalty to the log partial likelihood. The resulting adaptive gLASSO objective function is:

where \(|\cdot |_1\) denotes the \(L_1\) norm, \(\lambda _1\) is the shrinkage parameter, \(G_g\) is the index set belonging to the g-th pair of prognostic and predictive biomarkers (\(g=1,\dots ,p\)), and \(\varvec{\theta }_{G_g}=\{\alpha _g,\gamma _g\}\) is the g-th pair of estimated coefficients belonging to \(G_g\). Additionally, \(\hat{\omega}_g\) is an adaptive weight vector obtained by performing \(L_2\) regularization for each coefficient of \(\alpha\) and \(\gamma\), which is defined as follows:

where \(\hat{\alpha }_g^{ini}\) and \(\hat{\gamma }_g^{ini}\) are initial estimators obtained from a ridge regression (Hoerl and Kennard 1970), \(g=1,\dots ,p\).

Subsequently, the group-based estimates can be obtained by maximizing \(\ell ^{aGL}(\varvec{\theta })\). Biomarkers with non-zero coefficients (\(\alpha _g\ne 0\) or \(\gamma _g\ne 0\), where \(g=1,\dots ,p\)) will be selected. However, it should be noted that the FWER is not well controlled by this method.

2.3 The proposed three-stage approach

To control the FWER, we propose a three-stage strategy based on the penalized likelihood approach. This strategy extends the concept of p-value adjustment introduced by Meinshausen (2009) to the Cox proportional hazards model. The algorithm for our proposed three-stage approach is described in detail below.

2.3.1 Stage I: conduct feature screening and obtain (nonaggregated) p values

In the first stage, we perform feature screening to reduce the dimensionality from \(\widetilde{p}\) to a more manageable scale d with \(d<n\). We then use the remaining d/2 pairs of prognostic and predictive biomarkers for variable selection based on penalized techniques (i.e., adaptive gLASSO) as well as p-value adjustment. Both feature screening and p-value adjustment are performed through a bootstrapping procedure.

For \(b=1,\ldots ,B\):

- (i) Randomly split the data into two sets, a “training” set and a “testing” set, with allocation rate m (e.g., \(m=0.5\) indicates equal sample sizes). Of note, the choice of m depends on various factors, including the dataset sample size, the study’s objectives, and other relevant considerations.

- (ii) Perform feature screening via joint hypotheses using the training data. To identify the significant predictors among the main biomarkers and their interactions with treatment, the likelihood ratio test (LRT) can be utilized. For each \(j=1,\ldots ,p\), the log-likelihoods under the null hypothesis (\(H_0\)) and the alternative hypothesis (\(H_A\)) are compared:

  $$\begin{aligned} H_0: \alpha _j=0, \gamma _j=0, \quad H_A: \text {at least one of } \alpha _j \text { and } \gamma _j \text { is not equal to 0}. \end{aligned}\tag{5}$$

  The LRT statistic is computed from the maximized partial log-likelihoods of two models: the model under \(H_0\), which only includes the treatment variable (\(H\)), and the model under \(H_A\), which includes the treatment variable (\(H\)), a main biomarker (\(X_j\)), and its interaction with treatment (\(X_jH\)). With \(\ell _0\) and \(\ell _A\) denoting these two maximized partial log-likelihoods, the statistic is \(LRT=-2(\ell _0-\ell _A)\), which asymptotically follows a \(\chi ^2_2\) distribution under \(H_0\). To screen the predictors efficiently, existing screening procedures often require the specification of a threshold. Here, we adopt the conventional threshold of \([n/\log n]\) (Fan and Lv 2008b). Specifically, the screening process retains the top \([n/\log n]\) pairs based on the rank of the Chi-square statistics of the joint hypothesis testing for each biomarker and its interaction with treatment.

- (iii) Apply adaptive gLASSO on the training set to select pairs from the pre-selected pairs of main biomarkers and their interactions with treatment. The selected pairs are denoted by \(\widetilde{S}^{(b)}\).

- (iv) Obtain the p-values for each selected pair in \(\widetilde{S}^{(b)}\) by performing the LRT on the testing dataset. Using only the testing data, we perform an LRT for each selected pair in \(\widetilde{S}^{(b)}\) and calculate the corresponding chi-square based p-values, \(\widetilde{p}_j^{(b)}\), for \(j\in \widetilde{S}^{(b)}\). For unselected pairs, we set the corresponding p-values to 1.

- (v) Adjust \(\widetilde{p}_j^{(b)}\) using the Bonferroni correction to obtain the adjusted p-values \(p_j^{(b)}\). For \(j=1,2,\ldots , p\), we have

  $$\begin{aligned} p_j^{(b)}=\min \left\{ \frac{1}{2}\widetilde{p}_j^{(b)}|\widetilde{S}^{(b)}|,1\right\} , \end{aligned}\tag{6}$$

  where \(|\widetilde{S}^{(b)}|\) denotes the total number of selected pairs in \(\widetilde{S}^{(b)}\).
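The arithmetic in steps (ii), (iv), and (v) can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the authors' R code: the function names are hypothetical, \([n/\log n]\) is taken to mean the integer part, and the LRT statistic is computed as minus twice the difference of the two maximized partial log-likelihoods. The adjustment follows Eq. (6) as printed, including its factor of \(\frac{1}{2}\). The chi-square p-value uses the fact that a \(\chi ^2_2\) variable has survival function \(\exp (-x/2)\).

```python
import math

def screening_size(n):
    """Number of pairs retained by screening, assuming [n / log n] is the integer part."""
    return int(n / math.log(n))

def lrt_pvalue_2df(loglik_null, loglik_alt):
    """P-value of the LRT statistic -2*(l0 - lA) against a chi-square with 2 df.

    For 2 degrees of freedom the survival function is exactly exp(-x / 2).
    """
    lrt = max(-2.0 * (loglik_null - loglik_alt), 0.0)
    return math.exp(-lrt / 2.0)

def adjust_split_pvalues(raw_pvalues, selected, n_pairs):
    """Adjusted p-values for one split, following Eq. (6) as printed.

    raw_pvalues : dict mapping selected pair index -> raw LRT p-value
    selected    : indices of the pairs chosen by the selection step
    n_pairs     : total number of candidate pairs p
    Unselected pairs keep a p-value of 1.
    """
    size = len(selected)
    adjusted = [1.0] * n_pairs
    for j in selected:
        adjusted[j] = min(0.5 * raw_pvalues[j] * size, 1.0)
    return adjusted
```

For example, with \(n=300\) the screening step would keep 52 pairs under this reading of the threshold.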

2.3.2 Stage II: obtain aggregated p values

In the first stage, we obtain a total of B p-values for each pair of prognostic and predictive biomarkers. To aggregate these p-values, we use the quantile-based aggregation introduced by Meinshausen (2009).

Specifically, we define

$$\begin{aligned} Q_j(\eta )=\min \left\{ 1, q_{\eta }\left( \left\{ p_j^{(b)}/\eta ; b=1,\ldots ,B\right\} \right) \right\} , \end{aligned}\tag{7}$$

where \(q_{\eta }\) is the \(\eta ^{th}\) quantile for the set \(\left\{ p_j^{(b)}/\eta ; b=1,\ldots ,B\right\}\). The aggregated p-value is denoted as \(p_j^*\) and is defined as

$$\begin{aligned} p_j^*=\min \left\{ 1, (1-\log \eta _{\min })\inf _{\eta \in (\eta _{\min },1)}Q_j(\eta )\right\} , \end{aligned}\tag{8}$$

where \(\eta _{\min }\in (0,1)\); the recommended choice is 0.05, as suggested by Meinshausen (2009). Note that \(H_{0j}:\alpha _j=\gamma _j=0\) is rejected if \(p_j^*\le \alpha\), where \(\alpha\) is the pre-specified FWER level to be preserved (\(j=1,\ldots , p\)).
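The aggregation step can be illustrated with a short Python sketch in the spirit of the quantile aggregation of Meinshausen (2009). Several details are assumptions made for illustration rather than taken from the paper: the function names are hypothetical, the empirical quantile is taken as the \(\lceil \eta B\rceil\)-th order statistic, and the infimum over \(\eta\) is approximated on a finite grid between \(\eta _{\min }\) and 1.

```python
import math

def empirical_quantile(values, eta):
    """ceil(eta * B)-th order statistic of the values (one common convention)."""
    ordered = sorted(values)
    k = math.ceil(eta * len(ordered))
    k = min(max(k, 1), len(ordered))  # guard against rounding at the ends
    return ordered[k - 1]

def aggregate_pvalues(split_pvalues, eta_min=0.05, grid_size=100):
    """Aggregate the B per-split adjusted p-values of one pair into one p-value.

    Computes min{1, (1 - log eta_min) * min_eta Q(eta)}, where
    Q(eta) = min{1, eta-quantile of {p_b / eta}} and eta runs over a grid.
    """
    etas = [eta_min + (1.0 - eta_min) * i / (grid_size - 1) for i in range(grid_size)]
    q_values = []
    for eta in etas:
        scaled = [p / eta for p in split_pvalues]
        q_values.append(min(1.0, empirical_quantile(scaled, eta)))
    return min(1.0, (1.0 - math.log(eta_min)) * min(q_values))
```

A pair that is never selected has all per-split p-values equal to 1 and therefore an aggregated p-value of 1, while a pair that is consistently significant across splits keeps a small aggregated p-value, inflated by the factor \(1-\log \eta _{\min }\).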

2.3.3 Stage III: biomarker signature identification

In the last stage, we perform further regression analysis on the pairs of prognostic and predictive biomarkers selected in Stage II. We fit a Cox PH model using the entire dataset by maximizing the partial likelihood. We identify biomarkers with p-values less than the pre-specified significance level \(\alpha\). The significant biomarkers, along with their interactions with treatment, form the biomarker signature for predicting patient outcomes in response to treatment.

Identification of prognostic and predictive biomarkers related to disease progression and treatment response in a survival framework is crucial for personalized medicine. However, existing penalized approaches for high-dimensional data often suffer from a lack of control over the FWER. Our proposed three-stage strategy that combines penalized likelihood techniques with adjusted p values obtained through random data splitting provides a reliable and interpretable approach for identifying biomarker signatures with strong prognostic and predictive power, while effectively controlling FWER.

In Stage I, we use a bootstrapping procedure to reduce the dimensionality of the training dataset to a moderate scale and select active pairs of prognostic and predictive biomarkers via an adaptive group LASSO technique. We then accumulate corrected p-values \(p_j^{(b)}\) for each active pair via a likelihood ratio test based on the testing dataset. In Stage II, we summarize these non-aggregated p-values to \(p_j^*\) using an adaptive empirical quantile function and select the pairs based on \(p_j^*\). Finally, in Stage III, we identify the final biomarkers by fitting the selected pairs from Stage II with a Cox PH model based on the entire dataset.

2.4 Other existing methods

To empirically evaluate the performance of our proposed approach, we compare it with several existing methods. In the literature, there are various methods for variable selection from biomarker main effects and biomarker-by-treatment interactions. Ternès (2016) conducted a comprehensive summary of possible approaches for high-dimensional Cox PH regression. They compared these methods through simulations with different numbers of biomarkers and varying effects of main biomarkers and interactions with treatment, and evaluated their selection abilities in null (i.e., no interactions with treatment) and alternative scenarios (i.e., at least one interaction with treatment). In the null scenarios, group LASSO and gradient boosting methods performed poorly in the presence of non-null main effects but performed well in alternative scenarios with high interaction strengths. Adaptive LASSO with grouped weights was found to be too conservative. Principal component analysis (PCA) combined with LASSO performed moderately. Both LASSO and adaptive LASSO performed well, although LASSO was relatively poor in the presence of only non-null main effects. Here, we describe several competing methods that we consider for comparison.

2.4.1 LASSO

In the Cox PH framework, variable selection is typically performed by maximizing the log partial likelihood subject to a penalty on the parameters, as proposed by Tibshirani (1997). We use the LASSO penalty for both the main effects \(\alpha _j\) and their interaction effects with treatment \(\gamma _j\) (\(j=1,...,p\)) in Eq. (1) to perform variable selection, enabling us to identify both prognostic and predictive biomarkers. With the semi-parametric partial likelihood function defined in Eq. (2), let \(\varvec{\theta }=\{\alpha _0, \alpha _j, \gamma _j\}_{j=1}^{p}\), where \(\alpha _0\) denotes the treatment effect, \(\alpha _j\) denotes the \(j^{th}\) prognostic biomarker effect and \(\gamma _j\) denotes the \(j^{th}\) predictive biomarker effect. The partial log-likelihood with the LASSO penalty is

$$\begin{aligned} \ell ^{L}(\varvec{\theta })=\ell (\varvec{\theta })-\lambda \left( \sum _{j=1}^{p}|\alpha _j|+\sum _{j=1}^{p}|\gamma _j|\right) , \end{aligned}\tag{9}$$

where prognostic biomarkers and predictive biomarkers are equally penalized with the shrinkage parameter \(\lambda\). This tuning parameter \(\lambda\) is chosen by fivefold cross-validation. The LASSO-based coefficient estimators can then be obtained by maximizing \(\ell ^{L}(\varvec{\theta })\), and the predictive and prognostic biomarkers (\(\alpha _j\ne 0\), \(\gamma _j\ne 0\)) are selected.

2.4.2 Adaptive LASSO with grouped weights

Adaptive LASSO is a penalization method that assigns different penalty weights to the main effects and interaction effects, with larger coefficients penalized less than smaller ones to highlight their differences (Zou 2006; Zhang and Lu 2007). In the initial stage, this method estimates the weights by including the treatment and all biomarker main effects and interactions with the treatment, and applies a ridge penalty (Hoerl and Kennard 1970). Let \(\alpha _{j}\) and \(\gamma _{j}\) (\(j=1,...,p\)) be the main effects and interaction effects of the biomarkers, respectively. The penalty term with the shrinkage parameter \(\lambda _2\) to control the magnitude of \(\alpha _{j}\) and \(\gamma _{j}\) is

In the second stage, a common grouped weight is estimated for all \(\alpha _j\) and a single weight is assigned to all \(\gamma _j\) as the average of \(\alpha _{j}\) and \(\gamma _{j}\) from the preliminary stage, i.e., \(\alpha _R=\frac{1}{p}\sum ^{p}_{j=1}|\alpha _j|\), \(\gamma _R=\frac{1}{p}\sum ^p_{j=1}|\gamma _j|\). The penalized log-likelihood with \(\theta =\{\alpha _0, \alpha _j, \gamma _j\}_{j=1}^p\) is

2.4.3 Gradient boosting

Boosting algorithms are designed to enhance prediction accuracy by training a sequence of weak models, each correcting the errors of its predecessors. In high-dimensional settings, the process starts from the null model and updates a single coefficient at each step. This iterative process stops when the model achieves a balance between bias and variance. Gradient boosting reformulates this approach as a numerical optimization problem, where the objective is to minimize the model’s loss function by adding weak learners using gradient descent (Friedman 2001). Bühlmann and Yu (2003) proposed \(L_{2}\)-Boost with a novel component-wise smoothing spline learner, providing an effective procedure for carrying out boosting for high-dimensional regression problems with continuous predictors. In our study, we first estimate the treatment effect preliminarily and then fix it as an offset.

2.4.4 PCA+LASSO

In the first stage, we use PCA (Hastie 2017) to reduce the dimensionality of the main effect matrix. The second stage applies the LASSO penalty to the interactions, which allows for the identification of predictive biomarkers based on the first K principal components of the main effects. In the final stage, we fit a Cox PH model by maximizing the partial likelihood based on all biomarkers and selected biomarker-treatment interactions. We then select prognostic and predictive biomarkers with p-values less than \(\alpha\).

3 Simulation studies

3.1 Simulation setup

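We assume the following true hazard function for the \(i^{th}\) patient:

$$\begin{aligned} h(t\mid {\textbf {X}}_i, H_i)=h_0(t)\exp \left( \alpha _0 H_i+\alpha _4 X_{i4}+\gamma _4 X_{i4}H_i+\alpha _5 X_{i5}\right) . \end{aligned}$$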

Here, \(\alpha _0\) denotes the impact of treatment H, \(\alpha _4\) and \(\alpha _5\) signify the prognostic effects of biomarkers \(X_4\) and \(X_5\), respectively, and \(\gamma _4\) represents the predictive effect of biomarker \(X_4\). We investigate four scenarios, each characterized by distinct values of (\(\alpha _0\), \(\alpha _4, \gamma _4, \alpha _5\)): Scenario 1 (S1): (1, 1, 1, 1); Scenario 2 (S2): (1, 0.5, 1, 1); Scenario 3 (S3): (1, 0.5, 1.5, 1); Scenario 4 (S4): (1, 0.5, 2.5, 1.5). For each simulated participant, we generate a treatment indicator from a Binom(1, 0.5) distribution. The expression level of the j-th biomarker \(X_{ij}\) follows a standard normal distribution. The pairwise correlation between prognostic biomarkers is set to \(\rho =0.15\) in order to mimic our real data applications. The baseline hazard function \(h_0(t)\) is set to a constant, either \(\frac{1}{100}\) or \(\frac{1}{270}\), resulting in mean censoring rates of around \(40\%\) or \(60\%\), respectively. Censoring times \(C_i\) are generated from a uniform distribution Unif(0, 150). To generate survival times, we employ the method introduced by Bender (2005). This involves drawing \(U_i\) from a Unif(0, 1) distribution and subsequently applying the transformation:

$$\begin{aligned} t_i=-\frac{\log (U_i)}{h_0\exp \left( \alpha _0 H_i+\alpha _4 X_{i4}+\gamma _4 X_{i4}H_i+\alpha _5 X_{i5}\right) }. \end{aligned}$$

The observed time to an event is then computed as \(\min (t_i, C_i)\), along with the event status denoted as \(I(t_i<C_i)\). To provide a comprehensive evaluation of our results, we generate 1,000 Monte Carlo datasets for each scenario. Furthermore, we consider varying sample sizes of 300, 500, 800, or 1000, with the allocation rate \(m=0.5\) and the total number of candidate prognostic and predictive biomarkers \(\widetilde{p}\) set to 1000, 2000, or 4000.
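The data-generating mechanism described above can be sketched in Python as follows. This is a hedged illustration rather than the authors' simulation code: the function name and the returned record layout are hypothetical, and the equicorrelated biomarkers are produced with a shared-factor construction, which is one simple way (assumed here) to obtain a common pairwise correlation \(\rho\). Survival times use the inverse-transform approach for a constant baseline hazard.

```python
import math
import random

def simulate_dataset(n, p, alpha0, alpha4, gamma4, alpha5,
                     h0=1 / 100, rho=0.15, c_max=150.0, seed=0):
    """Simulate one dataset with treatment, p biomarkers, and censored survival times."""
    if p < 5:
        raise ValueError("need at least 5 biomarkers (X_4 and X_5 carry the signal)")
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        treat = 1 if rng.random() < 0.5 else 0            # Binom(1, 0.5)
        shared = rng.gauss(0.0, 1.0)                      # common factor (assumption)
        x = [math.sqrt(rho) * shared + math.sqrt(1.0 - rho) * rng.gauss(0.0, 1.0)
             for _ in range(p)]                           # N(0, 1) margins, corr = rho
        x4, x5 = x[3], x[4]                               # X_4 and X_5 (0-based lists)
        eta = alpha0 * treat + alpha4 * x4 + gamma4 * x4 * treat + alpha5 * x5
        u = 1.0 - rng.random()                            # uniform on (0, 1]
        t = -math.log(u) / (h0 * math.exp(eta))           # exponential event time
        c = rng.uniform(0.0, c_max)                       # Unif(0, 150) censoring
        data.append({"time": min(t, c), "event": int(t < c), "treat": treat, "x": x})
    return data
```

Calling `simulate_dataset(300, 500, 1, 1, 1, 1)` would correspond to Scenario 1 with 500 biomarkers, that is, \(\widetilde{p}=1000\) main and interaction effects.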

We evaluate four primary performance metrics: selection accuracy, mean squared error (MSE), relative bias of regression coefficient estimates, and control of FWER. Selection accuracy measures the percentage of times the true biomarker is selected out of 1,000 replicates. We estimate MSE as the mean of the squared difference between the true and estimated parameters across 1,000 replicates. Relative bias is evaluated as the mean difference divided by the true parameter value across 1,000 replicates. FWER control measures the proportion of times in 1,000 replicates that at least one biomarker, which is not one of the three candidate biomarkers, is selected while controlling the FWER at the nominal level of \(\alpha =0.05\). We investigate the impact of sample size, number of biomarkers, and censoring rates on these four metrics across various scenarios. Additionally, we compare the proposed method with five other methods, namely LASSO, gLASSO, adaptive LASSO, PCA+LASSO, and gradient boosting.
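For concreteness, the four metrics can be computed from the Monte Carlo output roughly as in the sketch below. The function names and input layout (one list of selected indices and one coefficient estimate per replicate) are hypothetical choices made for illustration.

```python
def empirical_fwer(selections, true_set):
    """Share of replicates in which at least one non-true biomarker is selected."""
    truth = set(true_set)
    false_hits = sum(1 for sel in selections if set(sel) - truth)
    return false_hits / len(selections)

def selection_accuracy(selections, marker):
    """Share of replicates in which a given true biomarker is selected."""
    return sum(1 for sel in selections if marker in sel) / len(selections)

def mse(estimates, truth):
    """Mean squared difference between estimates and the true parameter."""
    return sum((est - truth) ** 2 for est in estimates) / len(estimates)

def relative_bias(estimates, truth):
    """Mean difference between estimates and truth, divided by the truth."""
    return sum(est - truth for est in estimates) / len(estimates) / truth
```

For instance, if two of four replicates select an index outside the true set, `empirical_fwer` returns 0.5.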

3.2 Simulation results

3.2.1 FWER control

Our proposed method effectively controls the FWER at a nominal level of 0.05 for selecting prognostic and predictive biomarkers, as demonstrated in Fig. 1. We evaluated the actual FWER across 1,000 replicates for four different scenarios with varying sample sizes (n), total numbers of biomarkers (\(\widetilde{p}\)), and censoring rates, except for the case where \(\widetilde{p}=n=1000\) because it does not represent a high-dimensional scenario. Our method shows effective FWER control at approximately 0.05 for all four scenarios, particularly when the sample size is 1000. Although the FWERs were inflated for sample sizes of 500 or 800, we observed a return to 0.05 with a sample size of 1000. Furthermore, we conducted additional simulations using a different allocation rate, \(m=0.7\) (allocating 70% of the data for training and 30% for testing), and observed minimal differences in FWERs. For example, with sample sizes of \(n=300\) and 500 in S1 (\(\widetilde{p}=2000\)), the FWERs were 0.062 and 0.048, respectively, and similar trends emerged across the other sample sizes (not shown due to space limitations). Moreover, we considered a higher value of the correlation coefficient, \(\rho =0.3\), for S1. Across the combinations of sample size and number of biomarkers \((n, \widetilde{p})=(300,1000)\), (300, 2000), (500, 1000), (500, 2000), (1000, 2000), and (1000, 4000), we obtained satisfactory results, with FWERs of 0.055, 0.056, 0.051, 0.045, 0.059, and 0.048, respectively. In addition, we incorporated a non-randomized clinical trial with an 80% treatment proportion, mirroring our second data application. In Scenario 1 (\(\alpha _4=\alpha _5=\gamma _4=1\)), with sample sizes of \(n=300\) and 500 and \(\widetilde{p}=2000\), the obtained FWERs are 0.045 and 0.040, respectively.

3.2.2 Estimates of effects

The accurate estimation of prognostic and predictive biomarker effects is crucial for predicting hazard and survival rates. Boxplots of the estimates of \(\alpha _4\), \(\gamma _4\), and \(\alpha _5\) are presented in Fig. 2 for scenarios S1 and S4, both with a 60% censoring rate. Additional scenarios are presented in Figures S5 and S6 in the Supplementary Materials. The average coefficient estimates of \(\alpha _4\) and \(\alpha _5\) from 1,000 simulated datasets closely match the true effects of (1, 1) and (0.5, 1.5) for scenarios S1 and S4, respectively. As the sample size increases from 300 to 1000, the dispersion of the estimated \(\gamma _4\) gradually decreases, and the estimates tend to center around the true effects. Moreover, the coefficient estimates for the 40% censoring rate exhibit similar trends in terms of deviation from the true values, as shown in Figure S5 in the Supplementary Materials.

The MSEs and biases of the estimates are presented in Table 2 and Table S1 in the Supplementary Materials, respectively. The results indicate that, with a censoring rate of 40%, the MSEs and biases for all estimates are generally smaller compared to those with a 60% censoring rate. Moreover, increasing the sample size leads to a reduction in MSEs and relative bias, which is consistent with our expectations. Regarding the interaction effect of \(\gamma _4\), although the MSEs are relatively higher compared to the main effects (e.g., \(\alpha _4\) and \(\alpha _5\)), the bias decreases as the true interaction effect increases from 1 to 1.5. Additionally, the underlying true effect strength of the primary biomarkers influences the estimation of their interaction effect. For example, as the true effect value of \(\alpha _4\) decreases from 1 (S1) to 0.5 (S2), its bias decreases, while the bias of its interaction effect with \(\gamma _4\) increases.

3.2.3 Selection accuracy

The results of the selection accuracy analysis are summarized in Table 1. The findings indicate that selection accuracy improves with larger sample sizes, lower censoring rates, and greater biomarker effects. When the sample size is sufficiently large (i.e., >800), the true biomarker effects and censoring rate have minimal effects on selection accuracy. Both the biomarker and interaction term selections are influenced by the underlying true effect strength, and the accuracy of the interaction term selection tends to increase as the main effect decreases. When \(\alpha _4\) decreases from 1 (S1) to 0.5 (S2), the selection accuracy of \(X_4\) decreases, but the accuracy of \(X_4H\) increases, particularly with small sample sizes and high censoring rates. Furthermore, sample size is a critical factor in determining selection accuracy. As the sample size increases from 300 to 500, the selection percentages for all biomarkers are consistently around 100%.

In conclusion, higher selection percentages are observed when the sample size is large, the censoring rate is small, and the true biomarker effect is strong. The proposed method is not significantly affected by the number of candidate biomarkers, as the selection accuracy remains relatively stable as \(\widetilde{p}\) increases.

3.2.4 Method comparison

We compared our three-stage strategy with five other methods: LASSO, adaptive LASSO, gLASSO, PCA+LASSO, and gradient boosting. The comparison was based on 11 setups combining various sample sizes and biomarker numbers under high censoring rate, and additional scenarios are available in the Supplementary Materials. Since LASSO and adaptive LASSO had similar performance, we excluded the results of adaptive LASSO from the analysis. As shown in Fig. 3, our proposed three-stage method effectively controls the FWERs, whereas the other four methods fail to achieve this goal. For all alternative methods, the FWERs for all scenarios are close to 1. These results agree with our expectation. Notably, methods such as LASSO, boosting, PCA+LASSO, and adaptive LASSO do not take into account the correlation between the primary biomarker and its interaction with treatment or impose the hierarchy constraint, despite incorporating various regularization strategies for variable selection. Group LASSO does attempt to tackle these concerns through its regularization approach; however, the p values obtained for the selected variables do not effectively control the FWER. In contrast, our method is designed to provide valid asymptotic control over variable inclusion at the nominal level, which is made possible by integrating the multiple sample-splitting approach and incorporating features such as correlation and hierarchy (e.g., group lasso) into the regularization techniques after initial feature screening.

For scenarios S1 and S2 (with details available in the Supplementary Materials), we evaluated the selection accuracy of prognostic and predictive biomarkers using five methods under four scenarios, with sample sizes of 300, 500, 800, and 1000; 2000 biomarkers; and censoring rates of 40% and 60%. Overall, gLASSO and PCA+LASSO showed relatively high selection accuracy of the interaction term \(X_4H\), but performed poorly in selecting the main effects. On the other hand, LASSO and gradient boosting achieved selection accuracies close to 1 and were insensitive to the censoring rate, sample size, number of biomarkers, and scenario. However, our proposed method controls the selection accuracy with respect to increases in the sample size or underlying biomarker effects or a decrease in the censoring rate. Furthermore, scenarios S3 and S4 (details available in the Supplementary Materials) compared the coefficient estimates based on different methods. In comparison with the four existing methods, our model provided unbiased estimates of the effects with improved efficiency throughout. The simulations were carried out using R 4.1.2 on a high-performance computing cluster. For each dataset with 50 iterations of the bootstrapping procedure, the computation time for the proposed method is approximately 6 min with 1,000 biomarkers and a sample size of 300; this time may increase (up to 26 min) with larger numbers of biomarkers and larger sample sizes. The alternative methods need less time because they lack bootstrap procedures (e.g., 3 min for the case with 1,000 biomarkers and a sample size of 300).

4 Applications

In the first example, we present an application of our method using an existing breast cancer study that includes patients with estrogen receptor (ER)-negative tumors (Desmedt 2011; Hatzis 2011). The gene expression data associated with the study is publicly available from the Gene Expression Omnibus database (refer to GSE16446 and GSE25066). The study comprised 614 patients, with 507 receiving only anthracycline-based adjuvant chemotherapy (coded as 0) (Desmedt 2011), and 107 receiving anthracycline with taxane-based chemotherapy (coded as 1) (Hatzis 2011). The gene expression data has been pre-processed (e.g., normalization, filtering out low-expression genes), resulting in 1,689 gene variables for direct analysis. The primary outcome of interest is the distant recurrence-free survival, with a censoring rate of approximately 78% for both groups.

In the second application, we examine the effect of Tamoxifen treatment on patients with ER-positive breast tumors and evaluate gene expression biomarkers and their interactions with treatment (Loi 2007). The original dataset comprises 414 patients from the cohort GSE6532, collected by Loi (2007), to identify ER-positive subtypes with gene expression profiles. Our analysis focuses on the primary outcome of distant metastasis-free survival (DMFS). After excluding 34 patients, who lack any records of time-to-event data (no follow-up or dropout information) for survival outcomes, we are left with 255 patients who received Tamoxifen treatment and 125 patients who did not. The censoring rates for the two groups are 73.3% and 77.6%, respectively, and there are 44,916 gene expression measurements for each patient.

We applied our approach to identify prognostic and predictive gene biomarkers in the two applications and compared it to existing methods. To implement our proposed method, we opted for 50 iterations in the bootstrapping procedure, and we utilized a 70% allocation rate to partition the data into training and testing datasets. The nominal level was set at 0.05, and for each existing method, a feature screening was constructed based on the training set. After obtaining a total of 50 p values for each pair of prognostic and predictive biomarkers, we calculated the aggregated p values for each pair via the quantile function introduced by Meinshausen (2009) with \(\eta_{\text{min}} = 0.05\).
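The quantile aggregation step can be sketched as follows. The rule implemented here is the one we recall from Meinshausen et al. (2009): for each \(\gamma\) in \((\eta_{\text{min}}, 1)\), take the empirical \(\gamma\)-quantile of the per-split p values divided by \(\gamma\), capped at 1, then multiply the smallest such value by \(1 - \log \eta_{\text{min}}\). The function names, the order-statistic quantile convention, and the grid search that approximates the infimum are assumptions of this sketch, not details of the authors' R code.

```python
import math


def empirical_quantile(values, gamma):
    """Inverse-ECDF quantile: the ceil(gamma * B)-th order statistic."""
    ordered = sorted(values)
    k = math.ceil(gamma * len(ordered))
    k = min(len(ordered), max(1, k))  # guard against rounding at the edges
    return ordered[k - 1]


def aggregate_p_values(p_values, eta_min=0.05, grid_size=200):
    """Aggregate per-split p values for one biomarker pair into one p value."""
    if not p_values:
        raise ValueError("need at least one p value")
    if not 0.0 < eta_min < 1.0:
        raise ValueError("eta_min must lie in (0, 1)")
    best = 1.0
    for i in range(grid_size):
        # Grid over [eta_min, 1] approximating the infimum over gamma.
        gamma = min(1.0, eta_min + (1.0 - eta_min) * i / (grid_size - 1))
        q_gamma = min(1.0, empirical_quantile(p_values, gamma) / gamma)
        best = min(best, q_gamma)
    return min(1.0, (1.0 - math.log(eta_min)) * best)


# Hypothetical per-split p values from 50 random splits (illustrative only).
p_all_small = aggregate_p_values([0.001] * 50)
p_half_small = aggregate_p_values([0.0001] * 25 + [1.0] * 25)
p_all_null = aggregate_p_values([1.0] * 50)
print(p_all_small, p_half_small, p_all_null)
```

The factor \(1 - \log \eta_{\text{min}}\) is the price paid for searching over \(\gamma\) instead of fixing a single quantile in advance; with \(\eta_{\text{min}} = 0.05\) it is roughly 4.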

As shown in Table 3, our proposed method did not identify any prognostic or predictive biomarkers for the first dataset. However, for the second dataset, the interaction of the gene HYPK (’218680_x_at’) with the treatment indicator was selected with significance, indicating that HYPK is a predictive biomarker for Tamoxifen treatment regarding the outcome of DMFS. This finding is consistent with the literature (Hans-Dieter and RoyerMatthias 2017), where HYPK is suggested as a novel predictive biomarker for breast cancer. LASSO selected the largest number of biomarkers, followed by boosting, adaptive LASSO, and gLASSO, while PCA+LASSO led to the fewest selections. This performance is similar to what we observed in our simulation studies. Additionally, the HYPK gene selected by our proposed method was also identified by the gradient boosting methods. We further listed the gene symbols of selected prognostic and predictive biomarkers based on the methods in Tables S.4-S.5 of the Supplementary Materials.

Per reviewers’ suggestion, we present another data application to further explore our method, as provided in the Supplementary Material. In particular, we analyzed a microarray dataset (refer to GSE22762) (Herold 2011) comprising 151 chronic lymphocytic leukemia (CLL) patients. The primary objective was to identify prognostic and predictive biomarkers associated with overall survival (OS) and salvage chemotherapy. Our proposed method successfully identified 2 predictive biomarkers and 3 prognostic biomarkers. For additional details, please refer to Section D of the Supplementary Material.

5 Discussion

In this paper, we presented a three-stage strategy for identifying prognostic and predictive biomarker signatures, which can be extended to higher-order terms, such as pairwise interactions among the biomarkers, depending on clinical interest or practical necessity. Our work builds upon the concept of multi-splitting for p-value adjustment to identify prognostic and predictive biomarkers under the survival framework, with a focus on Cox PH regressions. Specifically, we extend the approach proposed by Meinshausen (2009) by generating pairwise p values through joint hypothesis testing. However, we note that if we were to generate p values via individual hypothesis testing, as in the approach proposed by Meinshausen (2009), the resulting family-wise error rates (FWERs) would be overly conservative when identifying prognostic and predictive biomarkers in our case.
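To make the distinction between pairwise (joint) and individual testing concrete, the sketch below computes one p value for a biomarker pair, that is, a main effect and its treatment interaction, next to the two single-coefficient p values. It assumes a Wald-type test with two degrees of freedom, for which the chi-square survival function is exactly \(\exp(-W/2)\); the paper's exact test statistic may differ, and all names and numbers are hypothetical.

```python
import math


def joint_wald_p(b_main, b_inter, var_main, var_inter, cov):
    """P value for H0: main effect = interaction = 0 (Wald test, 2 df)."""
    det = var_main * var_inter - cov ** 2
    if det <= 0:
        raise ValueError("covariance matrix must be positive definite")
    # W = b' V^{-1} b, written out for a 2 x 2 covariance matrix V.
    w = (b_main ** 2 * var_inter
         - 2.0 * b_main * b_inter * cov
         + b_inter ** 2 * var_main) / det
    return math.exp(-w / 2.0)  # chi-square(2) survival function


def single_wald_p(b, var):
    """Two-sided normal p value for a single coefficient."""
    z = abs(b) / math.sqrt(var)
    return math.erfc(z / math.sqrt(2.0))


# Hypothetical estimates for one pair (illustrative only).
p_joint = joint_wald_p(0.5, 0.4, 0.04, 0.04, 0.0)
p_main = single_wald_p(0.5, 0.04)
p_inter = single_wald_p(0.4, 0.04)
print(p_joint, p_main, p_inter)
```

With joint testing, each pair contributes a single p value, so the multiplicity correction runs over pairs instead of over individual coefficients.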

We conducted extensive simulation studies, which demonstrated that our proposed approach can control the FWER well around the nominal level, whereas existing methods such as LASSO, gLASSO, PCA+LASSO, and gradient boosting fail to control FWER. For example, LASSO produces FWERs close to 1 for all scenarios, while boosting, gLASSO, and PCA+LASSO have unstable FWERs across different scenarios. Controlling the FWER in cancer studies can improve the sensitivity of biomarker selection and testing during screening. Additionally, compared with existing methods, our proposed method provides accurate estimates of the effects of selected biomarkers with centers closer to true effects and lower dispersion across a variety of scenarios. The mean square errors and relative bias of the estimates produced by our proposed method are consistently lower across a variety of scenarios. However, gLASSO and PCA+LASSO have the largest variability of estimates, and the boosting method underestimates the effects in most scenarios. Furthermore, our method can control the selection accuracy of prognostic and predictive biomarkers close to 100% with an increase in sample size or underlying biomarker effects or a decrease in the censoring rate. In contrast, existing methods (e.g., gLASSO and PCA+LASSO) have relatively lower and inconsistent selection accuracy of the main effects, regardless of censoring rates.
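The two summary measures mentioned above can be computed as in the following sketch. The definitions used here (relative bias as the mean estimate minus the truth, divided by the truth; mean square error as the average squared deviation from the truth) are common conventions and are assumed for illustration; the paper's exact definitions are given elsewhere and may differ.

```python
def relative_bias(estimates, truth):
    """(mean estimate - truth) / truth; undefined when truth is zero."""
    if truth == 0:
        raise ValueError("relative bias is undefined for a zero true effect")
    mean_estimate = sum(estimates) / len(estimates)
    return (mean_estimate - truth) / truth


def mean_squared_error(estimates, truth):
    """Average squared deviation of the estimates from the truth."""
    return sum((e - truth) ** 2 for e in estimates) / len(estimates)


# Hypothetical coefficient estimates from four replicates (illustrative only).
estimates = [0.9, 1.1, 1.0, 1.2]
rb = relative_bias(estimates, truth=1.0)
mse = mean_squared_error(estimates, truth=1.0)
print(rb, mse)
```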

Furthermore, we applied our proposed method and other existing methods to analyze two breast cancer datasets and a chronic lymphocytic leukemia dataset, as detailed in the Supplementary Material. While there is no literature revealing true prognostic and predictive biomarkers based on these three gene expression datasets, our proposed method yielded intriguing findings. In the second data example, our proposed method identified the gene HYPK as a predictive biomarker for Tamoxifen treatment regarding the outcome of DMFS. This aligns with a finding from Hans-Dieter and RoyerMatthias (2017), suggesting HYPK as a novel predictive biomarker for breast cancer. Notably, the gradient boosting method also identified the HYPK gene. For the CLL data application shown in the Supplementary Material, our proposed method successfully identified 2 predictive biomarkers and 3 prognostic biomarkers associated with OS and chemotherapy. Among the selected biomarkers, TCF7 stood out as both a prognostic and predictive biomarker, indicating a significant impact on OS in CLL and providing insights into the effect of chemotherapy on CLL patients. This finding aligns with the observations in the paper by Herold (2011).

In summary, our proposed approach provides a robust tool for identifying prognostic and predictive biomarkers in cancer studies, demonstrating superior performance in simulation studies compared to existing methods, particularly in terms of controlling the FWER. Note that FWER control represents a conservative approach, emphasizing the importance of avoiding any false discoveries within a family of tests, making it more restrictive compared to less stringent methods. Of note, in real-world applications, various factors, such as non-randomized clinical trials, sample size, the distribution of biomarker values, the magnitude of biomarker effects and varied pairwise correlations among biomarkers, may influence the selection results. In our data applications, we present several examples for illustration, with promising findings and with the genes selected by our method verified by the existing literature.

Regarding the choice of quantiles for aggregating p values, we have adopted a strategy akin to Meinshausen (2009), considering a lower bound value of \(\eta_{\text{min}} =0.05\) that has been both suggested and exclusively explored in prior studies (Meinshausen 2009; Renaux 2020; Shi et al. 2023; Buzdugan 2016). While there are alternative methods for p-value aggregation (Mitchell 2015), our context is unique because the p values for each variable are generated through repeated random data splits, resulting in empirical distributions. Using quantiles to combine and aggregate p values provides a flexible means of error rate control, with the advantage of subjective quantile selection. The challenge of selecting the quantile parameter, denoted as \(\eta_{\text{min}}\) \((0<\eta_{\text{min}} <1)\), has been acknowledged by Meinshausen (2009), and there is no universally accepted value for \(\eta_{\text{min}}\) that guarantees error control. The outcomes from the chosen value have proven satisfactory, although further exploration can be pursued in this regard.

In terms of other future work, it may be worthwhile to investigate the issue of multicollinearity between gene expression levels, particularly when the correlation among biomarkers is relatively high. Additionally, the accelerated failure time model can be easily adapted into our three-stage framework to derive the p values and identify biomarker signatures when Cox PH models are not appropriate due to violations of the PH assumption. Overall, our proposed method has wide-ranging potential for application in cancer genetics studies and can be readily extended to other areas as necessary.

A matrix of panels for family-wise error rates calculated via our proposed model based on simulation studies; rows represent high or low censoring rates; columns represent four simulation scenarios; x-axis is the sample size and y-axis is FWERs; three types of lines represent different total number of biomarkers \(\widetilde{p}\)

A matrix of panels for coefficient estimates for the high censoring rate; rows represent three biomarker effects; columns represent four simulation scenarios; x-axis represents 11 setups of sample sizes and the numbers of biomarkers; y-axis represents the estimated coefficients; red dotted lines represent the strength of biomarker effects

A matrix of panels for FWERs comparisons for different methods based on simulation studies; rows represent high or low censoring rates; columns represent four simulation scenarios; x-axis represents different sample sizes and the numbers of biomarkers, y-axis represents FWERs; five colored lines represent different methods

Data availability

The data analysed during the current study are publicly available from the Gene Expression Omnibus database. Please see below: GSE16446: https://www.ncbi.nlm.nih.gov/geo/query/acc.cgi?acc=GSE16446; GSE25066: https://www.ncbi.nlm.nih.gov/geo/query/acc.cgi?acc=GSE25066; GSE6532: https://www.ncbi.nlm.nih.gov/geo/query/acc.cgi?acc=gse6532; GSE22762: https://www.ncbi.nlm.nih.gov/geo/query/acc.cgi?acc=GSE22762.

References

Barrett T et al (2010) NCBI GEO: archive for functional genomics data sets-10 years on. Nucleic Acids Res 39(suppl–1):D1005–D1010

Bender R et al (2005) Generating survival times to simulate Cox proportional hazards models. Stat Med 24(11):1713–1723

Bühlmann P (2013) Statistical significance in high-dimensional linear models. Bernoulli 19(4):1212–1242

Buzdugan L et al (2016) Assessing statistical significance in multivariable genome wide association analysis. Bioinformatics 32(13):1990–2000

Bühlmann P, Yu B (2003) Boosting with the L2 loss. J Am Stat Assoc 98(462):324–339

Chin L et al (2011) Cancer genomics: from discovery science to personalized medicine. Nat Med 17(3):297–303

Desmedt C et al (2011) Multifactorial approach to predicting resistance to anthracyclines. J Clin Oncol 29(12):1578–1586

Dezeure R et al (2015) High-dimensional inference: confidence intervals, \(p\)-values and R-software hdi. Stat Sci 30(4):533–558

Fan J, Li R (2001) Variable selection via nonconcave penalized likelihood and its oracle properties. J Am Stat Assoc 96(456):1348–1360

Fan J, Li R (2002) Variable selection for Cox's proportional hazards model and frailty model. Ann Stat 30(1):74–99

Fan J, Lv J (2008) Sure independence screening for ultrahigh dimensional feature space. J R Stat Soc: Ser B (Stat Methodol) 70(5):849–911

Fan J et al (2010) High-dimensional variable selection for Cox’s proportional hazards model. Theory powering applications - a festschrift for Lawrence D. Brown, Institute of Mathematical Statistics Collections Borrowing Strength, pp 70–86

Friedman JH (2001) Greedy function approximation: a gradient boosting machine. Ann Stat 29(5):1189

Ghosh S (2007) Adaptive elastic net: an improvement of elastic net to achieve oracle properties. Preprint, p 1

Hamburg MA, Collins FS (2010) The path to personalized medicine. N Engl J Med 2010(363):301–304

Hans-Dieter, RoyerMatthias, KHR-P (2017) Novel prognostic and predictive biomarkers (tumor markers) for human breast cancer. EP2669682B1

Hastie T et al (2017) The elements of statistical learning: data mining, inference, and prediction. Springer

Hatzis C et al (2011) A genomic predictor of response and survival following taxane-anthracycline chemotherapy for invasive breast cancer. JAMA 305(18):1873–1881

He K et al (2019) An improved variable selection procedure for adaptive lasso in high-dimensional survival analysis. Lifetime Data Anal 25(3):569–585

Herold T et al (2011) An eight-gene expression signature for the prediction of survival and time to treatment in chronic lymphocytic leukemia. Leukemia 25(10):1639–1645

Hoerl AE, Kennard RW (1970) Ridge regression: biased estimation for nonorthogonal problems. Technometrics 12(1):55–67

Loi S et al (2007) Definition of clinically distinct molecular subtypes in estrogen receptor-positive breast carcinomas through genomic grade. J Clin Oncol 25(10):1239–1246

Meinshausen N, Yu B (2009) Lasso-type recovery of sparse representations for high-dimensional data. Ann Stat 37(1):246–270

Meinshausen N et al (2009) p values for high-dimensional regression. J Am Stat Assoc 104(488):1671–1681

Mitchell MW (2015) A comparison of aggregate p value methods and multivariate statistics for self-contained tests of metabolic pathway analysis. PLoS One 10(4):e0125081

Renaux C et al (2020) Hierarchical inference for genome-wide association studies: a view on methodology with software. Comput Stat 35(1):1–40

Shi H et al (2023) Tests for ultrahigh-dimensional partially linear regression models

Simon N et al (2011) Regularization paths for Cox’s proportional hazards model via coordinate descent. J Stat Softw 39(5):1–13

Ternès N et al (2016) Identification of biomarker-by-treatment interactions in randomized clinical trials with survival outcomes and high-dimensional spaces. Biom J 59(4):685–701

Tibshirani R (1996) Regression shrinkage and selection via the lasso. J R Stat Soc: Ser B (Methodol) 58(1):267–288

Tibshirani R (1997) The lasso method for variable selection in the Cox model. Stat Med 16(4):385–395

Wang H, Leng C (2008) A note on adaptive group lasso. Comput Stat Data Anal 52(12):5277–5286

Wasserman L, Roeder K (2009) High dimensional variable selection. Ann Stat 37(5A):2178

Yuan M, Lin Y (2006) Model selection and estimation in regression with grouped variables. J R Stat Soc: Ser B (Stat Methodol) 68(1):49–67

Zhang C-H, Zhang SS (2014) Confidence intervals for low dimensional parameters in high dimensional linear models. J R Stat Soc Ser B (Stat Methodol) 76(1):217–242

Zhang HH, Lu W (2007) Adaptive lasso for Cox’s proportional hazards model. Biometrika 94(3):691–703

Zhao SD, Li Y (2012) Principled sure independence screening for Cox models with ultra-high-dimensional covariates. J Multivar Anal 105(1):397–411

Zou H (2006) The adaptive lasso and its oracle properties. J Am Stat Assoc 101(476):1418–1429

Zuo Y et al (2021) Variable selection with second-generation p values. The American Statistician, pp 1–11

Acknowledgements

The authors gratefully acknowledge Dr. Hao Feng and Ms. Wen Tang for their assistance with the data processing of the microarray dataset (GSE22762) (Herold et al., 2011) during the paper revision. Additionally, they appreciate the valuable feedback provided by the reviewers, which has contributed to enhancing the quality of the paper.

Funding

Wang’s work received partial support from the start-up funding provided by the Department of Population and Quantitative Health Sciences at Case Western Reserve University. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

Ethics declarations

Conflict of interest

The authors declare no conflict of interest.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wu, X., Chen, C., Li, Z. et al. A three-stage approach to identify biomarker signatures for cancer genetic data with survival endpoints. Stat Methods Appl (2024). https://doi.org/10.1007/s10260-024-00748-y

DOI: https://doi.org/10.1007/s10260-024-00748-y