Abstract

This study examines the varying coefficient model in tail index regression. The varying coefficient model is an efficient semiparametric model that avoids the curse of dimensionality when including large covariates in the model. In fact, the varying coefficient model is useful in mean, quantile, and other regressions. The tail index regression is not an exception. However, the varying coefficient model is flexible, but leaner and simpler models are preferred for applications. Therefore, it is important to evaluate whether the estimated coefficient function varies significantly with covariates. If the effect of the non-linearity of the model is weak, the varying coefficient structure is reduced to a simpler model, such as a constant or zero. Accordingly, the hypothesis test for model assessment in the varying coefficient model has been discussed in mean and quantile regression. However, there are no results in tail index regression. In this study, we investigate the asymptotic properties of an estimator and provide a hypothesis testing method for varying coefficient models for tail index regression.

Similar content being viewed by others

1 Introduction

In various fields, predicting the high- or low-tail behavior of data distribution is of interest. Examples include events such as heavy rains, large earthquakes, and significant fluctuations in stock prices. Extreme value theory is a standard approach for analyzing the data of such extremal events. Let \(Y_1, Y_2, \ldots , Y_n\) be independent and identically distributed random variables with distribution function F. In extreme value theory, the following maximum domain of attraction assumption is standard: Assume that there exist sequences of constants \(a_n>0\) and \(b_n\in \mathbb {R}\) and a non-degenerate distribution function G such that

for each continuity point y in G. This assumption implies that there exist a constant \(\gamma \in \mathbb {R}\) and a positive function \(\sigma (t)\) such that

for all y for which \(1+\gamma y>0\), where \(y^*=\sup \{y: F(y)<1\}\in (-\infty , \infty ]\) and the right-hand side for \(\gamma =0\) is interpreted as \(e^{-y}\) (see, Theorem 1.1.6 of de Haan and Ferreira (2006)). The class of distributions on the right-hand side is called the generalized Pareto distribution and the parameter \(\gamma \) is called the extreme value index. Therefore, in extreme value theory, the tail behavior of the data distribution is characterized by the extreme value index \(\gamma \). Its existing estimators include the Hill estimator (Hill 1975), Pickands estimator (Pickands 1975), kernel estimator (Csorgo et al. 1985), maximum likelihood estimator (Smith 1987), and moment estimator (Dekkers et al. 1989), etc. It is noteworthy that the generalized Pareto distribution has different features depending on the sign of \(\gamma \). If \(\gamma >0\), we have

for all \(y>0\), where \(\mathcal {L}(y)\) is a slowly varying function at infinity; i.e., \(\mathcal {L}(ys)/\mathcal {L}(y)\rightarrow 1\) as \(y\rightarrow \infty \) for all \(s>0\). The class of these distributions is called the Pareto-type distribution. This case seems to be common in areas such as finance and insurance, and we frequently observe extremely large values in the data compared to the case of \(\gamma \le 0\). Therefore, many researchers in extreme value theory have focused on this case. The Hill estimator mentioned above is one of the estimators of the positive extreme value index \(\gamma \) and is widely used in many extreme value studies. In this study, we assume that the extreme value index \(\gamma \) is positive.

In recent years, various regression models of the conditional extreme value index have been studied in the so-called tail index regression, where the tail index refers to the inverse of the extreme value index. The nonparametric tail index regression estimators include Gardes and Girard (2010), Stupfler (2013), Daouia et al. (2013), Gardes and Stupfler (2014), Goegebeur et al. (2014, 2015) and Ma et al. (2020). For fully nonparametric methods, the curse of dimensionality arises when multiple covariates are used. However, in many applications, extremal events are often triggered by multiple factors, and we hope to consider these factors. To avoid the curse of dimensionality, Wang and Tsai (2009) studied the parametric tail index regression assuming the linear model. However, in some applications of extreme value theory, the linear model may be too simple to predict the tail behavior of the distribution of the response. As an extension of Wang and Tsai (2009), Youngman (2019) studied additive models, Li et al. (2022) developed partially linear models, Yoshida (2023) provided single-index models, and Ma et al. (2019) provided varying coefficient models. The varying coefficient model is useful for analyzing time series and longitudinal data, etc. Because time or location is often important in many applications of extreme value theory, the varying coefficient model is expected to be useful in tail index regression. We are also interested in tail index regression assuming the varying coefficient model.

The varying coefficient models pioneered by Hastie and Tibshirani (1993) assume that the regression function \(m_Y(\mathbf{{X}}, \mathbf{{T}})\) of interest satisfies

for the given explanatory variable vectors \(\mathbf{{X}}\) and \(\mathbf{{T}}\), and the response variable Y, where \({\varvec{\theta }}(\cdot )\) is the vector of unknown smooth functions of \(\mathbf{{T}}\), which is denoted by the coefficient function vector. In mean and quantile regression, many authors have developed varying coefficient models, such as those of Wu et al. (1998), Fan and Zhang (1999), Huang et al. (2002), Huang et al. (2004), Kim (2007), Cai and Xu (2008), Andriyana et al. (2014), and Andriyana et al. (2018). Fan and Zhang (2008) provided a review article on the varying coefficient model. Some of these studies examined not only the estimation methods of the coefficient function, but also the hypothesis testing methods. We denote \({\varvec{\theta }}(\cdot )=(\theta _1(\cdot ), \theta _2(\cdot ), \ldots , \theta _p(\cdot ))^\top \). The hypothesis test for the constancy of a specific component can be represented as

for an unknown constant \(C_0\), where \(\mathrm{{H}}_{0\mathrm{{C}}}\) is the null hypothesis and \(\mathrm{{H}}_{1\mathrm{{C}}}\) is the alternative hypothesis. It is particularly important to test the sparsity of a specific covariate, which can be expressed as

where \(\mathrm{{H}}_{0\mathrm{{Z}}}\) is the null hypothesis and \(\mathrm{{H}}_{1\mathrm{{Z}}}\) is the alternative hypothesis. The varying coefficient model is flexible, but simpler models provide an easy interpretation of the data structure in real data analysis. The above hypothesis tests help to reduce the varying coefficient model to a simpler model. In mean and quantile regression, testing methods based on the comparison of the residual sum of squares include Cai et al. (2000), Fan and Li (2001), Huang et al. (2002), Kim (2007), among others, where they used the bootstrap to implement their test. In mean regression, Fan and Zhang (2000) proposed the testing method based on the asymptotic distribution of the maximum deviation between the estimated coefficient function and true coefficient function.

In this study, we employ a logarithmic transformation to link the extreme value index of the response variable Y to the explanatory variable vectors \(\mathbf{{X}}\) and \(\mathbf{{T}}\) via

To the best of our knowledge, Ma et al. (2019) also studied this model. They provided a kernel-type nonparametric estimator of \({\varvec{\theta }}(\mathbf{{T}})\) and established asymptotic normality. However, they did not discuss hypothesis testing. Therefore, there are no results for the hypothesis tests in tail index regression. Our study aims to establish a testing method for varying coefficient models for tail index regression.

The remainder of this paper is organized as follows. Section 2 introduces the local constant (Nadaraya–Watson type) maximum likelihood estimator of the coefficient functions, and Sect. 3 investigates its asymptotic properties. Section 4 introduces the proposed method for testing the structure of the coefficient functions and demonstrates the finite sample performance through simulations. A real example is analyzed in Sect. 5. All technical details are provided in Appendix.

2 Model and method

2.1 Varying coefficient models in tail index regression

Let \(Y>0\) be the univariate response variable of interest, \(\mathbf{{X}}=(X_1, X_2, \ldots , X_p)^\top \in \mathbb {R}^p\) be the p-dimensional explanatory variable vector, and \(\mathbf{{T}}=(T_1, T_2, \ldots , T_q)^\top \in \mathbb {R}^q\) be the q-dimensional explanatory variable vector. In addition, let \(F(y\mid \mathbf{{x}}, \mathbf{{t}})=P(Y\le y\mid \mathbf{{X}}=\mathbf{{x}}, \mathbf{{T}}=\mathbf{{t}})\) be the conditional distribution function of Y given \((\mathbf{{X}}, \mathbf{{T}})=(\mathbf{{x}}, \mathbf{{t}})\). We consider the Pareto-type distribution

where \(\gamma (\mathbf{{x}}, \mathbf{{t}})\) is a positive function of \(\mathbf{{x}}\) and \(\mathbf{{t}}\), and \(\mathcal {L}(y; \mathbf{{x}}, \mathbf{{t}})\) is a covariate-dependent slowly varying function at infinity; i.e., \(\mathcal {L}(ys; \mathbf{{x}}, \mathbf{{t}})/\mathcal {L}(y; \mathbf{{x}}, \mathbf{{t}})\rightarrow 1\) as \(y\rightarrow \infty \) for any \(s>0\). We assume that the slowly varying function \(\mathcal {L}(y; \mathbf{{x}}, \mathbf{{t}})\) converges to a constant at a reasonably high speed. Specifically, we assume

where \(c_0(\mathbf{{x}}, \mathbf{{t}})\), \(c_1(\mathbf{{x}}, \mathbf{{t}})\) and \(\beta (\mathbf{{x}}, \mathbf{{t}})\) are functions of \(\mathbf{{x}}\) and \(\mathbf{{t}}\) with \(c_0(\mathbf{{x}}, \mathbf{{t}})>0\) and \(\beta (\mathbf{{x}}, \mathbf{{t}})>0\), and \(o(y^{-\beta (\mathbf{{x}}, \mathbf{{t}})})\) is a higher-order term. This assumption is called the Hall class (Hall 1982). In our study, we adopt the varying coefficient model for the conditional extreme value index \(\gamma (\mathbf{{x}}, \mathbf{{t}})\) as

where \(\mathbf{{x}}=(x_1, x_2, \ldots , x_p)^\top \in \mathbb {R}^p\), \({\varvec{\theta }}(\mathbf{{t}})=(\theta _0(\mathbf{{t}}), \theta _1(\mathbf{{t}}), \ldots , \theta _p(\mathbf{{t}}))^\top \in \mathbb {R}^{p+1}\), and \(\theta _j(\mathbf{{t}}),\ j=0, 1, \ldots , p\) are the unknown smooth functions of \(\mathbf{{t}}\).

2.2 Local constant maximum likelihood estimator

Let \(f(y\mid \mathbf{{x}}, \mathbf{{t}})\) be the conditional probability density function of Y given \((\mathbf{{X}}, \mathbf{{T}})=(\mathbf{{x}}, \mathbf{{t}})\). If \(\mathcal {L}(\cdot ; \mathbf{{x}}, \mathbf{{t}})\) is differentiable, we obtain

Because \(\mathcal {L}(y; \mathbf{{x}}, \mathbf{{t}})\rightarrow c_0(\mathbf{{x}}, \mathbf{{t}})\) and \(\partial \mathcal {L}(y; \mathbf{{x}}, \mathbf{{t}})/\partial y\rightarrow 0\) as \(y\rightarrow \infty \) by using (2.2), we have

for sufficiently large \(y>0\). Let \(\{(Y_i, \mathbf{{X}}_i, \mathbf{{T}}_i),\ i=1, 2, \ldots , n\}\) be an independent and identically distributed random sample with the same distribution as \((Y, \mathbf{{X}}, \mathbf{{T}})\). We introduce a sufficiently high threshold \(\omega _n>0\) such that \(\omega _n\rightarrow \infty \) as \(n\rightarrow \infty \) and use the responses that exceed it. Let \(f(y\mid \mathbf{{x}}, \mathbf{{t}}, \omega _n)\) be the conditional probability density function of Y given \((\mathbf{{X}}, \mathbf{{T}})=(\mathbf{{x}}, \mathbf{{t}})\) and \(Y>\omega _n\). Then, we have

for \(y>\omega _n\). Thus, we can estimate the coefficient function vector \({\varvec{\theta }}(\mathbf{{t}})\) by using the following weighted maximum likelihood approach: Let

where \({\varvec{\theta }}\in \mathbb {R}^{p+1}\), \(I(\cdot )\) is an indicator function, \(K(\cdot )\) is a kernel function on \(\mathbb {R}^q\), and \(\mathbf{{H}}_n=\mathrm{{diag}}(h_{n1}, \ldots , h_{nq})\) is a q-order diagonal matrix whose components are bandwidths \(h_{nk},\ k=1, 2, \ldots , q\) such that \(h_{nk}\rightarrow 0\) as \(n\rightarrow \infty \). We define the estimator of the coefficient function vector \({\varvec{\theta }}(\mathbf{{t}})\) as the minimizer of the objective function \(L_n({\varvec{\theta }})\). We denote this estimator by \(\widehat{\varvec{\theta }}(\mathbf{{t}})=(\widehat{\theta }_0(\mathbf{{t}}), \widehat{\theta }_1(\mathbf{{t}}), \ldots , \widehat{\theta }_p(\mathbf{{t}}))^\top \in \mathbb {R}^{p+1}\). Ma et al. (2019) provided the local linear maximum likelihood estimator. When \(p=0\) and \(q=0\), the covariate-independent estimator \(\widehat{\theta }_0\) is explicitly obtained, and we have

which is the Hill estimator proposed by Hill (1975) and is widely used in univariate extreme value theory.

Note that the varying coefficient model corresponds to linear and nonparametric models as special cases. When \(q=0\), (2.3) is simplified as

where \({\varvec{\theta }}=(\theta _0, \theta _1, \ldots , \theta _p)^\top \in \mathbb {R}^{p+1}\), and \(\theta _j,\ j=0, 1, \ldots , p\) are the regression coefficients. Wang and Tsai (2009) studied this tail index regression model. Whereas, when \(p=0\), we obtain a nonparametric estimator of the positive extreme value index as

which was studied by Goegebeur et al. (2014, 2015).

2.3 Bandwidths and threshold selection

The threshold \(\omega _n\) and bandwidths \(h_{nk},\ k=1, \ldots , q\) are tuning parameters that control the balance between the bias and variance of the estimator \(\widehat{\varvec{\theta }}(\mathbf{{t}})\). A larger value of \(h_{nk}\) or smaller value of \(\omega _n\) leads to more bias, whereas a larger value of \(\omega _n\) or smaller value of \(h_{nk}\) leads to a larger variance. Therefore, these tuning parameters must be appropriately selected.

The threshold selection is needed to obtain the good approximation of (2.4). To achieve this, the discrepancy measure, which was proposed by Wang and Tsai (2009), is suitable. Meanwhile, the choice of the bandwidths controls the smoothness of the estimator. Therefore, we use the cross-validation to select the bandwidths, similar to other studies using kernel smoothing (e.g., Ma et al. 2019). Thus, we combine the discrepancy measure and cross-validation as the whole tuning parameter selection method. The algorithm of the tuning parameter selection is as follows. In the first step, we select the bandwidths \(h_{nk},\ k=1, \ldots , q\) by D-fold cross-validation as

which is based on (2.5), where \(\omega _0\) is a predetermined threshold, \(\lfloor \cdot \rfloor \) is the floor function, and \(\{(Y_i^{(d)}, \mathbf{{X}}_i^{(d)}, \mathbf{{T}}_i^{(d)}),\ i=1, 2, \ldots , \lfloor n/D\rfloor \}\) is the dth test dataset. \(\widehat{\varvec{\theta }}^{(d)}(\cdot ; \omega _0, \mathbf{{H}})\) is the proposed estimator, with \(\omega _n=\omega _0\) and \(\mathbf{{H}}_n=\mathbf{{H}}\), which is obtained from the dth training dataset. In the second step, we select the threshold \(\omega _n\) using the discrepancy measure. We denote the order statistics of \(\{\exp \{-\exp ((1, \mathbf{{X}}_i^\top )\widehat{\varvec{\theta }}(\mathbf{{T}}_i))\log (Y_i/\omega _n)\}: Y_i>\omega _n,\ i=1, \ldots , n\}\) as \(\widehat{U}_{1, n_0} \le \widehat{U}_{2, n_0} \le \ldots \le \widehat{U}_{n_0, n_0}\), where \(n_0=\sum _{i=1}^nI(Y_i>\omega _n)\) is the number of responses that exceed the threshold \(\omega _n\). Because the conditional distribution of \(\exp \{-\exp ((1, \mathbf{{X}}^\top ){\varvec{\theta }}(\mathbf{{T}}))\log (Y/\omega _n)\}\) given \(Y>\omega _n\) is approximately standard uniform, we can regard \(\{\widehat{U}_{l, n_0}\}_{l=1}^{n_0}\) as a sample from the standard uniform distribution. Therefore, we select the threshold \(\omega _n\) as

where \(\{\widehat{U}_{l, n_0}(\omega , \mathbf{{H}}_\mathrm{{CV}})\}_{l=1}^{n_0}\) are \(\{\widehat{U}_{l, n_0}\}_{l=1}^{n_0}\) with \(\omega _n=\omega \) and \(\mathbf{{H}}_n=\mathbf{{H}}_\mathrm{{CV}}\), and \(\widehat{F}(\cdot ; \omega , \mathbf{{H}}_\mathrm{{CV}})\) is a empirical distribution function of \(\{\widehat{U}_{l, n_0}(\omega , \mathbf{{H}}_\mathrm{{CV}})\}_{l=1}^{n_0}\).

3 Asymptotic properties

3.1 Conditions

In this section, we investigate the asymptotic properties of our proposed estimator. The following technical conditions are required: We define \(n_0(\mathbf{{t}})=n\det (\mathbf{{H}}_n)f_\mathbf{{T}}(\mathbf{{t}})P(Y>\omega _n\mid \mathbf{{T}}=\mathbf{{t}})\) and \(n(\mathbf{{t}})=n\det (\mathbf{{H}}_n)f_\mathbf{{T}}(\mathbf{{t}})\), where \(f_\mathbf{{T}}(\mathbf{{t}})\) is the marginal probability density function of \(\mathbf{{T}}\). We also define

and

- (C.1):

-

The kernel function \(K(\cdot )\) is an absolutely continuous function that has compact support and satisfies the conditions

$$\begin{aligned} \int K(\mathbf{{u}})\mathrm{{d}}{} \mathbf{{u}}=1,\ \int u_kK(\mathbf{{u}})\mathrm{{d}}{} \mathbf{{u}}=0,\ \int u_k^2K(\mathbf{{u}})\mathrm{{d}}{} \mathbf{{u}}<\infty ,\ \int K(\mathbf{{u}})^2\mathrm{{d}}{} \mathbf{{u}}<\infty \end{aligned}$$with \(\mathbf{{u}}=(u_1, u_2, \ldots , u_q)^\top \in \mathbb {R}^q\).

- (C.2):

-

The joint probability density function \(f(y, \mathbf{{x}}, \mathbf{{t}})\) of \((Y, \mathbf{{X}}, \mathbf{{T}})\) and the coefficient function \(\theta _j(\mathbf{{t}})\) have continuous second-order derivative on \(\mathbf{{t}}\).

- (C.3):

-

Assume \(n_0(\mathbf{{t}})\rightarrow \infty \) and

$$\begin{aligned} {\varvec{\Sigma }}_n(\mathbf{{t}})^{-1/2}\widetilde{\varvec{\Sigma }}_n(\mathbf{{t}}){\varvec{\Sigma }}_n(\mathbf{{t}})^{-1/2}\xrightarrow {P}{} \mathbf{{I}}_{p+1} \end{aligned}$$as \(n\rightarrow \infty \) for all \(\mathbf{{t}}\in \mathbb {R}^q\), where \(\mathbf{{I}}_{p+1}\) is a \((p+1)\)-order identity matrix and the symbol “\(\xrightarrow {P}\)” stands for convergence in probability.

- (C.4):

-

The reminder term \(o(y^{-\beta (\mathbf{{x}}, \mathbf{{t}})})\) defined in (2.2) satisfies

$$\begin{aligned} \sup _{\mathbf{{x}}\in \mathbb {R}^p,\ \mathbf{{t}}\in \mathbb {R}^q}(y^{\beta (\mathbf{{x}}, \mathbf{{t}})}o(y^{-\beta (\mathbf{{x}}, \mathbf{{t}})}))\rightarrow 0 \end{aligned}$$as \(y\rightarrow \infty \).

- (C.5):

-

For all \(\mathbf{{t}}\in \mathbb {R}^q\), there exists a nonzero vector \(\mathbf{{b}}(\mathbf{{t}})\in \mathbb {R}^{p+1}\) such that

$$\begin{aligned}&\frac{n(\mathbf{{t}})}{\sqrt{n_0(\mathbf{{t}})}}{} \mathbf{{\Sigma }}_n(\mathbf{{t}})^{-1/2}\\&\quad E\left[ (1, \mathbf{{X}}^\top )^\top c_1(\mathbf{{X}}, \mathbf{{t}})\frac{\gamma (\mathbf{{X}}, \mathbf{{t}})\beta (\mathbf{{X}}, \mathbf{{t}})}{1+\gamma (\mathbf{{X}}, \mathbf{{t}})\beta (\mathbf{{X}}, \mathbf{{t}})}\omega _n^{-1/\gamma (\mathbf{{X}}, \mathbf{{t}})-\beta (\mathbf{{X}}, \mathbf{{t}})}\mid \mathbf{{T}}=\mathbf{{t}}\right] \rightarrow \mathbf{{b}}(\mathbf{{t}}) \end{aligned}$$as \(n\rightarrow \infty \).

The condition (C.1) is typically used for kernel estimation. The conditions (C.3)–(C.5) correspond to the conditions (C.1)–(C.3) of Wang and Tsai (2009). The condition (C.3) requires that a certain weak law of large numbers holds. The condition (C.4) regularizes the extreme behavior of the slowly varying function \(\mathcal {L}(y; \mathbf{{x}}, \mathbf{{t}})\). The condition (C.5) specifies the optimal convergence rates of threshold \(\omega _n\) and bandwidths \(h_{nk},\ k=1, \ldots , q\).

3.2 Asymptotic properties

We define

and

The above \(\dot{\varvec{L}}_n({\varvec{\theta }})\) and \(\ddot{\varvec{L}}_n({\varvec{\theta }})\) are the gradient vector and Hessian matrix of the objective function \(L_n({\varvec{\theta }})\), respectively. The proposed estimator \(\widehat{\varvec{\theta }}(\mathbf{{t}})\) is defined as the minimizer of \(L_n({\varvec{\theta }})\) and satisfies \(\dot{\varvec{L}}_n(\widehat{\varvec{\theta }}(\mathbf{{t}}))={\varvec{0}}\). Therefore, similar to common approaches for establishing the asymptotic normality of the maximum likelihood estimator, we need to investigate the asymptotic properties of \(\dot{\varvec{L}}_n({\varvec{\theta }})\) and \(\ddot{\varvec{L}}_n({\varvec{\theta }})\). Let \(\nu =\int K(\mathbf{{u}})^2\mathrm{{d}}{} \mathbf{{u}}\), \({\varvec{\kappa }}=\int \mathbf{{u}}{} \mathbf{{u}}^\top K(\mathbf{{u}})\mathrm{{d}}{} \mathbf{{u}}\),

and

where \(\mathbf{{t}}=(t_1, t_2, \ldots , t_q)^\top \in \mathbb {R}^q\) and \(k_1, k_2\in \{1, 2, \ldots , q\}\).

Theorem 1

Let us suppose that conditions (C.1)-(C.5) are satisfied; then, as \(n\rightarrow \infty \),

where the symbol “\(\xrightarrow {D}\)” denotes convergence in distribution,

and

Theorem 2

Let us suppose that conditions (C.1)-(C.5) are satisfied; then, as \(n\rightarrow \infty \),

From Theorems 1 and 2, we obtain the following asymptotic normality of our proposed estimator \(\widehat{\varvec{\theta }}(\mathbf{{t}})\):

Theorem 3

Let us suppose that conditions (C.1)-(C.5) are satisfied; then, as \(n\rightarrow \infty \),

This result implies that \(\widehat{\varvec{\theta }}(\mathbf{{t}})\) is the consistent estimator of \({\varvec{\theta }}(\mathbf{{t}})\). The convergence rate of \(\widehat{\varvec{\theta }}(\mathbf{{t}})\) to \({\varvec{\theta }}(\mathbf{{t}})\) is on the same order as \([n_0(\mathbf{{t}}){\varvec{\Sigma }}_n(\mathbf{{t}})]^{-1/2}\). The \(n_0(\mathbf{{t}})=n\det (\mathbf{{H}}_n)f_\mathbf{{T}}(\mathbf{{t}})P(Y>\omega _n\mid \mathbf{{T}}=\mathbf{{t}})\) is proportional to the number of top-order statistics of the responses used for estimation at \(\mathbf{{t}}\). The \({\varvec{\Sigma }}_n(\mathbf{{t}})\) is defined in Sect. 3.1. The asymptotic bias is caused by two factors. The bias \(\mathbf{{b}}(\mathbf{{t}})\) is caused by the approximation of the tail of the conditional distribution of Y by the Pareto distribution in (2.4), which is related to the convergence rate of the slowly varying function \(\mathcal {L}(\cdot ; \mathbf{{x}}, \mathbf{{t}})\) to the constant \(c_0(\mathbf{{x}}, \mathbf{{t}})\). From the definition of \(\mathbf{{b}}(\mathbf{{t}})\) given in (C.5), we can see that the proposed estimator is more biased for larger \(\gamma (\mathbf{{x}}, \mathbf{{t}})\). In other words, the heavier the tail of the data, the more biased the estimator. Meanwhile, if \(\beta (\mathbf{{x}}, \mathbf{{t}})\) is small, the large bias of the estimator is occurred. Thus, the bias of our estimator is particularly sensitive to \(\gamma (\mathbf{{x}}, \mathbf{{t}})\) and \(\beta (\mathbf{{x}}, \mathbf{{t}})\). These parameters are related to the second order condition in extreme value theory (see, Theorem 3.2.5 of de Haan and Ferreira (2006), Theorems 2 and 3 of Wang and Tsai (2009), and Theorem 2 of Li et al. (2022), to name a few). In contrast, the biases \(\mathbf{{\Lambda }}_n^{(1)}(\mathbf{{t}})\) and \(\mathbf{{\Lambda }}_n^{(2)}(\mathbf{{t}})\) are caused by kernel smoothing.

Our asymptotic normality is comparable to the asymptotic normality of the local linear maximum likelihood estimator of the coefficient function vector proposed by Ma et al. (2019). The difference between the two estimators is the asymptotic bias. In the asymptotic normality in Ma et al. (2019), it is assumed that the bias caused by the approximation (2.4) is negligible, so the bias \(\mathbf{{b}}(\mathbf{{t}})\) does not appear in their asymptotic normality. The essential difference is the bias caused by kernel smoothing. In the case of Ma et al. (2019), the bias caused by kernel smoothing is \(\mathbf{{\Lambda }}_n^{(2)}(\mathbf{{t}})\). However, it has the same convergence rate as the bias \(\mathbf{{\Lambda }}_n^{(1)}(\mathbf{{t}})+\mathbf{{\Lambda }}_n^{(2)}(\mathbf{{t}})\). The asymptotic variances of the two estimators are the same.

4 Testing for structure of the coefficient function

4.1 Testing method

In varying coefficient models, we often hope to test whether each coefficient function \(\theta _j(\cdot )\) is constant or zero. If some \(\theta _j(\mathbf{{t}})\) does not vary across \(\mathbf{{t}}\), this motivates us to consider models that are simpler than the varying coefficient model. Generally, the hypothesis test can be represented as

for a given known function \(\eta (\cdot )\), where \(\mathrm{{H}}_0\) is the null hypothesis and \(\mathrm{{H}}_1\) is the alternative hypothesis.

Without a loss of generality, we assume that the explanatory variable vector \(\mathbf{{T}}\in \mathbb {R}^q\) is distributed on \([0, 1]^q\subset \mathbb {R}^q\). Then, we apply Lemma 1 of Fan and Zhang (2000) to

where \(\sigma _{nj}(\mathbf{{t}})=E[X_j^2I(Y>\omega _n)\mid \mathbf{{T}}=\mathbf{{t}}],\ j=0, 1, \ldots , p\) and \(X_0\equiv 1\). The following conditions are required:

- (C.6):

-

For all large \(n\in \mathbb {N}\), the function \(\sigma _{nj}(\mathbf{{t}})\) is bounded away from zero for all \(\mathbf{{t}}\in [0, 1]^q\) and has a bounded partial derivative.

- (C.7):

-

\(\lim _{n\rightarrow \infty }\sup _\mathbf{{t}}E[|X_j|^sI(Y>\omega _n)\mid \mathbf{{T}}=\mathbf{{t}}]<\infty \) for some \(s>2\).

Theorem 4

Under the conditions (C.1)-(C.7), if \(h_n:=h_{nk}=n^{-b},\ k=1, \ldots , q\), for some \(0<b<1-2/s\), we have

as \(n\rightarrow \infty \), where

(see, Rosenblatt 1976) and

From Theorem 3, we now have \(\widehat{\varvec{\theta }}(\mathbf{{t}})\rightarrow ^P{\varvec{\theta }}(\mathbf{{t}})\) as \(n\rightarrow \infty \). By the first-order Taylor expansion around \(\widehat{\theta }_j(\mathbf{{t}})=\theta _j(\mathbf{{t}})\), we obtain

The left-hand side of the above equation is zero because \(\widehat{\varvec{\theta }}(\mathbf{{t}})=(\widehat{\theta }_0(\mathbf{{t}}), \widehat{\theta }_{1}(\mathbf{{t}}), \ldots , \widehat{\theta }_p(\mathbf{{t}}))^\top \) is the minimizer of \(L_n({\varvec{\theta }})\). From Theorems 2 and 3, we also have \(\partial ^2L_n({\varvec{\theta }})/\partial \theta _j^2\mid _{{\varvec{\theta }}=(\widehat{\theta }_0(\mathbf{{t}}), \ldots , \widehat{\theta }_{j-1}(\mathbf{{t}}), \theta _j(\mathbf{{t}}), \widehat{\theta }_{j+1}(\mathbf{{t}}), \ldots , \widehat{\theta }_p(\mathbf{{t}}))^\top }\rightarrow ^Pn(\mathbf{{t}})\sigma _{nj}(\mathbf{{t}})\) as \(n\rightarrow \infty \). Consequently, we have

This implies that \(\psi (\mathbf{{t}})\) in Theorem 4 can be approximated as

From this result, \(E[\psi (\mathbf{{t}})]\) is asymptotically equivalent to the jth component of \(-\mathbf{{b}}(\mathbf{{t}})-[n_0(\mathbf{{t}}){\varvec{\Sigma }}_n(\mathbf{{t}})]^{1/2}\sum _{l=1}^2\mathbf{{\Lambda }}_n^{(l)}(\mathbf{{t}})\). This bias involves many unknown parameters. In particular, \(\beta (\mathbf{{x}}, \mathbf{{t}})\) included in \(\mathbf{{b}}(\mathbf{{t}})\) corresponds to the so-called second order parameter (see Gomes et al. 2002). However, the estimation method of the second order parameter has not yet been developed in the context of the tail index regression. Thus, at the present stage, checking that (C.5) is satisfied and estimating \(E[\psi (\mathbf{{t}})]\) are challenging and are posited as future work. Therefore, in this paper, we assume that \(E[\psi (\mathbf{{t}})]\) is zero, similar to Wang and Tsai (2009). Then, Theorem 4 can be used to test if (4.1). Under the null hypothesis \(\mathrm{{H}}_0: \theta _j(\mathbf{{t}})\equiv \eta (\mathbf{{t}})\), we use the test statistic

where \(\widehat{[n(\mathbf{{t}})\sigma _{nj}(\mathbf{{t}})]}\) is the kernel estimator of \(n(\mathbf{{t}})\sigma _{nj}(\mathbf{{t}})\) based on (C.3). For a given significance level \(\alpha \), we reject the null hypothesis \(\mathrm{{H}}_0\) if \(\widetilde{T}<-\log \{-0.5\log (\alpha /2)\}=e_{\alpha /2}\) or \(\widetilde{T}>-\log \{-0.5\log (1-\alpha /2)\}=e_{1-\alpha /2}\).

As mentioned above, we are mainly interested in the following two hypothesis tests: One is

If the null hypothesis \(\mathrm{{H}}_{0\textrm{Z}}\) is not rejected, the corresponding \(X_j\) may not be important for predicting the tail behavior of the distribution of the response Y. Thus, this can help judge the sparsity of a particular covariate. The other is

for an unknown constant \(C_0\) without prior knowledge. Under the null hypothesis \(\mathrm{{H}}_{0\mathrm{{C}}}\), we estimate the unknown constant \(C_0\) as the average of the estimates \(\{\widehat{\theta }_j(\mathbf{{t}}_l)\}_{l=1}^L\), where \(\mathbf{{t}}_l,\ l=1, 2, \ldots , L\) are equally spaced points in \([0, 1]^q\). If the null hypothesis \(\mathrm{{H}}_{0\textrm{C}}\) is not rejected, it motivates us to adopt a simpler model that considers the coefficient function \(\theta _j(\cdot )\) to be constant.

The simultaneous test from Theorem 4 is more rigorous than the test statistic based on the residual sum of squares (see Cai et al. 2000). Here, we consider the separate hypotheses for each coefficient. One might think that the single hypothesis test on all coefficients would be of interest. However, such an extension is really difficult because we have to consider the distribution of \(\sup _\mathbf{{t}}\{\widehat{\theta }_0(\mathbf{{t}}), \widehat{\theta }_1(\mathbf{{t}}), \ldots , \widehat{\theta }_p(\mathbf{{t}})\}\). In fact, such a method has not even been studied in the context of mean regression. Thus, the development of a simultaneous testing method into a single hypothesis test on all coefficient functions is posited as an important future work.

4.2 Simulation

We ran a simulation study to demonstrate the finite sample performance of the proposed estimator and test statistic. We present the results for the three model settings. In all settings, we simulated the responses \(\{Y_i\}_{i=1}^n\) using the following conditional distribution function:

where \(\log \gamma (\mathbf{{x}}, \mathbf{{t}})^{-1}=(1, \mathbf{{x}}^\top ){\varvec{\theta }}(\mathbf{{t}})\). This conditional distribution function satisfies (2.2) with \(c_0(\mathbf{{x}},\mathbf{{t}})=1+\delta \), \(c_1(\mathbf{{x}}, \mathbf{{t}})=-\delta (1+\delta )\), and \(\beta (\mathbf{{x}}, \mathbf{{t}})=\gamma (\mathbf{{x}}, \mathbf{{t}})^{-1}\). If \(\delta \ne 0\), the above conditional distribution is not the Pareto distribution; therefore, we need to introduce the threshold \(\omega _n\) appropriately. Otherwise, modeling bias occurs, resulting in less accuracy in the estimation. We simulated the predictors \(\{(X_{i1}, X_{i2}, \ldots , X_{ip})\}_{i=1}^n\) based on the following procedure:

where \(\{(Z_{i1}, Z_{i2}, \ldots , Z_{ip})\}_{i=1}^n\) is an independent sample from the multivariate normal distribution with \(E[Z_{ij}]=0\) and \(\mathrm{{cov}}[Z_{ij_1}, Z_{ij_2}]=0.5^{|j_1-j_2|}\), and \(\Phi (\cdot )\) is the cumulative distribution function of a standard normal. Consequently, for \(j=1, 2, \ldots , p\), \(\{X_{ij}\}_{i=1}^n\) is uniformly distributed on \([-\sqrt{3}, \sqrt{3}]\) with unit variance. Meanwhile, we simulated the predictors \(\{(T_{i1}, T_{i2}, \ldots , T_{iq})\}_{i=1}^n\) from a uniform distribution on \([-0.2, 1.2]^q\subset \mathbb {R}^q\) with \(\mathrm{{cov}}[T_{ik_1}, T_{ik_2}]=0\).

To measure the goodness of the estimator \(\widehat{\varvec{\theta }}(\mathbf{{t}})\), we calculated the following empirical mean square error based on \(M=100\) simulations:

where \(\widehat{\theta }_j^{(m)}(\cdot )\) is the estimate of \(\theta _j(\cdot )\) using the mth dataset and \(\{\mathbf{{t}}_l\}_{l=1}^L\) are equally spaced points in \([0, 1]^q\). In addition, to evaluate the performance of the test statistic, we obtained the probability of error as follows. When the null hypothesis is true, the empirical probability of the Type I error is defined as

where \(\widetilde{T}_m\) is the test statistic \(\widetilde{T}\) using the mth dataset. Meanwhile, when the null hypothesis is false, the empirical probability of the Type II error is given by

Now, the null hypotheses of interest, \(\mathrm{{H}}_{0\mathrm{{C}}}\) and \(\mathrm{{H}}_{0\mathrm{{Z}}}\), are defined in Sect. 4.1. Accordingly, if the null hypothesis \(\mathrm{{H}}_{0\mathrm{{C}}}\) is true, i.e., the given coefficient function \(\theta _j(\mathbf{{t}})\) is constant, we provide E1 to examine the performance of the constancy test; if not, E2 is provided. Similarly, if the null hypothesis \(\mathrm{{H}}_{0\mathrm{{Z}}}\) is true, i.e., \(\theta _j(\mathbf{{t}})\equiv 0\), E1 is used to evaluate the accuracy for the sparsity test; if not, the result for \(\mathrm{{H}}_{0\mathrm{{Z}}}\) is given as E2.

In the first model setting, we set \(p=3\) and \(q=1\) and defined the coefficient functions \(\theta _j(\cdot ),\ j=1, 2, 3\) as

where the intercept term \(\theta _0(t)\) was not considered. We employed the Epanechnikov kernel in the proposed estimator. In the estimation process, we selected the threshold \(\omega _n\) and bandwidth \(h_n\) using the procedure described in Sect. 2.3. We set the pre-determined sample fraction to \(n_0/n=0.2\) in \(D=20\)-fold cross-validation, where \(n_0=\sum _{i=1}^nI(Y_i>\omega _n)\). Table 1 shows the calculated MSEs and empirical probabilities of error for each coefficient function \(\theta _j(\cdot )\) when \(\delta =0.1, 0.25, 0.5\) and \(n=200, 500, 1000\). For each coefficient function \(\theta _j(\cdot )\), the calculated MSEs improved as n increased. This result is desirable and suggests the consistency of the proposed estimator. Note that when testing the null hypothesis \(\mathrm{{H}}_{0\mathrm{{C}}}\), we must estimate the unknown constant \(C_0\). Since the maximum deviation between \(\widehat{\theta }_j(t)\) and the estimated \(C_0\) tends to be smaller than the maximum deviation between \(\widehat{\theta }_j(t)\) and the true value \(C_0\), the empirical probabilities of the Type I error were smaller for the null hypothesis \(\mathrm{{H}}_{0\mathrm{{C}}}\) than for the null hypothesis \(\mathrm{{H}}_{0\mathrm{{Z}}}\). In all settings, the empirical probability of the Type II error improved as n increased.

The second model setting focuses on the case where p is larger than in the first model setting. We set \(p=10\) and \(q=1\) and defined the coefficient functions \(\theta _j(\cdot ),\ j=1, 2, \ldots , 10\) as

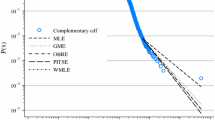

where the intercept term \(\theta _0(t)\) was not considered. The kernel function was the Epanechnikov kernel, and the tuning parameters were selected in the same manner as in the first model setting. Table 2 shows the calculated MSEs and empirical probabilities of error for each coefficient function \(\theta _j(\cdot )\) when \(\delta =0.1, 0.25, 0.5\) and \(n=200, 500, 1000\). The accuracy of the estimator and test statistic improved as n increased, with no significant deterioration compared to the first model setting with \(p=3\), indicating that the proposed model can avoid the curse of dimensionality even when the dimension p is large. Figure 1 shows the results of the estimation. The two dotted lines are plots of the 5th and 95th largest estimates of the \(M=100\) estimates at each point \(t\in [0, 1]\). The average estimates (dashed line) resembled the true value (solid line).

In the third model setting, we set \(p=2\) and \(q=2\) and defined the coefficient functions \(\theta _j(\cdot ),\ j=0, 1, 2\) as

We employed the kernel function of the Epanechnikov type as follows:

The tuning parameters were selected in the same manner as in the first model setting. Table 3 shows the calculated MSEs and empirical probabilities of error for each coefficient function \(\theta _j(\cdot )\) when \(\delta =0.1, 0.25, 0.5\) and \(n=3000, 5000\). As with the first and second settings, the accuracy of the estimator and test statistic improved as n increased.

We note that Tables 1, 2, and 3 show the results of the hypothesis tests when the tuning parameters are automatically selected based on each dataset. As a result, the empirical probability of the Type I error tended to be smaller than the given significance level \(\alpha =0.05\) in many settings, and thus the results seem to be conservative. However, although ad hoc selection of the tuning parameters will yield more reasonable results about the Type I error, such tuning parameters cannot be determined in real data analysis.

In this study, we used the plug-in test, but the bootstrap method may also be useful for testing. However, as described in de Haan and Zhou (2022), it is known that bootstrap methods do not always work efficiently in the context of extreme value theory. In addition, the effectiveness of bootstrapping in extreme value theory has only been partially revealed. Thus, at the present stage, the bootstrap test in our model is posited as future research.

5 Application

In this section, we apply the proposed method to a real dataset on white blood cells. The dataset is available in Kaggle (https://www.kaggle.com/amithasanshuvo/cardiac-data-nhanes). White blood cells play a role in processing foreign substances such as bacteria and viruses that have invaded the body, and are a type of blood cell that is indispensable for maintaining the normal immune function of the human body. Therefore, if the white blood cell count is abnormal, diseases may be suspected. The top left and right panels of Fig. 2 show histograms of the white blood cell counts for \(n=18{,}047\) males and \(n=19{,}032\) females aged 20 to 85, respectively, and the bottom two panels show histograms for those over 15 (\(\times 10^3/\upmu \)L). We can judge whether the tails of these distributions have a positive extreme value index by comparing them to the normal distribution with a zero extreme value index. In many extreme value studies, kurtosis is often used. The sample kurtosis was about 403.8 for males and about 38.3 for females, indicating that the right tails of these distributions are heavy. In addition, Fig. 3 shows plots of the subject’s age and white blood cell count, suggesting that the number of abnormal white blood cell counts tends to increase with age.

The dataset also contains percentages by type: neutrophils, eosinophils, basophils, monocytes, and lymphocytes. White blood cell differentiation is a clinical test that identifies the types of white blood cells that cause an abnormal white blood cell count. These five types have different immune functions and can help detect certain diseases. The sample averages were about 58.02, 3.10, 0.69, 8.39, and 29.84% for males and about 58.70, 2.57, 0.71, 7.47, and 30.59% for females, respectively. Neutrophils and lymphocytes comprised the majority of white blood cells, and the correlation coefficient calculated from the transformed observations, as described below, was approximately \(-0.93\) for males and \(-0.95\) for females. In other words, there was a strong negative correlation between the percentages of neutrophils and lymphocytes. In this analysis, we define the response Y as the white blood cell count; the predictors \(X_1\), \(X_2\), \(X_3\) and \(X_4\) as the percentages of eosinophils, basophils, monocytes and lymphocytes in the white blood cells; and the predictor T as age. We denote \(\mathbf{{X}}=(X_1, X_2, X_3, X_4)^\top \).

Figures 4 and 5 show the three-dimensional scatter plots of each \((X_j, T, Y)\) for male and female, respectively. As shown in these figures, the predictors \(X_1\), \(X_2\), \(X_3\) and \(X_4\) had many outliers. However, excluding these outliers also excludes the extreme values of the response Y. Therefore, we apply the normal score transformation to \(X_j\). That is, if \(X_{ij}\) is the \(R_{ij}\)-th largest in the jth predictor sample \(\{X_{ij}\}_{i=1}^n\), \(X_{ij}\) is redefined as

where all observations are jittered by uniform noise before applying the normal score transformation. Consequently, the redefined predictors \(X_1\), \(X_2\), \(X_3\), and \(X_4\) are normally distributed. Wang and Tsai (2009) applied a similar transformation in their analysis of real data.

We assume that the conditional distribution function of Y given \((\mathbf{{X}}, T)=(\mathbf{{x}}, t)\) satisfies

where \(\mathcal {L}(\cdot ; \mathbf{{x}}, t)\) is a slowly varying function satisfying (2.2), and

where \(\mathbf{{x}}=(x_1, x_2, x_3, x_4)^\top \in \mathbb {R}^4\), and \(\theta _j(t),\ j=0, 1, 2, 3, 4\) are unknown smooth functions of t. The aim of the analysis is to investigate the effect of \(X_j\) on the extreme values of Y, where the effect of \(X_j\) varies with T. To do this, we first estimate the unknown coefficient functions \(\theta _j(\cdot ),\ j=0, 1, 2, 3, 4\). Then, we select the threshold \(\omega _n\) and bandwidth \(h_n\) using the procedure described in Sect. 2.3. We employ the Epanechikov kernel in the proposed estimator and set the pre-determined sample fraction to \(n_0/n=0.030\) in \(D=20\)-fold cross-validation, where \(n_0=\sum _{i=1}^nI(Y_i>\omega _n)\). We obtained the optimal tuning parameters as \((h_n, n_0/n)=(0.21, 0.042)\) for male and \((h_n, n_0/n)=(0.30, 0.036)\) for female. Figure 6 shows the estimated coefficient functions \(\widehat{\theta }_j(\cdot ),\ j=0, 1, 2, 3, 4\) by the solid line and the following pointwise 95% confidence intervals computed from the asymptotic normality of the proposed estimator by the dashed lines:

where the bias is ignored, \(n(t)\sigma _{nj}(t)\) defined in Sect. 4.1 is estimated based on (C.3), and \(\nu =\int K(u)^2\mathrm{{d}}u\). For all coefficient functions, the trends were similar for male and female. The decreasing trend in the estimated intercept term \(\widehat{\theta }_0(\cdot )\) indicates that the number of abnormal white blood cell counts tends to increase with age. Some of the estimated coefficient functions deviated from zero and vary with age.

Table 4 presents the results of the statistical hypothesis tests for sparsity and constancy, as defined in Sect. 4.1. For the significance level \(\alpha =0.05\), we reject the null hypothesis if \(\widetilde{T}<-0.61\) or \(\widetilde{T}>4.37\). The null hypothesis \(\mathrm{{H}}_{0\textrm{Z}}\) for sparsity was rejected for all coefficient functions, except \(\theta _2(\cdot )\) for both male and female. In addition, the null hypothesis \(\mathrm{{H}}_{0\textrm{C}}\) for constancy was rejected for \(\theta _1(\cdot )\) and \(\theta _4(\cdot )\) for male and \(\theta _0(\cdot )\) and \(\theta _4(\cdot )\) for female. Remarkably, eosinophils and monocytes, which represented a small percentage of white blood cells, were associated with abnormal white blood cell counts.

The Q–Q plots for the linear model proposed by Wang and Tsai (2009) for male (left panel) and female (right panel)

We evaluate the goodness of fit of the model using the Q–Q plot (quantile–quantile plot). We regard \(\{\exp ((1, \mathbf{{X}}_i^\top )\widehat{\varvec{\theta }}(T_i))\log (Y_i/\omega _n): Y_i>\omega _n,\ i=1, \ldots , n\}\) as a random sample from the standard exponential distribution. Figure 7 shows plots of these empirical and theoretical quantiles. The two dashed lines show the pointwise 95% confidence intervals computed in the simulations. We can infer that the better the plots are aligned on a straight line, the better the model fits the data. Most of the plots were within the 95% confidence interval and the goodness of fit of the model did not seem to be bad. In contrast, Fig. 8 shows the plots for the linear model proposed by Wang and Tsai (2009), where the predictors are defined as T scaled on [0, 1], \(X_1\), \(X_2\), \(X_3\) and \(X_4\). In this case, many plots were outside the 95% confidence interval and deviated significantly from the straight line, indicating that our model fits the data better.

Finally, because the null hypotheses \(\mathrm{{H}}_{0\textrm{Z}}\) and \(\mathrm{{H}}_{0\textrm{C}}\) were not rejected for \(\theta _2(\cdot )\) and \(\theta _3(\cdot )\), we adopt a simpler model. We consider the model

which assumes the sparsity of \(X_2\). For the model (5.1), the discrepancy measure value described in Sect. 2.3 was approximately \(4.322\times 10^{-4}\) for males and \(3.015\times 10^{-4}\) for females. Meanwhile, for the model (5.2), the discrepancy measure value was approximately \(3.730\times 10^{-4}\) for males and \(3.017\times 10^{-4}\) for females, where \((h_n, n_0/n)=(0.22, 0.042)\) for males and \((h_n, n_0/n)=(0.30, 0.036)\) for females. The discrepancy measure values for females were not very different between the two models, but the discrepancy measure value for males was smaller in the model (5.2) than in the model (5.1). Moreover, we consider the model

where \(\widehat{\theta }_3\) is the average of the estimates \(\{\widehat{\theta }_3(t_l)\}_{l=1}^L\) obtained in model (5.1), which is a known constant. For the model (5.3), the discrepancy measure value was approximately \(3.628\times 10^{-4}\) for males and \(3.104\times 10^{-4}\) for females, where \((h_n, n_0/n)=(0.19, 0.042)\) for males and \((h_n, n_0/n)=(0.30, 0.036)\) for females. The discrepancy measure value for males was smaller in the model (5.3) than in the model (5.1). Therefore, from the point of view of the discrepancy measure, the data structure may be explained by a simpler model.

Data availability

Data is available on online.

Code availability

If reviewers requested, we will submit the code.

References

Andriyana Y, Gijbels I, Verhasselt A (2014) P-splines quantile regression estimation in varying coefficient models. TEST 23:153–194. https://doi.org/10.1007/s11749-013-0346-2

Andriyana Y, Gijbels I, Verhasselt A (2018) Quantile regression in varying-coefficient models: non-crossing quantile curves and heteroscedasticity. Stat Pap 59:1589–1621. https://doi.org/10.1007/s00362-016-0847-7

Cai Z, Xu X (2008) Nonparametric quantile estimations for dynamic smooth coefficient models. J Am Stat Assoc 103:1595–1608. https://doi.org/10.1198/016214508000000977

Cai Z, Fan J, Yao Q (2000) Functional-Coefficient Regression Models for Nonlinear Time Series. J Am Stat Assoc 95:941–956. https://doi.org/10.1080/01621459.2000.10474284

Csorgo S, Deheuvels P, Mason D (1985) Kernel estimates of the tail index of a distribution. Annu Stat 13:1050–1077. https://doi.org/10.1214/aos/1176349656

de Haan L, Ferreira A (2006) Extreme value theory: an introduction. Springer, New York. https://doi.org/10.1007/0-387-34471-3

de Haan L, Zhou C (2022) Bootstrapping extreme value estimators. J Am Stat Assoc. https://doi.org/10.1080/01621459.2022.2120400

Daouia A, Gardes L, Girard S (2013) On kernel smoothing for extremal quantile regression. Bernoulli 19:2557–2589. https://doi.org/10.3150/12-BEJ466

Dekkers ALM, Einmahl JHJ, de Haan L (1989) A moment estimator for the index of an extreme-value distribution. Ann Stat 17:1833–1855. https://doi.org/10.1214/aos/1176347397

Fan J, Li R (2001) Variable selection via nonconcave penalized likelihood and its oracle properties. J Am Stat Assoc 96:1348–1360. https://doi.org/10.1198/016214501753382273

Fan J, Zhang W (1999) Statistical estimation in varying coefficient models. Ann Stat 27:1491–1518. https://doi.org/10.1214/aos/1017939139

Fan J, Zhang W (2000) Simultaneous confidence bands and hypothesis testing in varying-coefficient models. Scand J Stat 27:715–731. https://doi.org/10.1111/1467-9469.00218

Fan J, Zhang W (2008) Statistical methods with varying coefficient models. Stat Interface 1:179–195. https://doi.org/10.4310/SII.2008.v1.n1.a15

Fan J, Zhang C, Zhang J (2001) Generalized likelihood ratio statistics and Wilks phenomenon. Ann Stat 29:153–193. https://doi.org/10.1214/aos/996986505

Gardes L, Girard S (2010) Conditional extremes from heavy-tailed distributions: application to the estimation of extreme rainfall return levels. Extremes 13:177–204. https://doi.org/10.1007/s10687-010-0100-z

Gardes L, Stupfler G (2014) Estimation of the conditional tail index using a smoothed local hill estimator. Extremes 17:45–75. https://doi.org/10.1007/s10687-013-0174-5

Goegebeur Y, Guillou A, Schorgen A (2014) Nonparametric regression estimation of conditional tails: random covariate case. Statistics 48:732–755. https://doi.org/10.1080/02331888.2013.800064

Goegebeur Y, Guillou A, Stupfler G (2015) Uniform asymptotic properties of the nonparametric regression estimator of conditional tails. Annales de l’ Institut Henri Poincaré, Probabilités et Statistiques 51:1190–1213. https://doi.org/10.1214/14-AIHP624

Gomes M, de Haan L, Peng L (2002) Semi-parametric estimation of the second order parameter in statistics of extremes. Extremes 5:387–414. https://doi.org/10.1023/A:1025128326588

Hall P (1982) On some simple estimates of an exponent of regular variation. J R Stat Soc B 44:37–42. https://doi.org/10.1111/j.2517-6161.1982.tb01183.x

Hill BM (1975) A simple general approach to inference about the tail of a distribution. Annu Stat 3:1163–1174. https://doi.org/10.1214/aos/1176343247

Hastie T, Tibshirani R (1993) Varying-coefficient models. J R Stat Soc B 55:757–779. https://doi.org/10.1111/j.2517-6161.1993.tb01939.x

Huang JZ, Wu CO, Zhou L (2002) Varying-coefficient models and basis function approximations for the analysis of repeated measurements. Biometrika 89:111–128. https://doi.org/10.1093/biomet/89.1.111

Huang JZ, Wu CO, Zhou L (2004) Polynomial spline estimation and inference for varying coefficient models with longitudinal data. Stat Sin 14:763–788

Kim MO (2007) Quantile regression with varying coefficients. Ann Stat 35:92–108. https://doi.org/10.1214/009053606000000966

Li R, Leng C, You J (2022) Semiparametric tail index regression. J Bus Econ Stat 40:82–95. https://doi.org/10.1080/07350015.2020.1775616

Ma Y, Jiang Y, Huang W (2019) Tail index varying coefficient model. Commun Stat 48:235–256. https://doi.org/10.1080/03610926.2017.1406519

Ma Y, Wei B, Huang W (2020) A nonparametric estimator for the conditional tail index of Pareto-type distributions. Metrika 83:17–44. https://doi.org/10.1007/s00184-019-00723-8

Pickands J (1975) Statistical inference using extreme order statistics. Ann Stat 3:119–131. https://doi.org/10.1214/aos/1176343003

Rosenblatt M (1976) On the maximal deviation of \(k\)-dimensional density estimates. Ann Probab 4:1009–1015. https://doi.org/10.1214/aop/1176995945

Smith RL (1987) Estimating tails of probability distributions. Ann Stat 15:1174–1207. https://doi.org/10.1214/aos/1176350499

Stupfler G (2013) A moment estimator for the conditional extreme-value index. Electron J Stat 7:2298–2343. https://doi.org/10.1214/13-EJS846

Wang H, Tsai CL (2009) Tail index regression. J Am Stat Assoc 104:1233–1240. https://doi.org/10.1198/jasa.2009.tm08458

Wu CO, Chiang CT, Hoover DR (1998) Asymptotic confidence regions for kernel smoothing of a varying-coefficient model with longitudinal data. J Am Stat Assoc 93:1388–1402. https://doi.org/10.1080/01621459.1998.10473800

Yoshida T (2023) Single-index models for extreme value index regression. arXiv:2203.05758

Youngman BD (2019) Generalized additive models for exceedances of high thresholds with an application to return level estimation for U.S. wind gusts. J Am Stat Assoc 114:1865–1879. https://doi.org/10.1080/01621459.2018.1529596

Acknowledgements

We would like to thank the anonymous two reviewers, Associate Editor and Editor for their helpful comments that helped to improve the manuscript.

Funding

Open access funding provided by Kagoshima University. This research was partially financially supported by the JSPS KAKENHI (Grant Numbers 18K18011, 22K11935).

Author information

Authors and Affiliations

Contributions

All authors contributed to the study conception and design. Material preparation, data collection and analysis were performed by Koki Momoki. The first draft of the manuscript was written by Koki Momoki and all authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Corresponding author

Ethics declarations

Conflict of interest

The authors have no conflict of interest to declare that are relevant to the content of this article.

Ethical approval

None

Consent to participate

Agree

Consent for publication

Agree

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

Appendix

In this appendix, we prove Theorems 1–3 for \(\mathbf{{t}}=\mathbf{{t}}_0\in \mathbb {R}^q\). For convenience, the intercept term \(\theta _0(\cdot )\) is not considered.

Proof of Theorem 1

\(\dot{\varvec{L}}_n({\varvec{\theta }}(\mathbf{{t}}_0))\) can be regarded as the sum of independent and identically distributed random variables. To apply the Central Limit Theorem, we show \(E[[n_0(\mathbf{{t}}_0){\varvec{\Sigma }}_n(\mathbf{{t}}_0)]^{-1/2}(\dot{\varvec{L}}_n({\varvec{\theta }}(\mathbf{{t}}_0))+n_0(\mathbf{{t}}_0){\varvec{\Sigma }}_n(\mathbf{{t}}_0)\sum _{l=1}^2\mathbf{{\Lambda }}_n^{(l)}(\mathbf{{t}}_0))]\rightarrow -\mathbf{{b}}(\mathbf{{t}}_0)\) as \(n\rightarrow \infty \) in the first step and \(\mathrm{{var}}[[n_0(\mathbf{{t}}_0){\varvec{\Sigma }}_n(\mathbf{{t}}_0)]^{-1/2}(\dot{\varvec{L}}_n({\varvec{\theta }}(\mathbf{{t}}_0))+n_0(\mathbf{{t}}_0){\varvec{\Sigma }}_n(\mathbf{{t}}_0)\sum _{l=1}^2\mathbf{{\Lambda }}_n^{(l)}(\mathbf{{t}}_0))]=\mathrm{{var}}[[n_0(\mathbf{{t}}_0){\varvec{\Sigma }}_n(\mathbf{{t}}_0)]^{-1/2}\dot{\varvec{L}}_n({\varvec{\theta }}(\mathbf{{t}}_0))]\rightarrow \nu \mathbf{{I}}_p\) as \(n\rightarrow \infty \) in the second step, where “var” denotes the variance-covariance matrix.

step 1

We can write \(\dot{\varvec{L}}_n({\varvec{\theta }}(\mathbf{{t}}_0))\) as

By the Taylor expansion and the condition (C.1), we have

From the condition (C.4) and model assumptions (2.1) and (2.2), we have

Analogously, we have

Under the condition (C.5), we have

as \(n\rightarrow \infty \). Therefore, we have \(E[[n_0(\mathbf{{t}}_0)\mathbf{{\Sigma }}_n(\mathbf{{t}}_0)]^{-1/2}{} \mathbf{{A}}_n^{(1)}]\rightarrow -\mathbf{{b}}(\mathbf{{t}}_0)\) as \(n\rightarrow \infty \). Using the second-order Taylor expansion, we have

Therefore, by the Taylor expansion and condition (C.1), we have

Because the conditional distribution of \(\gamma (\mathbf{{X}}, \mathbf{{t}}_0)^{-1}\log (Y/\omega _n)\) given \((\mathbf{{X}}, \mathbf{{T}})=(\mathbf{{x}}, \mathbf{{t}}_0)\) and \(Y>\omega _n\) is approximately a standard exponential, we have

where \(f_{(\mathbf{{X}}, \mathbf{{T}})}(\mathbf{{x}}, \mathbf{{t}})\) denotes the marginal density function of \((\mathbf{{X}},\mathbf{{T}})\). Therefore, the right-hand side of (A.1) can be written as

where \(\mathbf{{\Lambda }}_n^{(1)}(\mathbf{{t}})\) and \(\mathbf{{\Lambda }}_n^{(2)}(\mathbf{{t}})\) are defined in Sect. 3.2. Therefore, we have \(E[[n_0(\mathbf{{t}}_0){} \mathbf{{\Sigma }}_n(\mathbf{{t}}_0)]^{-1/2}{} \mathbf{{A}}_n^{(2)}]-[n_0(\mathbf{{t}}_0)\mathbf{{\Sigma }}_n(\mathbf{{t}}_0)]^{1/2}\sum _{l=1}^2\mathbf{{\Lambda }}_n^{(l)}(\mathbf{{t}}_0)\rightarrow \mathbf{{0}}\) as \(n\rightarrow \infty \). Hence, the proof of the first step is completed.

step 2

We abbreviate as

Because \(\{(Y_i, \mathbf{{X}}_i, \mathbf{{T}}_i)\}_{i=1}^n\) are independently distributed, we have

From the result of Step 1, the second term on the right-hand side converges to the zero matrix as \(n\rightarrow \infty \). Using the Taylor expansion, the first term on the right-hand side can be written as

Because the conditional distribution of \(\gamma (\mathbf{{X}}, \mathbf{{t}}_0)^{-1}\log (Y/\omega _n)\) given \((\mathbf{{X}}, \mathbf{{T}})=(\mathbf{{x}}, \mathbf{{t}}_0)\) and \(Y>\omega _n\) is approximately a standard exponential, we have

Therefore, the right-hand side of (A.2) can be written as

as \(n\rightarrow \infty \). Hence, the proof of the second step is completed.

From the results of Steps 1 and 2, we obtain Theorem 1 by applying the Central Limit Theorem. \(\square \)

Proof of Theorem 2

We show \(E[[n_0(\mathbf{{t}}_0)\mathbf{{\Sigma }}_n(\mathbf{{t}}_0)]^{-1/2}\ddot{\varvec{L}}_n({\varvec{\theta }}(\mathbf{{t}}_0))[n_0(\mathbf{{t}}_0)\mathbf{{\Sigma }}_n(\mathbf{{t}}_0)]^{-1/2}]\rightarrow \mathbf{{I}}_p\) as \(n\rightarrow \infty \) in the third step and \(\mathrm{{var}}[\mathrm{{vec}}([n_0(\mathbf{{t}}_0)\mathbf{{\Sigma }}_n(\mathbf{{t}}_0)]^{-1/2}\ddot{\varvec{L}}_n({\varvec{\theta }}(\mathbf{{t}}_0))[n_0(\mathbf{{t}}_0){} \mathbf{{\Sigma }}_n(\mathbf{{t}}_0)]^{-1/2})]\rightarrow {\varvec{O}}\) as \(n\rightarrow \infty \) in the fourth step, where \(\mathrm{{vec}}(\cdot )\) is a vec operator.

step 3

The conditional distribution of \(\gamma (\mathbf{{X}},\mathbf{{t}}_0)^{-1}\log (Y/\omega _n)\) given \((\mathbf{{X}},\mathbf{{T}})=(\mathbf{{x}},\mathbf{{t}}_0)\) and \(Y>\omega _n\) is approximately a standard exponential. Consequently, we have

Under the condition (C.3), the right-hand side converges to \(\mathbf{{I}}_p\) as \(n\rightarrow \infty \). Hence, the proof of the third step is completed.

step 4

The conditional distribution of \(\gamma (\mathbf{{X}},\mathbf{{t}}_0)^{-1}\log (Y/\omega _n)\) given \((\mathbf{{X}},\mathbf{{T}})=(\mathbf{{x}},\mathbf{{t}}_0)\) and \(Y>\omega _n\) is approximately a standard exponential. Consequently, we have

where “\(\otimes \)” denotes the Kronecker product. Under the condition (C.3), the right-hand side converges to \({\varvec{O}}\) as \(n\rightarrow \infty \). Hence, the proof of the fourth step is completed.

\(\square \)

Proof of Theorem 3

We define \({\varvec{\alpha }}_n=\mathbf{{\Sigma }}_n(\mathbf{{t}}_0)^{1/2}({\varvec{\theta }}-{\varvec{\theta }}(\mathbf{{t}}_0))\) and \({\varvec{\alpha }}_n^*=\mathbf{{\Sigma }}_n(\mathbf{{t}}_0)^{1/2}{\varvec{\theta }}(\mathbf{{t}}_0)\). Additionally, we define \(\mathbf{{Z}}_{ni}=\mathbf{{\Sigma }}_n(\mathbf{{t}}_0)^{-1/2}{} \mathbf{{X}}_i,\ i=1, \ldots , n\). The objective function \(L_n({\varvec{\theta }})\) can be written as

Let \(\dot{\varvec{L}}_n^*({\varvec{\alpha }}_n)\) be the gradient vector of \(L_n^*({\varvec{\alpha }}_n)\) and \(\ddot{\varvec{L}}_n^*({\varvec{\alpha }}_n)\) be Hessian matrix of \(L_n^*({\varvec{\alpha }}_n)\). We assume \(\ddot{\varvec{L}}_n^*({\varvec{\alpha }}_n)\) is a positive definite matrix for all \({\varvec{\alpha }}_n\in \mathbb {R}^p\). Therefore, \(L_n^*({\varvec{\alpha }}_n)\) is strictly convex.

Using the Taylor expansion, we have

for fixed \(\mathbf{{s}}\in \mathbb {R}^p\). By applying Theorems 1 and 2, we have \(\dot{\varvec{L}}_n^*({\varvec{0}})/\sqrt{n_0(\mathbf{{t}}_0)}+[n_0(\mathbf{{t}}_0){\varvec{\Sigma }}_n(\mathbf{{t}}_0)]^{1/2}\sum _{l=1}^2\mathbf{{\Lambda }}_n^{(l)}(\mathbf{{t}}_0)\xrightarrow {D}\mathrm{{N}}(-\mathbf{{b}}(\mathbf{{t}}_0),\nu \mathbf{{I}}_p)\) and \(\ddot{\varvec{L}}_n^*({\varvec{0}})/n_0(\mathbf{{t}}_0)\xrightarrow {P}{} \mathbf{{I}}_p\). We assume \([n_0(\mathbf{{t}}_0){\varvec{\Sigma }}_n(\mathbf{{t}}_0)]^{1/2}\sum _{l=1}^2\mathbf{{\Lambda }}_n^{(l)}(\mathbf{{t}}_0)\rightarrow 0\) as \(n\rightarrow \infty \). Consequently, this implies that, for any \(\varepsilon >0\), there exists a constant C such that

which implies \(\widehat{\varvec{\alpha }}_n=\mathbf{{\Sigma }}_n(\mathbf{{t}}_0)^{1/2}(\widehat{\varvec{\theta }}(\mathbf{{t}}_0)-{\varvec{\theta }}(\mathbf{{t}}_0))=O_P(\sqrt{n_0(\mathbf{{t}}_0)})\) (Fan and Li 2001). From the Taylor expansion of \(\dot{\varvec{L}}_n^*(\widehat{\varvec{\alpha }}_n)={\varvec{0}}\), we have

Therefore, by applying Theorems 1 and 2, we obtain Theorem 3. \(\square \)

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Momoki, K., Yoshida, T. Hypothesis testing for varying coefficient models in tail index regression. Stat Papers (2024). https://doi.org/10.1007/s00362-024-01538-0

Received:

Revised:

Published:

DOI: https://doi.org/10.1007/s00362-024-01538-0

Keywords

- Extreme value theory

- Hypothesis testing

- Pareto-type model

- Tail index regression

- Varying coefficient model