Abstract

In this paper, we argue that not all economic interactions can be simulated. Specific types of interactions, instantiated in and instantiating of institutional structures, are embodied in ways that do not admit entailing laws and cannot be expressed in a computational model. Our arguments have two implications: (i) zero intelligence is not merely a computational phenomenon but requires an “embodied” coupling with the environment (theoretical implication); and (ii) some interactions, on which collective phenomena are based, are unprestatable and generate emerging phenomena which cannot be entailed by computation (methodological implication).


1 Introduction

Douglas Hofstadter, in his well-known 1979 book Gödel, Escher, Bach, while discussing the nature of swarm intelligence with reference to the anecdotal case of ant colonies, raises a question: “the interaction between ants is determined just as much by their six-leggedness and their size and so on, as by the information stored in their brain. Could there be an Artificial Ant Colony?” (p. 365, emphasis added). Four decades later, judging from the significant development of AI, complexity science and related methodologies (such as multi-agent systems), we may be tempted to answer in the affirmative: modeling artificial societies (including artificial ant colonies) may be a successful type of scientific praxis.

But this praxis may still not be a convincing answer to Hofstadter’s question, which still stands: are ant colonies more a matter of legs or a matter of information? Or, more generally, what types of interactions, on which collective intelligence is based, admit of simulation? Does interaction depend simply on information processing, or is it an extended, embodied matter that somehow escapes computation?

The question is relevant if we recognize a role for zero/minimal intelligence (ZI) in cognitive organization. This concept suggests that, in specific institutional settings, “dumb agents” (e.g., simple agents that make elementary choices, such as random choices) can generate outcomes that are substantively rational (Gode & Sunder 1993). This is possible precisely because specific structures external to the agent do most of the work and take the place of the agent’s cognition. In this regard, an environment or institution may be designed to scaffold an agent’s behavior, or to steer behavior in a particular direction (Clark 1997; Denzau & North 1994). On some views, this ZI-institutional structure can be easily simulated; on other views, as we will see, it is not so easy, and may be impossible.

In this contribution, we argue that not all interactions can be easily simulated. In particular, specific types of interactions, instantiated in and instantiating of institutional structures, are embodied and extended in ways that cannot be captured computationally. Our arguments have two implications: (a) a theoretical implication: ZI is not merely a computational phenomenon but often requires an “embodied” coupling with the environment;Footnote 1 (b) a methodological implication: simulations cannot simply substitute for the ecological settings in which interactions take place, to the extent that some interactions, on which collective phenomena are based, are unprestatable and generate emerging phenomena which cannot be entailed by computation.

Our thesis is consistent with two general views which are staked out in the contemporary debate on evolution and cognition: (1) We endorse the impossibility of computationally entailing many emerging phenomena of the ecosphere (Longo et al. 2012; Kauffman 2000); and (2) we rely on the view that cognition and intelligence are embodied, embedded, enactive and extended (Newen et al. 2018), in ways that do not admit of cognitivist (computationalist) representability.

1.1 State of the art: zero intelligence plus institutions

Denzau and North (1994) propose that institutional mechanisms operate through shared internal mental models. They define institutions as “the rules of the game of a society [consisting] of formal and informal constraints constructed to order interpersonal relationships” (1994, p. 4). On this view, “institutions” that order interpersonal relations, as sets of rules that compose “shared mental models,” would be fully computational and fully consistent with computational models of the mind. “The mental models are the internal representations that individual cognitive systems create to interpret the environment; the institutions are the external (to the mind) mechanisms individuals create to structure and order the environment” (Denzau & North 1994, p. 4). In other words, North’s idea of “markets as institutions” is part of the information-processing paradigm within economics and cognitive science; these mental models are constituted mainly by the beliefs, preferences, and expectations that populate people’s mental representations of the institutions they act in.

Under conditions of uncertainty, individuals’ interpretation of their environment will reflect their learning. Individuals with common cultural backgrounds and experiences will share reasonably convergent mental models, ideologies, and institutions; and individuals with different learning experiences (both cultural and environmental) will have different theories (models, ideologies) to interpret their environment (Denzau & North 1994, pp. 3-4).

Institutional change can thus be easily simulated by manipulating these mental models (North 1993), and within such simulations uncertainty can be reduced through the same manipulations.

In the philosophy of mind and the cognitive sciences, the idea that external instruments and institutions can scaffold individual cognition was introduced by Andy Clark (1997) under the heading of the “extended mind” (Clark & Chalmers 1998). Clark took up Denzau & North’s idea of shared mental models, but conceived of institutions as extensions of the cognitive system according to the parity principle:

If, as we confront some task, a part of the world functions as a process which, were it done in the head, we would have no hesitation in recognizing as part of the cognitive process, then that part of the world is (so we claim) part of the cognitive process (Clark & Chalmers 1998, p. 8).

On this view, the manipulation of the external world is functionally equivalent to internal processes. In the economic context, markets, along with their constraints and incentives, are viewed as epistemic scaffolds that can support decisions and actions and predict agent behavior (Clark 1997). “[W]hat is doing the work, in such cases, is not (so much) the individual's cogitations as the larger social and institutional structures in which she is embedded” (Clark 1997, p. 279, p. 272). Factors conceived of as external to brain and body may be functionally integrated in the overall cognitive system based on functional equivalence. Institutions, such as markets, significantly reduce internal cognitive effort by externalizing some amount of information processing. Institutional rules and practices, understood as market mechanisms, promote actions that facilitate returns relative to a fixed set of goals. They “provide an external resource in which individuals behave in ways dictated by norms, policies, and practices; norms, policies, and practices that may even become internalized as mental models” (Clark 1997, p. 279).

In some cases, it is market structures, rather than psychological states within the individual, that govern firm-level strategies and impose strong constraints on individual choice.

Where the external scaffolding of policies, infrastructure, and customs is strong and (importantly) is a result of competitive selection, the individual members are, in effect, interchangeable cogs in a larger machine. The larger machine … incorporates large-scale social, physical and even geopolitical structures (1997, p. 272).

According to neo-institutional economics, economic institutions can work in place of internal cognitive processes (Hodgson 2004), or put differently, the external environment “does most of the work,” as external devices and arrangements process the relevant information which otherwise is typically conceived of as internally (cognitively) manipulated. This is consistent with zero or minimal (internal) intelligence supported by external structures. At the extreme, in these larger institutional processes, individual agents may play interchangeable functional roles as ZI traders (Gode & Sunder 1993).
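To make the ZI idea concrete, the following is a minimal Python sketch in the spirit of Gode and Sunder's (1993) budget-constrained ZI traders, not a reproduction of their experimental setup. Each buyer bids a random price no higher than its private value, each seller asks a random price no lower than its private cost, and a trade occurs whenever a randomly matched bid meets the ask. The functions `zi_c_market` and `max_surplus`, the random bilateral matching (used here in place of a double-auction order book), the price cap, and the values and costs are all illustrative assumptions. Allocative efficiency is measured as realized surplus divided by the maximum available surplus.

```python
import random

def max_surplus(values, costs):
    """Maximum (competitive-equilibrium) surplus: pair the highest values
    with the lowest costs for as long as value exceeds cost."""
    total = 0.0
    for v, c in zip(sorted(values, reverse=True), sorted(costs)):
        if v <= c:
            break
        total += v - c
    return total

def zi_c_market(values, costs, price_cap=200.0, rounds=5000, seed=0):
    """Realized surplus when budget-constrained zero-intelligence traders,
    each holding one unit, are matched at random (hypothetical simplification:
    random bilateral matching instead of a full double-auction order book)."""
    rng = random.Random(seed)
    buyers = list(values)   # private values of buyers still in the market
    sellers = list(costs)   # private costs of sellers still in the market
    surplus = 0.0
    for _ in range(rounds):
        if not buyers or not sellers:
            break
        i = rng.randrange(len(buyers))
        j = rng.randrange(len(sellers))
        bid = rng.uniform(0.0, buyers[i])         # random, but never above own value
        ask = rng.uniform(sellers[j], price_cap)  # random, but never below own cost
        if bid >= ask:
            surplus += buyers[i] - sellers[j]     # non-negative gains from this trade
            buyers.pop(i)
            sellers.pop(j)
    return surplus

values = [100, 90, 80, 70, 60, 50, 40, 30]
costs = [20, 30, 40, 50, 60, 70, 80, 90]
efficiency = zi_c_market(values, costs) / max_surplus(values, costs)
print(f"allocative efficiency: {efficiency:.2f}")
```

Because the budget constraint rules out loss-making trades, realized surplus can never exceed the maximum, so the ratio lies between 0 and 1. Whatever efficiency the market reaches is produced by the constraint structure, since the traders themselves perform no optimization.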

Accordingly, “the explanatory burden is borne by overall system dynamics in which the microdynamics of individual psychology is relatively unimportant” (Clark 1997, p. 276). This would ultimately explain why neoclassical economics works “(insofar as it works at all)” (Clark 1997, p. 271).

In cases where the overall structuring environment acts so as to select in favor of actions which are restricted so as to conform to a specific model of preferences, neoclassical theory works. And it works because individual psychology no longer matters: the “preferences” are imposed by the wider situation and need not be echoed in individual psychology (Clark 1998, p. 183).

This externalist turn, common to both neo-institutional economics and the philosophy of mind in the cognitive sciences, is able to reinforce the concept of ZI, which affirms “the power of institutional settings and external constraints to promote collective behaviors that conform to the model of substantive rationality” (Clark 1997, p. 274).

The idea that markets are institutions which “do most of the work” as they substitute for the agent’s cognition (Gode & Sunder 1993) should not be considered an absolute and unconditioned fact. Individuals are systematically biased, and this affects market behavior (Ariely 2008; Kahneman et al. 1982); and market mechanisms work only when specific information requirements are met (Williamson 1981; Akerlof 1970). Generally speaking, we cannot analyze the relationship between agents, institutions and market mechanisms unless we abandon the neoclassical paradigm and focus on the behaviorally grounded dimension of how human rationality enters into market behaviors (North 1993; cf. Gallagher et al. 2019; Furubotn & Richter 1994).

Accordingly, the allocative efficiency of markets populated by zero-intelligence agents should not be considered in absolute terms; neither should it be considered a theoretically blind endorsement of invisible hand mechanisms. This becomes clear when we include the idea of embodied intelligence, and understand it as related, in particular, to the problem of simulating specific interactions among embodied agents, even in hypothetical cases where internal cognitive processes are minimized or approach zero. The minimal intelligence debate assumes, as a benchmark, a classical—cognitively poor—maximizing agent, to demonstrate that “maximization at the individual level is unnecessary for the extraction of surplus in aggregate” (Gode & Sunder 1993 p. 136). The idea of ZI assumes the classic cognitivist view that intelligence is essentially computational. ZI means zero computation on the side of the agent; the agent’s cognition is set to zero. The idea of embodied intelligence, however, contends that intelligence is more than computation and that so-called ZI works not just because of the arrangement of the environment, but because the system it is part of, insofar as it includes bodily processes, is intelligent—the agent’s bodily processes are intelligent in their coupling to the environment. The ZI system is zero computation, plus embodied intelligence operating in a structured environment.

Here we embrace (as we will discuss in the next sections) the view that agents are embodied and evolutionarily grounded, characterized by relational autonomy, and we assume, consistent with Simon’s bounded rationality (Simon 1982), that human rationality is not an abstract property, but must be conceived as involving an adequacy of cognitive “endowment” relative to the specific environment in which the agent is embedded.Footnote 2 In particular, we assume that bounded rationality is embodied (cf. Petracca & Grayot 2023; Viale et al. 2023; Gallese et al. 2021) and evolutionarily grounded (Mastrogiorgio et al. 2022).

Notice that the notion of zero or minimal intelligence, although originally conceived in a classical maximizing framework, is consistent with bounded rationality whenever a random rule fits the structure of the task environment (Mastrogiorgio 2020). For instance, an ant (an organism endowed with minimal intelligence) that walks randomly in search of food is an adapted organism, since the minimal rule characterizing its behavior fits the structure of the environment: its random behavior (characterized by a meandering pattern; Popp & Dornhaus 2023) increases the chance of finding food as other ants also explore the environment using the same minimal rule, which results in a collectively intelligent exploration of the landscape.
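The meandering search described above can be illustrated with a correlated random walk, a common way of modeling animal movement; this is our modeling assumption, not the model used by Popp and Dornhaus (2023). In the hypothetical sketch below, each ant takes unit steps while its heading drifts by a small Gaussian turning angle (`turn_sd`), and `cells_explored` counts the distinct grid cells covered by one ant versus the whole colony, with every ant following the same minimal rule. Step counts, colony size and `turn_sd` are illustrative values.

```python
import math
import random

def meandering_walk(steps, turn_sd, rng, start=(0.0, 0.0)):
    """Correlated random walk: unit steps whose heading drifts by a Gaussian
    turning angle (standard deviation turn_sd, in radians) at every step."""
    x, y = start
    heading = rng.uniform(0.0, 2.0 * math.pi)
    path = [(x, y)]
    for _ in range(steps):
        heading += rng.gauss(0.0, turn_sd)
        x += math.cos(heading)
        y += math.sin(heading)
        path.append((x, y))
    return path

def cells_explored(paths):
    """Distinct unit grid cells visited by a set of paths taken together."""
    return len({(math.floor(x), math.floor(y)) for path in paths for x, y in path})

rng = random.Random(42)
colony = [meandering_walk(200, 0.5, rng) for _ in range(10)]
print("one ant:", cells_explored(colony[:1]))
print("colony: ", cells_explored(colony))
```

No ant in the sketch coordinates with any other; the colony-level coverage is simply the union of many individually minimal searches.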

2 Theoretical framework: two views of “distributedness” and embodied cognition

The use of so-called multi-agent systems in the social and economic sciences has been inspired by artificial intelligence studies, and in particular by the notion of distributed intelligence, which is based on the possibility of solving complex problems by means of a multitude of differentiated agents (algorithms): such interacting agents, together, are able to generate solutions that are superior to the ones generated by single agents. Again, this view is sympathetic to a specific computational perspective on the brain, in particular the hypothesis that cognitive phenomena emerge from complex distributed networks of (relatively) simple neurons that, on their own, either activate or do not activate (in 0/1, or yes/no, modes).
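A minimal illustration of this computational picture, assuming McCulloch–Pitts-style binary threshold units (our choice of example, not drawn from the sources cited): no single threshold unit can compute the exclusive-or (XOR) function, because XOR is not linearly separable, whereas three interacting units can. The weights and biases below are hand-picked for illustration.

```python
def threshold_unit(weights, bias):
    """Binary unit: outputs 1 iff the weighted sum of its inputs plus bias is positive."""
    def fire(*inputs):
        return int(sum(w * x for w, x in zip(weights, inputs)) + bias > 0)
    return fire

# Three simple units with hand-picked weights.
OR = threshold_unit([1, 1], -0.5)
NAND = threshold_unit([-1, -1], 1.5)
AND = threshold_unit([1, 1], -1.5)

def xor(a, b):
    """XOR is not linearly separable, so no single threshold unit computes it;
    a small network of interacting units does."""
    return AND(OR(a, b), NAND(a, b))

for a in (0, 1):
    for b in (0, 1):
        print(a, b, xor(a, b))
```

Each unit only ever activates or stays silent; the XOR behavior exists only at the level of the network.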

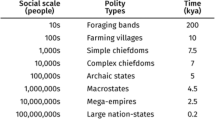

Simple agents interacting at a micro-level through simple rules can generate non-trivial, self-organized systems that emerge as complex societies, characterized by a differentiation of roles and activities (Gilbert & Terna 2000; Axtell & Epstein 1994). A fundamental ingredient of this perspective—consistent with “complexity” studies and substantiated in the use of agent-based models—is interaction. Agents interact so as to generate emerging phenomena that cannot be a simple composition of the multitude of individual behaviors and cannot be reduced to micro-level dynamics.

That is to say, the resulting system is more than the sum of its parts and presents emerging properties, which cannot be reduced to their elementary components.

In artificial intelligence, and in computer simulations implemented through agent-based models, “distributedness” is purely formal and symbolic, as it refers to artificial agents whose interacting behavior can be implemented on a computer. In contrast, “distributedness” in cognitive science is quite a different thing. It refers to the fact that cognition is extended and occurs ecologically, “in the wild” (Hutchins 1995), distributed among the real agents of a social group and involving the use of both internal and external material resources (i.e., artifacts, technologies and intelligent systems) (Sutton 2006). The fundamental distinction is that while “distributedness” in artificial applications and simulations is merely symbolic, in cognitive science it is materially embodied. This difference is significant and is not a matter of degree. As in the case of ant colonies (are they a matter of information or legs?), there are emerging phenomena whose symbolic implementation is problematic, precisely because they are distributed in ecological terms.

This contrast between two different conceptions of distributedness is reflected in:

(1) Two different views of embodied cognition;
(2) Two different views of interaction; and
(3) Two different views of institution.

We will briefly summarize these three contrasts in the next three sections and then explain how they complicate the idea of zero-intelligence agents, and the problem of simulating economic models.

2.1 Weak and strong embodied cognition

In the research area of embodied cognition (EC) a distinction is made between “weak” EC and “strong” EC (Alsmith & de Vignemont 2012). According to weak EC, the significant explanatory role is given to “in-the-head” neural processes, or what are variously called body or B-formatted (neural) representations, understood as simulations of bodily functions in the brain (e.g., Glenberg 2010; Goldman 2012, 2014; Goldman & de Vignemont 2009). This is sometimes captured by phrases such as “embodied simulation” (Gallese & Sinigaglia 2011) or “the body in the brain” (Berlucchi & Aglioti 2010). Weak EC places strict constraints on how we are to understand embodiment. For example, Goldman and de Vignemont (2009) assume that almost everything of importance for human cognition happens in the brain, “the seat of most, if not all, mental events” (2009, p. 154). They discount both the non-neural body and the environment as significant contributors to cognition.

Ruling out bodily activity and environmental factors, weak EC is left with “sanitized” body- or B-formatted representations (“B-formats”) (Goldman & de Vignemont 2009). Such representations are computational, even if they are not propositional or conceptual in format; their content may include representations of the body or body parts, but also can include action goals, and the motoric brain processes to achieve them (Goldman 2012). Weak EC also includes Barsalou’s notion of grounded cognition, which suggests that cognition operates on reactivation of motor areas but “can indeed proceed independently of the specific body that encoded the sensorimotor experience" (2008, p. 619; see Pezzulo et al. 2011). According to Goldman (2012), one gets a productive concept of EC simply by generalizing the use of B-formatted representations. B-formats, including “codes associated with activations in somatosensory cortex and motor cortex” (2012, p. 74), may originally have had only an interoceptive or motor task such that the content of the representation in some way references the body. Their functions are expanded, however, through an evolutionary process of “reuse” (Anderson 2010), i.e., the idea that neural circuits originally established for one use can be redeployed for other purposes while still maintaining their original function. For example, mirror neurons start out as motor neurons involved in motor control; but they get exapted in the course of evolution for purposes of social cognition and now are also activated to create simulations of another person’s actions. Another example of this reuse principle can be found in linguistics. Pulvermüller’s (2005) language-grounding hypothesis shows that language comprehension of action words involves the activation of action-related cortical areas. 
This suggests that higher-order symbolic thought, including memory, is grounded in low-level simulations of motor action (Goldman 2014; Glenberg 2010; Barsalou 1999; Casasanto & Dijkstra 2010).

In contrast, strong EC endorses a significant explanatory role for the (non-neural) body itself, and for physical and social environments, in cognitive processes. “Given that bodies and nervous systems co-evolve with their environments, and only the behavior of complete animals is subjected to selection, the need for such a tightly coupled perspective should not be surprising” (Beer 2000, p. 97; also see Brooks 1991; Chemero 2009; Gallagher 2005; Thompson 2007). Strong EC, which is sometimes called 4E (embodied, embedded/ecological, extended and enactive) cognition,Footnote 3 maintains that cognition is not just a brain activity and that, in regard to evolutionary claims, one has to understand the significance of the fact that the brain and body coevolved. Consider, for example, the hypothetical case in which humans evolved without hands. Clearly, not only would our brains be different, but we would also perceive the world differently. On enactivist and ecological accounts, our perception is action-oriented, and we perceive the world pragmatically, in terms of affordances, i.e., in terms of what we can do with the things around us, and how we can interact with other agents. Both physical and social affordances would be different for an organism without hands. According to a way of thinking that goes as far back as the Presocratic philosopher Anaxagoras, our conception of rationality would be different if we had evolved without hands (Gallagher 2013a; 2018). Likewise, our upright posture, our bendable leg joints and other morphological features of our bodies allow us to see the world, to act in it and to engage in social interactions in particularly human ways.

2.2 Interaction: topology and autonomy

According to weak EC, our social interactions are primarily run as simulations on a system of mirror neurons in the brain (Gallese & Goldman 1998). This idea of simulation has one thing in common with the idea of computer simulations used to model the behavior of artificial agents, namely that interactions and mirror-neuron processes can be captured by computational models (e.g., Oztop et al. 2006; Tessitore et al. 2010). Interactions among artificial agents can also be captured computationally. Such agents act in a social environment that usually consists of an artificial society populated by other agents. Interactions are specified by a network topology (or fixed landscape) that predefines the possible interactions among the agents and operates as an external constraint, from the perspective of those agents (see Felin et al. 2014). In other words, relationships among agents define an abstract topology of interactions. For example, in cellular automata (e.g., Hegselmann 1998) each agent interacts with a fixed number of neighbors. In more complex models, we have populations of agents with different levels of centrality; that is to say, not all the agents in a social network are topologically equal, since different agents and groups of agents are embedded in specific ways. From this theoretical point of view, interaction specifies the relationship among the agents that is salient for the phenomenon under investigation, purely in terms of what each individual agent brings to the interaction. Methodologically, on this view, interaction can be formally simulated, that is to say, it can be modeled in computational terms. The topology of the simulation specifies and limits the number and kinds of interactions possible.
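The topological view can be sketched as follows, under illustrative assumptions: agents sit on a ring, each may interact only with the neighbors listed in a topology fixed before the run (as in a one-dimensional cellular automaton), and a hypothetical majority rule updates each agent's binary state. The names `ring_topology` and `majority_step` are ours, and the rule is not taken from Hegselmann (1998); the point is only that the adjacency structure is specified in advance and the update can read only the neighbors it lists.

```python
import random

def ring_topology(n, radius):
    """Fixed interaction topology: agent i may interact only with the agents
    within `radius` positions on either side of it (the ring wraps around)."""
    return {i: [(i + d) % n for d in range(-radius, radius + 1) if d != 0]
            for i in range(n)}

def majority_step(states, topology):
    """Synchronous update: each agent adopts the majority binary state among
    itself and its predefined neighbors; ties leave its state unchanged."""
    new_states = []
    for i, s in enumerate(states):
        group = [s] + [states[j] for j in topology[i]]
        ones = sum(group)
        if 2 * ones > len(group):
            new_states.append(1)
        elif 2 * ones < len(group):
            new_states.append(0)
        else:
            new_states.append(s)
    return new_states

rng = random.Random(1)
topology = ring_topology(20, radius=1)
states = [rng.randint(0, 1) for _ in range(20)]
for _ in range(5):
    print("".join(map(str, states)))
    states = majority_step(states, topology)
```

Nothing in the update can change who interacts with whom. This is the sense in which, on this view, the topology "specifies and limits the number and kinds of interactions possible."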

In contrast, in strong EC, and especially according to enactive conceptions, interaction is defined as a mutually engaged co-regulated coupling between at least two autonomous agents, where

- The co-regulation and the coupling mutually affect each agent and constitute a self-sustaining organization in the domain of relational dynamics; and
- The autonomy of the agents involved is not destroyed, although its scope may be augmented or reduced (De Jaegher et al. 2010; Gallagher 2020).

On this view, agents who lack autonomy are not able to engage in genuine interaction. The relation between master and slave, for example, is not genuine interaction if the slave lacks autonomy. In addition, autonomy, on this agentive scale, is conceived of as relational autonomy, which means that the autonomy of one agent depends on its relations with other agents. In this respect, autonomy is not predetermined or absolute, but exists by degree and depends on the social relations that exist in any particular situation. Autonomy, likewise, is strongly embodied, in the sense that it is constrained not only by one’s social relations, but also by bodily affectivity (for example, if I am in pain or fatigued, my autonomy may be less than if I am not in pain and am well fed), and by the material arrangements that define the situation. Affordances are also relational: rather than operating as external constraints, they are, in effect, enacted in, and constrained by, the interactions between action-oriented bodily parameters (e.g., having hands and legs), environment and other agents.

Finally, on a larger scale, the process of interaction itself can take on its own autonomy insofar as the meaning and the effects of interaction are not reducible to the sum of the individual contributions, but dynamically emerge as something greater than that sum. In this respect, in some instances, persisting interactions can enact an institution. One can think here of the tango, which requires two agents, where each agent does not simply run through their own predefined movements, but moves in response to the other agent, constrained by a particular genre or tradition of dance. What emerges is the tango, a phenomenon that depends not just on the movements of each agent, but on the dynamical interaction between them.

2.3 Institutions: functional extension versus social extension

Extended mind or extended cognition is typically considered part of the 4E or strong EC camp. It differs from some of the other versions of EC in that it remains functionalist and consistent with computational and representational models (Clark & Chalmers 1998). Functionalism, in this context, means that the causal factors that contribute to cognition are multiply realizable. As long as cognitive processes involve the correct causal relations, e.g., between syntactical rules or representations, they can be instantiated in brains, bodies or computers. Hence, the parity principle (see above), which states that the materiality or location of such processes (in the head or in the external world) is not important. The only important thing is the equivalency of function. The concept of institution discussed above, and as found in Clark, Denzau and North, is consistent with this functionalist view. The type of interaction that occurs between agent and institution, on this view, has been characterized as functional integration (Clark 1997; Slors 2019) and is consistent with the topological conception of interaction outlined above.

In contrast, the concept of the “socially extended mind” or “cognitive institution” (Gallagher 2013b; Gallagher and Crisafi 2009) incorporates the idea of social interactions characterized by relational autonomy. On a socially extended cognition model, the constraints imposed by social interactions, as well as the possibilities enabled by such interactions, are such that economic reasoning is never just an individual process carried out by an autonomous individual, classically understood. Interactive engagement with cognitive institutions (which include markets, as well as legal systems, cultural and educational institutions, etc.) (1) not only helps to accomplish (or support or scaffold) certain cognitive processes, but without this engagement, and without the institution, such cognitive processes would not be possible, and (2) vice versa, without this engagement, the institution would not exist. Embodied, social interactions enact institutions. As Gallagher, Mastrogiorgio and Petracca (2020, pp. 6–7) explain:

Such considerations change the theoretical notion of market from a mere economic mechanism able to solve allocation and coordination problems of collectivity (better than alternative mechanisms) to an enactive cognitive institution. Considering the perspective of the agents involved, the market as cognitive institution is like Clark’s extended mind notion of scaffolded cognition insofar as it (i) extends the participant’s cognitive processes of economic reasoning, and (ii) both constrains and enables the actions and interactions of embodied and embedded agents in the economy. The enactive notion of cognitive institution involves something more, however.

According to Slors (2019), the main difference between an extended mind conception of institution as a causal–functional unit, and the enactive model of socially extended cognition, is that rather than functional integration, the socially extended mind is characterized by task dependency, which is “the extent to which the intelligibility of a task depends on a larger whole of coordinated tasks” (p. 1190). He considers this a normative notion tied to specific roles in an overall organization or system. For example, in the legal system a judge cannot do what she does or fulfill her task without others (defense attorneys, prosecutors, clerks, juries, etc.) doing what they do. We have argued, however, that both functional integration and task dependency are matters of degree, and that even if the extended mind concept of institution is characterized by a high degree of functional integration in contrast to the high degree of task dependency in the socially extended concept of institution, the more important difference concerns interaction. Granted that these differing degrees and differing types of agent–environment or agent–institution relations may recursively affect social interaction, the institutions themselves are enacted by just these embodied, material and social interactions, and it is this that reflects the essential difference between the conceptions of topological versus autonomous interaction. Specifically, we will argue that interaction characterized by different scales of autonomy, and therefore the socially extended institutional process characteristic of real socially extended institutions, cannot be simulated.

3 Discussion: simulated interaction at stake

Complex phenomena may emerge from elementary rules. Herbert A. Simon used the effective anecdote of the ant on the beach to capture this point: the behavior of an ant on a beach looks very complex to an observer, as the ant avoids all the obstacles (such as pebbles and dips) and goes unerringly to its objective. Yet its complex behavior does not require complex rules, as the ant simply follows a minimal rule of turning left or right when it meets an obstacle. Its overall intelligent behavior, based on this elementary rule, is determined by the environment to which the ant is adapted.
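Simon's anecdote can be rendered as a minimal grid sketch, assuming a hypothetical beach map (`#` marks pebbles) and a simple rule: move toward the far side (east) when the way is clear; otherwise sidestep, switching the sidestep direction when that way is blocked too. The map, `ant_on_beach` and `render` are illustrative and not Simon's own formulation; the trajectory can look intricate, while the rule fits in a few lines.

```python
def ant_on_beach(beach, start_row, max_steps=1000):
    """Walk from column 0 toward the last column of a grid map.

    Rule: step east if the cell ahead is free; otherwise sidestep north or
    south, flipping the sidestep direction when that way is blocked as well.
    """
    rows, cols = len(beach), len(beach[0])
    r, c = start_row, 0
    side = -1  # current sidestep direction: -1 = north, +1 = south
    path = [(r, c)]
    for _ in range(max_steps):
        if c == cols - 1:
            break  # reached the far side of the beach
        if beach[r][c + 1] != "#":
            c += 1  # way ahead is clear
        else:
            nr = r + side
            if not (0 <= nr < rows) or beach[nr][c] == "#":
                side = -side  # blocked sideways too: turn the other way
                nr = r + side
            if 0 <= nr < rows and beach[nr][c] != "#":
                r = nr
        path.append((r, c))
    return path

def render(beach, path):
    """Draw the ant's trajectory as '*' on top of the beach map."""
    grid = [list(row) for row in beach]
    for r, c in path:
        grid[r][c] = "*"
    return "\n".join("".join(row) for row in grid)

beach = [
    "..........",
    "...#......",
    "...#...#..",
    ".......#..",
    "..........",
]
path = ant_on_beach(beach, start_row=2)
print(render(beach, path))
```

All of the apparent "navigation" comes from the layout of the map; changing the obstacles changes the path without any change to the rule.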

Agent-based models are based on this type of rule-following logic; they are used to analyze collective behaviors and to model societies of organisms as emergent phenomena. For instance, we can easily model ant colonies by stigmergy (e.g., De Nicola et al. 2020; Bonabeau et al. 1999), where one agent’s action triggers the succeeding action of another agent (or of the same agent): an ant path results from pheromone traces left by preceding ants, so that the resulting trail is self-organized. Such traces constitute precisely the vehicles of interaction, and they transmit information about the path to be followed. But, as we are going to argue, while many collective behaviors can be easily simulated, there are others that cannot be, and the reasons are not just a matter of the intrinsic complexity of the phenomenon under investigation.
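Stigmergy of this kind is often illustrated with a "double bridge" setting, in which ants choose between a short and a long branch to a food source. The sketch below is a simplified version under our own assumptions. Each ant picks a branch with a probability that increases with the pheromone already on it, using a nonlinear choice rule of the kind discussed in the swarm-intelligence literature (e.g., Bonabeau et al. 1999). Trails evaporate at a fixed rate, and, as a crude proxy for faster round trips, the short branch receives more pheromone per crossing. The parameter values (`k`, `alpha`, `evaporation`) are illustrative.

```python
import random

def p_short(tau_short, tau_long, k=20.0, alpha=2.0):
    """Probability that an ant takes the short branch, given the pheromone
    on each branch; k sets how much pheromone is needed to bias the choice."""
    a = (k + tau_short) ** alpha
    b = (k + tau_long) ** alpha
    return a / (a + b)

def double_bridge(n_ants=1000, len_short=1.0, len_long=2.0, evaporation=0.01, seed=0):
    """Ants cross one at a time; each crossing reinforces the chosen branch."""
    rng = random.Random(seed)
    tau = {"short": 0.0, "long": 0.0}
    choices = []
    for _ in range(n_ants):
        if rng.random() < p_short(tau["short"], tau["long"]):
            branch, length = "short", len_short
        else:
            branch, length = "long", len_long
        choices.append(branch)
        for key in tau:
            tau[key] *= 1.0 - evaporation  # existing trails fade
        # Crude proxy for faster round trips: deposit inversely proportional to length.
        tau[branch] += 1.0 / length
    return tau, choices

tau, choices = double_bridge()
late = choices[-100:]
print("pheromone:", {key: round(value, 2) for key, value in tau.items()})
print("share of the last 100 ants on the short branch:", late.count("short") / len(late))
```

No ant compares the two branches. Any preference for the short branch that builds up over a run is carried by the pheromone traces themselves.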

3.1 The embodied dimension of incompleteness

A simulation model involves writing a computer program, running it and observing the outcomes. Such a model should be conceived not as a veridical representation of the outside world but as an analytical tool.Footnote 4 Indeed, all such models are, by definition, abstractions, created to capture specific phenomena of interest; the modeler selectively specifies what should be included in the model and what should be left out, preserving its elegance. In more detail, let us consider a real-world phenomenon under investigation; call it “R.” The modeler specifies which things (variables and their relations) should account for the phenomenon under investigation. Such variables and relations are included in the simulation S1, which represents the modeler’s theory of R. The process is often iterative; indeed, the model S1 could be inadequate to capture R. Hence, the model S1 is often revised (with a novel specification) and/or integrated (by adding other variables and relations) through the creation of a new model, S2. The iterated process normally stops when a model presents a balance between elegance and predictive/explanatory power. Hence, models are always incomplete, as they are built to analyze specific phenomena, not to reproduce the real world. A simulation model that includes everything (any possible feature in any situation) is simply not a model, stricto sensu, as a model works precisely by its elegance (how much it explains or predicts given a minimal number of ingredients). From a pragmatic point of view, the modeler, interested in a specific phenomenon, assumes that the model should include the necessary and sufficient conditions to capture the phenomenon under investigation, and he/she eventually revises or extends the model when he/she realizes that the model does not work. Such an iterative process preserves “Occam’s razor,” as the simulation builds upon the minimal number of elements.

Now let us apply this logic to how an ant colony physically behaves in the natural environment in search of food. We know that a very elegant simulation model is able to replicate ants’ collective behavior; indeed, we simply need to specify proximity rules (where each ant follows the chemical signal of the preceding ant). The result is an emergent and ordered collective behavior, as minimal agents can generate complex collective behaviors (De Nicola et al. 2020; Heylighen 2016). Figure 1 is a stylized representation of such emergent, ordered, collective behavior.
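
How little such a proximity rule needs can be illustrated with a minimal sketch in Python. As an assumption of this sketch, the spatial trail-following of Fig. 1 is replaced by a simpler binary-choice setting of the kind often used to illustrate stigmergy (two branches, A and B, between nest and food): each ant picks a branch with a probability that grows nonlinearly with the pheromone already deposited there, and then reinforces that branch. The function names (`choice_probability`, `simulate`) and the parameters `k`, `n`, `deposit` and `evaporation` are hypothetical and chosen for illustration only; they are not taken from De Nicola et al. (2020) or Bonabeau et al. (1999).

```python
import random


def choice_probability(pher_a: float, pher_b: float,
                       k: float = 20.0, n: float = 2.0) -> float:
    """Probability that an ant takes branch A rather than branch B.

    Hypothetical nonlinear binary-choice rule: the more pheromone a branch
    already carries, the more likely it is to be chosen. k (attractiveness
    of an unmarked branch) and n (nonlinearity) are illustrative values.
    """
    weight_a = (k + pher_a) ** n
    weight_b = (k + pher_b) ** n
    return weight_a / (weight_a + weight_b)


def simulate(n_ants: int = 1000, deposit: float = 1.0,
             evaporation: float = 0.0, seed: int = 0):
    """Send ants one at a time; each reacts only to the trail left by its predecessors.

    Returns the final pheromone levels and how many ants took each branch.
    """
    rng = random.Random(seed)
    pheromone = [0.0, 0.0]  # trail strength on branch A (index 0) and B (index 1)
    counts = [0, 0]         # number of ants that took each branch
    for _ in range(n_ants):
        p_a = choice_probability(pheromone[0], pheromone[1])
        branch = 0 if rng.random() < p_a else 1
        counts[branch] += 1
        # The environment is the only channel of "communication":
        # old trails fade, then this ant reinforces the branch it used.
        pheromone = [p * (1.0 - evaporation) for p in pheromone]
        pheromone[branch] += deposit
    return pheromone, counts


if __name__ == "__main__":
    pheromone, counts = simulate()
    share = max(counts) / sum(counts)
    print(f"ants per branch: {counts}, dominant-branch share: {share:.2f}")
```

Running the script usually shows traffic concentrating on one branch: an ordered pattern obtained from a one-line rule, with no ant representing the pattern. Notice also that nothing in the sketch describes a body; the same rule would run unchanged for agents with two legs, six legs or four wheels, which is why this kind of phenomenon admits an elegant simulation.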

Now, let us consider the phenomenon portrayed in Fig. 2: ants often create living bridges made of their own bodies, by linking their legs, to cross the empty spaces they encounter. Are we able to create an elegant computational model of this kind of phenomenon, a self-sustaining bridge made of ants?

When it comes to modeling how the ants build a bridge using their own bodies, things are more complicated, and the elegance decreases. The model must include the shapes of the ants, as the legs must present very specific morphological features (shapes, angles, etc.) to tangle. But this would not be enough. Biomechanical properties (degrees of freedom) must also be added to the model (ants must be flexible enough to tangle but strong enough to avoid breaking, etc.). Hence, the number of features to be included in the model is significant. Moreover, nothing guarantees that the modeler will be able to include all the necessary variables while avoiding ad hoc assumptions. In particular, the modeler should avoid assuming that the stylized legs must intersect and tangle near an empty space; such an assumption would likely be adopted to compensate for a phenomenon which is not “entailable” by the theory. Likewise, the modeler should avoid assuming that (a) the ants consciously perceive an empty space, (b) in order to cross the space, they opt for building a bridge with their own bodies, and (c) when building the bridge, they deliberately coordinate their actions. Such dynamics would be based on a cognition which is far from minimal, and they would require ants to behave like engineers endowed with high-level cognitive faculties. Hence the fundamental point is not whether the bridge can be emulated by a simulation (it can), but rather how difficult it is to computationally entail the bridge from the cognitive theory assumed for ants’ behavior.

To see this, consider the difference between the first collective phenomenon, in Fig. 1, and the second, in Fig. 2. In both phenomena, and in both potential models, the ants are minimal intelligence agents; indeed, in both models the ants behave through stigmergy mechanisms (they follow each other through the chemical signals they leave). But while in the first case a minimal rule accounts for the emergence of the phenomenon (the first model is very elegant and admits a viable simulation), the second does not admit an easy simulation, as it does not allow an elegant specification (note 5). In the case of the bridge, the model is very complex, yet it accounts for a phenomenon that, on its face, is no different from other collective phenomena which, conversely, require only minimal rules. We may reasonably be tempted to posit that this kind of phenomenon (the bridge made of ants) depends a great deal on the “six-leggedness” of the ants, and not only on the information conveyed through chemical signals (note 6). Information conveyed through chemical signals is a necessary but not a sufficient condition for creating such a structure. In addition, we need the “six-leggedness,” and in particular some specific morphological and biomechanical traits, to allow this type of bridge to come into being. On this point, notice that for ordered walking paths (Fig. 1) the morphological features are almost irrelevant: ants endowed with two legs, six legs or four wheels will generate more or less the same path pattern. But when it comes to the bridge (Fig. 2), very specific morphological and physiological features are decisive for its emergence; indeed, if ants had two legs (note 7) or four wheels, the bridge would be impossible (or, in the best case, a very different type of bridge would be built).

Importantly, this kind of phenomenon (the self-sustaining bridge made of ants) still requires only zero/minimal intelligence, as the ants build the bridge without a plan and without sophisticated reasoning processes; they are just following chemical signals. To some extent, ants can build this type of bridge precisely because they have minimal intelligence plus six legs. So, at the very least, one needs zero intelligence plus embodiment.

Accordingly, the minimal intelligence of the ant is not disembodied; it generates a substantively rational outcome precisely because it is complemented, necessarily, by embodied structures. And coordination does not occur internally, on the basis of an abstract, high-level cognition, but is rather a matter of the physical interaction of legs, occurring on the fly. The specific morphological features of the ant are requisite conditions for creating the ant bridge. If the morphological and biomechanical features (such as the degrees of freedom among the different components of a leg) were slightly different, building the bridge might be impossible; that is to say, this type of bridge is enabled by the specific features of the ant’s legs: their shape, length, degrees of freedom, weight, flexibility, etc. are precisely the conditions that allow such bridging. If these enabling conditions are missing, the bridge is not possible (or at least a different type of bridge is created).

3.2 Codetermination of minimal agents and institutions

The idea that institutions are emerging phenomena is part of the complexity approach to economics, as suggested by Gilbert & Terna (2000): “institutions such as the legal system and government that are recognizable at the ‘macro-level’ emerge from the ‘micro-level’ actions of individual members of society” (2000, p. 61). The relationship between a minimal agent and the external institution (which does the work) has traditionally been conceived in a coevolutionary manner: “an institution such as government self-evidently affects the lives of individuals. The complication in the social world is that individuals can recognise, reason about and react to the institutions that their actions have created” (Gilbert & Terna 2000, p. 61). In the last two decades, such coevolutionary dynamics have been widely implemented in dedicated research projects aiming to add ecological dimensions to the simulation of institutional mechanisms (e.g., Ghorbani et al. 2013), relying on Ostrom’s framework for institutional analysis (Ostrom 2005).

Such attempts, despite their success in both academic and policy-making communities, are not persuasive in strict coevolutionary terms, because they miss a richer view of what intelligence is. To understand this point, let us consider, mutatis mutandis, the ant’s bridge as an institution. We easily realize that the bridge, while not properly an institution, possesses a number of features typical of institutions: it is an external structure that scaffolds the “cognitive burden” an ant faces in solving the problem of crossing the empty space between two surfaces. To be precise, building the bridge involves functional integration, and in that sense it may be like an institution of the sort that Clark describes (see Sect. 2). Notice that, if ants could employ higher degrees of intelligence, they would have two main options:

(1) The ants would identify alternative paths, choose one of them, plan the trip and implement the actions needed to cross the space;

(2) The ants would design and build a bridge (perhaps with a centralized logic, where one ant operates like an engineer designing the bridge and coordinating the operations).

As it is, however, ants neither plan complex alternative paths nor design and build bridges (as an engineer might do). The bridge is a simple product of stigmergy, as each ant continues to follow the chemical signal of the preceding ant; stigmergy generates the process of bridge construction when the ants start to climb on each other because of the presence of an empty space. The bridge is enacted by the ants, and it is what allows the ants to remain “dumb,” off-loading their cognitive processes: the ants continue to blindly follow their chemical signals, and yet they solve a relevant problem (crossing the empty space between two surfaces) that is definitely too complex for their minimal cognitive endowment. The ant’s bridge shows that the minimal intelligence agent and the institution are codetermined, and this codetermination presents some stylized features:

1. The structure is an emergent phenomenon, not based on design and plans;

2. The emerging structure requires that the minimal computational intelligence of the agents be coupled with embodied endowments and mechanisms;

3. The structure works in place of the agent’s intelligence;

4. The agent’s minimal intelligence is preserved by the existence of the structure.

The ant bridge emerges from the local interaction of the ants (which continue to follow their chemical signals) and is possible because the specific morphological traits of the ants can be contingently employed to build this type of structure. And, very importantly, this type of structure preserves the minimal intelligence of the ants, as it allows them to continue to blindly follow their chemical signals. The bridge is endogenous: it runs on the same chemical instructions that characterize other collective behaviors of ants, and it requires some embodied endowment to be built, hic et nunc. The minimal agent creates a structure that is functional to the preservation of its minimal intelligence. Such a structure reflects a balanced cost–benefit trade-off (Reid et al. 2015).

3.3 Interaction, unprestatability and unpredictability

Can we create a simulation able to spontaneously generate a bridge made of ants? Or, to put it differently, are we able to simulate the coevolution between a minimal intelligence agent and the external structure that works in place of its cognition? As we anticipated in the previous sections, the difficulty of simulating such a bridge is not strictly a matter of the intrinsic complexity of the phenomenon under investigation. In other words, the difficulty does not lie in creating a complex model, but in the unpredictable dimension of the phenomenon. The notion of unpredictability that we invoke here is not related to well-known (computationally implementable) mechanisms, such as deterministic chaos. It pertains to the evolutionary matter of unprestatability, that is, the impossibility for the modeler of specifying ex ante the properties of a system (specifically an organism) in its dynamical interplay with the environment. Indeed, several functional properties of a system remain latent and unspecified until they have a useful result in a contingent physical environment; they cannot be computationally deduced. Such latent properties have been conceptualized as the “adjacent possible.” As such, they do not admit entailing laws (Longo et al. 2012; Kauffman 2000) and are instantiated by exaptative processes. While adaptations are traits shaped by natural selection for a current use (where such a use has been prespecified within the theory), exaptations are “characters, evolved for other usage (or no function at all) and later ‘co-opted’ for their current role” (Gould & Vrba 1982, p. 6).

The notion of exaptation must be understood in light of the critique of panselectionism and adaptationism, which assume (without sufficient justification) modularizable organisms characterized by enumerable and statable functions, where the researcher prespecifies the properties of the system under investigation (Gould & Lewontin 1979). A fundamental non-adaptationist advance in evolutionary theory has been the separation of structure (e.g., how legs are) from function (e.g., how legs are used) in an organism, and the introduction of the notion of “spandrels” (note 8). Spandrels are phenotypic traits that arise as a by-product of the evolution of some other trait, rather than as a product of adaptive selection (Gould & Lewontin 1979). The need to avoid reducing evolutionary mechanisms to panselectionist logic, and to adopt a more pluralistic and non-reductionist view of evolution, has been conceptualized as the “extended evolutionary synthesis” (Laland et al. 2015).

Consistent with such arguments, we argue that some functions and structures (i.e., some institutions) are not computationally prestatable: while real ants build bridges, artificial ants do not, specifically because their minimal computational intelligence is not enough to create such structures if they lack material legs (with the specific arcuate shape and other morphological and physiological features) that make specific types of interaction possible in a specific situation. In other words, the bridge can be conceptualized as an adjacent possible; it is the product of minimal intelligence plus the six-leggedness of the ants, and it enables specific types of interactions which, by virtue of their situated and embodied nature, are not prestatable and, a fortiori, do not admit a non-trivial computational formulation (note 9).

We note, however, that specific aspects of the ant bridge do admit a simulation (e.g., Ichimura & Douzono 2012). Such aspects are not based on the type of non-computational interaction that we discuss here. We can easily simulate the topology of interactions (among stylized, dot-like ants moving in a Cartesian environment through local signals) but not the situated interaction per se. The interactions through which the ants build the bridge depend on how all the factors (minimal intelligence, body and environment) are coupled so that the ants can enact the situation in which they build the bridge. Such couplings cannot easily be simulated in a way that yields the bridge as a computationally emergent phenomenon.

To better understand this point, imagine that the specific bridge, in order to be built, requires further conditions in addition to the morphology of the legs and their biomechanical properties (discussed in the previous sections). Let us hypothesize that, to build the bridge, each ant needs a pulse frequency of the dorsal vessel (note 10) within a specific range, in order to obtain the muscular tone able to support the bridge: a higher frequency would leave the muscles too inflexible to tangle, and a lower frequency would result in insufficient tone to support the bridge. Again, can we reasonably expect the modeler to include in the model the whole physiology of ants (along with a fine-grained simulation of the pulse frequency of the dorsal vessel) in order to simulate the bridge? We think not.

The typical objection to this argument is that we do not necessarily need such a level of detail, as a model is an abstraction and is incomplete by definition; hence, such physiological features are irrelevant and can be neglected for the specific purpose (simulating the bridge). Our rebuttal is not about abstraction and incompleteness but about the simulation’s purpose: the modeler needs to prestate the existence of the phenomenon (the bridge in Fig. 2), which should instead result from the simulation as an emerging property; hence, he/she needs to introduce some ad hoc assumptions. Notice that in the case of the ants’ walking patterns in Fig. 1, the researcher does not need to assume, ex ante, the emerging result of the simulation (ordered walking patterns). Hence, we can use simulation to model a number of collective phenomena, but there are other phenomena (like the bridge) that cannot easily be simulated, and that do not admit entailing laws (see Longo et al. 2012), because they make sense only in their embodied and situated dimension.

If this is the case for the coupling of bodies and physical environments of fish (with scales; see note 9) and ants (with legs), how much more complex is human social autonomy (grounded in human anatomy and in more contingent human factors), an autonomy that one would have to model in order to simulate economic phenomena? Attempts to create a virtual human in a virtual human society would require meeting multiple correspondence claims that are unrealizable. As Kugele et al. (2021) argue, one would need to simulate (1) virtual humans who are autonomous agents with “control structures” (Newell 1973) that are sufficiently similar to real humans (there are some hugely significant differences between computer and human intelligence); (2) their virtual environments, given that the complexity of real environments may determine a good deal of behavior, as Simon (1996) shows in his example of ants on the beach; and (3) the interactions that enact those environments, so that they are sufficiently similar to those of humans in the physical world. One would also have to simulate (4) their experiential and evolutionary histories, such that they result in sufficiently humanlike adaptations and attunements; (5) social and cultural contexts within the virtual environments that afford and normatively define humanlike opportunities for interaction with other virtual humans and their virtual world; and (6) institutional contexts, including norms, practices and related technologies available in the virtual environment, that enable and at the same time limit the activities of virtual humans.

While it may be theoretically possible to engineer virtual humans and simulated environments that satisfy [these correspondence conditions], our current technological limitations and fragmentary understanding of minds will severely constrain the obtainable correspondence between real and virtual humans for the foreseeable future…. [Methodologically,] researchers [have] to either simulate all aspects of the real world, or defend claims about the irrelevance of those things they have neglected to include. Since the former is out of the question, a researcher’s only recourse is to argue for the sufficiency of their impoverished renderings of humans and the real world (Kugele et al. 2021).

Such a defense is practically untenable, and perhaps theoretically untenable as well, if it is not possible to determine a priori which factors make a significant difference.

Such an idea is also consistent with the notion of the “black swan,” a phenomenon that is rare, has a large impact and is not predictable (Taleb 2007). Specifically, as discussed by Taleb, the hindsight fallacy conveys the illusion that unpredictable phenomena can be modeled, because the modeler identifies and understands the phenomenon only ex post, once it has happened; an ex post rationalization unwittingly takes the place of the modeler’s ex ante prerogatives. To better understand this point, imagine that the bridge were still a totally unknown phenomenon, never seen by any researcher: can we reasonably hypothesize that the modeler would be able to create a fine-grained simulation model in which such a (still unknown) bridge appeared as an emerging computational outcome? We think that this is highly improbable.

Felin et al. (2014, p. 273) link this to the classic AI frame problem and offer the familiar example of the screwdriver. If you hand me a screwdriver, will you be able to predict what I will do with it? As they put it, “the number of uses of a screwdriver (as forms and functions of uses and activities) are both indefinite and unorderable.” One could “screw in a screw, wedge open a door, open a can of paint, tie to a stick as a fish spear, rent to locals and take 5 percent of the catch, kill an assailant, and so forth.” I could also ask why you are handing me the screwdriver, thank you profusely for the gift, or just hand it back to you and say, “no thanks,” etc. What I will do may depend on the situation, my skills, my familiarity with screwdrivers, my mood, the history of our relations, etc. Generally speaking, such an unprestatable multitude of possibilities is a central feature of the dynamical complexification of modern economic systems, where “the implicit frame of analysis for the econosphere changes in unprestatable and non-algorithmic ways” (Koppl et al. 2015, p. 1).

Hence, the interactions that constitute such processes cannot be reduced to an algorithm, because the nature of the coupling—between the agent and the physical and social structure—is situated and embodied, in a way that assumes the relational autonomy of the agent. The (unpredictable) social/intersubjective relations are what undermine simulation, and this includes social/cultural/normative factors that loop back into the bodily dimension.

4 Final remarks

A potential critique of the ant examples is that a bridge is a physical object and, as such, its simulation requires accounting for the materiality of the ants’ legs. What about more abstract (and perhaps normative) phenomena? The trivial and totally inadequate answer is that economics is largely a science of material objects and physical interactions. What we want to emphasize here, however, is that while some collective behaviors can be easily simulated, the simulation of others is very problematic, because they involve some degree of unpredictability and, because of unprestatability, do not admit entailing laws (cf. Koppl et al. 2015).

Consider also that our claim is theoretically plausible because of its prudence: the trivial claims that “everything can be simulated” or that “nothing can be simulated” require more compelling justification than does our thesis, which reasonably posits that some specific interactions are more problematic than others when it comes to simulation. And we contend that the nature of such a problem is due not merely to the intrinsic complexity of the phenomenon under investigation, but to the difficulty of simulating embodied and situated factors, without resorting to ad hoc assumptions, in order to explain emerging properties.

Our thesis is fully consistent with several prominent views in the contemporary debate about evolution and cognition; in particular, we endorse the view that many emerging phenomena of the ecosphere cannot be computationally entailed (Longo et al. 2012; Kauffman 2000), and we rely on the idea that cognition and intelligence are strongly embodied and enactive and do not admit of cognitivist (computationalist) representability (Hutto and Myin 2017; Varela, Thompson & Rosch 1991).

Our claim is that there is a quantum of factual emergence that cannot be computationally deduced on the basis of entailing laws, because a number of interactions, being situated and embodied, are unprestatable and unpredictable. Note that the observation that situated and embodied interactions are, per se, richer than their simulations may be trivial. What matters here is that complex simulations (such as realistic, videogame-like, artificial ant societies) do not capture the irreducible emergence of important phenomena that constitute the agent–environment coupling.

The unpredictability of interactions presents theoretical and methodological implications.

1. From the theoretical point of view of strong embodied cognition, there is no genuine interaction without autonomous agents, where autonomy is conceived as relational autonomy: the autonomy of one agent depends on its relations with other agents (see Sect. 2.2). The degree of autonomy depends on social relations, as well as on the bodily and material factors that constitute a specific situation.

2. From the methodological point of view, the fact that a number of interactions cannot easily be simulated does not mean that no interaction can be. But deciding, ex ante, which types of interactions can be simulated and which cannot is not an ontological matter; it depends largely on the specific social and economic phenomenon under investigation. And there are a number of relevant phenomena that do not admit any non-trivial simulation. The relevant issue is to what degree artificial settings can simulate “agents in the wild” without a loss of salience, so as to preserve the advantages of simulation methodologies.

Computer simulations, in particular multi-agent systems, are based on the assumption that understanding the organization of social and economic systems involves model-building. We definitely do not reject such an approach. We simply want to emphasize that while a number of phenomena can be simulated (we can simulate many features of an ant society), others are difficult to simulate (the ant bridge), and still others cannot be simulated at all. The boundary between difficulty and impossibility, we think, is in a sense asymptotic. We are not assuming that there is a clear threshold of (non-)simulability, and we remain agnostic about the possibility of clearly establishing where such a threshold lies. Rather, the pragmatic point is that when simulation models become problematic because of embodied and situated factors, their elegance radically decreases, and they are no longer useful.

5 Conclusions

Why do 200,000 simple ants make a complex society, but 200,000 simple neurons make only the elementary brain of an ant? Or, why can a limited number of simple interacting agents make a complex structure, but a limited number of simple neurons make only a minimal intelligence organism? The problem is not trivial and requires a number of arguments related to different levels of analysis. Probably the “minimal answer” is that the 200,000 interacting elements—whether they are neurons or ants—are not sufficient to account for a large component of the observable phenomenon, and there is some embodied coupling with external structures that makes such numbers meaningful; in other words, embodied intelligence is always more than minimal intelligence.

Hence, when it comes to simulating kinds of intelligence that constitutively require embodied factors, simulations that include bodily details may be a viable strategy. To use our example again, if we cannot easily entail the bridge made of ants through computation (unless we introduce ad hoc assumptions), we can instead opt for an exploratory simulation to discover the hidden properties of the system, that is, properties not specified as ex ante hypotheses (cf. Froese & Gallagher 2010; Froese & Ziemke 2009). This requires building robotic ants that have the morphological features of real ants and are endowed with the same minimal (cognitive) rules, such as following the chemical signal of the preceding ant.

In this case, we can reasonably expect to generate the bridge without relying on ad hoc assumptions. However, we must also expect the specific bridge produced by such a simulation to differ from the real one, depending on how closely the artificial legs’ features, including their materiality, match those of real legs. For instance, if we make robotic ants out of iron, we can reasonably expect the emergence of a larger and more robust bridge than the biological bridge, which rests on real legs with their specific flexibility and resistance.

Bio-inspired robotics (Husbands et al. 2021) is consistent with our framework (although we do not articulate here which type of embodiment is assumed in specific approaches to robotics). We think that swarm robotics, a growing domain of research (Bredeche et al. 2018; Brambilla et al. 2013), faces several problems of the kind we are discussing here (note 11).

In conclusion, a number of emerging phenomena are not prestatable: even if we confine minimal intelligence within computational boundaries, these phenomena require embodied and situated interactions, not subject to entailing laws, in order to come into being. We cannot expect specific types of emergence if we do not add to our model a quantum of complexity and unpredictability that cannot be algorithmically compressed.

Data availability

Not applicable.

Code availability

Not applicable.

Notes

ZI is based on the cognitivist paradigm where intelligence is just computational (ZI = zero computation). As we will show in the next sections, intelligence is more than computation, and ZI works (if it works) not just because of the arrangement of the environment but because the system it is part of, is intelligent—embodied processes are intelligent in their coupling to the environment. In other words, the ZI system can be also conceived as zero computation, plus embodied intelligence operating in a structured environment.

The definition of bounded rationality, known through the scissors metaphor, is based on an adaptive argument: “Just as a scissors cannot cut paper without two blades, a theory of thinking and problem solving cannot predict behavior unless it encompasses both an analysis of the structure of task environments and an analysis of the limits of rational adaptation to task requirements” (Newell & Simon 1972, p. 55).

This is not the place to explicate the detail of how these versions of EC differ from or complement each other. Most simply, however, we can say that once it is acknowledged that the body plays a significant role in cognition (embodied approaches) and that the environment in some way plays a significant role (embedded/ecological approaches), there is a significant debate about the nature of the relation between body and environment, where enactive views focus on dynamical coupling, and extended views focus on functional integration. We return to these debates below.

Agent-based simulations can be used for explanatory or predictive purposes. Furthermore, they are useful to generate novel hypotheses (Gilbert & Troitzsch 2005).

Notice that a high-fidelity but non-parsimonious model, is not a model stricto senso but resembles a digital reproduction (more similar to the ones of realistic video games). Such a type of “simulation” presents marginal scientific utility. A hypothetical simulation that includes everything, like a 1:1 map, of the phenomenon is trivial; it not only presents marginal elegance (in Occam’s razor terms), but it is also very difficult to manage.

One could object that a researcher could also create a simulation of the bridge. For instance, he/she could create a stylized artificial ant (such sphere-like ants able to intersect) and, iteratively, add more and more morphological features, to improve the model. We think that assuming such an intersection is problematic as it represents an ad hoc assumption. Indeed, the bridge should be treated as an emerging and unexpected outcome of the model and should not be assumed by the researcher. The bridge should be treated like the ordered patterns of walking ants emerging according to simple proximity rules. If the researcher assumes that the ants must intersect to shape a bridge (and probably, the modeler must also establish how and when), the elegance of the model decreases, and the model becomes an ad hoc reproduction of the phenomenon under investigation.

Other species (such as human beings, evolutionary endowed with legs and arms) clearly are not able to build these kinds of bridges.

The original meaning of “spandrel” is an architectural by-product; spandrels are the roughly triangular spaces between the top of an arch and the ceiling (like the well-known spandrels in the Basilica of San Marco Basilica in Venice cited by Gould & Lewontin 1979).

To better understand the limits of simulability, consider the following example, adapted from a talk by Stuart Kauffman (at the Kreyon Open Conference in Rome). Imagine an ancestral fish endowed with a very minimal intelligence, so minimal that its actions consist simply in moving its fins so as to swim in the direction of the water flow and eating the plankton it randomly finds. Such a phenomenon is easy to model: we can create a population of these fish and simulate the system under different conditions (reproductive rates, distribution of plankton, etc.). Now imagine that one individual is born with more rugged scales. Because of these scales, it gets caught on the surface of a rock and can no longer move. We could reasonably expect it to die, since it will not be able to eat plankton. But this idiosyncratic event (getting caught on a rock) produces quite a different outcome: precisely because it is caught, it is able to eat more plankton than the other fish. While the other fish move in the same direction as the plankton (since both follow the water flow), this individual, held fixed against the flow, has the plankton carried past it. Having such rugged scales thus represents an evolutionary advantage that could be retained in future generations, giving rise to a population of fish that live fixed on the rocks. The question, again, is how we could simulate this kind of evolutionary phenomenon by means of agent-based models. Our answer (fully consistent with Kauffman’s) is that we cannot.
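The part of this example described as easy to model can be sketched as a toy agent-based simulation. The following Python sketch is purely illustrative: the function names (`simulate`, `circular_distance`) and all parameter values (`flow`, `jitter`, `radius`, population sizes, a periodic one-dimensional channel) are hypothetical choices, not taken from Kauffman’s talk or from any published model. Free fish drift with the water flow plus a small random wiggle, plankton drifts passively with the same flow, and one fish is fixed in place.

```python
import random


def circular_distance(a: float, b: float, length: float) -> float:
    """Shortest distance between two positions on a ring (periodic channel)."""
    d = abs(a - b) % length
    return min(d, length - d)


def simulate(n_free=20, n_plankton=200, length=100.0, flow=1.0,
             jitter=0.1, radius=0.5, steps=300, seed=0):
    """Toy model of the fish example (all parameters are hypothetical).

    Returns (meals eaten by each free fish, meals eaten by the stuck fish).
    """
    rng = random.Random(seed)
    plankton = [rng.uniform(0, length) for _ in range(n_plankton)]
    free = [rng.uniform(0, length) for _ in range(n_free)]
    # Hand-coded assumption: this fish is fixed to a rock and never moves.
    stuck = rng.uniform(0, length)
    free_meals = [0] * n_free
    stuck_meals = 0

    for _ in range(steps):
        # Plankton drifts passively with the water flow.
        plankton = [(p + flow) % length for p in plankton]
        # Free fish swim with the flow, plus a small random wiggle of the fins.
        free = [(f + flow + rng.gauss(0, jitter)) % length for f in free]

        # Feeding: plankton within `radius` of a fish is eaten and respawns
        # at a random position, keeping plankton density constant.
        # The stuck fish is checked first, then the free fish in order.
        for i, p in enumerate(plankton):
            if circular_distance(p, stuck, length) <= radius:
                stuck_meals += 1
                plankton[i] = rng.uniform(0, length)
                continue
            for j, f in enumerate(free):
                if circular_distance(p, f, length) <= radius:
                    free_meals[j] += 1
                    plankton[i] = rng.uniform(0, length)
                    break

    return free_meals, stuck_meals


if __name__ == "__main__":
    free_meals, stuck_meals = simulate()
    print("stuck fish:", stuck_meals, "| best free fish:", max(free_meals))
```

With these settings the stuck fish, which has plankton carried past it by the flow, eats far more than any free fish. The sketch obtains this result, however, only because the fixed fish is written in by hand as an agent whose position never changes; nothing in the model allows rugged scales to open up that possibility. This is the kind of prestated, ad hoc assumption discussed in the note on ant bridges.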

The dorsal vessel is the “heart” of the ant.

Swarm robotics is normally investigated for pragmatic purposes, mainly technological applications based on optimization problems (Dorigo et al. 2020). Here, swarm intelligence is discussed for scientific purposes related to economics.

References

Akerlof GA (1970) The market for “lemons”: quality uncertainty and the market mechanism. Q J Econ 84(3):488–500

Alsmith AJT, De Vignemont F (2012) Embodying the mind and representing the body. Rev Philos Psychol 3(1):1–13

Anderson M (2010) Neural reuse: a fundamental organizational principle of the brain. Behav Brain Sci 33(4):245

Ariely D (2008) Predictably irrational: the hidden forces that shape our decisions. HarperCollins, New York, NY

Axtell RL, Epstein JM (1994) Agent based modeling: understanding our creations. The Bulletin of the Santa Fe Institute, Winter: pp 28–32

Barsalou LW (1999) Perceptual symbol systems. Behav Brain Sci 22(4):577–660. https://doi.org/10.1017/S0140525X99002149

Barsalou LW (2008) Grounded cognition. Annu Rev Psychol 59:617–645

Berlucchi G, Aglioti SM (2010) The body in the brain revisited. Exp Brain Res 200(1):25–35

Bonabeau E, Dorigo M, Theraulaz G (1999) Swarm intelligence: from natural to artificial systems. Oxford University Press. https://doi.org/10.1093/oso/9780195131581.001.0001

Brambilla M, Ferrante E, Birattari M, Dorigo M (2013) Swarm robotics: a review from the swarm engineering perspective. Swarm Intell 7:1–41

Bredeche N, Haasdijk E, Prieto A (2018) Embodied evolution in collective robotics: a review. Front Robot AI 5:12

Brooks RA (1991) Intelligence without representation. Artif Intell 47(1–3):139–159

Casasanto D, Dijkstra K (2010) Motor action and emotional memory. Cognition 115(1):179–185

Chemero A (2009) Radical embodied cognitive science. MIT Press, Cambridge, MA

Clark A, Chalmers D (1998) The extended mind. Analysis 58(1):7–19

Clark A (1997) Economic reason: the interplay of individual learning and external structure. In: Drobak J, Nye J (eds) The frontiers of the new institutional economics. Academic Press, San Diego, pp 269–290

De Jaegher H, Di Paolo E, Gallagher S (2010) Can social interaction constitute social cognition? Trends Cogn Sci 14(10):441–447

De Nicola R, Di Stefano L, Inverso O (2020) Multi-agent systems with virtual stigmergy. Sci Comput Program 187:102345

Denzau AT, North DC (1994) Shared mental models: ideologies and institutions. Kyklos 47(1):3–31

Dorigo M, Theraulaz G, Trianni V (2020) Reflections on the future of swarm robotics. Sci Robot. https://doi.org/10.1126/scirobotics.abe4385

Felin T, Kauffman S, Koppl R, Longo G (2014) Economic opportunity and evolution: Beyond landscapes and bounded rationality. Strateg Entrep J 8(4):269–282

Froese T, Gallagher S (2010) Phenomenology and artificial life: toward a technological supplementation of phenomenological methodology. Husserl Stud 26:83–106

Froese T, Ziemke T (2009) Enactive artificial intelligence: investigating the systemic organization of life and mind. Artif Intell 173(3–4):466–500

Furubotn EG, Richter R (1994) The new institutional economics: bounded rationality and the analysis of state and society: symposium June 16–18, 1993, Wallerfangen/Saar: editorial preface. J Inst Theor Econ (JITE)/Zeitschrift für die gesamte Staatswissenschaft, 11–17

Gallagher S (2005) How the body shapes the mind. Oxford University Press, Oxford

Gallagher S (2013a) Enactive hands. In: Radman Z (ed) The hand: an organ of the mind. MIT Press, Cambridge, MA, pp 209–225

Gallagher S (2013b) The socially extended mind. Cogn Syst Res 25–26:4–12

Gallagher S (2018) Embodied rationality. In: Bronner G, Di Iorio F (eds) The mystery of rationality: mind, beliefs and social science. Springer, Berlin, pp 83–94

Gallagher S (2020) Action and interaction. Oxford University Press, Oxford

Gallagher S, Crisafi A (2009) Mental institutions. Topoi 28(1):45–51

Gallagher S, Mastrogiorgio A, Petracca E (2019) Economic reasoning and interaction in socially extended market institutions. Front Psychol 10:1856

Gallese V, Goldman A (1998) Mirror neurons and the simulation theory of mindreading. Trends Cogn Sci 2(12):493–501

Gallese V, Sinigaglia C (2011) What is so special about embodied simulation? Trends Cogn Sci 15(11):512–519

Gallese V, Mastrogiorgio A, Petracca E, Viale R (2020) Embodied bounded rationality. In: Viale R (ed) Routledge handbook of bounded rationality. Routledge, pp 377–390. https://doi.org/10.4324/9781315658353-26

Ghorbani A, Bots P, Dignum V, Dijkema G (2013) MAIA: a framework for developing agent-based social simulations. J Artif Soc Soc Simul 16(2):9

Gilbert N, Troitzsch K (2005) Simulation for the social scientist. McGraw-Hill Education (UK)

Gilbert N, Terna P (2000) How to build and use agent-based models in social science. Mind Soc 1(1):57–72

Glenberg AM (2010) Embodiment as a unifying perspective for psychology. Wiley Interdiscip Rev Cogn Sci 1(4):586–596

Gode DK, Sunder S (1993) Allocative efficiency of markets with zero-intelligence traders: market as a partial substitute for individual rationality. J Polit Econ 101(1):119–137

Goldman AI (2012) A moderate approach to embodied cognitive science. Rev Philos Psychol 3(1):71–88

Goldman AI (2014) The bodily formats approach to embodied cognition. In: Kriegel U (ed) Current controversies in philosophy of mind. Routledge, New York, pp 91–108

Goldman AI, de Vignemont F (2009) Is social cognition embodied? Trends Cogn Sci 13(4):154–159

Gould SJ, Lewontin RC (1979) The spandrels of San Marco and the Panglossian paradigm: a critique of the adaptationist programme. Proc R Soc Lond B Biol Sci 205:581–598

Gould SJ, Vrba E (1982) Exaptation—a missing term in the science of form. Paleobiology 8:4–15

Hegselmann R (1998) Modelling social dynamics by cellular automata. In: Liebrand WBG, Nowak A, Hegselmann R (eds) Computer modeling of social processes. Sage, London

Heylighen F (2016) Stigmergy as a universal coordination mechanism I: definition and components. Cogn Syst Res 38:4–13

Hodgson GM (2004) The evolution of institutional economics. Routledge, London

Husbands P, Shim Y, Garvie M, Dewar A, Domcsek N, Graham P, Knight J, Nowotny T, Philippides A (2021) Recent advances in evolutionary and bio-inspired adaptive robotics: exploiting embodied dynamics. Appl Intell 51(9):6467–6496. https://doi.org/10.1007/s10489-021-02275-9

Hutto DD, Myin E (2017) Evolving enactivism. MIT Press, Cambridge, MA

Ichimura T, Douzono Y (2012) Altruism simulation based on pheromone evaporation and its diffusion in army ant inspired social evolutionary system. In: The 6th international conference on soft computing and intelligent systems, and the 13th international symposium on advanced intelligence systems, IEEE, pp 1357–1362

Kahneman D, Slovic P, Tversky A (eds) (1982) Judgment under uncertainty: heuristics and biases. Cambridge University Press. https://doi.org/10.1017/CBO9780511809477

Kauffman SA (2000) Investigations. Oxford University Press, Oxford

Koppl R, Kauffman S, Felin T, Longo G (2015) Economics for a creative world. J Inst Econ 11(1):1–31

Kugele S, Neemeh ZA, Kronsted C, Gallagher S, Franklin S (2021) Virtually impossible: obstacles to generalizing between simulated and real humans (Unpublished ms)

Laland KN, Uller T, Feldman MW, Sterelny K, Müller GB, Moczek A et al (2015) The extended evolutionary synthesis: its structure, assumptions and predictions. Proc R Soc B Biol Sci 282:20151019

Longo G, Montévil M, Kauffman S (2012) No entailing laws, but enablement in the evolution of the biosphere. In: Proceedings of the 14th annual conference companion on genetic and evolutionary computation, pp 1379–1392

Mastrogiorgio A, Felin T, Kauffman S, Mastrogiorgio M (2022) More thumbs than rules: is rationality an exaptation? Front Psychol 13:805743

Mastrogiorgio A (2020) Opaqueness as a mark of minimal intelligence, ZI Conference, Yale School of Management, Yale University, 22–24 October 2020

Newell A (1973) You can’t play 20 questions with nature and win: projective comments on the papers of this symposium. In: Chase WG (ed) Visual information processing. Academic Press, New York, pp 283–308. https://doi.org/10.1016/B978-0-12-170150-5.50012-3

Newell A, Simon HA (1972) Human problem solving. Prentice-Hall, Englewood Cliffs, NJ

Newen A, De Bruin L, Gallagher S (eds) (2018) The Oxford handbook of 4E cognition. Oxford University Press. https://doi.org/10.1093/oxfordhb/9780198735410.001.0001

North DC (1993) What do we mean by rationality? In: Rowley CK, Schneider F, Tollison RD (eds) The next twenty-five years of public choice. Springer Netherlands, Dordrecht, pp 159–162. https://doi.org/10.1007/978-94-017-3402-8_16

Ostrom E (2005) Understanding institutional diversity. Princeton University Press, Princeton

Oztop E, Kawato M, Arbib M (2006) Mirror neurons and imitation: a computationally guided review. Neural Netw 19(3):254–271

Petracca E, Grayot J (2023) How can embodied cognition naturalize bounded rationality? Synthese 201(4):115

Pezzulo G, Barsalou LW, Cangelosi A, Fischer MH, Spivey M, McRae K (2011) The mechanics of embodiment: a dialog on embodiment and computational modeling. Front Psychol 2:5

Popp S, Dornhaus A (2023) Ants combine systematic meandering and correlated random walks when searching for unknown resources. iScience 26(2):105916

Pulvermüller F (2005) Brain mechanisms linking language and action. Nat Rev Neurosci 6:576–582

Reid CR, Lutz MJ, Powell S, Kao AB, Couzin ID, Garnier S (2015) Army ants dynamically adjust living bridges in response to a cost–benefit trade-off. Proc Natl Acad Sci 112(49):15113–15118

Simon HA (1982) Models of bounded rationality. MIT Press, Cambridge, MA

Simon HA (1996) The sciences of the artificial, 3rd edn. MIT Press, Cambridge, MA

Slors M (2019) Symbiotic cognition as an alternative for socially extended cognition. Philos Psychol 32(8):1179–1203

Sutton J (2006) Distributed cognition: domains and dimensions. Pragmat Cogn 14(2):235–247

Taleb NN (2007) The black swan: the impact of the highly improbable. Random House, New York

Tessitore G, Prevete R, Catanzariti E, Tamburrini G (2010) From motor to sensory processing in mirror neuron computational modelling. Biol Cybern 103:471–485

Thompson E (2007) Mind in life: biology, phenomenology, and the sciences of mind. Harvard University Press, Cambridge MA

Varela FJ, Rosch E, Thompson E (1991) The embodied mind: cognitive science and human experience. MIT Press, Cambridge, MA. https://doi.org/10.7551/mitpress/6730.001.0001

Viale R, Gallagher S, Gallese V (2023) Bounded rationality, enactive problem solving, and the neuroscience of social interaction. Front Psychol 14:1810

Williamson OE (1981) The economics of organization: the transaction cost approach. Am J Sociol 87:548–577

Funding

SG acknowledges the support of Università degli Studi Roma Tre, as Visiting Professor in the Dipartimento di Filosofia, Comunicazione e Spettacolo; and the Lillian and Morrie Moss Chair of Excellence in Philosophy at the University of Memphis.

Author information

Contributions

Both authors listed have made a substantial, direct and intellectual contribution to the work, and approved it for publication. Authorship is listed alphabetically.

Ethics declarations

Conflict of interest

Not applicable.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.
