Abstract

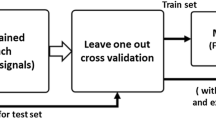

In this article, a new cognitive model, convolutional brain emotional learning (CBEL), is introduced to recognize emotion in speech from the Berlin Database of Emotional Speech. CBEL extends the brain emotional learning (BEL) model, which is inspired by the function of the brain's limbic system. BEL was chosen because the limbic system plays a central role in processing emotion, which makes a model of it a natural candidate for recognizing emotion in speech. In the proposed method, the input signals are first passed to a convolutional neural network (CNN) for feature extraction, and the CNN's pooling layer is modified to increase processing speed. Finally, the outputs of the pooling layers are fed to the multilayer perceptron (MLP) networks that form the amygdala and orbitofrontal cortex components of the CBEL model, which output the recognition rate for each basic emotional state. Experimental results show that the proposed model recognizes emotional speech with an accuracy of 97.39%, outperforming the other methods compared in the article.
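To make the amygdala/orbitofrontal division concrete, the following is a minimal sketch of the learning rules of the classic BEL model of Morén and Balkenius (2000), on which CBEL builds; it is not the CBEL implementation. It assumes a single scalar output, omits BEL's thalamic pathway, and uses linear nodes in place of CBEL's CNN features and MLPs. The class name `SimpleBEL`, the learning rates `alpha` and `beta`, and the toy input are illustrative assumptions. Amygdala nodes compute A_i = S_i V_i, orbitofrontal nodes compute O_i = S_i W_i, and the model output is E = ΣA_i − ΣO_i.

```python
import numpy as np


class SimpleBEL:
    """Classic BEL learning rules (after Moren & Balkenius, 2000), simplified.

    Assumptions: one scalar output, no thalamic pathway, linear amygdala and
    orbitofrontal nodes, and illustrative learning rates. Not the CBEL model.
    """

    def __init__(self, n_inputs: int, alpha: float = 0.1, beta: float = 0.1):
        self.v = np.zeros(n_inputs)  # amygdala weights V_i
        self.w = np.zeros(n_inputs)  # orbitofrontal weights W_i
        self.alpha = alpha           # amygdala learning rate (assumed value)
        self.beta = beta             # orbitofrontal learning rate (assumed value)

    def predict(self, s: np.ndarray) -> float:
        # E = sum_i S_i V_i - sum_i S_i W_i  (amygdala minus orbitofrontal)
        return float(s @ self.v - s @ self.w)

    def update(self, s: np.ndarray, rew: float) -> float:
        """One learning step on sensory input s with reward signal rew."""
        a = float(s @ self.v)  # total amygdala activation, sum_i A_i
        o = float(s @ self.w)  # total orbitofrontal activation, sum_i O_i
        e = a - o              # model output before this update
        # Amygdala: monotonic rule, weights only move up toward the reward.
        self.v += self.alpha * s * max(0.0, rew - a)
        # Orbitofrontal: learns to inhibit the part of the output above the reward.
        self.w += self.beta * s * (e - rew)
        return e


if __name__ == "__main__":
    s = np.array([1.0, 0.5, 0.0])  # toy sensory input (hypothetical)
    model = SimpleBEL(n_inputs=3)
    for _ in range(200):            # acquisition: reward present
        model.update(s, rew=1.0)
    print(round(model.predict(s), 3))
    for _ in range(200):            # extinction: reward withdrawn
        model.update(s, rew=0.0)
    print(round(model.predict(s), 3))
```

Because the amygdala update is clipped with max(0, ·), its weights never decrease, so a learned association is not forgotten; when the reward is withdrawn, it is the orbitofrontal weights that pull the output back down. In the run above the output approaches 1 during acquisition and then approaches 0 during extinction while the amygdala weights stay fixed.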

Data availability

Many standard datasets, such as RAVDESS and eNTERFACE’05, can be used for this purpose.

References

Ververidis D, Kotropoulos C (2006) Emotional speech recognition: resources, features and methods. Speech Commun 48(9):1162–1181. https://doi.org/10.1016/j.specom.2006.04.003

Jayanthi K, Mohan S, Lakshmipriya B (2022) An integrated framework for emotion recognition using speech and static images with deep classifier fusion approach. Int J Inf Technol 14:3401–3411

Morén J (2002) Emotion and learning: a computational model of the amygdala. PhD dissertation, Department of Cognitive Science, Lund University, Lund, Sweden

Thakur A, Dhull SK (2022) Language-independent hyper-parameter optimization based speech emotion recognition system. Int J Inf Technol 14:3691–3699

Ayadi ME, Kamel MS, Karray F (2011) Survey on speech emotion recognition: features, classification schemes and databases. Pattern Recognit 44(3):572–587

Dellaert F, Polzin T, Waibel A (1996) Recognizing emotion in speech. In: Proceedings of the International Conference on Spoken Language Processing (ICSLP), pp 1970–1973

Harimi A, Shahzadi A, Ahmadyfard A, Yaghmaie K (2014) Classification of emotional speech spectral pattern features. J AI Data Mining 2(1):53–61

Rázuri JG, Sundgren D, Rahmani R, Larsson A, Cardenas AM, Bonet I (2015) Speech emotion recognition in emotional feedback for human–robot interaction. Int J Adv Res Artif Intell 4(2):20–27

Winter J, Xu Y, Lee WC (2005) Energy efficient processing of k-nearest neighbor queries in location aware sensor networks. In: Proceedings of the Second Annual International Conference on Mobile and Ubiquitous Systems: Networking and Services, San Diego, CA, pp 281–292

Ayadi M, Kamel MS, Karray F (2007) Speech emotion recognition using Gaussian mixture vector autoregressive models. In: Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP)

Sheikhan M, Bejani M, Gharavian D (2012) Modular neural-SVM scheme for speech emotion recognition using ANOVA feature selection method. Neural Comput Appl 23:215–227

Shashidhar R, Patilkulkarni S, Puneeth SB (2022) Combining audio and visual speech recognition using LSTM and deep convolutional neural network. Int J Inf Technol 14:3425–3436

Haq SU, Jackson PJB, Edge J (2008) Audio-visual feature selection and reduction for emotion classification. In: Proceedings of the International Conference on Auditory-Visual Speech Processing (AVSP)

Petrushin VA (2000) Emotion recognition in speech signal: experimental study, development, and application. In: Proceedings of the International Conference on Spoken Language Processing (ICSLP), pp 222–225

Trigeorgis G, Ringeval F, Brueckner R, Marchi E, Nicolaou MA, Schuller B, Zafeiriou S (2016) End-to-end speech emotion recognition using a deep convolutional recurrent network. In: Proceedings of the 41st IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Shanghai, China, pp 5200–5204

Krizhevsky A, Sutskever I, Hinton GE (2012) ImageNet classification with deep convolutional neural networks. In: Proceedings of the Twenty-Sixth Annual Conference on Neural Information Processing Systems (NIPS), Lake Tahoe, NV, USA, pp 1097–1105

Badshah AM, Ahmad J, Rahim N, Baik SW (2017) Speech emotion recognition from spectrograms with deep convolutional neural network. In: Proceedings of the International Conference on Platform Technology and Service (PlatCon), Busan, Korea, pp 1–5

Farooq M, Hussain F, Baloch NK, Raja FR, Yu H, Zikria YB (2020) Impact of feature selection algorithm on speech emotion recognition using deep convolutional neural network. Sensors 20(21):6008

Farhoudi Z, Setayeshi S, Rabiee A (2017) Using learning automata in brain emotional learning for speech emotion recognition. Int J Speech Technol 20(5):1–10

Farhoudi Z, Setayeshi S, Razzazi F, Rabiee A (2020) Emotion recognition based on multimodal fusion using mixture of brain emotional learning. Adv Cogn Sci 21(4):1067

Lotfi E (2013a) Brain-inspired emotional learning for image classification. Majlesi J Multimed Process 2

Lotfi E (2013b) Mathematical modeling of emotional brain for classification problems. Proc IAM 2(1):60–71

Lotfi E, Akbarzadeh MR (2013) Adaptive brain emotional decayed learning for online prediction of geomagnetic activity indices. Neurocomputing 126:188–196

Morén J, Balkenius C (2000) A computational model of emotional learning in the amygdala. In: From Animals to Animats 6: Proceedings of the 6th International Conference on the Simulation of Adaptive Behaviour. MIT Press, Cambridge, MA, USA, pp 115–124

Burkhardt F, Paeschke A, Rolfes M, Weiss B (2005) A database of German emotional speech. In: Proceedings of Interspeech, Lisbon, Portugal

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Motamed, S., Askari, E. Convolutional brain emotional learning (CBEL) model. Int. j. inf. tecnol. (2024). https://doi.org/10.1007/s41870-024-01819-9

DOI: https://doi.org/10.1007/s41870-024-01819-9