Abstract

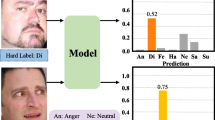

Facial expression recognition in the wild faces challenges such as small datasets, low-quality images, and noisy labels. To address these problems, this paper proposes a novel structural self-contrast learning method (SSCL) based on adaptively weighted negative samples. First, two augmented variants are generated for each input sample as positive samples, which should be as similar in structure as possible. Second, the Earth Mover’s Distance (EMD) is used to define the structural similarity between the two augmented samples, and features are extracted in a self-supervised way. Next, negative samples are introduced into SSCL: augmented variants of samples from other categories serve as negatives, so that the structural similarity between positive samples increases while the similarity to negative samples decreases. Finally, an adaptive weighting method for the negative samples is proposed, which aims to address the imbalance between positive and negative samples, mine hard negative samples, and adaptively reduce their similarity to the input image. Extensive experiments on benchmark datasets demonstrate that SSCL outperforms state-of-the-art methods, and a cross-dataset evaluation shows the superior generalization capability of the proposed method. The code will be available at https://github.com/zjhzhy/SSCL.
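The two ingredients named in the abstract, an EMD-based structural similarity and a contrastive loss with weighted negatives, can be illustrated with a minimal sketch. It is not the authors' implementation. The function names, the uniform node weights, the Sinkhorn (entropic) approximation used in place of exact EMD, and the softmax-style weighting controlled by `beta` are all assumptions made for illustration.

```python
import math

def cosine(u, v):
    """Cosine similarity between two feature vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv + 1e-12)

def structural_similarity(X, Y, eps=0.05, iters=200):
    """Approximate EMD-style similarity between two sets of local features.

    Assumptions: uniform node weights, cost = 1 - cosine similarity, and
    Sinkhorn iterations as a stand-in for an exact EMD solver.
    """
    n, m = len(X), len(Y)
    sim = [[cosine(x, y) for y in Y] for x in X]
    # Gibbs kernel of the transport cost.
    K = [[math.exp(-(1.0 - s) / eps) for s in row] for row in sim]
    r, c = [1.0 / n] * n, [1.0 / m] * m
    u, v = [1.0] * n, [1.0] * m
    for _ in range(iters):
        u = [r[i] / sum(K[i][j] * v[j] for j in range(m)) for i in range(n)]
        v = [c[j] / sum(K[i][j] * u[i] for i in range(n)) for j in range(m)]
    # Transport plan; similarity is the flow-weighted sum of node similarities.
    flow = [[u[i] * K[i][j] * v[j] for j in range(m)] for i in range(n)]
    return sum(flow[i][j] * sim[i][j] for i in range(n) for j in range(m))

def weighted_contrastive_loss(pos_sim, neg_sims, tau=0.5, beta=1.0):
    """InfoNCE-style loss where harder (more similar) negatives weigh more.

    The softmax weighting with sharpness `beta` is a hypothetical choice,
    not the weighting formula from the paper. beta=0 gives uniform weights.
    """
    raw = [math.exp(beta * s) for s in neg_sims]
    total = sum(raw)
    weights = [len(neg_sims) * w / total for w in raw]  # weights average to 1
    pos = math.exp(pos_sim / tau)
    neg = sum(w * math.exp(s / tau) for w, s in zip(weights, neg_sims))
    return -math.log(pos / (pos + neg))
```

In this sketch, `structural_similarity` would score the two augmented views of one image as well as the view against each negative, and those scores would be passed to `weighted_contrastive_loss`. Because the transport plan matches local features regardless of their order, two views whose parts are permuted still receive a high similarity.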

Acknowledgements

This work was supported by the Guangdong Basic and Applied Basic Research Foundation (Grant No. 2023A1515010939), the National Natural Science Foundation of China (Grant Nos. 62006049, 62176095), the Project of Education Department of Guangdong Province (Grant No. 2022KTSCX068), and the Guangdong Province Key Area R&D Plan Project (Grant No. 2020B1111120001).

Ethics declarations

Conflict of interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

About this article

Cite this article

Li, H., Zhu, J., Wen, G. et al. Structural self-contrast learning based on adaptive weighted negative samples for facial expression recognition. Vis Comput (2024). https://doi.org/10.1007/s00371-024-03349-8

DOI: https://doi.org/10.1007/s00371-024-03349-8