Abstract

The Two-Component Extreme Value (TCEV) distribution is traditionally known as the exact distribution of extremes arising from the Poissonian occurrence of a mixture of two exponential exceedances. In some regions, flood frequency is affected by low-frequency (decadal) climate fluctuations resulting in wet and dry epochs. We extend the exact distribution of extremes approach to such regions to show that the TCEV arises as the distribution of annual maximum floods for Poissonian occurrences and (at least two) exponential exceedances. A case study using coastal basins in Queensland and New South Wales (Australia) affected by low-frequency climate variability shows that the TCEV produces good fits to the marginal distribution over the entire range of observed values without the explicit need to resort to climate covariates or to remove potentially influential low values. Moreover, the TCEV reproduces the observed dog-leg, a key signature of different flood generation processes. A literature review shows that the assumptions underpinning the TCEV are conceptually consistent with available evidence on climate and flood mechanisms in these basins. We provide an extended domain of the TCEV distribution in the L-moment ratio diagram to account for the wider range of parameter values encountered in the case study and show that, for all basins, L-skewness and L-kurtosis fall within the extended domain of the TCEV.

1 Introduction

Flood Frequency Analysis (FFA) aims to describe the relationship between flood magnitude and its frequency. It is used in the design of civil infrastructure and in risk assessment and mitigation. One of the basic hypotheses underlying FFA is that the hydrological quantity of interest can be studied in a probabilistic framework, under the assumption that flood observations can be considered a sample of independent and identically distributed random variables (Stedinger et al. 1993). A number of probability models have been used in FFA, the most common being Lognormal, Gumbel (GUM), Generalized Extreme Value (GEV), Pearson type 3 (PE3), Log-Pearson type 3 (LP3), Generalized Pareto (GPA) and Generalized Logistic (GLO) (e.g., Cunnane 1989; Laio et al. 2009; Rao and Hamed 2000).

As noted by Klemes (2000), since the beginnings of the science of hydrology a tension has surrounded the need to extrapolate values of interest from observations limited in time and space (Fuller 1914; Hazen 1914a, b). Warnings “to recognize the nature of the physical processes involved and their limitations in connection with the use of statistical methods” were also raised by Horton (1931). One of the more pressing problems in FFA left unresolved at the end of the twentieth century (e.g., Bobée and Rasmussen 1995; Singh and Strupczewski 2002) is the need to better integrate knowledge of hydrological processes into the FFA methods used for engineering problems, in order to reduce the gap between practice and research in hydrology.

The development of asymptotic Extreme Value Theory provided a more rigorous foundation for the choice of probability model. Gumbel (1958), in the first chapter of his book on the Statistics of Extremes, while focusing on “the flood problem”, stated: “until recent years, engineers employed purely empirical procedures for the analysis of hydrological data and, especially, the evaluation of the frequencies of floods and droughts”.

The asymptotic properties of extreme value distributions have recently been revisited exploiting new theoretical insights and larger datasets (El Adlouni et al. 2008; Koutsoyiannis 2004a, b; Papalexiou and Koutsoyiannis 2013; Papalexiou et al. 2013; Serinaldi and Kilsby 2014). Koutsoyiannis (2004a) acknowledged that the convergence to the asymptotic distributions can be too slow compared to the usual length of extreme event samples and called for renewed attention to the development of exact analytical models for the analysis of extremes (see also De Michele 2019; Lombardo et al. 2019). In parallel to the asymptotic Extreme Value Theory there is the theory of exact distributions of extremes, first presented by Todorovic (1970) and Todorovic and Zelenhasic (1970). This theory seeks a stronger link between the physics of floods (or rainfall) and stochastic processes. In particular, it allows one to derive the distribution of the Annual Maximum (AM) value from hydrological series characterized by Poissonian occurrences and an assigned distribution of exceedances. In this framework, the distributions of the GEV family have been recognized as the exact distributions of annual maxima arising from a Generalized Pareto distribution of exceedances (Madsen et al. 1997). Furthermore, Rossi et al. (1984) derived the Two-Component Extreme Value (TCEV) distribution from a mixture of exponential exceedances; its investigation is the main focus of this paper.

The interest in the use of mixed distributions dates back to Potter (1958), who observed the so-called dog-leg in flood frequency plots of the eastern United States. He stated that switching between different flood-producing processes (e.g., rainfall runoff and snowmelt) may lead to such a step-change in the slope of the flood frequency curve. Cunnane (1985) recognized that flood distributions can be affected by the presence of different subpopulations due to different causes of flood generation. He also stated that a mixture of two subpopulations might be useful to explain the presence of very large outliers and the condition of separation of skewness observed by Matalas et al. (1975).

The physical processes affecting flood generation are numerous and may include different types of atmospheric forcing (e.g., Gaál et al. 2015; Hirschboeck 1987; House and Hirschboeck 1997; Merz and Bloschl 2003), different runoff generation mechanisms (saturation/infiltration excesses, e.g., Iacobellis and Fiorentino 2000; Sivapalan et al. 1990), and influence of fluvial geomorphological features (Archer 1981; Beven and Wood 1983; Woltemade and Potter 1994).

In extensive analyses of rainfall extremes (Papalexiou and Koutsoyiannis 2013; Serinaldi and Kilsby 2014), the often-observed heavy tail has been attributed to the mixture of different processes (extreme and non-extreme events) or to temporal fluctuations of the parent distribution driven by climate oscillations and other physical mechanisms controlling the rainfall process.

Open issues in flood-type classification, particularly the associated uncertainty, are discussed by Sikorska et al. (2015). A number of recent studies highlight the growing interest in this field of research owing to the availability of large datasets, new kinds of observations and more powerful (analytical and computational) tools (e.g., Barth et al. 2016; Smith et al. 2011, 2018; Szolgay et al. 2016; Villarini 2016; Villarini and Smith 2010; Villarini et al. 2011; Tarasova et al. 2020).

In recent decades, increasing attention has been devoted to the influence of climate and environmental change on flood frequency, to the role of changing flood generating mechanisms and to other sources of “permanent change” in time series (e.g., Koutsoyiannis 2013; Bloschl et al. 2017, 2019; Do et al. 2017; Hesarkazzazi et al. 2021; Ishak and Rahman 2019; Tabari 2020; Vormoor et al. 2015, 2016; Yan et al. 2017). To account for change, several authors (e.g., Khaliq et al. 2006; Salas and Obeysekera 2014; Strupczewski and Kaczmarek 2001; Strupczewski et al. 2001a, b) have suggested the use of distributions with parameters dependent on time or other covariates accounting for trends in mean and/or variance. Zeng et al. (2014) suggested the use of mixed distributions when a change point is detected in time series analysis.

This paper investigates whether mixed distributions coupled with Poissonian occurrences can be used to model the frequency of flood series affected by low-frequency (decadal) climate fluctuations. In many areas of the world, consistent results have been obtained by studying the associations between global climate indices, such as the El Niño/Southern Oscillation (ENSO), and hydrological variables (e.g., Vecchi and Wittenberg 2010). In the coastal basins of Eastern Australia, Verdon et al. (2004) observed lower rainfall and increased air temperatures in El Niño (warm phase of ENSO) years compared with La Niña years. Moreover, it has been shown that multidecadal variations in the Pacific Decadal Oscillation (PDO) and Interdecadal Pacific Oscillation (IPO) indices modulate the frequency of ENSO events (Kiem and Franks 2004; Kiem et al. 2003; Power et al. 1999). Both the IPO and PDO indices are subject to chaotic effects, which prevent deterministic prediction of the climate state (Franks 2004). Nonetheless, according to Kiem et al. (2003), these indices are characterized by persistent phases at decadal scales. Kiem et al. (2003) also showed, using stratified data, that flood distributions in Eastern Australia are affected by low-frequency climate oscillations related to ENSO and its multidecadal IPO modulation.

We focus on the theoretical properties of exact distributions of extremes arising from compound processes and provide a general theoretical framework aimed at extending the use of mixed distributions to time series affected by climate fluctuations at interannual scales. In particular, to model occurrences that may take place in years characterized by different interannual conditions, we exploit the properties of thinning and superposition of Poisson processes within the classical theoretical framework of compound Poisson processes.

The descriptive capacity of the TCEV distribution and the physical consistency of its estimated parameters with the proposed theoretical development are assessed in a case study involving flood series in eastern Australia. We find that the TCEV is a good candidate for modelling the frequency of flood series affected by low-frequency (decadal) climate fluctuations. However, its estimated parameters were sometimes found to fall outside the parameter range used to define the TCEV domain on the L-moment ratio diagram (LMRD) presented by Gabriele and Arnell (1991). We therefore extend the LMRD domain of the TCEV distribution to provide a graphical tool that helps assess whether the TCEV is a reasonable option for observed data in comparison with many other distributions of extremes.

The paper is structured as follows. Section 2 introduces the theory underlying the derivation of the TCEV distribution. In Sect. 3, a theoretical background is first developed for processes with interannual modulation of flood risk, followed by the analytical derivation of AM flood models based on mixture processes; the conditions under which the TCEV distribution emerges as the AM distribution are identified. Section 4 describes the updating of the TCEV L-moment ratio diagram. Section 5 presents an application of the TCEV to Eastern Australian AM flood data, previously shown to be affected by multidecadal variation in IPO phases. A discussion of results and their physical interpretation is reported in Sect. 6. Conclusions and final remarks are summarized in Sect. 7.

2 Theoretical background and development

2.1 Distribution of the largest exceedance in a year

The main concepts of the theory of exact distributions of extremes are described in the works of Todorovic (1970), Todorovic and Yevjevich (1969), Todorovic and Zelenhasic (1968, 1970) and Zelenhasic (1970). Consider a streamflow time series represented by a positive random variable \(Q\) and let \(n\) be the number of exceedances over a certain threshold \({q}_{0}\) during the time interval \(\left[0,t\right]\). The \(j\)-th exceedance (\(j=1,2,\dots,n\)), occurring at time \({t}_{j}\), assumes the value:

\[ {X}_{j}=Q({t}_{j})-{q}_{0} \tag{1} \]

This defines a marked point process (e.g., Snyder and Miller 2012). We adopt a block maxima approach, in which the highest of all recorded values for a given time period or “block” is investigated. Given our focus on annual maxima, the interval \(\left[0,t\right]\) is set equal to one year. Following Todorovic (1970), we assume that both the exceedances \({X}_{j}\) and their number of occurrences, \(N\), are random variables. Therefore, as observed by Lombardo et al. (2019), \(n\) can be considered a realization of \(N\).

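If the exceedances are independent and identically distributed with cdf \(H(x)=Pr\left\{X\le x\right\}\), and independent of \(N\), the cumulative distribution function (cdf) of the largest exceedance in any year, \(Y=max\left\{{X}_{1}\dots {X}_{n}\right\}\), can be shown to be (Zelenhasic 1970, p. 8)

\[ F(y)=Pr\left\{Y\le y\right\}=\sum_{n=0}^{\infty }{\left[H(y)\right]}^{n}{p}_{N}(n) \tag{2} \]

where \({p}_{N}(n)\) is the probability of observing \(n\) exceedances in a year (the \(n=0\) term accounts for years without exceedances).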

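Suppose \(N\) follows a Poisson distribution. Then:

\[ {p}_{N}(n)=\frac{{\lambda }^{n}{e}^{-\lambda }}{n!},\quad n=0,1,2,\dots \tag{3} \]

where \(\uplambda\) represents the mean number of exceedances per year. Substituting Eq. (3) into Eq. (2) and summing the exponential series, it follows that

\[ F(y)=\exp\left\{-\lambda \left[1-H(y)\right]\right\} \tag{4} \]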

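Following Todorovic (1978), a common choice for \(H(x)\) is the exponential distribution, i.e.,

\[ H(x)=1-\exp\left(-x/\beta \right),\quad x\ge 0 \tag{5} \]

with \(\beta\) equal to the mean exceedance. Substituting Eq. (5) into Eq. (4) leads to:

\[ F(y)=\exp\left[-\lambda \exp\left(-y/\beta \right)\right] \tag{6} \]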

which is the Gumbel distribution with scale \(\beta\) and location \(\beta \ln \uplambda\). Note that, as presented in Gumbel (1958), this distribution is also defined for negative values. If \(H(x)\) corresponds to the Generalized Pareto distribution, then \(F(y)\) follows a GEV distribution (Madsen et al. 1997).
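
As a numerical check of the chain from Eq. (2) to Eq. (6), the following minimal Python sketch (standard library only) evaluates the series of Eq. (2) with the Poisson probabilities of Eq. (3), compares it with the closed form of Eq. (4), and simulates annual maxima from Poisson counts of exponential exceedances so that their empirical cdf can be compared with the Gumbel form of Eq. (6). The function names, the Knuth-style Poisson sampler, the parameter values (\(\uplambda =2\), \(\beta =1.5\)) and the convention of recording 0 for a year without exceedances (which reproduces the probability \(F(0)={e}^{-\lambda }\)) are illustrative assumptions, not part of the original method.

```python
import math
import random


def annual_max_cdf_series(h_y, lam, n_max=200):
    """Eq. (2) with Poisson weights (Eq. 3): sum over n of H(y)^n * p_N(n)."""
    total, p_n = 0.0, math.exp(-lam)  # p_N(0) = exp(-lam)
    for n in range(n_max + 1):
        total += h_y ** n * p_n
        p_n *= lam / (n + 1)  # p_N(n + 1) = p_N(n) * lam / (n + 1)
    return total


def annual_max_cdf_closed(h_y, lam):
    """Eq. (4): F(y) = exp(-lam * (1 - H(y)))."""
    return math.exp(-lam * (1.0 - h_y))


def gumbel_cdf(y, lam, beta):
    """Eq. (6): Poisson occurrences with exponential exceedances of mean beta."""
    return math.exp(-lam * math.exp(-y / beta))


def poisson_sample(rng, lam):
    """Knuth's multiplication method; adequate for small lam."""
    limit, k, prod = math.exp(-lam), 0, rng.random()
    while prod > limit:
        k += 1
        prod *= rng.random()
    return k


def simulate_annual_maxima(lam, beta, n_years, seed=1):
    """Largest exceedance in each simulated year (0.0 if the year has none)."""
    rng = random.Random(seed)
    maxima = []
    for _ in range(n_years):
        n = poisson_sample(rng, lam)
        exceedances = [rng.expovariate(1.0 / beta) for _ in range(n)]
        maxima.append(max(exceedances, default=0.0))
    return maxima


if __name__ == "__main__":
    sample = simulate_annual_maxima(lam=2.0, beta=1.5, n_years=20000)
    empirical = sum(y <= 3.0 for y in sample) / len(sample)
    print(empirical, gumbel_cdf(3.0, 2.0, 1.5))
```

With 20000 simulated years, the empirical and theoretical values printed at \(y=3\) should agree to within a few thousandths.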

2.2 Mixture distributions and two-component Poissonian model

In flood frequency analysis, mixture distributions were developed to account for the presence of two (or more) physical processes responsible for extreme hydrological events. Two broad classes of mixtures have been identified (Alila and Mtiraoui 2002; Waylen et al. 1993).

The first class consists of additive mixture models (Moran 1959), which are appropriate when only one process generates the annual maximum flood in a year. Mixtures of lognormal distributions were studied by Alila and Mtiraoui (2002) and Singh and Sinclair (1972). More complex additive mixture models were developed by Grego and Yates (2010), Shin et al. (2015), and Yan et al. (2017, 2019).

The second class consists of compound models (Gumbel 1958), which are appropriate when two or more different processes may generate the annual maximum flood. Early applications were developed by Waylen and Woo (1982) and Rossi et al. (1984) followed by Strupczewski et al. (2009), Rulfova et al. (2016) and Fischer et al. (2016).

2.3 Two-component Poisson models

Define \({H}_{1}(x)\) and \({H}_{2}(x)\) as the distributions of exceedances of the first and the second component. In the mixture process approach, the distribution of an exceedance arising from two components is:

\[ H(x)=p\,{H}_{1}(x)+(1-p)\,{H}_{2}(x) \tag{7} \]

where \(p\) is the probability that the exceedance is sampled from \({H}_{1}(x)\).

If the annual numbers of exceedances of the two components, \({N}_{1}\) and \({N}_{2}\), follow independent Poisson distributions with parameters \({\uplambda }_{1}\) and \({\uplambda }_{2}\), then the total annual number of exceedances \(N={N}_{1}+{N}_{2}\) is also Poisson distributed with parameter \(\uplambda ={\uplambda }_{1}+{\uplambda }_{2}\), and \(p={\uplambda }_{1}/\uplambda\). This property is known as superposition of Poisson processes. Under the hypothesis that component exceedances are exponentially distributed, with means \({\theta }_{1}\) and \({\theta }_{2}\), combining Eqs. (4) and (7), Rossi et al. (1984) obtained:

\[ F(y)=\exp\left[-{\lambda }_{1}\exp\left(-y/{\theta }_{1}\right)-{\lambda }_{2}\exp\left(-y/{\theta }_{2}\right)\right],\quad y\ge 0 \tag{8} \]

which represents the Two-Component Extreme Value (TCEV) distribution with constraints \({\uplambda }_{1}>{\uplambda }_{2}\ge 0\). The first two parameters (\({\uplambda }_{1}\) and \({\uplambda }_{2}\)) represent the mean number of independent peaks in a year for each component, while the last two parameters (\({\theta }_{1}\) and \({\theta }_{2}\)) represent the mean peak amplitude of the ordinary and the outlying components, respectively.

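The TCEV can be re-expressed using the dimensionless parameters:

\[ {\theta }_{*}=\frac{{\theta }_{2}}{{\theta }_{1}},\qquad {\lambda }_{*}=\frac{{\lambda }_{2}}{{\lambda }_{1}^{1/{\theta }_{*}}} \tag{9} \]

to give

\[ F(y)=\exp\left[-{\lambda }_{1}\exp\left(-y/{\theta }_{1}\right)-{\lambda }_{*}{\lambda }_{1}^{1/{\theta }_{*}}\exp\left(-y/({\theta }_{*}{\theta }_{1})\right)\right] \tag{10} \]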

3 Modeling low-frequency climate variability

The primary focus of this paper is on exploiting the properties of two-component Poisson models to carry out flood frequency analysis that accounts for the presence of low-frequency variability (e.g., ENSO, PDO), which modulates flood risk over multiyear time scales. Let the low-frequency variability be represented by alternating dry/wet epochs (years), here respectively identified by the subscript \(i=1,2\), and assume that their length (expressed in number of years) follows a Poisson distribution with mean \({\mu }_{i}\), so that \(\mu ={\mu }_{1}+{\mu }_{2}\).

The process of occurrences along a timeline of alternating epochs can be modelled by exploiting the properties of thinning and superposition of Poisson processes (e.g., Lewis and Shedler 1979), where:

- Thinning: suppose \(N\) is a Poisson process (with parameter \(\uplambda\)) counting the number of occurrences, where each event is independently recognized as one of two types, Type 1 or Type 2. Let \({p}_{j}\) denote the probability that an event is of Type \(j\), with \(j = 1, 2\). The process \({N}_{j}\) counting the events of Type \(j\) is a Bernoulli-thinned Poisson process with parameter \({\uplambda }_{j}={p}_{j}\uplambda\) (Chandramohan and Liang 1985; Isham 1980).

- Superposition: suppose \({N}_{1}\) and \({N}_{2}\) are independent Poisson counting processes with parameters \({\uplambda }_{1}\) and \({\uplambda }_{2}\), respectively. The sum process \(N = {N}_{1} + {N}_{2}\), consisting of all arrivals generated by process 1 and process 2, is a Poisson counting process with parameter \(\uplambda ={\uplambda }_{1}+{\uplambda }_{2}\).
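
Both properties can be illustrated by simulation. The sketch below is an illustration under our own assumptions (a seeded Knuth Poisson sampler and hypothetical values \(\uplambda =4\), \({p}_{1}=0.3\)): it classifies each event of a Poisson count as Type 1 with probability \({p}_{1}\), so that the Type 1 and Type 2 counts should each have mean and variance close to \({p}_{j}\uplambda\) and be uncorrelated, and it sums two independent Poisson counts, whose mean and variance should both be close to \({\uplambda }_{1}+{\uplambda }_{2}\).

```python
import math
import random
import statistics


def poisson_sample(rng, lam):
    """Knuth's multiplication method; adequate for small lam."""
    limit, k, prod = math.exp(-lam), 0, rng.random()
    while prod > limit:
        k += 1
        prod *= rng.random()
    return k


def thinned_counts(lam, p1, n_years, seed=42):
    """Classify each event of a Poisson(lam) count as Type 1 with probability p1."""
    rng = random.Random(seed)
    n1s, n2s = [], []
    for _ in range(n_years):
        n = poisson_sample(rng, lam)
        n1 = sum(rng.random() < p1 for _ in range(n))  # Bernoulli thinning
        n1s.append(n1)
        n2s.append(n - n1)
    return n1s, n2s


def superposed_counts(lam1, lam2, n_years, seed=43):
    """Sum of two independent Poisson counts in each year."""
    rng = random.Random(seed)
    return [poisson_sample(rng, lam1) + poisson_sample(rng, lam2) for _ in range(n_years)]


def covariance(xs, ys):
    """Population covariance of two equally long sequences."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    return sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / len(xs)
```

Equal mean and variance is a necessary (not sufficient) signature of a Poisson count, so these checks are a sanity test rather than a proof of the two properties.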

Based on these properties of Poisson processes, in the next sections we develop two cases. In Case 1, we assume that the influence of climatic fluctuations is sufficiently strong that the type of flood event depends almost deterministically on the climatic epoch, so that different types of events arise only in different epochs. In Case 2, we assume that two (or more) different types of event may occur in any of the climatic epochs. For each case, we derive the marginal annual maximum distributions using the mixed process approach and show which additional assumptions are needed to produce a TCEV distribution of the marginal AM values.

3.1 Case 1: One component per epoch

Suppose the flood generation process in each year of epoch \(i\) is characterized by a Poissonian number of occurrences, \({N}_{i}\), with mean value \({\uplambda }_{{\text{i}}}\) and distribution of exceedances \({H}_{i}(x)\)

reflecting different interannual hydrological or climatological conditions specific to epoch \(i\). We show that the distribution of AM may arise from a timeline of random years characterized by a stochastic alternation of different epochs, under the hypothesis that different types of hydrological events may be triggered in any year, with the distribution conditional on epoch.

In particular, in this case, we assume that in dry (wet) epochs, Type 1 (Type 2) events prevail. In other words, Type \(i\) events are typical of epoch \(i\). Their counting process can be described as a thinned Poisson process \({N}_{1}\) (\({N}_{2}\)) characterized by probability \({p}_{1}\) (\({p}_{2}\)) with.

The superposition of the two thinned Poisson processes \({N}_{1}\) and \({N}_{2}\), with parameters \(\lambda_{1}^{\prime }\) and \(\lambda_{2}^{\prime }\), provides the overall annual process accounting for intra- and inter-annual variability, which is still a Poisson process with parameter

Following this interpretation, the distribution of exceedances is represented by a mixture distribution of two components, derived from Eq. (7):

which when substituted into Eq. (4) with exponential distributions of exceedances \({H}_{1}(x)\) and \({H}_{2}(x)\), results in a TCEV distribution (Eq. 8) of AM with Poisson parameters

Note that, in this case, if the flood generation mechanism is not affected by epoch (i.e., the distribution of exceedances does not depend on epoch), then \({\theta }_{1}={\theta }_{2}\) and \(F(y)\) reduces to a Gumbel distribution (Eq. 6) with \(\uplambda ={\uplambda }_{1}+{\uplambda }_{2}\) and \(\beta ={\theta }_{1}\).

3.2 Case 2: Two components per epoch

Suppose that within each epoch \(i\) two independent flood processes, \(k=1,2\), generate floods with a Poisson number of occurrences \({N}_{k,i}\), with mean \(\lambda_{k,i}^{\prime }\), and exceedances with distribution

where \((k,i)=(\mathrm{1,1}), (\mathrm{1,2}), (\mathrm{2,1}), (\mathrm{2,2})\).

Thus, two different types of hydrological events may be triggered in any year, with the distribution conditional on epoch. Four \({N}_{k,i}\) Poisson processes occur, two in each epoch \(i\) (\({N}_{\mathrm{1,1}}\), \({N}_{\mathrm{2,1}}\), and \({N}_{\mathrm{1,2}}\), \({N}_{\mathrm{2,2}}\)), with parameters \(\lambda_{1,1}^{\prime }\), \(\lambda_{1,2}^{\prime }\), \(\lambda_{2,1}^{\prime }\), \(\lambda_{2,2}^{\prime }\) and four exceedance distributions \({H}_{\mathrm{1,1}}(x)\), \({H}_{\mathrm{1,2}}(x)\), \({H}_{\mathrm{2,1}}(x)\), \({H}_{\mathrm{2,2}}(x)\).

Considering a Bernoulli thinning of those Poisson processes, applied for each epoch \(i\) to both \({N}_{k,i}\) (for \(k=1, 2\)), the overall Poisson process accounting for any type of event in any year is obtained by the superposition of four thinned Poisson processes with parameters:

that is:

The mixture distribution of exceedances is obtained as:

with

and the distribution of AM is found by inserting Eq. (19) in Eq. (4).

If all exceedance distributions are exponential, the AM distribution has eight parameters (\({\uplambda }_{\mathrm{1,1}}\), \({\uplambda }_{\mathrm{1,2}}\), \({\uplambda }_{\mathrm{2,1}}\), \({\uplambda }_{\mathrm{2,2}}\) and \({\theta }_{\mathrm{1,1}}\), \({\theta }_{\mathrm{1,2}}\), \({\theta }_{\mathrm{2,1}}\), \({\theta }_{\mathrm{2,2}}\)). The AM mixed distribution of Case 1 is trivially obtained from this general formulation by assuming \({\uplambda }_{\mathrm{1,2}}={\uplambda }_{\mathrm{2,1}}=0\).

A TCEV distribution is also obtained by assuming \({\theta }_{1}={\theta }_{\mathrm{1,1}}={\theta }_{\mathrm{1,2}}\), and \({\theta }_{2}={\theta }_{\mathrm{2,1}}={\theta }_{\mathrm{2,2}}\) , hence:

and

which states that the epoch-dependent hydrological conditions affect only the number of occurrences of events of each type and not their distribution of exceedances. The remaining two TCEV parameters can be shown to be

Under the conditions described above, the TCEV distribution holds as the distribution of AM affected by both intra- and inter-annual mixed processes. This development could easily be extended to more than two types of events by increasing the number of \(k\) values. The marginal AM always results in a TCEV distribution because the number of components is equal to the number of climatic epochs.
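
The collapse of the eight-parameter model to a TCEV can be illustrated numerically. Inserting a rate-weighted mixture of exponential exceedances into Eq. (4) gives \(F(y)=\exp[-\sum_{k,i}{\lambda }_{k,i}\exp(-y/{\theta }_{k,i})]\), so components sharing the same mean exceedance merge by summing their rates, here \({\lambda }_{1}={\lambda }_{\mathrm{1,1}}+{\lambda }_{\mathrm{1,2}}\) and \({\lambda }_{2}={\lambda }_{\mathrm{2,1}}+{\lambda }_{\mathrm{2,2}}\). The sketch below, with hypothetical function names and rate values, checks this identity; when all four means coincide, it also recovers the Gumbel reduction of Case 1.

```python
import math


def compound_am_cdf(y, components):
    """Eq. (4) with a rate-weighted mixture of exponential exceedances.

    components: list of (rate, mean_exceedance) pairs, one per (k, i) process.
    Since lam * (1 - H(y)) = sum(rate * exp(-y / theta)), the AM cdf is
    F(y) = exp(-sum(rate * exp(-y / theta))).
    """
    return math.exp(-sum(rate * math.exp(-y / theta) for rate, theta in components))


def collapse_by_theta(components):
    """Merge components that share the same mean exceedance by summing rates."""
    merged = {}
    for rate, theta in components:
        merged[theta] = merged.get(theta, 0.0) + rate
    return [(rate, theta) for theta, rate in sorted(merged.items())]


# Hypothetical Case 2 values, ordered (1,1), (1,2), (2,1), (2,2):
# theta depends only on the event type k, not on the epoch i.
case2 = [(6.0, 1.0), (2.5, 1.0), (0.05, 5.0), (0.25, 5.0)]
tcev = collapse_by_theta(case2)  # two components: a TCEV
```

Here `tcev` holds the pairs \(({\lambda }_{1},{\theta }_{1})=(8.5,1)\) and \(({\lambda }_{2},{\theta }_{2})=(0.3,5)\), and `compound_am_cdf` gives the same value for `case2` and `tcev` at any \(y\).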

The conditions \({\theta }_{1}={\theta }_{\mathrm{1,1}}={\theta }_{\mathrm{1,2}}\), and \({\theta }_{2}={\theta }_{\mathrm{2,1}}={\theta }_{\mathrm{2,2}}\) are consistent with a known property of the exponential distribution, often referred to as the memoryless property. According to this property, the distribution of the exceedance magnitude above a threshold does not depend on the threshold value; as a consequence, epoch can affect the frequency of exceedances without affecting their distribution.
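
In Poisson terms, raising the threshold by \(\Delta q\) thins the exceedance rate from \(\uplambda\) to \(\uplambda \exp(-\Delta q/\theta )\) while leaving the mean exceedance \(\theta\) unchanged. The following Monte Carlo sketch (hypothetical function name, seed and values \(\theta =2\)) shows both effects for exponential exceedances.

```python
import random
import statistics


def threshold_effect(theta, dq, n=200_000, seed=7):
    """Raise the threshold by dq; return (fraction retained, mean excess).

    For exponential exceedances with mean theta, the memoryless property implies
    a retained fraction of exp(-dq / theta) and an unchanged mean excess theta.
    """
    rng = random.Random(seed)
    values = [rng.expovariate(1.0 / theta) for _ in range(n)]
    excesses = [v - dq for v in values if v > dq]
    return len(excesses) / n, statistics.fmean(excesses)


for dq in (0.0, 1.0, 3.0):
    print(dq, threshold_effect(2.0, dq))
```

The retained fraction decreases with \(\Delta q\), whereas the mean excess stays close to 2 for every threshold.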

We find that the mixture process approach with Poissonian occurrences and exponential exceedances yields a TCEV distribution with a process-based interpretation of its parameters. This suggests that the TCEV is a promising and robust candidate for application to locations where flood risk alternates between dry and wet epochs. This is evaluated in the case study using sites from eastern Australia, which have been shown to exhibit low-frequency variability in flood risk.

4 Model assessment and parameter estimation

Despite the wide interest in mixture distributions and the recognized existence of different processes, the application of the methods described in Sect. 2 is mainly limited by problems connected with parameter estimation and by the difficulty of recognizing and observing different physical processes. To overcome these problems, different strategies have been proposed. Some authors (Singh and Sinclair 1972; Waylen and Woo 1982; Woo and Waylen 1984) suggest separating the events of the mixture components to estimate the parameters of each component and, in the case of the first class, to evaluate the weights of the total distribution. Nevertheless, difficulties are encountered in identifying the component of each single event in a data series when the season or period of the event’s occurrence is not sufficient to identify flood components (e.g., Hirschboeck 1987). Other authors (Arnell and Gabriele 1988; Fiorentino et al. 1985, 1987; Rossi et al. 1984), with reference to the TCEV model, recommend regional analysis to estimate parameters (in particular the parameters related to skewness and kurtosis) and discourage at-site application of such models. The absence of a complete methodological framework for assessing the robustness of mixture distributions in FFA (Kjeldsen et al. 2018) and for evaluating the uncertainty and confidence limits of quantiles has been noted by Bocchiola and Rosso (2014). Furthermore, this class of models is not always suitable for comparison with homogeneous distributions by goodness-of-fit tests (Laio et al. 2010; Bocchiola and Rosso 2014).

The L-moment ratio diagram, introduced by Hosking (1990), is considered a valuable aid for the selection of a parent distribution (e.g., Cunnane 1989; Salinas et al. 2014; Stedinger et al. 1993; Strupczewski et al. 2011; Vogel and Fennessey 1993). The LMRD representation of TCEV was developed by Arnell and Beran (1988) and Gabriele and Arnell (1991). To our knowledge the TCEV is the only mixed compound distribution whose domain has been represented in the LMRD. Some of its characteristics are illustrated in Connell and Pearson (2001).

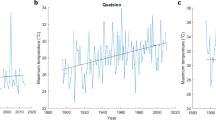

In Fig. 1a, the TCEV domain is the same as that used by Gabriele and Arnell (1991), namely \(2\le {\uptheta }_{*}\le 100\) and \(0.05\le {\uplambda }_{*}\le 1\). The figure compares this domain with the domains of frequently used distributions (GEV, GLO, GPA, PE3).

In Fig. 1b we extend the domain of TCEV parameters in the LMRD by raising the upper limit of \({\uplambda }_{*}\) from 1 to 5, to cover the wider range of values of \({\uplambda }_{2}\) and \({\uplambda }_{1}\) found in the case study. We kept the original range of \({\theta }_{*}\), with a maximum value of 100 (Arnell and Beran 1988; Gabriele and Arnell 1991). Higher values are possible, but for practical purposes 100 can be deemed a reliable upper limit. The following features of the extended LMRD are noted:

1. The TCEV domain moves from the Gumbel point towards higher values of L-skewness and L-kurtosis.

2. For some combinations of its parameters, the TCEV collapses to the Gumbel distribution as a special case. Under the inequality \({\theta }_{1}<{\theta }_{2}\) (introduced by Beran et al. 1986), this occurs if only one component is present (e.g., \({\uplambda }_{1}\to 0\) or \({\uptheta }_{1}\to 0\)).

3. The GEV, GLO and GPA lines lie within the TCEV domain for a wide range of L-skewness and L-kurtosis.

4. The extension fills a gap in the neighborhood of the Gumbel point. In the extended area, the curves for increasing \({\uplambda }_{*}\) (above 1) become progressively closer (almost indistinguishable), and those for \({\theta }_{*}\) are also very close to each other, making visual interpretation of sample points difficult. Understanding the physical behavior of the processes underlying the extended area may therefore become more important for a proper assessment of the TCEV model.
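
Because L-moment ratios are invariant to location and scale, the position of the TCEV in the LMRD depends only on \({\theta }_{*}\) and \({\lambda }_{*}\). With \(z=y/{\theta }_{1}-\ln {\lambda }_{1}\), one has \({\lambda }_{1}{e}^{-y/{\theta }_{1}}={e}^{-z}\) and \({\lambda }_{2}{e}^{-y/{\theta }_{2}}={\lambda }_{*}{e}^{-z/{\theta }_{*}}\), where \({\theta }_{*}={\theta }_{2}/{\theta }_{1}\) and \({\lambda }_{*}={\lambda }_{2}/{\lambda }_{1}^{1/{\theta }_{*}}\), so the standardized cdf is \(G(z)=\exp(-{e}^{-z}-{\lambda }_{*}{e}^{-z/{\theta }_{*}})\). The sketch below is a brute-force illustration, not the analytical expressions of Arnell and Beran (1988) or Gabriele and Arnell (1991): it approximates the probability weighted moments by the trapezoidal rule on a truncated grid (bounds and resolution are our assumptions) and treats \(G(z)\) as defined on the whole real line, thereby ignoring the probability \(\exp(-{\lambda }_{1}-{\lambda }_{2})\) of a year with no exceedance.

```python
import math


def tcev_lmoment_ratios(theta_star, lambda_star, n=200_000):
    """Approximate (tau3, tau4) of the standardized TCEV by the trapezoidal rule.

    PWMs beta_r = integral of z * G(z)**r * g(z) dz are accumulated on a truncated
    grid and converted to L-moments with the usual PWM relations.
    """
    lo, hi = -10.0, 60.0 * max(theta_star, 1.0)  # G(lo) ~ 0 and 1 - G(hi) ~ 0
    h = (hi - lo) / n
    betas = [0.0] * 4
    for j in range(n + 1):
        z = lo + j * h
        e1 = math.exp(-z)
        e2 = lambda_star * math.exp(-z / theta_star)
        big_g = math.exp(-e1 - e2)             # cdf G(z)
        dens = big_g * (e1 + e2 / theta_star)  # density g(z) = dG/dz
        term = (0.5 if j in (0, n) else 1.0) * z * dens
        for r in range(4):
            betas[r] += term                   # adds weight * z * G^r * g
            term *= big_g
    b0, b1, b2, b3 = (b * h for b in betas)
    l2 = 2 * b1 - b0
    l3 = 6 * b2 - 6 * b1 + b0
    l4 = 20 * b3 - 30 * b2 + 12 * b1 - b0
    return l3 / l2, l4 / l2
```

With \({\lambda }_{*}=0\) the function should return the Gumbel point (\({\tau }_{3}\approx 0.1699\), \({\tau }_{4}\approx 0.1504\)); sweeping \({\theta }_{*}\) over [2, 100] and \({\lambda }_{*}\) over [0.05, 5] would then trace a domain comparable to that of Fig. 1b.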

It should be stressed that other four-parameter distributions can span a domain in the LMRD similar to that of the TCEV. Of particular relevance is the four-parameter Kappa distribution (Hosking 1994), which has the GEV as a special case when the second shape parameter \(h=0\). Hosking showed that the Kappa distribution is the distribution of the largest exceedance in a year if \(H(x)\) corresponds to the Generalized Pareto distribution and the number of events in a year is sampled from a binomial rather than a Poisson distribution. He further showed that, as the binomial parameter n increases, the Kappa converges from below to the GEV line on the LMRD.

While several methods are available for estimating TCEV parameters, practical and implementation issues limit the choice. In general, the method of moments is not considered a reliable approach for distributions with three or more parameters, because of the high bias of higher-order sample moments in relatively small samples. In TCEV studies, only a few methods have been used: Rossi et al. (1984) suggested a Maximum Likelihood (ML) approach; Beran et al. (1986) derived TCEV Probability Weighted Moments (PWMs); Arnell and Beran (1988), Gabriele and Arnell (1991) and Gabriele and Iiritano (1994) reported L-moment expressions for TCEV parameters; and Connell and Pearson (2001) applied a least squares approach. For the evaluation of TCEV parameters, we use unbiased L-moment estimators (Hosking and Wallis 1995; Wang 1996), exploiting the computational approach for evaluating TCEV parameters from sample L-moments provided in Gabriele and Iiritano (1994).
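
The first step of this procedure, the computation of sample L-moment ratios, can be sketched as follows using the standard unbiased estimators of the probability weighted moments, \({b}_{r}={n}^{-1}\sum_{j}\frac{(j-1)\cdots (j-r)}{(n-1)\cdots (n-r)}{x}_{(j)}\), with \({x}_{(j)}\) the ascending order statistics. The function name is hypothetical, and the subsequent conversion of \({t}_{3}\) and \({t}_{4}\) into TCEV parameters following Gabriele and Iiritano (1994) is not reproduced here.

```python
def sample_lmoments(data):
    """Unbiased sample L-moments from unbiased PWM estimators b_0..b_3.

    Returns (l1, l2, t3, t4), with t3 = l3 / l2 and t4 = l4 / l2.
    """
    x = sorted(data)
    n = len(x)
    if n < 4:
        raise ValueError("at least 4 observations are needed")
    b = [0.0] * 4
    for j, xj in enumerate(x, start=1):  # j = rank in ascending order
        weight = 1.0
        b[0] += xj
        for r in range(1, 4):
            # weight = (j-1)...(j-r) / ((n-1)...(n-r))
            weight *= (j - r) / (n - r)
            b[r] += weight * xj
    b0, b1, b2, b3 = (v / n for v in b)
    l1 = b0
    l2 = 2 * b1 - b0
    l3 = 6 * b2 - 6 * b1 + b0
    l4 = 20 * b3 - 30 * b2 + 12 * b1 - b0
    return l1, l2, l3 / l2, l4 / l2
```

The pair \(({t}_{3},{t}_{4})\) returned for an annual maximum series is the sample point that is plotted on the LMRD.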

5 Case study: dataset and results

This section evaluates the ability of the TCEV to fit annual maximum peak flows in eastern Australia, where multidecadal variability is known to affect flood risk. The aim is not to compare the TCEV against other models but to evaluate its potential in a region where flood risk is affected by multidecadal variability.

Annual maximum instantaneous discharge data for New South Wales (NSW) and Queensland (QLD) employed in this study were obtained from Australian Rainfall and Runoff (ARR) Project 5 (P5) Regional Flood Methods (Rahman et al. 2015). The dataset is very large and comprises flood data from 372 gauged sites, with record lengths ranging between 20 and 102 years and observations from 1910 to 2012. Data for the same sites are provided online by the Bureau of Meteorology (BoM) of the Australian Government and are freely available at http://www.bom.gov.au/waterdata/. To maximize record length, we extended the P5 project data up to 2020 using this latter source. Locations were selected with the aim of sampling the eastern coast of Australia at different latitudes; hence, we focused on stations with longer records spread along the coast of Eastern Australia, all located in New South Wales and Queensland. The main features of catchments and data series are reported in Table 1, while their geographical position is shown in Fig. 2.

Table 2 reports estimates of the TCEV parameters and the sample L-moment ratios (\({t }_{3}, \, {t }_{4}\)).

Figure 3 presents the LMRD for the case study sites, showing that all sites lie within the extended TCEV domain of Fig. 1b. With respect to the curves of the three-parameter distributions shown in the LMRD, all sites lie below the Generalized Logistic line; 1 of the 12 sites lies between the GLO and GEV lines, while the remaining 11 sites lie below the GEV line, closer to the Generalized Pareto or Pearson type 3 lines.

Figure 4 presents TCEV probability plots for all sites with parameters estimated using L-moments.

A good fit is obtained to the observed annual maximum series at all sites. However, of more interest is evidence of the presence of two components, indicated by a dog-leg, that is, a step-change in the slopes of the extremal straight lines of the cdf. Plots at all but one site (Pascoe River at Fall Creek) clearly display such a sharp transition in curvature.
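
The dog-leg follows directly from Eq. (8). On a Gumbel probability plot, \(y\) is plotted against the reduced variate \(z=-\ln (-\ln F)\), which for the TCEV is \(z=-\ln ({\lambda }_{1}{e}^{-y/{\theta }_{1}}+{\lambda }_{2}{e}^{-y/{\theta }_{2}})\). The local slope \(dy/dz\) is therefore a harmonic mean of \({\theta }_{1}\) and \({\theta }_{2}\) weighted by the two terms, and it moves from about \({\theta }_{1}\) to about \({\theta }_{2}\) around \({y}_{c}=\ln ({\lambda }_{1}/{\lambda }_{2})/(1/{\theta }_{1}-1/{\theta }_{2})\), where the two terms are equal. The sketch below uses hypothetical function names and parameter values.

```python
import math


def gumbel_reduced_variate(y, lam1, theta1, lam2, theta2):
    """z = -ln(-ln F(y)) for the TCEV of Eq. (8)."""
    return -math.log(lam1 * math.exp(-y / theta1) + lam2 * math.exp(-y / theta2))


def local_slope(y, lam1, theta1, lam2, theta2):
    """dy/dz on a Gumbel plot: weighted harmonic mean of theta1 and theta2."""
    a = lam1 * math.exp(-y / theta1)
    b = lam2 * math.exp(-y / theta2)
    return (a + b) / (a / theta1 + b / theta2)


def crossover(lam1, theta1, lam2, theta2):
    """y at which both components contribute equally (requires theta2 > theta1)."""
    return math.log(lam1 / lam2) / (1.0 / theta1 - 1.0 / theta2)


params = (10.0, 1.0, 0.2, 5.0)  # hypothetical lam1, theta1, lam2, theta2
for y in (0.5, crossover(*params), 25.0):
    print(round(y, 2), round(local_slope(y, *params), 3))
```

For these values, the slope is close to \({\theta }_{1}=1\) at low flows, equals \(2{\theta }_{1}{\theta }_{2}/({\theta }_{1}+{\theta }_{2})\) at the crossover, and approaches \({\theta }_{2}=5\) in the upper tail.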

6 Discussion

One of the main motivations for this work comes from the observation that, in some regions, the flood regime is subject to the influence of low-frequency climate variability. This is the case for Eastern Australia, for which several studies have highlighted the influence of decadal climate variations on flood phenomenology (Franks and Kuczera 2002; Micevski et al. 2006), thus challenging the classical hypothesis that annual maximum floods can be treated as identically distributed. Using flood frequency data for the same region, stratified through a robust ENSO classification, Kiem et al. (2003) showed that significantly different regional flood index distributions are obtained from data series stratified by IPO multidecadal phases. In particular, they found strong control by La Niña events, modulated in magnitude by multidecadal IPO processes, and a strong shift in flood frequency curves when comparing extremes that occurred in different climatic epochs.

The results in Sect. 5 suggest that the TCEV distribution can adequately fit the marginal distribution over the entire range of observed values without the explicit need to resort to climate covariates or to remove potentially influential low values. Since Potter (1958), a dog-leg shaped cdf has been considered a key signature of the presence of different flood generation processes, and Fig. 4 clearly displays this signature. Nevertheless, we regard a good fit as a necessary, but not sufficient, condition for justifying the use of the TCEV. Addressing this rigorously would require a clear and complete understanding of all the physical processes linking climatic patterns to flood generation, which would allow a continuous simulation approach similar to that described in Samuel and Sivapalan (2008). Such a task is well beyond the scope of this study; nonetheless, some headway can be made by reviewing our understanding of the hydroclimatic mechanisms responsible for floods in Eastern Australia and assessing whether these mechanisms are consistent with the assumptions in Sect. 3 that led to the TCEV.

Kiem et al. (2003) show that IPO negative phases have a significantly higher frequency of La Niña events than IPO positive phases. While La Niña years are known to have above-average rainfall, it is of interest to understand which features of rainfall events change in La Niña years. In developing a high-resolution stochastic rainfall model, Frost (2003) analyzed storm-event statistics using long-term high-resolution rainfall records at Sydney and Brisbane. He found little difference in average storm duration and intensity among La Niña, El Niño and Neutral years. What differed significantly was the average duration of dry spells: for example, at Sydney, average dry spells were 41.6 h in La Niña years, 45.3 h in Neutral years and 49.4 h in El Niño years.

The increased frequency of rainfall events in La Niña years would be expected to result in wetter antecedent moisture conditions. This was confirmed by Pui et al. (2011), who investigated the influence of IPO phases on flood events using a large dataset of rainfall and flow across Australia. They concluded that the significant differences in flood characteristics between the two IPO phases are due more to differences in antecedent soil moisture conditions than to differences in rainfall. In La Niña years, wetter antecedent moisture conditions increase the occurrence of significant flood events and increase flood magnitude for the same rainfall.

Following these observations, we may identify wet/dry epochs according to the IPO phase (\(IPO<-0.5\) and \(IPO>-0.5\), respectively). Hence, the two-component-per-epoch model of Sect. 3.2 (i.e., Case 2, as defined by Eqs. (21) to (24)) appears conceptually consistent with the reviewed evidence. The most significant difference between IPO wet and dry epochs is the frequency of La Niña years. If component 1 is associated with non-La Niña years and component 2 with La Niña years, we would expect the exceedance distributions to satisfy \({H}_{1}(x)>{H}_{2}(x)\), which for exponentially distributed exceedances implies \({\theta }_{2}>{\theta }_{1}\). This is consistent with the clear separation between \({\theta }_{2}\) and \({\theta }_{1}\) reported in Table 2.
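The step from \({H}_{1}(x)>{H}_{2}(x)\) to \({\theta }_{2}>{\theta }_{1}\) takes one line. This assumes exponential exceedances with mean \(\theta_i\), the parameterization implied by the TCEV form used here:

```latex
H_i(x) = 1 - e^{-x/\theta_i}, \qquad x \ge 0,\ \theta_i > 0,\ i = 1, 2

H_1(x) > H_2(x)
  \iff e^{-x/\theta_1} < e^{-x/\theta_2}
  \iff \frac{x}{\theta_1} > \frac{x}{\theta_2}
  \iff \theta_2 > \theta_1 \qquad (x > 0)
```

At \(x = 0\) both cdfs equal zero, so the strict inequality applies for \(x > 0\).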

Table 2 also reveals a clear separation for the inequality \({\uplambda }_{1}>{\uplambda }_{2}\). Kiem et al. (2003) show that in IPO positive phases there is about a 12% chance of a La Niña year, while in IPO negative phases the chance is 50%. Based on the results reported by Frost (2003), we would expect up to 20% more rainfall events in a La Niña year than in a non-La Niña year. We would therefore expect \(\lambda_{1,1}^{\prime }\) to be considerably greater than \(\lambda_{2,1}^{\prime }\) in an IPO positive phase, and \(\lambda_{1,2}^{\prime }\) to be slightly less than \(\lambda_{2,2}^{\prime }\) in an IPO negative phase. On balance, this suggests that \({\uplambda }_{1}>{\uplambda }_{2}\) is plausible. Moreover, the increase of \(\lambda_{2,2}^{\prime }\) relative to \(\lambda_{1,2}^{\prime }\) (counterbalanced somewhat by a lower \({p}_{2}\) weight) inflates \({\uplambda }_{2}\). This contributes to the fact that 5 of the 12 sites in Table 2 have \({\uplambda }_{2} > 1\), and hence a higher \({\uplambda }_{*}\). In fact, \({\uplambda }_{*}\) exceeds 0.7 at 6 of the 12 sites and exceeds 1 at 3 sites, placing the TCEV parameters in the extended area of the TCEV domain in Fig. 3.
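A back-of-the-envelope calculation makes the argument about the component rates explicit. The assumptions are ours, not the paper's. First, because Frost (2003) found similar storm durations and intensities across ENSO phases, the number of storms per year is taken to scale with the inverse of the mean dry-spell length. El Niño years give the upper bound (\(49.4/41.6 \approx 1.19\), i.e., "up to 20%"). Second, a non-La Niña year is normalized to contribute one unit of events. The outputs are relative rates for illustration, not the \(\lambda^{\prime}\) values of Sect. 3.2, and all names are hypothetical.

```python
# Mean dry-spell durations at Sydney reported by Frost (2003), in hours.
DRY_SPELL_HOURS = {"la_nina": 41.6, "neutral": 45.3, "el_nino": 49.4}

# Probability of a La Nina year in each IPO phase (Kiem et al. 2003).
P_LA_NINA = {"IPO positive": 0.12, "IPO negative": 0.50}


def event_frequency_boost(dry_la_nina, dry_other):
    """Storm-count ratio of a La Nina year to another year.

    Assumption: storm duration does not change with ENSO phase and storms are
    short relative to dry spells, so storms per year ~ 1 / (mean dry spell).
    """
    return dry_other / dry_la_nina


def relative_component_rates(p_la_nina, boost):
    """Hypothetical normalised yearly event counts of component 1 (non-La Nina
    years) and component 2 (La Nina years); a non-La Nina year counts as 1."""
    return (1.0 - p_la_nina) * 1.0, p_la_nina * boost


boost = event_frequency_boost(DRY_SPELL_HOURS["la_nina"], DRY_SPELL_HOURS["el_nino"])
for phase, p in P_LA_NINA.items():
    rate1, rate2 = relative_component_rates(p, boost)
    print(f"{phase}: component 1 = {rate1:.3f}, component 2 = {rate2:.3f}, "
          f"ratio = {rate1 / rate2:.2f}")
```

The component-1 to component-2 ratio is roughly 6 in the IPO positive phase and roughly 0.84 in the IPO negative phase. This matches the ordering stated above. Using the Neutral-year dry spell (45.3 h) in place of the El Niño value gives a smaller boost of about 9%.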

To provide a numerical assessment of the proposed theoretical derivation of the TCEV from thinning and superposition of Poisson processes, we performed Monte Carlo simulations. Their underlying process is consistent with the physical evidence documented above and with the two cases defined in Sect. 3. To characterize the TCEV parameters, following Kiem et al. (2003), we assumed that the probability of falling into the wet epoch equals the percentage of years with \(IPO<-0.5\). The results of this analysis, reported in the Appendix, support the agreement between the TCEV distribution and this class of underlying processes.
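The basic building block of such experiments can be sketched with the Python standard library. The sketch covers only the stationary, single-epoch case: two independent Poisson streams with exponential exceedances, whose annual maximum should follow the TCEV exactly. It does not reproduce the epoch-switching experiments of the Appendix. The parameter values and function names are hypothetical. The annual maximum is set to 0 in years without events, consistent with \(F(0) = \exp(-\uplambda_1-\uplambda_2)\).

```python
import bisect
import math
import random


def poisson_count(rng, lam):
    """Events in one year from a Poisson process with rate lam (per year),
    obtained by accumulating exponential inter-arrival times."""
    if lam <= 0:
        return 0
    count, t = 0, rng.expovariate(lam)
    while t < 1.0:
        count += 1
        t += rng.expovariate(lam)
    return count


def annual_maximum(rng, lam1, theta1, lam2, theta2):
    """Largest exceedance in one year from two independent Poisson streams with
    exponential exceedances of mean theta1 and theta2 (0.0 if no event occurs)."""
    peaks = [rng.expovariate(1.0 / theta1) for _ in range(poisson_count(rng, lam1))]
    peaks += [rng.expovariate(1.0 / theta2) for _ in range(poisson_count(rng, lam2))]
    return max(peaks, default=0.0)


def tcev_cdf(x, lam1, theta1, lam2, theta2):
    """TCEV cdf F(x) = exp(-lam1*exp(-x/theta1) - lam2*exp(-x/theta2)), x >= 0."""
    return math.exp(-lam1 * math.exp(-x / theta1) - lam2 * math.exp(-x / theta2))


def max_cdf_gap(params=(3.0, 1.0, 1.2, 2.5), n_years=20000, seed=1):
    """Largest |empirical cdf - TCEV cdf| over a grid of x values in [0, 15]."""
    rng = random.Random(seed)
    sample = sorted(annual_maximum(rng, *params) for _ in range(n_years))
    grid = [0.25 * i for i in range(61)]
    return max(abs(bisect.bisect_right(sample, x) / n_years - tcev_cdf(x, *params))
               for x in grid)


print(f"max |ECDF - TCEV| = {max_cdf_gap():.4f}")
```

The default parameters set \(\uplambda_2 > 1\), as at several sites in Table 2, to show that nothing in the construction requires \(\uplambda_2 < 1\). An epoch-switching version would draw the epoch for each year first and then use that epoch's rates.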

The theoretical development of the TCEV in Sect. 3 and the analysis of the case study suggest that the TCEV is a robust choice when dealing with mixed processes. Several studies report that different mechanisms are responsible for flood runoff generation in a significant portion of Australian catchments (e.g., Johnson et al. 2016). Trancoso et al. (2016) analyzed the hydrological regime of a large number of catchments in Eastern Australia, identifying three dominant mechanisms in the streamflow hydrograph (i.e., Horton overland flow, subsurface stormflow and return flow). Jarihani et al. (2017) applied a coarse-scale water balance to the Upper Burdekin catchment, a large basin in Queensland whose hydrological behavior is considered representative of basins across northern Australia and Queensland. They observed that, at the annual scale, runoff is triggered by saturation overland flow (from November to March, owing to wetter seasonal conditions) and by Hortonian overland flow (from March to October), with weights depending on local hydrological factors. Similar results are reported in plot studies in Australian savannas (McIvor et al. 1995; Roth 2004; Silburn et al. 2011). Noting that high rainfall variability is linked to ENSO events, with above-average rainfall during La Niña events and drier conditions during El Niño events, Jarihani et al. (2017) also concluded that the impact of these changes on runoff events is mainly related to a general increase in antecedent soil moisture and a higher frequency of different types of events.

The TCEV can be used without the need to identify the type of event a priori, either from the available annual maximum series or from the marginal annual maximum distribution of each event type (Pelosi et al. 2020). The marginal distribution of the annual maxima (AM) of such a mixed process could also be obtained using additive mixture distributions. Their use, however, requires knowledge of the marginal distributions of the two types of events and of their rates of occurrence in different climate epochs. Applying additive mixture models to annual maximum flood series affected by low-frequency climatic signatures raises the problem of identifying the periods according to their influence on flood generation mechanisms. Open questions include whether (i) the climate phenomena and phases are always clearly distinguishable and historically identifiable without uncertainty; (ii) the flood generation processes are well recognized, so that the number of years whose maxima belong to each type is known; and (iii) how to account for the possibility that more than one type of event occurs in some years (as may arise from climatic phases and phenomena).
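The contrast between the two model families can be stated compactly. In the additive form, \(p\) is the proportion of years whose annual maximum is of type A, and \(F_A\), \(F_B\) are the annual maximum distributions of each type. These are generic symbols introduced here for illustration, not the specific mixture models of the cited studies. In the compound (TCEV) form, each factor is the probability that no event of that component exceeds \(x\) during the year:

```latex
\text{additive mixture:}\quad
F(x) = p\,F_A(x) + (1 - p)\,F_B(x), \qquad 0 \le p \le 1

\text{compound (TCEV):}\quad
F(x) = F_1(x)\,F_2(x)
     = \exp\!\left(-\lambda_1 e^{-x/\theta_1}\right)\exp\!\left(-\lambda_2 e^{-x/\theta_2}\right)
```

Only the additive form needs \(p\), \(F_A\) and \(F_B\), and therefore a classification of years by event type. The compound form makes no such classification and allows both types of event to occur in the same year.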

With regard to TCEV parameter estimation, the original formulation of the TCEV included two physical constraints: one on the values of the variable (\(x\ge 0\)) and one on the relative values of two parameters, i.e., \({\uplambda }_{1}>{\uplambda }_{2}\ge 0\). The latter can be justified on the conceptual basis of the nature of flood generation mechanisms. Indeed, its definition (Rossi et al. 1984, p. 852) also relies on a semantic distinction between the two components: the “ordinary” component generates “frequent” floods, while the “outlying distribution” is responsible for rare and heavy events. The constraint \({\theta }_{2}>{\theta }_{1}>0\) is theoretically justified by the condition (\({\theta }_{*}>1\)) imposed by Beran et al. (1986) to derive analytical expressions for the moments and PWMs.
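The dimensionless parameters \({\theta }_{*}\) and \({\uplambda }_{*}\) used to position a TCEV in the L-moment ratio diagram are, in the usual TCEV convention, \(\theta_* = \theta_2/\theta_1\) and \(\uplambda_* = \uplambda_2/\uplambda_1^{1/\theta_*}\). The sketch below assumes these definitions, since the defining equations lie outside this section. It enforces \(\theta_* > 1\) as required by Beran et al. (1986). It deliberately does not enforce an ordering of \(\uplambda_1\) and \(\uplambda_2\), because the discussion here considers cases in which the two rates have similar values. The function name is hypothetical.

```python
def tcev_dimensionless(lam1, theta1, lam2, theta2):
    """Return (theta_star, lam_star) = (theta2/theta1, lam2 / lam1**(1/theta_star))."""
    if not theta2 > theta1 > 0:
        raise ValueError("TCEV requires theta2 > theta1 > 0 (theta_star > 1)")
    if lam1 <= 0 or lam2 < 0:
        raise ValueError("rates must satisfy lam1 > 0 and lam2 >= 0")
    theta_star = theta2 / theta1
    # No ordering between lam1 and lam2 is imposed here (see lead-in)
    lam_star = lam2 / lam1 ** (1.0 / theta_star)
    return theta_star, lam_star
```

With equal rates \(\uplambda_1 = \uplambda_2 = 2\) and \(\theta_* = 2\), the function gives \(\uplambda_* = \sqrt{2} > 1\). This illustrates how similar rates above 1 can push \(\uplambda_*\) past 1.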

Kjeldsen et al. (2018) stated that the use of a TCEV distribution should be considered reasonable whenever two processes generate an event in every year, “e.g., at least one rainfall and one snowmelt flood event in each year”. In fact, as shown in Sect. 2, the TCEV arises as the distribution of the annual maximum in a process with Poissonian occurrences. In such a process, the Poisson count may be zero, with probability \(\exp(-\uplambda )\), and its mean \(\uplambda\) may be less than 1. To our knowledge, in almost all TCEV applications in regional analysis of annual maximum rainfall and floods, the mean annual number of extraordinary events (\({\uplambda }_{2}\)) is less than 1. Following the arguments and results of Sects. 4 and 5, this paper shows that the TCEV is also applicable where \({\uplambda }_{1}\) and \({\uplambda }_{2}\) have similar values, both may exceed 1, and their ratio may be close to 1 or even smaller. The TCEV can therefore be considered a candidate parent distribution for infrequent phenomena, as in its regional applications (see Castellarin et al. 2012), as well as for high-frequency (i.e., seasonal; Strupczewski et al. 2012) and low-frequency phenomena.
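The zero-event argument can be made explicit by conditioning on the number of events \(N\) in a year. Exceedances are i.i.d. with cdf \(H(x)\), and the annual maximum is set to 0 when \(N = 0\) (the \(n = 0\) term, since \(H(x)^0 = 1\)). For two independent Poisson components with exponential exceedances, the product of the two factors gives the TCEV, and its value at \(x = 0\) is the probability of a year without events:

```latex
F(x) = \Pr\{X \le x\}
     = \sum_{n=0}^{\infty} \frac{e^{-\lambda}\lambda^{n}}{n!}\,H(x)^{n}
     = \exp\{-\lambda\,[1 - H(x)]\}

H_i(x) = 1 - e^{-x/\theta_i}
\;\Rightarrow\;
F(x) = \exp\!\left(-\lambda_1 e^{-x/\theta_1} - \lambda_2 e^{-x/\theta_2}\right),
\qquad x \ge 0

F(0) = e^{-(\lambda_1 + \lambda_2)} = \Pr\{N_1 + N_2 = 0\}
```

For example, \(\uplambda_1 + \uplambda_2 = 0.5\) gives \(F(0) = e^{-0.5} \approx 0.61\): roughly six years in ten have no event at all. Nothing in the derivation requires an event in every year.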

The LMRD of Fig. 3 also shows that all but one of the 12 sites fall below the GEV line, while all of them lie within the domain of the four-parameter Kappa distribution (Hosking 1994). As noted in Sect. 2.1, the GEV is the distribution of the largest exceedance in a year if \(H(x)\) is a Generalized Pareto distribution and the number of events in a year is Poisson distributed. The Kappa is obtained if \(H(x)\) is a Generalized Pareto distribution and the number of events in a year is sampled from a binomial. While the sample of 12 sites is small, the separation shown in Fig. 3 is conceptually consistent with the two-component foundation of the TCEV. It is, however, also compatible with a Kappa or with other three-parameter distributions (GEV, GLO, GPA, PE3).
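The Poisson/binomial contrast can be written out explicitly. The sketch assumes Hosking's (1994) parameterization of the Generalized Pareto, GEV and Kappa distributions (shape \(k\), location \(\xi\), scale \(\alpha\)). The primed location and scale parameters are our own notation, obtained by a linear change of variables: \(\alpha' = \alpha\lambda^{-k}\) and \(\xi' = \xi + \alpha(1-\lambda^{-k})/k\), with \(\alpha''\) and \(\xi''\) given by the same expressions with \(\lambda\) replaced by \(np\):

```latex
1 - H(x) = \left[1 - \frac{k(x - \xi)}{\alpha}\right]^{1/k}
\qquad \text{(Generalized Pareto exceedances)}

N \sim \mathrm{Poisson}(\lambda):\quad
F(x) = \exp\{-\lambda[1 - H(x)]\}
     = \exp\left\{-\left[1 - \frac{k(x - \xi')}{\alpha'}\right]^{1/k}\right\}
\qquad \text{(GEV)}

N \sim \mathrm{Binomial}(n, p):\quad
F(x) = \{1 - p[1 - H(x)]\}^{n}
     = \left\{1 - h\left[1 - \frac{k(x - \xi'')}{\alpha''}\right]^{1/k}\right\}^{1/h},
\quad h = \frac{1}{n}
\qquad \text{(Kappa)}
```

Only the values \(h = 1/n\) arise this way. Letting \(n \to \infty\) with \(np = \lambda\) fixed gives \(h \to 0\), which recovers the GEV.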

Collectively, we believe this evidence provides reasonable physical support for the hypotheses leading to the choice of a TCEV distribution when the dependence of the process on physical (e.g., climatic) factors has been assessed. Further insights into the effect of multidecadal climatic fluctuations and ENSO events on the flood cdf could be obtained through a deeper analysis of stratified series. Such an analysis could in turn shed light on the presence of multiple runoff generation mechanisms in each epoch.

We conclude with a comment on the methods used for parameter estimation and distribution assessment. As mentioned in the Introduction, a number of studies have highlighted the problem of slow convergence to asymptotic distributions and the need for long records. Following these recommendations, we selected some of the longest flood series available in the coastal basins of Eastern Australia. Record lengths range from 53 to 101 years, which, especially for the shorter series, may bias the estimation of higher-order L-moments. On the other hand, this paper does not address the comparison between different probabilistic models. Indeed, the use of mixed distributions both calls for and enables the development of new model selection criteria strongly grounded in the knowledge and investigation of physical processes.

7 Conclusions

A theoretical formulation is presented supporting the use of compound mixture distributions to describe flood populations affected by low-frequency climate oscillations, such as the decadal IPO and ENSO-related phenomena. The formulation exploits the superposition and thinning properties of Poisson counting processes and shows that the TCEV is a promising and robust candidate for this purpose. This is confirmed by the good fits of the TCEV distribution to the longest available series of annual maximum floods in the coastal basins of Queensland and New South Wales in Eastern Australia. The TCEV is shown to adequately fit the marginal distribution over the entire range of observed values without the explicit need to resort to climate covariates or to remove potentially influential low values. Furthermore, a literature review of evidence on rainfall and flood generation in the different IPO epochs shows that this evidence is not inconsistent with the assumptions underpinning the TCEV.

Previous applications of the TCEV have focused on regional analysis. This study shows that the TCEV can be applied to at-site analyses of flood series (with lengths ranging from 53 to 101 years) in which the dog-leg signature of different flood generation processes is evident. It also extends the domain covered by the TCEV distribution in the L-moment ratio diagram and shows, for the Eastern Australian case study, that all sites lie within the extended TCEV domain.

This study shows that the choice of a parent distribution can be guided by deeper knowledge of the climate and runoff controls affecting floods. Physically based reasoning has already been incorporated into flood frequency analysis in a significant number of studies using derived distribution or mixed population approaches. Nonetheless, at the operational level, FFA handbooks and guidelines all over the world largely rely on curve fitting and statistical testing. While we acknowledge that this is necessary, it is not necessarily sufficient. Curve fitting cannot subject a probability model to scrutiny beyond the observed data. When the intent of FFA is to extrapolate well beyond the data, a deeper form of model scrutiny is required.

References

Alila Y, Mtiraoui A (2002) Implications of heterogeneous flood-frequency distributions on traditional stream-discharge prediction techniques. Hydrol Process 16:1065–1084. https://doi.org/10.1002/hyp.346

Archer DR (1981) A catchment approach to flood estimation. J Inst Water Eng Sci 35:275–289

Arnell N, Beran M (1988) Probability-weighted moments estimators for TCEV parameters. Technical Report Institute of Hydrology, Wallingford

Arnell NW, Salvatore G (1988) Extreme value distribution in regional flood frequency analysis. Water Resour Res 24:879–887

Asquith WH (2021) lmomco: L-moments, trimmed L-moments, L-comoments, and many distributions. R package version 2.3.7

Barth NA, Villarini G, Nayak MA, White K (2016) Mixed populations and annual flood frequency estimates in the western United States: the role of atmospheric rivers. Water Resour Res 53:257–269. https://doi.org/10.1002/2016WR019064

Beran M, Hosking JRM, Arnell N (1986) Comment on “two-component extreme value distribution for flood frequency analysis” by Fabio Rossi, Mauro Florentino, and Pasquale Versace. Water Resour Res 22:263–266. https://doi.org/10.1029/WR022i002p00263

Beven K, Wood EF (1983) Catchment geomorphology and the dynamics of runoff contributing areas. J Hydrol 65:139–158. https://doi.org/10.1016/0022-1694(83)90214-7

Blöschl G, Hall J, Parajka J et al (2017) Changing climate shifts timing of European floods. Science 357:588–590. https://doi.org/10.1126/science.aan2506

Blöschl G, Hall J, Viglione A et al (2019) Changing climate both increases and decreases European river floods. Nature 573:108–111. https://doi.org/10.1038/s41586-019-1495-6

Bobée B, Rasmussen PF (1995) Recent advances in flood frequency analysis. Rev Geophys 33:1111–1116. https://doi.org/10.1029/95RG00287

Bocchiola D, Rosso R (2014) Safety of Italian dams in the face of flood hazard. Adv Water Resour 71:23–31. https://doi.org/10.1016/j.advwatres.2014.05.006

Castellarin A, Kohnová S, Gaál L, Fleig A, Salinas J, Toumazis A, Kjeldsen T, Macdonald N (2012) Review of applied-statistical methods for flood-frequency analysis in Europe. NERC/Centre for Ecology & Hydrology http://nora.nerc.ac.uk/id/eprint/19286

Chandramohan J, Liang LK (1985) Bernoulli, multinomial and Markov chain thinning of some point processes and some results about the superposition of dependent renewal processes. J Appl Probab 22(4):828–835

Connell RJ, Pearson CP (2001) Two-component extreme value distribution applied to Canterbury annual maximum flood peaks. J Hydrol (New Zealand) 40:105–127

Cunnane C (1985) Factors affecting choice of distribution for flood series. Hydrol Sci J 30:25–36. https://doi.org/10.1080/02626668509490969

Cunnane C (1989) Statistical distributions for flood frequency analysis. Oper Hydrol Rep 33:718

De Michele C (2019) Advances in deriving the exact distribution of maximum annual daily precipitation. Water (Switzerland) 11:2322. https://doi.org/10.3390/w11112322

Do HX, Westra S, Leonard M (2017) A global-scale investigation of trends in annual maximum streamflow. J Hydrol 552:28–43. https://doi.org/10.1016/j.jhydrol.2017.06.015

El Adlouni S, Bobée B, Ouarda TBMJ (2008) On the tails of extreme event distributions in hydrology. J Hydrol 355:16–33. https://doi.org/10.1016/j.jhydrol.2008.02.011

Fiorentino M, Versace P, Rossi F (1985) Regional flood frequency estimation using the two-component extreme value distribution. Hydrol Sci J 30:51–64. https://doi.org/10.1080/02626668509490971

Fiorentino M, Gabriele S, Rossi F, Versace P (1987) A hierarchical approach to regional flood frequency analysis. In: Singh VP (ed) Regional flood frequency analysis. Springer, Netherlands, pp 35–49

Fischer S, Schumann A, Schulte M (2016) Characterisation of seasonal flood types according to timescales in mixed probability distributions. J Hydrol 539:38–56. https://doi.org/10.1016/j.jhydrol.2016.05.005

Franks SW (2004) Multi-decadal climate variability, New South Wales, Australia. Water Sci Technol 49:133–140. https://doi.org/10.2166/wst.2004.0437

Franks SW, Kuczera G (2002) Flood frequency analysis: evidence and implications of secular climate variability, New South Wales. Water Resour Res 38:201–207. https://doi.org/10.1029/2001wr000232

Frost AJ (2003) Spatio-temporal hidden Markov models for incorporating interannual variability in rainfall. PhD thesis, The University of Newcastle, Australia

Fuller WE (1914) Flood flows. Trans Am Soc Civ Eng 77:564–617. https://doi.org/10.1061/taceat.0002552

Gaál L, Szolgay J, Kohnová S, Hlavčová K, Parajka J, Viglione A et al (2015) Dependence between flood peaks and volumes: a case study on climate and hydrological controls. Hydrol Sci J 60:968–984. https://doi.org/10.1080/02626667.2014.951361

Gabriele S, Iiritano G (1994) Alcuni aspetti teorici ed applicativi nella regionalizzazione delle piogge con il modello TCEV. GNDCI–Linea 1 U.O. 1.4, Pubblicazione N. 1089. Rende (CS) (in Italian)

Gabriele S, Arnell N (1991) A hierarchical approach to regional flood frequency analysis. Water Resour Res 27:1281–1289. https://doi.org/10.1029/91WR00238

Grego JM, Yates PA (2010) Point and standard error estimation for quantiles of mixed flood distributions. J Hydrol 391:289–301. https://doi.org/10.1016/j.jhydrol.2010.07.027

Gumbel EJ (1958) Statistics of extremes. Columbia University Press, New York

Hazen A (1914a) Storage to be provided impounding reservoirs for municipal water supply. Trans Am Soc Civ Eng 77:1539–1659

Hazen A (1914b) Discussion on “flood flows” by W. E. Fuller. Trans Am Soc Civ Eng 77:626–632

Hesarkazzazi S, Arabzadeh R, Hajibabaei M et al (2021) Stationary vs non-stationary modelling of flood frequency distribution across northwest England. Hydrol Sci J 66:729–744. https://doi.org/10.1080/02626667.2021.1884685

Hirschboeck KK (1987) Hydroclimatically defined mixed distributions in partial duration flood series. In: Singh VP (ed) Hydrologic frequency modeling. Reidel, Norwell, Mass, pp 199–212

Horton RE (1931) The field, scope, and status of the science of hydrology. Eos Trans Am Geophys Union 12:189–202. https://doi.org/10.1029/TR012i001p00189-2

Hosking JRM (1990) L-moments: analysis and estimation of distributions using linear combinations of order statistics. J R Stat Soc Ser B 52:105–124. https://doi.org/10.1111/j.2517-6161.1990.tb01775.x

Hosking JRM (1994) Four-parameter kappa distribution. IBM J Res Dev 38:251–258. https://doi.org/10.1147/rd.383.0251

Hosking JRM, Wallis JR (1995) A comparison of unbiased and plotting-position estimators of L moments. Water Resour Res 31:2019–2025. https://doi.org/10.1029/95WR01230

House PK, Hirschboeck KK (1997) Hydroclimatological and paleohydrological context of extreme winter flooding in Arizona. Rev Eng Geol 11:1–24

Iacobellis V, Fiorentino M (2000) Derived distribution of floods based on the concept of partial area coverage with a climatic appeal. Water Resour Res 36:469–482. https://doi.org/10.1029/1999WR900287

Ishak E, Rahman A (2019) Examination of changes in flood data in Australia. Water (Switzerland) 11:1–14. https://doi.org/10.3390/w11081734

Isham V (1980) Dependent thinning of point processes. J Appl Probab 17(4):987–995

Jarihani B, Sidle RC, Bartley R et al (2017) Characterisation of hydrological response to rainfall at multi spatio-temporal scales in savannas of semi-arid Australia. Water (Switzerland) 9:7–9. https://doi.org/10.3390/w9070540

Johnson F, White CJ, van Dijk A et al (2016) Natural hazards in Australia: floods. Clim Change 139:21–35. https://doi.org/10.1007/s10584-016-1689-y

Khaliq MN, Ouarda TBMJ, Ondo JC et al (2006) Frequency analysis of a sequence of dependent and/or non-stationary hydro-meteorological observations: a review. J Hydrol 329:534–552. https://doi.org/10.1016/j.jhydrol.2006.03.004

Kiem AS, Franks SW (2004) Multi-decadal variability of drought risk, eastern Australia. Hydrol Process 18:2039–2050. https://doi.org/10.1002/hyp.1460

Kiem AS, Franks SW, Kuczera G (2003) Multi-decadal variability of flood risk. Geophys Res Lett. https://doi.org/10.1029/2002GL015992

Kjeldsen TR, Ahn H, Prosdocimi I, Heo JH (2018) Mixture Gumbel models for extreme series including infrequent phenomena. Hydrol Sci J 63:1927–1940. https://doi.org/10.1080/02626667.2018.1546956

Klemes V (2000) Tall Tales about Tails of Hydrological Distributions. I. J Hydrol Eng 5:227–231. https://doi.org/10.1061/(ASCE)1084-0699(2000)5:3(227)

Koutsoyiannis D (2004a) Statistics of extremes and estimation of extreme rainfall: I. Theoretical investigation. Hydrol Sci J 49:575–590. https://doi.org/10.1623/hysj.49.4.575.54430

Koutsoyiannis D (2004b) Statistics of extremes and estimation of extreme rainfall: II. Empirical investigation of long rainfall records. Hydrol Sci J 49:591–610. https://doi.org/10.1623/hysj.49.4.591.54424

Koutsoyiannis D (2013) Hydrology and change. Hydrol Sci J 58:1177–1197. https://doi.org/10.1080/02626667.2013.804626

Laio F, Di Baldassarre G, Montanari A (2009) Model selection techniques for the frequency analysis of hydrological extremes. Water Resour Res. https://doi.org/10.1029/2007WR006666

Laio F, Allamano P, Claps P (2010) Exploiting the information content of hydrological “outliers” for goodness-of-fit testing. Hydrol Earth Syst Sci 14:1909–1917. https://doi.org/10.5194/hess-14-1909-2010

Lewis P, Shedler G (1979) Simulation of non-homogeneous Poisson process by thinning. Nav Res Logist 26:403–413

Lombardo F, Napolitano F, Russo F, Koutsoyiannis D (2019) On the exact distribution of correlated extremes in hydrology. Water Resour Res 55:10405–10423. https://doi.org/10.1029/2019WR025547

Madsen H, Rasmussen P, Rosbjerg D (1997) Comparison of annual maximum series and partial duration series for modeling extreme hydrological events: 1. At-site modeling. Water Resour Res 33:747–757. https://doi.org/10.1029/96WR03848

Matalas NC, Slack JR, Wallis JR (1975) Regional skew in search of a parent. Water Resour Res 11:815–826. https://doi.org/10.1029/WR011i006p00815

McIvor JG, Williams J, Gardener CJ (1995) Pasture management influences runoff and soil movement in the semi-arid tropics. Aust J Exp Agric 35:55–65. https://doi.org/10.1071/EA9950055

Merz R, Blöschl G (2003) A process typology of regional floods. Water Resour Res 39:1–20. https://doi.org/10.1029/2002WR001952

Micevski T, Franks SW, Kuczera G (2006) Multidecadal variability in coastal eastern Australian flood data. J Hydrol 327:219–225. https://doi.org/10.1016/j.jhydrol.2005.11.017

Moran PAP (1959) The theory of storage. Wiley, New York

Papalexiou SM, Koutsoyiannis D (2013) Battle of extreme value distributions: a global survey on extreme daily rainfall. Water Resour Res 49:187–201. https://doi.org/10.1029/2012WR012557

Papalexiou SM, Koutsoyiannis D, Makropoulos C (2013) How extreme is extreme? An assessment of daily rainfall distribution tails. Hydrol Earth Syst Sci 17:851–862. https://doi.org/10.5194/hess-17-851-2013

Pelosi A, Furcolo P, Rossi F, Villani P (2020) The characterization of extraordinary extreme events (EEEs) for the assessment of design rainfall depths with high return periods. Hydrol Process 34:2543–2559. https://doi.org/10.1002/hyp.13747

Potter WD (1958) Upper and lower frequency curves for peak rates of runoff. Eos, Trans Am Geophys Union 39:100–105. https://doi.org/10.1029/TR039i001p00100

Power S, Casey T, Folland C et al (1999) Inter-decadal modulation of the impact of ENSO on Australia. Clim Dyn 15:319–324. https://doi.org/10.1007/s003820050284

Pui A, Lal A, Sharma A (2011) How does the Interdecadal Pacific Oscillation affect design floods in Australia? Water Resour Res 47:5. https://doi.org/10.1029/2010WR009420

Rahman A, Haddad K, Rahman AS (2015) Australian rainfall and runoff project 5: regional flood methods: database used to develop ARR RFFE technique 2015. Commonwealth of Australia (Geoscience Australia)

Rao AR, Hamed KH (2000) Flood frequency analysis. CRC Press, Boca Raton, Fla

Rossi F, Fiorentino M, Versace P (1984) Two-Component Extreme Value Distribution for Flood Frequency Analysis. Water Resour Res 20:847–856. https://doi.org/10.1029/WR020i007p00847

Roth CH (2004) A framework relating soil surface condition to infiltration and sediment and nutrient mobilization in grazed rangelands of northeastern Queensland, Australia. Earth Surf Process Landforms 29:1093–1104. https://doi.org/10.1002/esp.1104

Rulfová Z, Buishand A, Roth M, Kyselý J (2016) A two-component generalized extreme value distribution for precipitation frequency analysis. J Hydrol 534:659–668. https://doi.org/10.1016/j.jhydrol.2016.01.032

Salas JD, Obeysekera J (2014) Revisiting the concepts of return period and risk for nonstationary hydrologic extreme events. J Hydrol Eng. https://doi.org/10.1061/(ASCE)HE.1943-5584.0000820

Salinas JL, Castellarin A, Viglione A et al (2014) Regional parent flood frequency distributions in Europe - Part 1: is the GEV model suitable as a pan-European parent? Hydrol Earth Syst Sci 18:4381–4389. https://doi.org/10.5194/hess-18-4381-2014

Samuel JM, Sivapalan M (2008) Effects of multiscale rainfall variability on flood frequency: comparative multisite analysis of dominant runoff processes. Water Resour Res 44:1–15. https://doi.org/10.1029/2008WR006928

Serinaldi F, Kilsby CG (2014) Rainfall extremes: Toward reconciliation after the battle of distributions. Water Resour Res 50:336–352. https://doi.org/10.1002/2013WR014211

Shin JY, Lee T, Ouarda TBMJ (2015) Heterogeneous mixture distributions for modeling multisource extreme rainfalls. J Hydrometeorol 16:2639–2657. https://doi.org/10.1175/JHM-D-14-0130.1

Sikorska AE, Viviroli D, Seibert J (2015) Flood-type classification in mountainous catchments using crisp and fuzzy decision trees. Water Resour Res 51:7959–7976. https://doi.org/10.1002/2015WR017326

Silburn DM, Carroll C, Ciesiolka CAA et al (2011) Hillslope runoff and erosion on duplex soils in grazing lands in semi-arid central Queensland. I. Influences of cover, slope, and soil. Soil Res 49:105–117. https://doi.org/10.1071/SR09068

Singh KP, Sinclair RA (1972) Two-distribution method for flood-frequency analysis. ASCE J Hydraul Div 98:28–44

Singh VP, Strupczewski WG (2002) On the status of flood frequency analysis. Hydrol Process 16:3737–3740. https://doi.org/10.1002/hyp.5083

Sivapalan M, Wood EF, Beven KJ (1990) On hydrologic similarity: 3. A dimensionless flood frequency model using a generalized geomorphologic unit hydrograph and partial area runoff generation. Water Resour Res 26:43–58. https://doi.org/10.1029/WR026i001p00043

Smith JA, Villarini G, Baeck ML (2011) Mixture distributions and the hydroclimatology of extreme rainfall and flooding in the Eastern United States. J Hydrometeorol 12:294–309. https://doi.org/10.1175/2010JHM1242.1

Smith JA, Cox AA, Baeck ML et al (2018) Strange floods: the upper tail of flood peaks in the United States. Water Resour Res 54:6510–6542. https://doi.org/10.1029/2018WR022539

Snyder DL, Miller MI (2012) Random point processes in time and space, 2nd edn. Springer, Berlin

Stedinger JR, Vogel RM, Foufoula-Georgiou E (1993) Frequency analysis of extreme events. In: Maidment DR (ed) Handbook of applied hydrology, chapter 18. McGraw-Hill, New York, pp 18-1–18-66

Strupczewski WG, Kaczmarek Z (2001) Non-stationary approach to at-site flood frequency modelling II. Weighted least squares estimation. J Hydrol 248:143–151. https://doi.org/10.1016/S0022-1694(01)00398-5

Strupczewski WG, Singh VP, Feluch W (2001a) Non-stationary approach to at-site flood frequency modelling I. Maximum likelihood estimation. J Hydrol 248:123–142. https://doi.org/10.1016/S0022-1694(01)00397-3

Strupczewski WG, Singh VP, Mitosek HT (2001b) Non-stationary approach to at-site flood frequency modelling. III. Flood analysis of Polish rivers. J Hydrol 248:152–167. https://doi.org/10.1016/S0022-1694(01)00399-7

Strupczewski WG, Kochanek K, Feluch W et al (2009) On seasonal approach to nonstationary flood frequency analysis. Phys Chem Earth 34:612–618. https://doi.org/10.1016/j.pce.2008.10.067

Strupczewski WG, Kochanek K, Markiewicz I et al (2011) On the tails of distributions of annual peak flow. Hydrol Res 42:171–192. https://doi.org/10.2166/nh.2011.062

Strupczewski WG, Kochanek K, Bogdanowicz E, Markiewicz I (2012) On seasonal approach to flood frequency modelling. Part I: Two-component distribution revisited. Hydrol Process 26:705–716. https://doi.org/10.1002/hyp.8179

Szolgay J, Gaál L, Bacigál T et al (2016) A regional comparative analysis of empirical and theoretical flood peak-volume relationships. J Hydrol Hydromechanics 64:367–381. https://doi.org/10.1515/johh-2016-0042

Tabari H (2020) Climate change impact on flood and extreme precipitation increases with water availability. Sci Rep 10:1–10. https://doi.org/10.1038/s41598-020-70816-2

Tarasova L, Basso S, Merz R (2020) Transformation of generation processes from small runoff events to large floods. Geophys Res Lett 47:e2020GL090547. https://doi.org/10.1029/2020GL090547

Todorovic P (1970) On some problems involving random number of random variables. Ann Math Stat 41:1059–1063. https://doi.org/10.1214/aoms/1177696981

Todorovic P (1978) Stochastic models of floods. Water Resour Res 14:345–356. https://doi.org/10.1029/WR014i002p00345

Todorovic P, Yevjevich V (1969) Stochastic process of precipitation. Hydrol Pap 35(35):68

Todorovic P, Zelenhasic E (1968) The extreme values of precipitation phenomena. Hydrol Sci J 13:7–24. https://doi.org/10.1080/02626666809493622

Todorovic P, Zelenhasic E (1970) A Stochastic model for flood analysis. Water Resour Res 6:1641–1648

Trancoso R, Larsen JR, McAlpine C et al (2016) Linking the Budyko framework and the Dunne diagram. J Hydrol 535:581–597. https://doi.org/10.1016/j.jhydrol.2016.02.017

Vecchi GA, Wittenberg AT (2010) El Niño and our future climate: where do we stand? Wiley Interdiscip Rev Clim Chang 1:260–270. https://doi.org/10.1002/wcc.33

Verdon DC, Wyatt AM, Kiem AS, Franks SW (2004) Multidecadal variability of rainfall and streamflow: Eastern Australia. Water Resour Res 40:1–8. https://doi.org/10.1029/2004WR003234

Villarini G (2016) On the seasonality of flooding across the continental United States. Adv Water Resour 87:80–91. https://doi.org/10.1016/j.advwatres.2015.11.009

Villarini G, Smith JA (2010) Flood peak distributions for the eastern United States. Water Resour Res 46:1–17. https://doi.org/10.1029/2009WR008395

Villarini G, Smith JA, Baeck ML, Krajewski WF (2011) Examining flood frequency distributions in the midwest U.S. J Am Water Resour Assoc 47:447–463. https://doi.org/10.1111/j.1752-1688.2011.00540.x

Vogel RM, Fennessey NM (1993) L moment diagrams should replace product moment diagrams. Water Resour Res 29:1745–1752

Vormoor K, Lawrence D, Heistermann M, Bronstert A (2015) Climate change impacts on the seasonality and generation processes of floods – projections and uncertainties for catchments with mixed snowmelt/rainfall regimes. Hydrol Earth Syst Sci 19:913–931. https://doi.org/10.5194/hess-19-913-2015

Vormoor K, Lawrence D, Schlichting L et al (2016) Evidence for changes in the magnitude and frequency of observed rainfall vs. snowmelt driven floods in Norway. J Hydrol 538:33–48. https://doi.org/10.1016/j.jhydrol.2016.03.066

Wang QJ (1996) Direct sample estimators of L moments. Water Resour Res 32:3617–3619. https://doi.org/10.1029/96WR02675

Waylen P, Woo M-k (1982) Prediction of annual floods generated by mixed processes. Water Resour Res 18:1283–1286. https://doi.org/10.1029/WR018i004p01283

Waylen PR, Caviedes CN, Juricic C (1993) El Niño-Southern Oscillation and the surface hydrology of Chile: a window on the future? Can Water Resour J 18:425–441. https://doi.org/10.4296/cwrj1804425

Woltemade J, Potter W (1994) A watershed modeling analysis of fluvial geomorphologic influences on flood peak attenuation. Water Resour Res 30:1933–1942. https://doi.org/10.1029/94WR00323

Woo MK, Waylen PR (1984) Areal prediction of annual floods generated by two distinct processes. Hydrol Sci J 29:75–88. https://doi.org/10.1080/02626668409490923

Yan L, Xiong L, Liu D et al (2017) Frequency analysis of nonstationary annual maximum flood series using the time-varying two-component mixture distributions. Hydrol Process 31:69–89. https://doi.org/10.1002/hyp.10965

Yan L, Xiong L, Ruan G et al (2019) Reducing uncertainty of design floods of two-component mixture distributions by utilizing flood timescale to classify flood types in seasonally snow covered region. J Hydrol 574:588–608. https://doi.org/10.1016/j.jhydrol.2019.04.056

Zelenhasic E (1970) Theoretical Probability Distributions for Flood Peaks. Colo State Univ (Fort Collins), Hydrol Pap 42

Zeng H, Feng P, Li X (2014) Reservoir flood routing considering the non-stationarity of flood series in North China. Water Resour Manag 28:4273–4287. https://doi.org/10.1007/s11269-014-0744-6

Acknowledgements

Dedicated to the memory of Prof. Fabio Rossi (1944–2021).

Funding

Open access funding provided by Politecnico di Bari within the CRUI-CARE Agreement. This work is partially funded by the project entitled “Ricognizione delle disponibilità della risorsa idrica in Puglia mediante utilizzo di strumenti probabilistici nel contesto dei cambiamenti climatici” (“Assessment of water resource availability in Puglia using probabilistic tools in the context of climate change”; Puglia regional programme “Research for Innovation”) in the framework of the Puglia Regional Operational Programme FESR FSE 2014–2020.

Author information

Contributions

All authors contributed to the study conception and design. Material preparation, data collection and analysis were performed by Vincenzo Totaro and Andrea Gioia. The first draft of the manuscript was written by Vincenzo Totaro, Vito Iacobellis and George Kuczera and all authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Conflict of interest

The authors declare no conflicts of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

In what follows, we describe the numerical experiments carried out to assess whether the TCEV distribution is suitable for fitting time series generated by the processes described in Sect. 3. For each of the two cases in Sect. 3, we show results obtained from Monte Carlo simulations with 50,000 samples, using four sites from Table 2 as the basis for characterizing the parent distribution: Fisher Creek at Nerada, Don River at Ida Creek, Waterpark Creek at Byfield and Corang River at Hockeys. These four sites were selected to span the extreme values of \(\theta_*\) and \(\lambda_*\) in Fig. 3. The value of \(p_1\), as defined in Eq. (12), was evaluated using data from Kiem et al. (2003, Table 1), who, after stratifying the series, counted the number of El Niño, La Niña and Neutral events occurring within each of the IPO phases characterized by \(IPO<-0.5\) and \(IPO>-0.5\). Assuming that the probability of falling into the wet epoch 1 equals the proportion of years characterized by \(IPO<-0.5\), \(p_1\) was set equal to the ratio between the number of events with \(IPO<-0.5\) and the total number of events, i.e., \(p_1 = 21/76 \approx 0.276\).

1.1 Case 1: One component per epoch

The number of events in each year was sampled using Eq. (12), and the exceedance of each event was sampled using Eq. (13). Parameters were obtained from the site estimates of \(\lambda_1\), \(\lambda_2\), \(\theta_1\) and \(\theta_2\) and from the value assigned to \(p_1\). The results (Fig. 5 and Table 3) show that the fitted TCEV is indistinguishable from the parent distribution and that the L-moment parameter estimates are close to the parent values.
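A minimal Monte Carlo sketch of this kind of experiment is given below. It does not reproduce Eqs. (12) and (13), which are not shown in this appendix; instead it assumes a simple reading of Case 1 in which each year independently belongs to the wet epoch 1 with probability \(p_1\) (otherwise to the dry epoch 2), events in epoch \(j\) occur as a Poisson process with a hypothetical mean annual number \(\nu_j\), and their exceedances are exponential with a hypothetical mean \(\beta_j\). Conditioning on the epoch, the maximum of a Poisson number of exponential exceedances has CDF \(\exp(-\nu_j e^{-x/\beta_j})\), so under these assumptions the parent CDF of the annual maximum is \(F(x) = p_1 e^{-\nu_1 e^{-x/\beta_1}} + (1-p_1) e^{-\nu_2 e^{-x/\beta_2}}\) for \(x \ge 0\). The symbols \(\nu_j\), \(\beta_j\), the function names and the numerical values are illustrative only and are not the Table 2 site estimates.

```python
import numpy as np

# Hypothetical illustrative values (NOT the Table 2 site estimates).
P1 = 21 / 76                   # probability that a year belongs to the wet epoch 1
NU = np.array([8.0, 3.0])      # assumed mean number of events per year in epochs 1 and 2
BETA = np.array([40.0, 25.0])  # assumed mean exceedance in epochs 1 and 2


def simulate_annual_maxima(p1, nu, beta, n_years, rng):
    """Simulate annual maximum exceedances under the assumed two-epoch model."""
    # Epoch of each year: index 0 (wet) with probability p1, otherwise index 1 (dry).
    epoch = np.where(rng.random(n_years) < p1, 0, 1)
    # Poisson number of events in each year, using the rate of that year's epoch.
    n_events = rng.poisson(nu[epoch])
    maxima = np.zeros(n_years)  # a year without events keeps a maximum exceedance of 0
    for k in np.flatnonzero(n_events):
        exceedances = rng.exponential(beta[epoch[k]], size=n_events[k])
        maxima[k] = exceedances.max()
    return maxima


def parent_cdf(x, p1, nu, beta):
    """Exact CDF of the annual maximum under the same assumptions (x >= 0)."""
    x = np.asarray(x, dtype=float)
    wet = np.exp(-nu[0] * np.exp(-x / beta[0]))
    dry = np.exp(-nu[1] * np.exp(-x / beta[1]))
    return p1 * wet + (1.0 - p1) * dry


rng = np.random.default_rng(12345)
maxima = simulate_annual_maxima(P1, NU, BETA, 50_000, rng)

# Compare the empirical CDF with the parent CDF on a grid of exceedance values.
grid = np.linspace(0.0, 300.0, 61)
empirical = np.array([np.mean(maxima <= x) for x in grid])
max_gap = np.max(np.abs(empirical - parent_cdf(grid, P1, NU, BETA)))
print(f"largest |empirical - parent| on the grid: {max_gap:.4f}")
```

Fitting a TCEV to `maxima` and comparing its CDF with `parent_cdf` would mirror the comparison reported in Fig. 5; that fitting step is deliberately left out of this sketch.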

1.2 Case 2: Two components per epoch

For given values of \(\lambda_1\), \(\lambda_2\), \(\theta_1\), \(\theta_2\) and \(p_1\), the eight parameters of the underlying thinned Poisson and exceedance distributions are assigned as follows. The four exceedance parameters are set as \(\theta_{1,1} = \theta_{1,2} = \theta_1\) and \(\theta_{2,1} = \theta_{2,2} = \theta_2\). The four Poisson parameters must satisfy Eqs. (23) and (24); however, two additional equations are needed for closure:

This means that the following system has to be solved:

The analytical solution of this system leads to the following relationships:

Ensuring that both parameters are positive requires three conditions to hold:

Across the sites in Table 2, the ratio \(\lambda_1/\lambda_2\) ranges from a minimum of 1.21 to a maximum of 9.45. Combining Inequalities (B2) with the constraints reported in the Discussion section, i.e., that \(\lambda'_{1,1}\) is considerably greater than \(\lambda'_{2,1}\) and \(\lambda'_{1,2}\) is slightly lower than \(\lambda'_{2,2}\), led us to set \(\alpha_1 = 10\) and \(\alpha_2 = 0.9\) to characterize the parent distribution.
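Equations (23) and (24), the two closure equations and Inequalities (B2) are not reproduced in this appendix. Purely as an illustration, the sketch below assumes that (i) Eqs. (23) and (24) express each TCEV rate as the \(p_1\)-weighted average of its epoch-specific thinned rates, \(\lambda_i = p_1\lambda'_{i,1} + (1-p_1)\lambda'_{i,2}\) for \(i = 1, 2\), and (ii) the closure equations take the form \(\lambda'_{1,1} = \alpha_1\lambda'_{2,1}\) and \(\lambda'_{1,2} = \alpha_2\lambda'_{2,2}\). Under these assumptions the system has a closed-form solution, and positivity holds when \(\alpha_1 > \alpha_2\) and \(\alpha_2 < \lambda_1/\lambda_2 < \alpha_1\), which \(\alpha_1 = 10\) and \(\alpha_2 = 0.9\) satisfy over the whole range 1.21 to 9.45. The function name and the input rates are hypothetical.

```python
def solve_case2_rates(lam1, lam2, p1, alpha1, alpha2):
    """Return (l11, l12, l21, l22), the thinned Poisson rates lambda'_{i,j}.

    Index i is the component and j the epoch. ASSUMED (hypothetical) system:
      lam_i = p1 * l_{i,1} + (1 - p1) * l_{i,2}   for i = 1, 2
      l11 = alpha1 * l21,  l12 = alpha2 * l22      (closure)
    """
    if not 0.0 < p1 < 1.0:
        raise ValueError("p1 must lie strictly between 0 and 1")
    ratio = lam1 / lam2
    # The three positivity conditions of the assumed closed-form solution.
    if not (alpha1 > alpha2 and alpha2 < ratio < alpha1):
        raise ValueError("positivity requires alpha2 < lam1/lam2 < alpha1")
    a = (lam1 - alpha2 * lam2) / (alpha1 - alpha2)  # equals p1 * l21
    b = (alpha1 * lam2 - lam1) / (alpha1 - alpha2)  # equals (1 - p1) * l22
    l21 = a / p1
    l22 = b / (1.0 - p1)
    return alpha1 * l21, alpha2 * l22, l21, l22


# Hypothetical TCEV rates with lam1/lam2 = 3, inside the 1.21 to 9.45 range above.
p1 = 21 / 76
l11, l12, l21, l22 = solve_case2_rates(6.0, 2.0, p1, alpha1=10.0, alpha2=0.9)
print(l11, l12, l21, l22)
```

Substituting the returned rates back into the assumed equations recovers \(\lambda_1\) and \(\lambda_2\), which is a quick way to check any alternative closure one might wish to test.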

As for Case 1, the results (Fig. 6 and Table 4) show that the fitted TCEV is indistinguishable from the parent distribution and that the L-moment parameter estimates are close to the parent values.
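The L-moment parameter estimates in Tables 3 and 4 start from sample L-moments of the simulated annual maxima. As a sketch of that first step only (the estimation of TCEV parameters from these ratios is not shown), the function below computes the sample mean, L-scale, L-skewness and L-kurtosis from the standard unbiased probability-weighted-moment estimators; the function name is hypothetical.

```python
import numpy as np


def sample_l_moments(x):
    """Return (l1, l2, t3, t4): mean, L-scale, L-skewness and L-kurtosis."""
    x = np.sort(np.asarray(x, dtype=float))  # ascending order statistics
    n = x.size
    if n < 4:
        raise ValueError("at least four observations are needed")
    j = np.arange(1, n + 1, dtype=float)  # rank of each order statistic
    # Unbiased probability-weighted moments b_r (terms with j <= r vanish).
    b0 = x.mean()
    b1 = np.sum((j - 1) / (n - 1) * x) / n
    b2 = np.sum((j - 1) * (j - 2) / ((n - 1) * (n - 2)) * x) / n
    b3 = np.sum((j - 1) * (j - 2) * (j - 3) / ((n - 1) * (n - 2) * (n - 3)) * x) / n
    # Linear combinations giving the first four sample L-moments.
    l1 = b0
    l2 = 2 * b1 - b0
    l3 = 6 * b2 - 6 * b1 + b0
    l4 = 20 * b3 - 30 * b2 + 12 * b1 - b0
    return l1, l2, l3 / l2, l4 / l2


# Sanity check: for an exponential parent, tau3 = 1/3 and tau4 = 1/6.
rng = np.random.default_rng(7)
l1, l2, t3, t4 = sample_l_moments(rng.exponential(10.0, size=100_000))
print(f"l1={l1:.2f} l2={l2:.2f} t3={t3:.3f} t4={t4:.3f}")
```

Plotting the resulting (t3, t4) pairs on an L-moment ratio diagram is the usual way to compare them with the theoretical domain of a distribution such as the TCEV.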

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Totaro, V., Gioia, A., Kuczera, G. et al. Modelling multidecadal variability in flood frequency using the Two-Component Extreme Value distribution. Stoch Environ Res Risk Assess (2024). https://doi.org/10.1007/s00477-024-02673-8

DOI: https://doi.org/10.1007/s00477-024-02673-8