Abstract

Population-based metaheuristic optimization algorithms play a vital role in addressing optimization problems. The exponential distribution optimizer (EDO) is one of the most recent of these algorithms. Although it has achieved many promising results, it has several shortcomings: slow convergence, a tendency to return locally optimal solutions because it cannot escape local regions, and an imbalance between diversification and intensification. Therefore, in this study, an enhanced variant of EDO called mEDO is proposed to address these shortcomings by combining two efficient search mechanisms, orthogonal learning (OL) and the local escaping operator (LEO). In mEDO, the LEO is exploited to escape local optima and improve the convergence behavior of the EDO by employing random operators to broaden the search and discover the globally optimal solution effectively. The OL is then incorporated to keep the two phases (i.e., exploration and exploitation) balanced. To validate the effectiveness and performance of the mEDO algorithm, the proposed method has been evaluated on ten functions of the IEEE CEC’2020 test suite as well as eight real-world applications (engineering design optimization problems). Furthermore, we test the applicability of the proposed algorithm by tackling 21 instances of the quadratic assignment problem (QAP). The experimental and statistical results of the proposed algorithm have been compared against seven other common metaheuristic algorithms (MAs), including the basic EDO. The results show the superiority of the mEDO algorithm over the other algorithms and reveal its applicability and effectiveness compared to well-established metaheuristic algorithms.
The experimental results and the statistical measures indicate the reliability and applicability of the mEDO method in solving global, engineering design, and combinatorial optimization problems: it achieves reasonable solutions, reaching global optima and avoiding premature convergence by increasing the population’s diversity.

1 Introduction

In general, optimization is a systematic activity aimed at making the best use of available resources. In other words, it involves tuning a set of parameters, subject to a list of constraints and limitations, with the aim of maximizing or minimizing a specified objective function; the objective function is evaluated to identify the best among a set of candidate solutions. Optimization algorithms have been applied in almost all domains, such as the financial domain [1, 2], location-allocation problems [3, 4], engineering design [5, 6], database design [7, 8], and chemical engineering design [9, 10]. Recently, optimization algorithms have shown an outstanding ability to tackle different kinds of problems, such as detecting COVID-19 [11, 12], detecting breast cancer [13], edge computing [14], minimizing energy consumption [15], feature selection [16], and selecting characteristics for the classification of medical data [17]. The optimization field has also achieved promising results on problems that suffer from difficulties such as high dimensionality and non-differentiability [18]. Generally, optimization algorithms can be classified into two main classes, stochastic and deterministic. In deterministic optimization algorithms, the particular features of a specific problem are exploited to obtain the optimal solution and to provide a mathematical proof of its optimality [19]. Although these algorithms provide good solutions for problems with clear features, they suffer from many drawbacks, such as reliance on gradient information of the search space, trapping in local optima, high computational cost, and dramatic performance degradation as the dimensionality of the problem increases [20, 21].
On the other hand, stochastic optimization algorithms offer no proof of optimality, because a set of random factors is involved, in some constructive way, in the search for the optimal solution. Additionally, finding the global optimum with 100% certainty using stochastic algorithms is practically impossible, since it would require infinite execution time [22]. In real life, however, many situations do not require the best global solution; it is sufficient to obtain results that are good enough in a reasonable computational time. Therefore, stochastic algorithms can be a good choice, as they can speed up finding a good solution even for complex problems or large search spaces [23]. Recently, researchers have made efforts to counter the drawbacks of stochastic optimization algorithms [24].

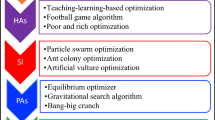

The stochastic category of optimization algorithms can be divided into heuristic algorithms (HAs) and metaheuristic algorithms (MAs). Generally, the main characteristics of HAs can be summarized in three points: (1) Problem-based design (i.e., a one-to-one relationship with the problem; each HA is designed for a specific problem). Although HAs do not provide a mathematical proof of optimality as deterministic algorithms do, they are problem-dependent algorithms used to find promising solutions that match the particularities of the problem [19]. (2) Non-measurable success (that is, HAs do not give a clear indication of how close the obtained solution is to the optimum). (3) Non-measurable execution time (that is, the time needed to obtain the optimal solution is difficult to measure). In sum, the specific problem, the precision, and the computational time are the three main factors that should be considered when constructing HAs. Maintaining a trade-off between the last two factors is the main reason metaheuristics emerged [25].

Although the difference between MAs and HAs is not wide, researchers prefer MAs for the following six reasons [26]: (1) Sophistication: MAs are more sophisticated than HAs [19]. They provide good-quality solutions in a reasonable amount of time, specifically for large search spaces or complex problems. (2) Abstraction: MAs provide a high level of abstraction, as they treat the problem being solved as a black box. Therefore, they can be considered problem-independent algorithms [27], which suits most real-life optimization problems [28]. (3) Simplicity: MAs can be inspired by very simple concepts such as physical phenomena, animal behaviors, or evolutionary concepts. This simplicity has allowed researchers to introduce new metaheuristics, improve existing ones, or hybridize two or more metaheuristic optimization algorithms. (4) Flexibility: newly proposed metaheuristics can be applied to different classes of problems without hard constraints or a deep understanding of the problem. They consider only the main components of the problem (i.e., its inputs and outputs), which allows them to be adopted rapidly in many fields. (5) Stochastic nature: the majority of MAs optimize stochastically; the optimization process starts from a random generation (a set of random solutions) and does not compute derivatives of the search space to obtain the optimal solution. Thus, metaheuristics have great merit when dealing with expensive or complex problems, or problems whose derivative information is unknown. (6) Local optima avoidance: compared with other classes of optimization algorithms, MAs have a superior capability to handle local optima and avoid becoming trapped in local solutions while searching the entire space.
Owing to these six motivations, MAs have grown rapidly compared to other classes of algorithms over the last two decades. They have become popular among scientists in different fields, e.g., medicine [29], drug design [30, 31], feature selection [32, 33], image segmentation [34], and combinatorial problems [35].

MAs can be categorized, in turn, into single-solution-based MAs (SMAs) and population-based MAs (PMAs). SMAs, e.g., simulated annealing (SA) [36], perform a local search: they start with one candidate solution and try to improve it based on its neighborhood over a set of iterations, with the aim of obtaining the optimal solution. In contrast, PMAs, for example, the genetic algorithm (GA) [37], employ a set of solutions, called a population, located at different positions in the search space. This population evolves continually over the course of iterations to obtain the optimal solution. Because PMAs can investigate several parts of the search space at once, whereas SMAs cannot, PMAs perform better than SMAs overall. Without an effective exploration mechanism, SMAs risk becoming stuck in local optima. Therefore, PMAs have drawn considerable interest in recent studies compared to SMAs. In PMAs, a population of individuals is expected to move toward better and better solutions as the iterations proceed. Most PMAs mimic natural phenomena [38, 39]. Regardless of the phenomenon, the optimization process begins by building a generation (a set of randomly generated individuals), where each member represents a potential solution to the problem being optimized. The population then evolves iteratively through stochastic operators, with each newly created, better population replacing the current one. The process is repeated until the maximum number of iterations is reached or a satisfaction condition is met [40, 41].

There are different taxonomies of PMAs in the literature; in this paper, PMAs are classified into five groups: swarm intelligence (SI) algorithms, evolutionary algorithms (EAs), human-based algorithms, physics-based algorithms (PAs), and other natural sciences-based algorithms (NSs), which include math-based, chemistry-based, and other natural-phenomena-based algorithms. Swarm intelligence includes algorithms that imitate the behavior of beings living in groups (e.g., swarms, herds, or flocks) [42]. One of the most well-known SI algorithms, which mimics a flock of birds, is particle swarm optimization (PSO) [43]. In PSO, every individual within the flock represents a candidate solution to the problem. Each individual is updated during the optimization process by considering two best positions (i.e., the local best position and the global best position). Ant colony optimization (ACO) [44], the quantum-based avian navigation optimizer algorithm (QANA) [45], Cuckoo Search (CS) [46], Improved Symbiotic Organisms Search (I-SOS) [47], the hybrid symbiosis organism search algorithm (HSOS) [48], a Modified Butterfly Optimization Algorithm (mLBOA) [49], Artificial Bee Colony (ABC) [50], the Quasi-reflected slime mold (QRSMA) method [51], and the honey badger algorithm (HBA) [52] are well-known instances of SI algorithms. Evolutionary algorithms mimic behaviors occurring in biological evolution (i.e., recombination, mutation, crossover, and selection). One of the oldest and most widely used algorithms in this group is the genetic algorithm (GA) [53]; differential evolution (DE) [54], the starling murmuration optimizer (SMO) [55], genetic programming (GP) [56], the improved backtracking search algorithm (ImBSA) [57], and the bio-geography-based optimizer (BBO) [58] are other well-known instances of EAs. Human-based algorithms are inspired by aspects of human behavior; examples are tabu search (TS) [59] and teaching learning-based optimization (TLBO) [60].
Physics-based algorithms are inspired by physical laws. The most popular instances of this class are the gravitational search algorithm (GSA) [61] and the big-bang big-crunch (BBBC) algorithm [62]. Finally, the natural sciences-based algorithms include the exponential distribution optimizer (EDO) [63], chemical reaction optimisation (CRO) [64], and the political optimizer (PO) [65].

In the same context, math-based optimization algorithms represent one of the well-known subcategories of PMAs. Algorithms in this group include Gaussian swarm optimization (GSO) [66], the sine cosine algorithm (SCA) [67], Lévy flight distribution (LFD) [68], and the Archimedes optimization algorithm (AOA) [69]. Specifically, in this paper, a new variant of the exponential distribution optimizer (EDO) is developed. EDO, one of the newest algorithms in the math-based group, draws its inspiration from the mathematical exponential probability distribution model [63]. Although the EDO is a promising algorithm, it still suffers from some shortcomings, including premature convergence and local optima (LO) trapping.

1.1 Motivations

No matter how diverse these groups of algorithms are, the search for an optimal solution always proceeds in two stages: exploration (diversification) and exploitation (intensification) [70]. In the exploration stage, an algorithm uses its randomized operators to explore as many regions of the search space as possible. In the exploitation stage, the algorithm concentrates on the regions adjacent to the high-quality solutions found in the search space. An efficient optimizer must achieve a reasonable balance between exploitation and exploration [71]. It is worth mentioning here the no free lunch (NFL) theorem for optimization [72], which states that a universal metaheuristic solver is impossible; that is, a metaheuristic algorithm that is the best solver for one type of problem does not necessarily work better on other types. This theorem motivates researchers to develop novel algorithms and new variants, which keeps optimization a constantly active research topic. It is therefore important to present new algorithms for optimization problems that are complex in nature, or to improve the results of existing optimization algorithms. In particular, in this paper, a new variant of the exponential distribution optimizer (EDO) is developed. EDO, one of the newest algorithms in the math-based category, draws its inspiration from the mathematical exponential probability distribution model [63]. So far, only a small number of publications have built on the EDO algorithm to improve its overall performance and apply it to different classes of problems; examples can be found in [73,74,75].

1.2 Contributions

Although EDO is a promising algorithm, it still has some shortcomings. This study proposes a modified version of the EDO algorithm, called mEDO, that addresses the shortcomings of the original algorithm in tackling global and engineering optimization challenges. The contributions of this study can be summarized as follows:

- Introducing the main concepts in the optimization field.
- Explaining two of the most promising enhancement strategies (i.e., the local escaping operator (LEO) and orthogonal learning (OL)) that can be used to improve the overall performance of optimization algorithms.
- Employing two powerful search strategies, the OL and the LEO, to treat the shortcomings of the original EDO algorithm and proposing an enhanced version of the EDO algorithm named mEDO, in which “m” stands for modification.
- Providing a comprehensive experimental comparative study to assess the superior performance of the proposed algorithm.
- Validating the performance of mEDO on 10 CEC’2020 benchmark test functions on all dimensions.
- Comparing the proposed mEDO algorithm against the winners and competitive algorithms.
- Comparing the proposed mEDO algorithm against a set of the most recent and high-performance algorithms.
- Testing the applicability of the proposed algorithm by achieving promising results in solving eight engineering design problems.
- Establishing the applicability of the proposed algorithm by solving 21 different instances of the QAP combinatorial problem.
- Using various statistical, quantitative, and qualitative metrics to evaluate the performance of the proposed mEDO algorithm.

The remainder of this paper is organized as follows: the original EDO, LEO, and OL are discussed in Sect. 2. The proposed mEDO algorithm is introduced in Sect. 3. In Sect. 4, the performance of the proposed mEDO algorithm is evaluated on the CEC’2020 test suite. The results of the proposed mEDO algorithm and its competitors on engineering design and combinatorial optimization problems are reported in Sects. 5 and 6. Finally, Sect. 7 presents the conclusions and future directions.

2 Preliminary

In this section, the exponential distribution optimizer (EDO) and the two enhancement strategies, the local escaping operator (LEO) and orthogonal learning (OL), are described in more detail.

2.1 Exponential distribution optimizer (EDO)

The exponential probability distribution (EPD) served as the inspiration for the novel metaheuristic called EDO, in which each individual uses an EPD curve to update its position during the exploration and exploitation phases. In the EDO, a set of random EPDs is generated as an initial population. Then, each solution is handled as a chain of exponential random variables. These solutions are updated during the exploitation phase using three crucial EPD concepts: the memoryless property, the exponential variance, and the guiding solution. To mimic the memoryless property, newly generated solutions (both successful and unsuccessful) are preserved in a memoryless matrix irrespective of their costs compared to their corresponding solutions in the original population, which is assumed to include only the victors (i.e., the solutions with better fitness values). Preserving unsuccessful solutions helps to improve subsequent solutions, whereas the earlier history of solutions is not retained. During the exploration phase, new solutions are updated based on two solutions selected from the original population according to the exponential distribution, as well as the mean solution. The three main optimization phases of the EDO (i.e., initialization, exploitation, and exploration) are presented in more detail in this section. In the initialization phase, the population (Xvictors) is randomly initialized with a set of solutions (\(Xvictors_{i,j}\)) generated according to Eq. (1).

where ub represents the upper bound, lb represents the lower bound, and rand is a random number \(\in [0,1]\).
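The initialization step described above can be sketched as follows. This is a minimal sketch that assumes Eq. (1) takes the usual uniform form \(Xvictors_{i,j} = lb + rand \times (ub - lb)\), which matches the symbols defined above; the function name `init_population` and the `seed` argument are illustrative and are not taken from the EDO paper.

```python
import random


def init_population(n, lb, ub, seed=None):
    """Return n random solutions, each drawn uniformly inside [lb, ub].

    Assumed form of Eq. (1): x[i][j] = lb[j] + rand * (ub[j] - lb[j]),
    with rand drawn uniformly from [0, 1).
    """
    rng = random.Random(seed)
    return [
        [lo + rng.random() * (hi - lo) for lo, hi in zip(lb, ub)]
        for _ in range(n)
    ]


# Example: 5 solutions in a 3-dimensional box [-100, 100]^3.
lower = [-100.0, -100.0, -100.0]
upper = [100.0, 100.0, 100.0]
xvictors = init_population(5, lower, upper, seed=0)
```

Passing per-dimension bounds as lists keeps the sketch usable for problems whose variables have different ranges, as is common in engineering design problems.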

In the exploitation phase, an objective function is used to evaluate the solutions generated in the previous phase (i.e., initialization), which are then sorted from best to worst. The following principles are essential to the EDO’s exploitation phase [63]:

1. Looking for the optimal global solution around the guiding solution (Xmentor), which is the average of the top three solutions [see Eq. (2)].

$$\begin{aligned} Xmentor^{\text{time}}=\left( Xvictors_{best1}^{\text{time}} + Xvictors_{best2}^{\text{time}} + Xvictors_{best3}^{\text{time}} \right) /3 \end{aligned}$$ (2)

where \(Xmentor^{\text{time}}\) is the guiding solution in iteration (time). The word “time” denotes the interval until the occurrence of the next event, while \(Max\_time\) denotes the total number of iterations (number of intervals).

2. The new solution is updated using a number of parameters of the EPD curve, including the mean \(\mu\), the exponential rate \(\lambda\), and the variance (the squared inverse of \(\lambda\), i.e., \(1/\lambda^2\)). \(\lambda\) is calculated as 1/\(\mu\), while \(\mu\) is calculated using Eq. (6).

3. The memoryless matrix should store the most recently generated solutions regardless of their fitness scores. Therefore, updating the currently generated solution \(V_i^{\text{time+1}}\) using Eq. (3) depends upon the \(i^{th}\) solution of the memoryless matrix (i.e., \(memoryless_i^{\text{time}}\)). Initially, the memoryless matrix and the original Xvictors are the same.

where \(\phi\) is a randomly produced uniform number \(\in [0, 1]\), a and b are adaptive parameters determined using Eqs. (4) and (5) respectively, where rand is a number chosen randomly in the interval \([-1,1]\).
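The guiding solution of Eq. (2) and the relation between the EPD parameters stated in the principles above can be illustrated with a short sketch. It assumes a minimization problem (lower fitness is better) and takes the mean \(\mu\) as a given input rather than computing it; the helper names `guiding_solution` and `exponential_parameters` are hypothetical.

```python
def guiding_solution(population, fitness):
    """Eq. (2): average of the three best solutions (minimization assumed)."""
    ranked = sorted(range(len(population)), key=lambda i: fitness[i])
    top3 = [population[i] for i in ranked[:3]]
    dim = len(top3[0])
    return [sum(sol[j] for sol in top3) / 3.0 for j in range(dim)]


def exponential_parameters(mu):
    """Per-dimension rate and variance of an exponential distribution.

    rate = 1 / mu and variance = 1 / rate**2; every entry of mu is
    assumed to be non-zero in this sketch.
    """
    rate = [1.0 / m for m in mu]
    variance = [1.0 / (r * r) for r in rate]
    return rate, variance


# Toy population scored with the sphere function sum(x_j^2).
population = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [100.0, 100.0]]
fitness = [sum(v * v for v in sol) for sol in population]
xmentor = guiding_solution(population, fitness)
rate, variance = exponential_parameters([2.0, 4.0])
```

In this toy example the first three solutions have the lowest sphere values, so the guiding solution is their component-wise mean.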

In the exploration phase, the most promising regions of the search space, which are believed to contain the global optimal solution, are identified to build the optimization model. In this phase, the currently generated solution is updated using Eq. (7).

where \(M^{time}\) denotes the average of all the solutions found in the initial population, rand is a randomly generated number \(\in [-1,1]\), and \(Xvictors_{rand1}\) and \(Xvictors_{rand2}\) are randomly selected from the initial population (Xvictors) according to the exponential distribution.

2.2 Local escaping operator (LEO)

As mentioned in [76], the LEO enhancement strategy was proposed to explore new promising regions, which is necessary for solving real-world problems. The LEO enhances the quality of the obtained solutions mainly by updating their positions according to certain criteria. This gives the algorithm the ability to avoid local optima and also improves its convergence behavior. Mathematically, an alternative solution \((X_{LEO})\) is produced using Eqs. (11) and (12). To maintain excellent performance, this solution is built using various strategies, including selecting the best position \((X_{best})\), choosing two solutions \((X_{r1}^m)\) and \((X_{r2}^m)\) from the population at random, generating two new solutions \(X1_{n}^m\) and \(X2_{n}^m\) from the current population at random (see Eq. (13)), and creating a new solution at random \((X_{k}^m)\).

where \(X_{n}^m\) indicates the current position, r2 is a randomly generated value in the range [0,1], f1 and f2 denote a pair of uniformly distributed random values in the range [\(-1\),1], lb and ub represent the lower and upper bounds, respectively, d stands for the dimension of a given solution, and the indices n (an integer from 1 to N) and m (an integer from 1 to D) give the coordinates of the given solution. Additionally, \(u_1\), \(u_2\), and \(u_3\) represent random variables generated using Eqs. (14), (15), and (16).

where \(L_{1}\) = 1 if \(\mu _{1}\) \(\le\) 0.5 and \(L_{1}\) = 0 otherwise (\(\mu _{1}\) is a random number \(\in [0,1]\)). Additionally, \(\rho _{1}\) is used to balance the trade-off between exploration and exploitation during the search process; it is computed using Eq. (17), based on the sine function presented in Eqs. (18) and (19).

where the current iteration number is represented by t, while \(t_{max}\) represents the maximum number of iterations (that is, \(Max\_time\)). Finally, the solution \(X_{k}^{m}\) can be determined using Eq. (20).

where the solution \(x_{p}^{m}\) is selected randomly from the population (p \(\in \{1,2, \ldots , N\}\)), and \(\mu _{2}\) is a randomly generated value \(\in [0,1]\).

2.3 Orthogonal learning (OL)

As mentioned previously, many search methods are used to enhance the search process, and one of the best of these mechanisms is orthogonal learning (OL). Specifically, the OL approach helps to sustain the balance between exploration and exploitation. The OL is used to generate effective agents (solutions) that guide the population toward a global optimal solution [77]. These solutions are generated using orthogonal experimental design (OED) methods, which can identify the ideal combination of factor levels in a small number of experiments [78]. The OL consists of two components: the orthogonal array (OA) and factor analysis (FA).

In the first component (the first stage) of the OL, an orthogonal array is constructed: a table of numbers denoted as \(L_M(L^Q)\), where \(M = 2\cdot \log_2(D+1)\), D is the dimension of the search space, L represents the number of levels per factor, and Q represents the number of factors.

For more clarification, the following generated table provides an example of OA for a 3D Sphere function \(O_{array}(2^3)\) [79]. This OA table consists of three columns, indicating that it is generated for problems with up to three factors, each with two levels.
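A two-level orthogonal array with three factors and four combinations can be generated with a few lines of code. The sketch below uses a parity-based construction, which is a common textbook approach and an assumption here, not necessarily the procedure used by the authors; the resulting rows may appear in a different order than in the paper's table. The function names `two_level_oa` and `is_orthogonal` are illustrative.

```python
from itertools import combinations, product


def two_level_oa(u):
    """Build a two-level orthogonal array with 2**u rows and 2**u - 1 columns.

    Entry (r, c) is the parity of the bitwise AND of the row index r and
    the column index c, shifted so that the levels are 1 and 2.
    """
    m = 2 ** u
    return [
        [bin(r & c).count("1") % 2 + 1 for c in range(1, m)]
        for r in range(m)
    ]


def is_orthogonal(oa):
    """Check that every pair of columns shows each level pair equally often."""
    rows = len(oa)
    for a, b in combinations(range(len(oa[0])), 2):
        pairs = [(row[a], row[b]) for row in oa]
        if any(pairs.count(p) != rows // 4 for p in product((1, 2), repeat=2)):
            return False
    return True


oa = two_level_oa(2)  # four combinations, three two-level factors
for row in oa:
    print(row)
```

Only four of the eight possible level combinations are tested, yet every pair of factors still sees each of its four level pairs exactly once, which is the property the OL relies on.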

In the second stage (FA) of the OL, the effect of each level on each factor is rapidly evaluated from the experimental results (fitness) of all M combinations of the OA using Eq. (21):

where m = 1, 2, ..., M, q = 1, 2, ..., Q, and l = 1, 2, ..., L; \(W_{q,l}\) represents the effect of the lth level on the qth factor, and \(f(C_m)\) represents the experimental result (fitness) of the \(m^{th}\) combination in the OA. For the mth combination, \(E_{m,q,l}\) = 1 if the level of the qth factor is l; otherwise, \(E_{m,q,l}\) = 0. Table 1 provides an example of applying Eq. (21) to the previous OA example. After obtaining the effects of all levels on all factors, the ideal combination of levels can be determined easily by comparing these effects.
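The factor analysis can be illustrated on a small example. The sketch below assumes that Eq. (21) has the usual averaging form \(W_{q,l} = \sum_m f(C_m) E_{m,q,l} / \sum_m E_{m,q,l}\), that the problem is a minimization of the 3D Sphere function, and that level 1 and level 2 of each factor take the corresponding coordinate from two hypothetical candidate solutions `a` and `b`. None of the names or numbers below come from the paper.

```python
def sphere(x):
    """Sphere benchmark: sum of squared coordinates."""
    return sum(v * v for v in x)


# A standard two-level, three-factor orthogonal array with four rows.
OA = [[1, 1, 1], [1, 2, 2], [2, 1, 2], [2, 2, 1]]


def factor_effects(oa, fitness, levels=2):
    """W[q][l-1]: mean fitness of the combinations whose factor q is at level l."""
    effects = []
    for q in range(len(oa[0])):
        per_level = []
        for level in range(1, levels + 1):
            hits = [f for row, f in zip(oa, fitness) if row[q] == level]
            per_level.append(sum(hits) / len(hits))
        effects.append(per_level)
    return effects


def best_levels(effects):
    """For minimization, pick the level with the smallest effect per factor."""
    return [min(range(len(w)), key=w.__getitem__) + 1 for w in effects]


a = [1.0, 4.0, 3.0]  # supplies level 1 of every factor (hypothetical)
b = [2.0, 0.0, 5.0]  # supplies level 2 of every factor (hypothetical)

combos = [[a[q] if lvl == 1 else b[q] for q, lvl in enumerate(row)] for row in OA]
fitness = [sphere(c) for c in combos]
effects = factor_effects(OA, fitness)
levels = best_levels(effects)
child = [a[q] if lvl == 1 else b[q] for q, lvl in enumerate(levels)]
```

Here the predicted combination mixes coordinates from both candidates and is better than any of the four combinations actually evaluated, which is the benefit that the factor analysis is meant to provide.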

Generally, the orthogonal experimental design described above can be employed to apply the OL enhancement strategy. The OL method treats the dimensions of the optimization problem as orthogonal experimental factors. To retain the information carried by the two best solutions, the OL strategy uses the best solution found so far and its neighbour as two learning exemplars and combines them orthogonally. This combination allows the algorithm to discover and retain useful information about the solutions obtained and to take direct steps toward the global optimal solution, which improves the algorithm’s efficiency and effectiveness. The orthogonal combination of the two solutions can be expressed mathematically using Eq. (22).

where \(\oplus\) represents the OL operation, which combines the best recorded solution \(X_{{n}_{best}}^{m}\) with its neighbour solution \(X_{n}^{m}\). The resulting \(X_{n}^{m}\) is the new, efficient solution that benefits from the orthogonal combination of the two solutions.

3 The proposed mEDO method

3.1 The shortcomings of the original EDO

Despite the good results achieved by the EDO algorithm, it suffers from some shortcomings that should be addressed. Like numerous optimization algorithms, the EDO is susceptible to becoming trapped in local optima [80]. When an algorithm gets stuck in a local optimum, its ability to find the global optimal solution is diminished. This problem occurs frequently in complex high-dimensional optimization problems [81]. Furthermore, the EDO generates new solutions based on the solutions generated in the previous iteration. This may have a negative effect on the convergence rate of the algorithm and prevents the solutions from covering the search space entirely, leading to premature convergence. In addition to these shortcomings, the NFL theorem discussed in Sect. 1 represents one of the primary motivations for any optimization study.

Enhancing the search ability and addressing the limitations of the EDO algorithm, so as to improve its ability to solve complex optimization problems, are the most important motives for the proposed algorithm. To this end, two efficient strategies (i.e., LEO and OL) are integrated into the original EDO optimizer. While the original EDO steps are executed as usual, the mEDO algorithm employs the LEO and OL strategies to improve the exploratory and exploitative performance of the optimizer. The main optimization phases of the proposed mEDO optimizer, including initialization, exploitation, exploration, and the stopping condition of the updating process, are described in detail in the following paragraphs. At the end of this section, the time complexity of the proposed mEDO algorithm is discussed.

3.2 mEDO optimization phases

Like other optimization algorithms, the modified exponential distribution optimizer (mEDO) begins with the initialization phase, in which Eq. (1) is used to generate a starting population (Xvictors) of N randomly generated, widely dispersed solutions. Once the population (first generation) is initialized, the algorithm applies both its explorative and exploitative capabilities over the iterations in search of the global optimal solution.

For the exploitation capability of the mEDO, once Xvictors is initialized, its solutions are evaluated using the objective function and ranked according to their objective values, from best to worst. The EPD curve attributes (that is, mean, variance, and exponential rate) are then employed to update the currently generated solution. Additionally, the characteristics of the memoryless matrix are exploited to store the current new solutions regardless of their fitness values. As discussed above, the memoryless matrix is initialized with the original Xvictors population. The current solution is updated using one of two approaches (i.e., OL and LEO) based on a random value (r1), where \(r1 \in [0,1]\). If \(r1 > 0.5\), the OL is used as in Sect. 2.3 and Eq. (22). Otherwise, the LEO is used based on the value of u1, which is calculated using Eq. (14) as discussed in Sect. 2.2: if \(u1 < 0.5\), Eq. (11) is used to update the solution; otherwise, Eq. (12) is used.
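The branching rule between the two enhancement strategies can be summarized in a small sketch. It only encodes the decision logic stated above: the update equations themselves are represented by labels, the value of u1 is taken as an input instead of being computed from Eq. (14), and the function name `choose_update` is hypothetical.

```python
def choose_update(r1, u1):
    """Select the update rule for the current solution.

    r1 is a random value in [0, 1]; u1 is the random variable that the
    text obtains from Eq. (14) and is passed in here as a plain number.
    """
    if r1 > 0.5:
        return "OL (Eq. 22)"
    if u1 < 0.5:
        return "LEO (Eq. 11)"
    return "LEO (Eq. 12)"


# The three possible outcomes of the rule.
print(choose_update(0.9, 0.1))  # OL branch, u1 is ignored
print(choose_update(0.3, 0.1))  # LEO branch, first update rule
print(choose_update(0.3, 0.8))  # LEO branch, second update rule
```

Because r1 is compared against a fixed threshold of 0.5, each solution has an equal chance of being refined by the OL or by the LEO when r1 is drawn uniformly.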

For the exploration capability of mEDO, Eq. (7) is used to update the currently generated solution, allowing the algorithm to detect the regions of the search space that are believed to contain the global optimal solution. In the mEDO exploration phase, the optimization model is constructed using two victors drawn from the original population according to the exponential distribution.

For the termination step of the mEDO, the process of updating the solutions through the exploration and exploitation phases is repeated until a stopping condition (the maximum number of iterations) is met. To provide a clear understanding of the proposed mEDO algorithm, Algorithm 1 presents its pseudo-code. Furthermore, Fig. 1 shows a flowchart that illustrates the mEDO algorithm in detail.

3.3 Computational complexity

In this section, the computational cost of the mEDO is analyzed in terms of time and space complexity.

3.3.1 The time complexity

The time complexity of mEDO can be estimated depending on the main operations of the optimization algorithms, that is, initialization, update of the solution and evaluation of solutions. Therefore, it is possible to calculate the time complexity of the mEDO algorithm considering the total time consumed by these operations, as shown in the following paragraphs.

Generating a population of N random solutions, each with d dimensions, involves selecting a random value for each of the d dimensions in each solution. Since N solutions need to be generated, this process must be repeated N times, resulting in a total of \(N \times d\) random value selections. Therefore, the time required for the initialization operation is \(O(N \times d)\).

The time required for solution evaluation varies with the complexity of the objective function used in the optimization process. Let us assume that the cost function consumes C time units per evaluation. In the mEDO algorithm, the fitness function is assessed at two points for each solution: once after the initialization operation and once after each solution update. The initialization operation generates N random solutions, so the time required to evaluate them is \(O(C \times N)\). After initialization, the algorithm updates the solutions iteratively until a stopping criterion is met. In each iteration, the fitness (objective) function is evaluated once for each new solution. Therefore, the time required for solution evaluation during the update process is \(O(Max\_Fes \times C \times N)\), where \(Max\_Fes\) is the number of independent runs multiplied by the maximum number of iterations (\(Max\_time\)). In total, the time required for solution evaluation is \(O(C \times N) + O(Max\_Fes \times C \times N)\).

In the mEDO algorithm, the solution update operation involves two main phases: exploration and exploitation. The exploration phase involves generating new candidate solutions by applying a mutation operator to current solutions. In mEDO, the mutation operator involves adding a small random value to each dimension of the solution. The time required to generate each new candidate solution is proportional to the dimensionality of the problem, which is d. Since N new solutions are generated in each iteration, the time complexity of the exploration phase is \(O(0.5 \times Max\_Fes \times N \times d)\).

The exploitation phase involves improving the current solutions by applying local search operations, such as LEO and OL. The time required for each of these operations is proportional to the dimensionality of the problem and the size of the population, which is \(N \times d\). Since \(Max\_Fes\) evaluations are performed, the time complexity of the exploitation phase is approximately \(O(0.5 \times Max\_Fes \times N \times d)\). Therefore, the overall time complexity of the solution update process in mEDO is approximately \(O(Max\_Fes \times N \times d)\).

From the previous calculations, the overall time complexity of mEDO is calculated as follows: Time complexity (mEDO) = Time complexity (initialization) + Time complexity (evaluation) + Time complexity (updating) = \(O(N \times d) + O (C \times N) + O (Max\_Fes \times C \times N) + O (0.5\times Max\_Fes \times N \times d) + O (0.5 \times Max\_Fes \times N \times d)\) = \(O (Max\_Fes \times C \times N) + O (Max\_Fes \times N \times d)\) = \(O (Max\_Fes \times C \times N + Max\_Fes \times N \times d)\) = \(O (Max\_Fes \times N (C + d)).\)
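As a rough illustration of this bound, the following Python sketch tallies the individual terms and compares their sum with the dominant term \(Max\_Fes \times N (C + d)\). The function name `medo_operation_count` and the sample settings are assumptions chosen for illustration only; they are not taken from the authors' implementation.

```python
def medo_operation_count(n_agents, dim, max_fes, eval_cost):
    """Tally the terms of the time-complexity estimate (illustrative names).

    n_agents = N, dim = d, max_fes = Max_Fes, eval_cost = C.
    """
    initialization = n_agents * dim
    evaluation = eval_cost * n_agents + max_fes * eval_cost * n_agents
    exploration = 0.5 * max_fes * n_agents * dim
    exploitation = 0.5 * max_fes * n_agents * dim
    total = initialization + evaluation + exploration + exploitation
    # Dominant term kept by the big-O simplification: Max_Fes * N * (C + d).
    dominant = max_fes * n_agents * (eval_cost + dim)
    return {"total": total, "dominant": dominant}


# Hypothetical settings, chosen only to show the relative size of the terms.
counts = medo_operation_count(n_agents=30, dim=10, max_fes=500, eval_cost=5.0)
print(counts["total"], counts["dominant"])
```

With these hypothetical settings the initialization terms contribute well under one percent of the total, which is why they vanish in the simplified expression.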

3.3.2 The space complexity

The space complexity of an algorithm can be defined as the memory space occupied during its initialization. In our case, the total space complexity of the mEDO algorithm is \(O(N \times d)\), where N is the total number of search agents and d is the dimension of the problem.

4 Simulation results and discussion: CEC’2020 test suite analysis

In this section, the proposed mEDO algorithm is evaluated through three experimental series. All experiments were run in MATLAB R2019a on a computer with the following specifications: Intel(R) Core(TM) i7-6820HQ CPU running at 2.71 GHz, 16 GB of RAM, and a 64-bit version of Windows 10 Pro.

To assess the performance of mEDO, the algorithm is first tested on the IEEE Congress on Evolutionary Computation (CEC) CEC’2020 benchmark test functions [82]. The CEC’2020 benchmark includes four types of functions: unimodal, multimodal, hybrid, and composition functions. Table 2 provides the type, description, and optimal value (Fi*) of each function. To illustrate the differences and characteristics of each problem, Fig. 2 shows a 2-dimensional visualization of these functions.

4.1 Experimental series 1: comparison of the proposed mEDO with well-known algorithms

4.1.1 Statistical results analysis

The mEDO algorithm and its competitors are assessed in terms of the mean and standard deviation (STD) of the best solutions obtained over 30 independent runs. The scores are presented in Table 3, where the best scores are highlighted in bold. To make these results clearer, the averages (i.e., the average of the STD and the average of the mean) over the ten CEC’2020 benchmark functions have been computed for each algorithm. These averages are presented in Fig. 3 on a logarithmic scale. This figure shows the superior performance of mEDO compared to the other algorithms: it improves on the STD of its closest competitor (i.e., EDO) by 50% at the same average of the mean (i.e., 2.0e+03). Furthermore, the results of the Friedman test confirm that the mEDO algorithm statistically outperforms the other algorithms.
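The per-function statistics described above can be sketched in Python as follows. This is a minimal illustration: the helper name `summarize_runs` and the sample values are hypothetical, and the use of the sample standard deviation (divisor n - 1) is an assumption, since the text does not state which estimator was used.

```python
import statistics


def summarize_runs(best_per_run):
    """Return (mean, sample standard deviation) of the best fitness of each run."""
    return statistics.mean(best_per_run), statistics.stdev(best_per_run)


# Hypothetical best-fitness values from five runs (illustrative, not paper data).
runs = [100.0, 102.0, 98.0, 101.0, 99.0]
mean_value, std_value = summarize_runs(runs)
print(mean_value, std_value)
```

A lower STD at the same mean indicates that the runs agree more closely with each other, which is the sense in which the comparison with EDO is made.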

4.1.2 Parameter settings

As mentioned in [83], the performance of search algorithms can be affected by their parameters, such as population size. Adjusting the algorithms’ parameters may produce promising performance [84]. For fair and statistically significant comparative benchmarking, thirty independent runs with a maximum of five hundred iterations (equivalent to 1500 evaluations) have been conducted for each algorithm. The parameters of each algorithm used in the comparison are set to their default values, as listed in Table 4.

4.1.3 Convergence curve analysis

One of the most crucial tests used to assess the stability of an algorithm is convergence analysis; Fig. 4 illustrates the convergence rates of the proposed algorithm against competing algorithms. As shown in this figure, the proposed algorithm achieves the fastest convergence rate over the CEC’2020 benchmark test functions in comparison to the other algorithms. It reaches its optimal solution in a small number of iterations compared to the other algorithms. This indicates that the proposed algorithm could provide promising results for solving real-time optimization problems and problems that require fast computation.

4.1.4 Box-plot analysis

To assess the strength of the proposed algorithm against the other algorithms graphically, a boxplot analysis is presented in this subsection. Boxplots are a type of exploratory data analysis used to understand the variability of data, that is, how widely the data are spread out. Specifically, a boxplot shows the five-number summary of a data set: the lowest value (min), the lower quartile (Q1), the median (Q2), the upper quartile (Q3), and the highest value (max). Figure 5 shows the boxplots for the proposed mEDO algorithm and its competing algorithms over the ten functions of the CEC’2020 benchmark. A narrow box indicates that the results vary little from run to run. The boxplots for the mEDO algorithm are the narrowest among the competing algorithms for nine functions out of ten. Therefore, the mEDO algorithm outperforms these algorithms in terms of the consistency of its results.
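The five-number summary behind each box can be computed as in the sketch below. The helper name `five_number_summary` and the sample data are hypothetical, and the quartile convention (the `inclusive` method of Python's `statistics.quantiles`) is an assumption; plotting tools may use a slightly different rule, so the quartiles need not match Fig. 5 exactly.

```python
import statistics


def five_number_summary(values):
    """Return (min, Q1, median, Q3, max) for a list of run results."""
    q1, q2, q3 = statistics.quantiles(values, n=4, method="inclusive")
    return min(values), q1, q2, q3, max(values)


# Hypothetical results of nine runs (illustrative only).
summary = five_number_summary([9, 1, 8, 2, 7, 3, 6, 4, 5])
box_width = summary[3] - summary[1]  # interquartile range, i.e. the box height
print(summary, box_width)
```

The interquartile range `box_width` is the quantity that the "narrow box" argument refers to.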

4.1.5 Qualitative metrics analysis

The analysis presented so far confirms the high performance of the mEDO algorithm. However, further analytical tests are needed to clearly demonstrate its strength in solving optimization problems. One of these is monitoring the behavior of the search agents during the search process, which gives insight into the convergence rate of the proposed algorithm and a deeper understanding of its suitability for real-world applications. The group of figures shown in Fig. 6 displays the behavior of the mEDO agents in terms of the 2D representation of the ten CEC’2020 functions, diversity, search history, average fitness history, and optimization history. Each of these is discussed in the following paragraphs.

The 2D visualization of the functions The first column of Fig. 6 shows the CEC’2020 functions in a 2D space. Each function exhibits a distinctive topology, which gives insight into both its shape and its type.

Search history The second column of Fig. 6 displays the search history of the agents from the first iteration to the last. The search agents (points) move closer to each other and cluster around the best solution, which indicates a decrease in the fitness function value. For the vast majority of the ten functions, the search history shows that the mEDO algorithm is able to explore and exploit the space around the global optimum (the red point), where the lowest fitness values exist.

The average fitness history for the agents’ behavior The third column of Fig. 6 displays the average fitness value as a function of the number of iterations. This curve gives insight into the general behavior of the search agents as the optimization process goes on. As seen in the figure, the average fitness decreases as the number of iterations increases. This shows that the population improves over time, and the steady improvement in the average fitness history demonstrates the collaborative searching behavior of the search agents and the effectiveness of the mEDO algorithm.

The Convergence curve and the analysis of optimization history In the fourth column of Fig. 6, the optimization history curves show the value of the fitness function of the search agents as a function of the algorithm iterations. For all ten test functions, the convergence curves of the mEDO algorithm exhibit a gradual decrease, indicating a high level of collaboration between the search agents while searching for the optimal solution, and eventually reach an equilibrium state. The different shapes of the optimization history curves suggest a link between the class of function being optimized and the optimization history. This observation highlights the importance of understanding the attributes of the optimized problem and how they can affect the performance of the algorithm.

Population diversity The diversity curves in the last column of Fig. 6 show how close the search agents are to each other throughout the search process. As shown in the curves, the diversity value is high at the beginning of the optimization process, which confirms that the search agents are exploring the search space. As the iteration number increases and the optimization progresses, the exploitation phase takes over and the diversity value decreases. These observations indicate a good balance between the explorative and exploitative behavior of mEDO in comparison to the original EDO algorithm.
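The text does not state which diversity measure is plotted. As a minimal sketch, assuming a measure that is common in the metaheuristics literature (the mean absolute distance of the agents from the dimension-wise median, averaged over all dimensions), diversity could be computed as follows. The function name `population_diversity` and the sample populations are hypothetical.

```python
import statistics


def population_diversity(population):
    """Mean absolute distance of the agents from the dimension-wise median.

    population is a list of agents, each a list of d coordinates.
    This particular measure is an assumption, not taken from the paper.
    """
    n_agents = len(population)
    dim = len(population[0])
    per_dimension = []
    for j in range(dim):
        column = [agent[j] for agent in population]
        median_j = statistics.median(column)
        per_dimension.append(sum(abs(median_j - x) for x in column) / n_agents)
    return sum(per_dimension) / dim


# Hypothetical populations: one spread out, one fully converged.
spread = population_diversity([[0.0, 0.0], [2.0, 2.0], [4.0, 4.0]])
converged = population_diversity([[2.0, 2.0], [2.0, 2.0], [2.0, 2.0]])
print(spread, converged)
```

Under this measure a value near zero means the agents have collapsed onto a single point, which corresponds to the late, exploitative part of the curves.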

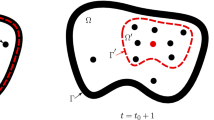

4.1.6 Exploration and exploitation analysis

To clearly demonstrate the behavior of the mEDO algorithm in the exploration and exploitation phases, a 2D view of the search process around the global best solution over the ten CEC’2020 functions is presented in Fig. 7. As shown in this figure, the mEDO algorithm begins with a high exploration and low exploitation ratio. As the iterations progress, the algorithm transitions to the exploitation stage, which then dominates most of the search process. This is good evidence of the balance between the exploration and exploitation phases of the mEDO algorithm and of its ability to transition easily between the two. As a result, the mEDO algorithm is able to reach high-quality solutions while maintaining efficient computation.
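One way such exploration and exploitation ratios are often derived is from a per-iteration diversity value: the exploration percentage is the current diversity relative to its maximum, and the exploitation percentage is the complementary gap. The sketch below assumes that definition, which may differ from the one used to produce Fig. 7; the function name and the sample history are hypothetical.

```python
def exploration_exploitation(diversity_history):
    """Return per-iteration (exploration %, exploitation %) lists.

    Assumed definition: XPL = 100 * Div / Div_max and
    XPT = 100 * |Div - Div_max| / Div_max.
    """
    div_max = max(diversity_history)
    if div_max == 0:
        # A population that never moved has no measurable exploration.
        zeros = [0.0] * len(diversity_history)
        return zeros, list(zeros)
    exploration = [100.0 * d / div_max for d in diversity_history]
    exploitation = [100.0 * abs(d - div_max) / div_max for d in diversity_history]
    return exploration, exploitation


# Hypothetical diversity values over three iterations.
xpl, xpt = exploration_exploitation([4.0, 2.0, 1.0])
print(xpl, xpt)
```

With a decreasing diversity history the exploration share falls while the exploitation share rises, matching the qualitative pattern described for Fig. 7.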

4.1.7 Scalability analysis

Assessing an algorithm on test functions of different dimensions is necessary because scaling up the dimension of a problem generally affects performance. This section therefore presents a scalability test on the CEC’2020 benchmark functions with dimensions 5, 10, 15, and 20. Each algorithm was run with a maximum of 45,000 function evaluations over 30 distinct runs. Figure 8 visualizes the performance of mEDO and the other 7 algorithms in the comparison by plotting the average fitness value achieved by each algorithm on each of the 10 test functions in the four dimensions. Specifically, mEDO achieves the second-best performance on F2, while on the other 9 functions it achieves the best performance among the compared algorithms across the four dimensions.

4.1.8 Wilcoxon’s rank-sum test analysis

To complement the previous statistical analysis, this section performs pairwise comparisons of the algorithms. The Wilcoxon rank-sum test is used to judge whether the differences between the results obtained by the algorithms are consistent or merely random, given the stochastic nature of MAs. The results obtained by MAs must be stable despite this stochastic nature. See [85] for details on Wilcoxon’s rank-sum test. In Table 5, the proposed mEDO algorithm is compared with the other seven algorithms in terms of the best average scores. The experiment uses a significance level of 0.05. As shown in Table 5, the p-values are less than 0.05 for most functions, which indicates statistically significant differences across all four categories of the IEEE CEC’2020 benchmark functions and stable performance for the proposed mEDO algorithm.
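For illustration, a two-sided rank-sum test can be sketched in plain Python using the normal approximation, which is reasonable for samples of 30 runs. This sketch applies no tie or continuity correction, so its p-values may differ slightly from those of a statistical package (SciPy, for example, offers `scipy.stats.ranksums`). The function name `rank_sum_test` and the sample data are hypothetical.

```python
import math


def rank_sum_test(x, y):
    """Two-sided Wilcoxon rank-sum test via the normal approximation.

    Returns (z, p). Tied values receive the average of their ranks.
    """
    pooled = sorted([(v, 0) for v in x] + [(v, 1) for v in y])
    rank_sum_x = 0.0
    i = 0
    while i < len(pooled):
        j = i
        while j < len(pooled) and pooled[j][0] == pooled[i][0]:
            j += 1
        average_rank = (i + 1 + j) / 2.0  # mean of ranks i+1 .. j
        rank_sum_x += average_rank * sum(1 for k in range(i, j) if pooled[k][1] == 0)
        i = j
    n1, n2 = len(x), len(y)
    expected = n1 * (n1 + n2 + 1) / 2.0
    std = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12.0)
    z = (rank_sum_x - expected) / std
    p = math.erfc(abs(z) / math.sqrt(2.0))  # two-sided tail probability
    return z, p


# Hypothetical results: algorithm A is clearly lower (better) than algorithm B.
z_value, p_value = rank_sum_test(list(range(1, 11)), list(range(11, 21)))
print(z_value, p_value, p_value < 0.05)
```

A p-value below the 0.05 significance level, as in this toy example, is read as a statistically significant difference between the two sets of runs.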

4.2 Experimental series 2: comparison of the proposed mEDO with other recent optimizers

To test the proposed mEDO algorithm against recently published optimizers on high-dimensional problems, this section compares the mEDO algorithm with eight recent optimizers, namely the Walrus Optimizer (WO) [86], the Sinh Cosh Optimizer (SCHO) [87], the Kepler Optimization Algorithm (KOA) [88], the Seagull Optimization Algorithm (SOA) [89], RUN beyond the metaphor [90], INFO [91], the Chaotic Hunger Games Search Optimization Algorithm (HGS) [92], and the original EDO algorithm.

4.2.1 Parameter settings

To compare the performance of each algorithm in solving high-dimensional problems, the CEC’2020 benchmark functions with dim = 100 are used. To achieve a fair comparison, each algorithm has been run 30 times independently. The parameters of each algorithm used in the comparison are set to their default values, as listed in Table 6.

4.2.2 Convergence curve analysis

Figure 9 illustrates the convergence rates of the proposed algorithm against competitive algorithms. As shown in this figure, the proposed algorithm achieves the fastest convergence rate over the CEC’2020 benchmark test functions in comparison to the other algorithms. It reaches its optimal solution in a small number of iterations compared to the other algorithms. The convergence behavior of the proposed mEDO algorithm indicates high performance compared to the other 8 algorithms in solving high-dimensional and real-time optimization problems.

4.2.3 Box-plot analysis

In terms of boxplot analysis, as shown in Fig. 10, the proposed mEDO algorithm has narrower boxes than the other recent algorithms for most of the 10 functions, which indicates a good distribution of data. Therefore, the mEDO algorithm outperforms these algorithms in solving real-world and high-dimensional problems.

4.2.4 Running time analysis

To assess the computational cost of the proposed algorithm, Fig. 11 visualizes the total running time consumed by mEDO and the other recent algorithms over 10 distinct runs. The average running time over the 10 runs is shown in the last part of the bar chart.

As can be seen, the proposed algorithm achieves the minimum running time among all the algorithms in the comparison.

4.3 Experimental series 3: comparison of the proposed mEDO with competitive and CEC-winning optimizers

To test the proposed mEDO algorithm against high-performance and competitive algorithms on high-dimensional problems, this section compares the mEDO algorithm against three competitive and CEC-winning algorithms, namely the adaptive guided differential evolution (AGDE) algorithm [93], the covariance matrix adaptation evolution strategy (CMA-ES) [94], and ensemble sinusoidal differential covariance matrix adaptation with Euclidean neighborhood (LSHADE_cnEpSin) [95], as well as five other algorithms including the original EDO algorithm.

4.3.1 Parameter settings

The CEC’2020 benchmark functions are used to evaluate the performance of each algorithm. To achieve a fair comparison, each algorithm has been run 30 times independently. In addition, the parameters of each algorithm used in the comparison are set to their default values, as listed in Table 7.

4.3.2 Convergence curve analysis

Figure 12 illustrates the convergence rates of the proposed algorithm against competitive algorithms. As shown in this figure, the proposed algorithm achieves the fastest convergence rate over the CEC’2020 benchmark test functions in comparison to the other algorithms. It reaches its optimal solution in a small number of iterations compared to the other algorithms. These curves indicate that the proposed mEDO algorithm performs better than the other 8 algorithms in solving high-dimensional and real-time optimization problems.

4.3.3 Box-plot analysis

In terms of boxplot analysis, as shown in Fig. 13, the proposed mEDO algorithm has narrower boxes than the other competitive algorithms for most of the 10 functions, indicating a good distribution of data. Therefore, the mEDO algorithm outperforms these algorithms in solving real-world and high-dimensional problems.

4.3.4 Running time analysis

To assess the computational cost of the proposed algorithm, Fig. 14 visualizes the total running time consumed by mEDO and the other competitive algorithms over 10 distinct runs. The average running time over the 10 runs is shown in the last part of the bar chart.

As can be seen, the proposed algorithm achieves the minimum running time among all the algorithms in the comparison.

5 Simulation results and discussion: engineering design problems

Companies that depend mainly on engineering and mechanical systems seek a strong position in the competitive market. Therefore, researchers employ optimization algorithms to achieve promising results in terms of performance, cost, and product life cycle. In this context, optimization algorithms provide an effective and simple way to solve problems related to engineering and mechanical systems. Applying the proposed algorithm to engineering design problems is a common practice for testing and validating an optimization technique. How well the algorithm performs on challenges of this nature indicates its efficiency and its capability to address other types of problems. Studies show the effectiveness of this practice in evaluating suggested algorithms on a variety of design problems [96]. The proposed mEDO algorithm is used to solve eight engineering design problems: seven constrained problems (the tension/compression spring design problem, the rolling element bearing design problem, the pressure vessel design problem, the cantilever beam design problem, the welded beam design problem, the three-bar truss problem, and the I-beam vertical deflection problem) and one unconstrained problem (the gear train design problem) [97]. Several methods can be used to solve constrained optimization problems, such as the penalty method. In the penalty method, a sufficiently large value is added to the objective value when one or more constraints are violated. In our experiments, a penalty value of 10,000 is added to the objective function value when one or more constraints are violated (i.e., an infeasible solution is obtained); otherwise, the value of the objective function is taken as the value of a feasible solution.
The simulations are conducted with 30 independent runs for each optimization problem, using 30 search agents and a maximum of 1000 iterations, and the mEDO results are compared to those achieved by the other competing algorithms.
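The penalty scheme described above can be sketched as follows. This is a minimal illustration under two assumptions: constraints are written in the form \(g(x) \le 0\), and the penalty of 10,000 is added once regardless of how many constraints are violated. The function names and the toy problem are hypothetical.

```python
PENALTY = 10_000.0  # penalty value stated in the text


def penalized_objective(objective, constraints, x):
    """Return f(x), plus PENALTY once if any constraint g(x) <= 0 is violated."""
    value = objective(x)
    infeasible = any(g(x) > 0.0 for g in constraints)
    return value + PENALTY if infeasible else value


# Toy problem (hypothetical): minimize x0^2 + x1^2 subject to x0 + x1 >= 1.
def toy_objective(x):
    return x[0] ** 2 + x[1] ** 2


def toy_constraint(x):
    return 1.0 - x[0] - x[1]  # rewritten in g(x) <= 0 form


feasible_value = penalized_objective(toy_objective, [toy_constraint], [1.0, 1.0])
infeasible_value = penalized_objective(toy_objective, [toy_constraint], [0.0, 0.0])
print(feasible_value, infeasible_value)
```

Because the penalty is far larger than any feasible objective value in these problems, an infeasible candidate always ranks below every feasible one during selection.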

5.1 Tension/compression spring design problem

According to [98], the main optimization goal of this engineering design problem is to reduce the weight of a tension/compression spring subject to constraints on shear stress, deflection, and surge frequency. The design variables are the coil diameter (D), the wire diameter (d), and the number of active coils (N). Figure 15 illustrates the main elements of this problem. The optimized design variables and the values of the associated cost function are shown in Table 8, while the statistical findings of the 30 different runs are shown in Table 9.

According to the results recorded in Tables 8 and 9, it is obvious that the mEDO algorithm produced the best design when compared to those produced by the competing algorithms. Furthermore, the mEDO algorithm produced the best statistical results, as seen in the bold numbers for the statistical best, mean, and standard deviation measures.

5.1.1 Rolling element bearing design problem

As mentioned in [99], the primary goal of the rolling element bearing design problem is to maximize the bearing’s fatigue life, which depends on its dynamic load-carrying capacity. The problem consists of ten decision variables in addition to nine different constraints. The details of the problem are shown in Fig. 16. The values of the ideal decision variables produced by each optimization algorithm considered in the study are presented in Table 10. The statistical results of the 30 independent runs for each algorithm are displayed in Table 11.

It is clear from Tables 10 and 11 that mEDO provides a more efficient solution to this problem than the other competitive algorithms.

5.1.2 Pressure vessel design

Another engineering design problem is the pressure vessel design problem, which mainly seeks to minimize material costs while satisfying various constraints on the design. As introduced in [100], the head thickness \((T_{h})\), inner radius (R), shell thickness \((T_{s})\), and the length of the cylindrical section excluding the head (L) are the design variables of this problem. Figure 17 illustrates the cylindrical pressure vessel design problem. Table 12 presents the design variables along with the cost function \(f_{cost}\), while Table 13 presents the statistical results of 30 distinct runs.

The proposed mEDO algorithm achieves the best \(f_{cost}\) value. Furthermore, the proposed algorithm achieved the lowest mean, worst and standard deviation values, demonstrating its high performance and applicability compared to the other algorithms used in comparison.

5.1.3 Gear train design problem

Sandgren first introduced the gear train (GT) design problem in [101], and it has become one of the well-known optimization design problems. The GT design problem is more complex than the other engineering design problems because its design variables are mixed in nature (that is, discrete, continuous, and integer). In the GT problem, we aim to minimize the number of connecting gears in the train used to drive the spindles by selecting the most suitable number of fixed input and fixed output drive spindles, in other words, minimizing the ratio between the input and the output gears. As shown in Fig. 18, A, B, C, and D denote the four design variables of the GT problem. Equation 23 gives the mathematical formulation of the GT problem. The optimized design variables and associated cost function values are shown in Table 14, while the statistical findings of the 30 different runs are shown in Table 15.
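For orientation, the formulation of this problem most often cited in the literature minimizes the squared deviation of the achieved gear ratio from a required ratio of 1/6.931, with integer tooth counts usually restricted to the range 12 to 60. The Python sketch below evaluates that commonly cited cost; whether it matches Eq. 23 term by term, and how its arguments map onto A, B, C, and D, are assumptions. The sample design is one frequently reported for this formulation and is not taken from Table 14.

```python
TARGET_RATIO = 1.0 / 6.931  # required gear ratio in the commonly cited formulation


def gear_train_cost(x1, x2, x3, x4):
    """Squared deviation of the achieved gear ratio from the target ratio.

    x1..x4 are integer tooth counts; their mapping to A-D is an assumption.
    """
    return (TARGET_RATIO - (x2 * x3) / (x1 * x4)) ** 2


good_design = gear_train_cost(49, 16, 19, 43)
poor_design = gear_train_cost(12, 12, 12, 12)
print(good_design, poor_design)
```

The cost is unconstrained apart from the bounds on the tooth counts, which is why this problem needs no penalty term.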

As shown in Tables 14 and 15, the design obtained by the proposed algorithm is better than the designs obtained by the other competing algorithms, which demonstrates the superiority of the proposed algorithm. Additionally, the mEDO algorithm produced the best statistical outcomes, as seen from the bold numbers for the statistical best, mean, and standard deviation measures.

5.1.4 Cantilever beam design problem

Minimizing the weight of the beam is the main goal of the cantilever beam design problem. The design, shown in Fig. 19 along with the objective function and constraints, comprises five hollow square sections of identical thickness, with the beam fixed at one end and free at the other. The proposed mEDO algorithm and seven other algorithms have been utilized to solve this problem. Table 16 lists the statistical measures of mEDO and the other comparative algorithms for 30 distinct runs, and Table 17 presents the optimal decision variable values obtained by each optimization algorithm.

Based on these results, it can be deduced that the mEDO algorithm outperforms the other competitive algorithms in minimizing the weight of the cantilever beam, achieving \({\textbf {1.33996}}\) as the best value. Additionally, the proposed algorithm has achieved the lowest mean, worst, and standard deviation values, indicating that it achieved a high degree of performance and applicability compared to the other algorithms.

5.1.5 Welded beam design problem

The optimization aim of the welded beam design problem is to satisfy a number of design requirements while achieving the lowest manufacturing cost. According to Fig. 20, the design factors for this problem are weld thickness (h), length (l), height (t), bar thickness (b), and pressure (P). The problem has been addressed using the mEDO algorithm, as well as seven additional algorithms, and the statistical results for 30 different runs are shown in Table 18. Table 19 presents the optimal decision variable values obtained by each optimization algorithm.

Based on these results, it can be deduced that the mEDO algorithm has achieved better results compared to the other optimizers, with the best cost value of 1.473036. Additionally, the proposed mEDO algorithm has achieved the lowest mean, worst, and standard deviation values, indicating that it has achieved a high degree of performance and applicability compared to the other algorithms.

5.1.6 Three-bar truss problem

The main optimization goal of the three-bar truss problem is to reduce the overall weight of the truss, where the decision variables are the cross-sectional areas of the truss bars (i.e., \(x_{A1}\) and \(x_{A2}\)). The elements of the problem are shown in Fig. 21. The optimal decision variables achieved by the competing optimization algorithms are presented in Table 20, while the statistical results of these algorithms are shown in Table 21.

The results show that the mEDO algorithm has achieved the lowest mean, worst, and standard deviation values, indicating that it has reached the highest level of performance. In summary, the proposed mEDO optimization algorithm has outperformed the other competing algorithms on the eight engineering problems. This superiority demonstrates its ability to handle complex engineering problems.

5.1.7 I-beam vertical deflection design problem

The I-beam design problem is one of the most widely used problems in the civil engineering field. The optimization goal of the I-beam design problem is to achieve a minimal vertical deflection of the beam. As shown in Fig. 22, the I-beam design problem contains four design variables, denoted b, h, \(t_w\), and \(t_f\). Two main restrictions (i.e., stress and cross-sectional area) need to be handled at the same time. Equation (24) gives the mathematical form of the objective function and constraints. The optimized results achieved by mEDO and the other algorithms in the comparison are listed in Table 22, while Table 23 collects the statistical measures of the best solutions.

As shown in Tables 22 and 23, the proposed algorithm produces the best design among the algorithms in the comparison, which is evidence of its high degree of applicability. Additionally, the mEDO algorithm achieves the best statistical results in terms of the best, mean, and standard deviation, as shown in bold.

6 Simulation results and discussion: combinatorial optimization problems

In addition to the engineering design problems, and to further demonstrate the effectiveness and applicability of the proposed mEDO, this section tests the performance of the proposed algorithm on a more complex type of problem. As mentioned in [102], metaheuristic algorithms perform well in solving NP-hard problems. The following subsection provides the details of the NP-hard quadratic assignment problem (QAP) and shows how mEDO obtains better solutions to this combinatorial optimization problem.

6.1 Quadratic assignment problem

The quadratic assignment problem (QAP), introduced for the first time in [103], is one of the most common combinatorial optimization problems. The main goal of the QAP is to assign a set of facilities to a set of locations at minimum assignment cost. The assignment cost depends mainly on the distances between each pair of locations and the flows between the pairs of facilities. In the QAP, n facilities must be assigned to n locations so that the assignment cost is minimized; the two main inputs are the facilities F, of size \(N \times N\), and the locations L, with indices running from 1 up to n. Equation (25) mathematically models the objective function of the assignment process [104, 105].

where \(f_{i j}\) denotes the weight (flow) between the pair of facilities i and j in F, \(d_{i j}\) denotes the distance between locations i and j in L, and \(\pi (i)\) gives the location assigned to facility i. The data set used for the QAP can be found in [106]. Because of the large number of instances it contains, we have selected \({\textbf {21}}\) problems to be solved by the proposed algorithm and the other algorithms used in the comparison. To ensure a fair comparison, each algorithm was run 30 times, with 300 iterations per run. Two performance metrics are used to statistically evaluate the algorithms: the average error \(AE_{avg}\) and the lowest error \(E_{lowest}\), which are defined in Eqs. (26) and (27).

where opt denotes the optimal solution, N denotes the size (i.e., the number of facilities) of the QAP instance, \(y_{i}\) denotes the result obtained in run i, and the lowest value is computed as \(lowest = \underset{i \in [N]}{\min }\ y(i)\).
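To make the quantities in Eqs. (25) to (27) concrete, the sketch below evaluates the standard QAP objective \(\sum_{i}\sum_{j} f_{ij}\, d_{\pi(i)\pi(j)}\) and two error metrics on a toy instance. The forms of `average_error` and `lowest_error` (relative gaps to the known optimum, in percent) are assumptions, since the exact expressions may differ from Eqs. (26) and (27). All names and data are hypothetical, and indices are 0-based.

```python
from itertools import permutations


def qap_cost(flow, dist, perm):
    """Assignment cost: sum of flow[i][j] * dist[perm[i]][perm[j]] over all i, j."""
    n = len(perm)
    return sum(flow[i][j] * dist[perm[i]][perm[j]]
               for i in range(n) for j in range(n))


def average_error(results, opt):
    """Assumed form of AE_avg: percentage gap between the mean result and opt."""
    mean_result = sum(results) / len(results)
    return 100.0 * (mean_result - opt) / opt


def lowest_error(results, opt):
    """Assumed form of E_lowest: percentage gap between the best result and opt."""
    return 100.0 * (min(results) - opt) / opt


# Toy 3-facility instance (hypothetical data).
flow = [[0, 3, 1], [3, 0, 2], [1, 2, 0]]
dist = [[0, 1, 4], [1, 0, 2], [4, 2, 0]]

# Brute force is only feasible for tiny n; it gives the reference optimum here.
opt = min(qap_cost(flow, dist, p) for p in permutations(range(3)))

# Costs of three assignments standing in for the results of three runs.
results = [qap_cost(flow, dist, p) for p in [(0, 1, 2), (1, 0, 2), (2, 1, 0)]]
print(opt, results, average_error(results, opt), lowest_error(results, opt))
```

For the benchmark instances the optimum cannot be enumerated in this way, so opt would instead be the best known value published with the data set.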

The selected \({\textbf {21}}\) instances of the QAP are used to test the performance and applicability of mEDO and the other seven compared algorithms. The solutions obtained are collected in Table 24. As shown in the results, the mEDO algorithm achieves the lowest values of the average error \(AE_{avg}\) and the lowest error \(E_{lowest}\) among all the algorithms for the majority of problems. Additionally, the Friedman mean rank test is used to compare all algorithms over all instances together, and as can be seen in the last row of Table 24, the mEDO algorithm achieves the first rank among all competing algorithms. For a visual comparison, Fig. 23 shows how the solutions are distributed. For almost all instances, mEDO achieves narrower boxplots and a better distribution of solutions than the other competing algorithms, which supports the superiority of the mEDO algorithm in solving more complex combinatorial problems in terms of boxplot analysis as well.
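The mean ranks used in a Friedman-style comparison can be sketched as follows: on each problem the algorithms are ranked by error (lower is better, ties share the average rank), and the ranks are then averaged over all problems. The function name `mean_ranks` and the score table are hypothetical; the sketch computes only the mean ranks, not the Friedman test statistic or its p-value.

```python
def mean_ranks(scores):
    """Average rank of each algorithm over all problems.

    scores[p][a] is the error of algorithm a on problem p (lower is better).
    """
    n_algorithms = len(scores[0])
    totals = [0.0] * n_algorithms
    for row in scores:
        order = sorted(range(n_algorithms), key=lambda a: row[a])
        i = 0
        while i < n_algorithms:
            j = i
            while j < n_algorithms and row[order[j]] == row[order[i]]:
                j += 1
            average_rank = (i + 1 + j) / 2.0  # tied entries share ranks i+1 .. j
            for k in range(i, j):
                totals[order[k]] += average_rank
            i = j
    return [total / len(scores) for total in totals]


# Hypothetical errors of three algorithms on three problems.
ranks = mean_ranks([[1.0, 2.0, 3.0],
                    [1.0, 3.0, 2.0],
                    [2.0, 2.0, 5.0]])
print(ranks)
```

The algorithm with the smallest mean rank is the one reported as ranked first in such a table.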

7 Conclusion and future work

This study has presented a new version of the exponential distribution optimizer (EDO) algorithm called mEDO, which combines two enhancement strategies, orthogonal learning (OL) and the local escaping operator (LEO), to improve the performance of the original EDO algorithm. To validate the quality and performance of the proposed mEDO algorithm, we evaluated it on ten functions from the IEEE CEC’2020 benchmark, eight constrained engineering design optimization problems, and 21 instances of the QAP combinatorial optimization problem. The proposed mEDO was compared with seven other metaheuristic algorithms, including the original EDO. The results obtained by mEDO demonstrate its superior ability to locate the optimal region, to achieve a better trade-off between exploration and exploitation, to converge faster to a nearly optimal solution than the other algorithms, and to solve more complex combinatorial problems. Furthermore, the statistical analysis indicates that mEDO outperforms the other algorithms.

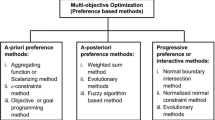

Finally, future work can proceed in the following directions: (1) applying mEDO to other complex real-world applications, such as biomedical problems; and (2) developing a multiobjective version of the EDO algorithm to handle multiobjective optimization problems such as vehicle routing and multiagent systems.

Data availability

Data sharing is not applicable to this article as no data sets were generated or analyzed during the current study.

References

Young, M.R.: A minimax portfolio selection rule with linear programming solution. Manag. Sci. 44(5), 673–683 (1998)

Sharpe, W.F.: A linear programming approximation for the general portfolio analysis problem. J. Fin. Quant. Anal. 6(5), 1263–1275 (1971)

Faina, L.: A global optimization algorithm for the three-dimensional packing problem. Eur. J. Oper. Res. 126(2), 340–354 (2000)

Li, H.-L., Chang, C.-T., Tsai, J.-F.: Approximately global optimization for assortment problems using piecewise linearization techniques. Eur. J. Oper. Res. 140(3), 584–589 (2002)

Fu, J.-F., Fenton, R.G., Cleghorn, W.L.: A mixed integer–discrete–continuous programming method and its application to engineering design optimization. Eng. Optim. 17(4), 263–280 (1991)

Houssein, E.H., Sayed, A.: Dynamic candidate solution boosted beluga whale optimization algorithm for biomedical classification. Mathematics 11(3), 707 (2023)

Tsai, J.-F., Li, H.-L.: Technical note-on optimization approach for multidisk vertical allocation problems. Eur. J. Oper. Res. 165(3), 835–842 (2005)

Lin, M.-H.: An optimal workload-based data allocation approach for multidisk databases. Data Knowl. Eng. 68(5), 499–508 (2009)

Floudas, C.A.: Global optimization in design and control of chemical process systems. J. Process Control 10(2–3), 125–134 (2000)

Pardalos, P.M., Floudas, C.A.: Deterministic Global Optimization: Theory, Algorithms and Applications. Kluwer Academic Publishers, New York (2000)

Khalid, A.M., Hosny, K.M., Mirjalili, S.: COVIDOA: a novel evolutionary optimization algorithm based on coronavirus disease replication lifecycle. Neural Comput. Appl. 34(24), 22465–22492 (2022)

Houssein, E.H., Abohashima, Z., Elhoseny, M., Mohamed, W.M.: Hybrid quantum-classical convolutional neural network model for COVID-19 prediction using chest X-ray images. J. Comput. Des. Eng. 9(2), 343–363 (2022)

Houssein, E.H., Emam, M.M., Ali, A.A.: An efficient multilevel thresholding segmentation method for thermography breast cancer imaging based on improved chimp optimization algorithm. Expert Syst. Appl. 185, 115651 (2021)

Hosny, K.M., Awad, A.I., Khashaba, M.M., Mohamed, E.R.: New improved multi-objective gorilla troops algorithm for dependent tasks offloading problem in multi-access edge computing. J. Grid Comput. 21(2), 21 (2023)

Houssein, E.H., Saad, M.R., Ali, A.A., Shaban, H.: An efficient multi-objective gorilla troops optimizer for minimizing energy consumption of large-scale wireless sensor networks. Expert Syst. Appl. 212, 118827 (2023)

Nadimi-Shahraki, M.H., Asghari Varzaneh, Z., Zamani, H., Mirjalili, S.: Binary starling murmuration optimizer algorithm to select effective features from medical data. Appl. Sci. 13(1), 564 (2022)

Houssein, E.H., Saber, E., Ali, A.A., Wazery, Y.M.: Centroid mutation-based search and rescue optimization algorithm for feature selection and classification. Expert Syst. Appl. 191, 116235 (2022)

Nama, S., Saha, A.K., Sharma, S.: A hybrid TLBO algorithm by quadratic approximation for function optimization and its application. In: Balas, V.E., Kumar, R., Srivastava, R. (eds.) Recent Trends and Advances in Artificial Intelligence and Internet of Things, pp. 291–341. Springer, Cham (2020)

Schneider, J., Kirkpatrick, S.: Stochastic Optimization. Springer, Cham (2007)

Cavazzuti, M.: Deterministic optimization. In: Cavazzuti, M. (ed.) Optimization Methods: From Theory to Design Scientific and Technological Aspects in Mechanics, pp. 77–102. Springer, Cham (2013)

Lin, M.-H., Tsai, J.-F., Yu, C.-S.: A review of deterministic optimization methods in engineering and management. Math. Probl. Eng. (2012). https://doi.org/10.1155/2012/756023

Fouskakis, D., Draper, D.: Stochastic optimization: a review. Int. Stat. Rev. 70(3), 315–349 (2002)

Uryasev, S., Pardalos, P.M.: Stochastic Optimization: Algorithms and Applications, vol. 54. Springer, Cham (2013)

Zakaria, A., Ismail, F.B., Lipu, M.S.H., Hannan, M.A.: Uncertainty models for stochastic optimization in renewable energy applications. Renew. Energy 145, 1543–1571 (2020)

Sörensen, K., Sevaux, M., Glover, F.: A history of metaheuristics. In: Martí, R., Pardalos, P.M., Resende, M.G. (eds.) Handbook of Heuristics, pp. 791–808. Springer, Cham (2018)

Mirjalili, S., Lewis, A.: The whale optimization algorithm. Adv. Eng. Softw. 95, 51–67 (2016)

Sörensen, K., Glover, F.: Metaheuristics. Encycl. Oper. Res. Manag. Sci. 62, 960–970 (2013)

Hussain, K., Salleh, M.N.M., Cheng, S., Shi, Y.: Metaheuristic research: a comprehensive survey. Artif. Intell. Rev. 52, 2191–2233 (2019)

Hashim, F.A., Houssein, E.H., Hussain, K., Mabrouk, M.S., Al-Atabany, W.: A modified Henry gas solubility optimization for solving motif discovery problem. Neural Comput. Appl. 32(14), 10759–10771 (2020)

Houssein, E.H., Hosney, M.E., Oliva, D., Mohamed, W.M., Hassaballah, M.: A novel hybrid Harris Hawks optimization and support vector machines for drug design and discovery. Comput. Chem. Eng. 133, 106656 (2020)

Houssein, E.H., Hosney, M.E., Elhoseny, M., Oliva, D., Mohamed, W.M., Hassaballah, M.: Hybrid Harris hawks optimization with cuckoo search for drug design and discovery in chemoinformatics. Sci. Rep. 10(1), 1–22 (2020)

Neggaz, N., Houssein, E.H., Hussain, K.: An efficient Henry gas solubility optimization for feature selection. Expert Syst. Appl. 152, 113364 (2020)

Hussain, K., Neggaz, N., Zhu, W., Houssein, E.H.: An efficient hybrid sine-cosine Harris Hawks optimization for low and high-dimensional feature selection. Expert Syst. Appl. 176, 114778 (2021)

Houssein, E.H., Helmy, B.E.-D., Oliva, D., Elngar, A.A., Shaban, H.: A novel black widow optimization algorithm for multilevel thresholding image segmentation. Expert Syst. Appl. 167, 114159 (2021)

Houssein, E.H., Mahdy, M.A., Blondin, M.J., Shebl, D., Mohamed, W.M.: Hybrid slime mould algorithm with adaptive guided differential evolution algorithm for combinatorial and global optimization problems. Expert Syst. Appl. 174, 114689 (2021)

Kirkpatrick, S., Gelatt, C.D., Jr., Vecchi, M.P.: Optimization by simulated annealing. Science 220(4598), 671–680 (1983)

Holland, J.H.: Genetic algorithms. Sci. Am. 267(1), 66–73 (1992)

Luo, J., Chen, H., Xu, Y., Huang, H., Zhao, X., et al.: An improved grasshopper optimization algorithm with application to financial stress prediction. Appl. Math. Model. 64, 654–668 (2018)

Zhang, Q., Chen, H., Luo, J., Xu, Y., Wu, C., Li, C.: Chaos enhanced bacterial foraging optimization for global optimization. IEEE Access 6, 64905–64919 (2018)

Mafarja, M., Aljarah, I., Heidari, A.A., Hammouri, A.I., Faris, H., Al-Zoubi, A.M., Mirjalili, S.: Evolutionary population dynamics and grasshopper optimization approaches for feature selection problems. Knowl.-Based Syst. 145, 25–45 (2018)

Mafarja, M., Aljarah, I., Heidari, A.A., Faris, H., Fournier-Viger, P., Li, X., Mirjalili, S.: Binary dragonfly optimization for feature selection using time-varying transfer functions. Knowl.-Based Syst. 161, 185–204 (2018)

Baykasoğlu, A., Ozsoydan, F.B.: Evolutionary and population-based methods versus constructive search strategies in dynamic combinatorial optimization. Inf. Sci. 420, 159–183 (2017)

Kennedy, J., Eberhart, R.: Particle swarm optimization. In: Proceedings of ICNN’95-international conference on neural networks, vol. 4, pp. 1942–1948. IEEE (1995)

Dorigo, M., Birattari, M., Stützle, T.: Ant colony optimization. IEEE Comput. Intell. Mag. 1(4), 28–39 (2006)

Zamani, H., Nadimi-Shahraki, M.H., Gandomi, A.H.: QANA: quantum-based avian navigation optimizer algorithm. Eng. Appl. Artif. Intell. 104, 104314 (2021)

Gandomi, A.H., Yang, X.-S., Alavi, A.H.: Cuckoo search algorithm: a metaheuristic approach to solve structural optimization problems. Eng. Comput. 29, 17–35 (2013)

Nama, S.: A modification of I-SOS: performance analysis to large scale functions. Appl. Intell. 51(11), 7881–7902 (2021)

Saha, A., Nama, S., Ghosh, S.: Application of HSOS algorithm on pseudo-dynamic bearing capacity of shallow strip footing along with numerical analysis. Int. J. Geotech. Eng. (2019). https://doi.org/10.1080/19386362.2019.1598015

Sharma, S., Chakraborty, S., Saha, A.K., et al.: A modified butterfly optimization algorithm with Lagrange interpolation for global optimization. J. Bionic Eng. 19(4), 1161–1176 (2022)

Karaboga, D., Gorkemli, B., Ozturk, C., Karaboga, N.: A comprehensive survey: artificial bee colony (ABC) algorithm and applications. Artif. Intell. Rev. 42, 21–57 (2014)

Nama, S.: A novel improved SMA with quasi reflection operator: performance analysis, application to the image segmentation problem of COVID-19 chest X-ray images. Appl. Soft Comput. 118, 108483 (2022)

Hashim, F.A., Hussain, K., Houssein, E.H., Mabrouk, M.S., Al-Atabany, W.: Honey badger algorithm: new metaheuristic algorithm for solving optimization problems. Math. Comput. Simul. 192, 84–110 (2022)

Koza, J.R.: Genetic Programming II: Automatic Discovery of Reusable Programs. MIT Press, Cambridge (1994)

Price, K.V.: Differential evolution. In: Zelinka, I., Snášel, V., Abraham, A. (eds.) Handbook of Optimization: From Classical to Modern Approach, pp. 187–214. Springer, Cham (2013)

Zamani, H., Nadimi-Shahraki, M.H., Gandomi, A.H.: Starling murmuration optimizer: a novel bio-inspired algorithm for global and engineering optimization. Comput. Methods Appl. Mech. Eng. 392, 114616 (2022)

Sette, S., Boullart, L.: Genetic programming: principles and applications. Eng. Appl. Artif. Intell. 14(6), 727–736 (2001)

Nama, S., Saha, A.K.: A bio-inspired multi-population-based adaptive backtracking search algorithm. Cogn. Comput. 14(2), 900–925 (2022)

Simon, D.: Biogeography-based optimization. IEEE Trans. Evol. Comput. 12(6), 702–713 (2008)

Laguna, M.: Tabu search. In: Martí, R., Pardalos, P.M., Resende, M.G. (eds.) Handbook of Heuristics, pp. 741–758. Springer, Cham (2018)

Zou, F., Chen, D., Xu, Q.: A survey of teaching-learning-based optimization. Neurocomputing 335, 366–383 (2019)

Rashedi, E., Nezamabadi-Pour, H., Saryazdi, S.: GSA: a gravitational search algorithm. Inf. Sci. 179(13), 2232–2248 (2009)

Erol, O.K., Eksin, I.: A new optimization method: big bang-big crunch. Adv. Eng. Softw. 37(2), 106–111 (2006)

Abdel-Basset, M., El-Shahat, D., Jameel, M., Abouhawwash, M.: Exponential distribution optimizer (EDO): a novel math-inspired algorithm for global optimization and engineering problems. Artif. Intell. Rev. 56, 1–72 (2023)

Siddique, N., Adeli, H.: Nature-inspired chemical reaction optimisation algorithms. Cogn. Comput. 9, 411–422 (2017)

Askari, Q., Younas, I., Saeed, M.: Political optimizer: a novel socio-inspired meta-heuristic for global optimization. Knowl.-Based Syst. 195, 105709 (2020)

Huang, D., Yang, J., Xiang, D., Xu, G.: Gaussian swarm optimization: a math-inspired metaheuristic algorithm for solving optimization problems. Available at SSRN 4313360 (2022)

Mirjalili, S.: SCA: a sine cosine algorithm for solving optimization problems. Knowl.-Based Syst. 96, 120–133 (2016)

Houssein, E.H., Saad, M.R., Hashim, F.A., Shaban, H., Hassaballah, M.: Lévy flight distribution: a new metaheuristic algorithm for solving engineering optimization problems. Eng. Appl. Artif. Intell. 94, 103731 (2020)

Hashim, F.A., Hussain, K., Houssein, E.H., Mabrouk, M.S., Al-Atabany, W.: Archimedes optimization algorithm: a new metaheuristic algorithm for solving optimization problems. Appl. Intell. 51, 1–21 (2020)

Salcedo-Sanz, S.: Modern meta-heuristics based on nonlinear physics processes: a review of models and design procedures. Phys. Rep. 655, 1–70 (2016)

Heidari, A.A., Mirjalili, S., Faris, H., Aljarah, I., Mafarja, M., Chen, H.: Harris hawks optimization: algorithm and applications. Future Gener. Comput. Syst. 97, 849–872 (2019)

Wolpert, D.H., Macready, W.G.: No free lunch theorems for optimization. IEEE Trans. Evol. Comput. 1(1), 67–82 (1997)

Shi, L., Luo, J.: Evaluation of axis straightness error in the machining of hole and shaft parts based on improved exponential distribution optimizer. Proc. Inst. Mech. Eng. B (2024). https://doi.org/10.1177/09544054231224